Training a custom model with Caffe

1. Prepare the dataset

The images fall into 5 classes:

animal

flowers

plane

house

guitars

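Download the dataset and unzip it so that each class has its own sub-folder (the scripts below read them from H:/database/).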
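Before splitting, it helps to confirm that every class folder exists and holds enough images. A disjoint split of 500 training and 300 test images needs at least 800 images per class.

The sketch below assumes the one-folder-per-class layout under H:/database/. `count_images` is a hypothetical helper, and the list of image extensions is an assumption.

```python
import os

# Assumed set of image extensions; extend it if your dataset uses others
IMAGE_EXTS = ('.jpg', '.jpeg', '.png', '.bmp')

def count_images(database_path, classes):
    """Return {class_name: number of image files}; a missing folder counts as 0."""
    counts = {}
    for cls in classes:
        folder = os.path.join(database_path, cls)
        if not os.path.isdir(folder):
            counts[cls] = 0
            continue
        counts[cls] = sum(1 for name in os.listdir(folder)
                          if name.lower().endswith(IMAGE_EXTS))
    return counts

if __name__ == '__main__':
    classes = ['animal', 'flower', 'guitar', 'house', 'plane']
    for cls, n in count_images('H:/database/', classes).items():
        # 500 training + 300 test images per class, without overlap
        print('%-8s %5d  %s' % (cls, n, 'ok' if n >= 800 else 'too few'))
```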

2. Split the dataset into training and test sets

# coding:utf-8
import os
import shutil

'''
Split the dataset (per class):
test set:     300 images
training set: 500 images
'''

root_caffe = 'D:/Development/caffe/caffe-master/caffe-master/'
splits = ['train', 'test']
# Class folder names; they must match the sub-folder names under database_path
data_type = ['animal', 'flower', 'guitar', 'house', 'plane']
# Output folder for the split data, relative to the Caffe root
model_dir = 'models/my_models_recognition/data/'
# Where the downloaded dataset is stored
database_path = 'H:/database/'

path = root_caffe + model_dir
for split in splits:
    for cls in data_type:
        dst_dir = path + split + '/' + cls
        if not os.path.exists(dst_dir):
            os.makedirs(dst_dir)  # also creates the parent folders
        src_dir = database_path + cls
        # Sort so the split is reproducible (directory listing order is not guaranteed)
        files = sorted(os.listdir(src_dir))
        print('%s: %d images' % (cls, len(files)))
        # Training set: the first 500 images; test set: the next 300,
        # so no image is in both sets (needs at least 800 images per class)
        if split == 'train':
            selected = files[0:500]
        else:
            selected = files[500:800]
        for name in selected:
            shutil.copyfile(src_dir + '/' + name,   # original image path
                            dst_dir + '/' + name)   # destination in the Caffe model folder

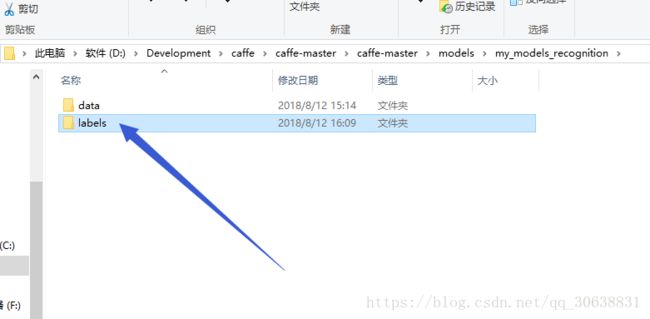
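Both splits are copied from the same source folders. A quick check that no file name appears in both train/ and test/ of a class therefore guards against evaluating on training images.

This is a sketch that assumes the data/train/<class> and data/test/<class> layout created above. `find_overlap` is a hypothetical helper.

```python
import os

def find_overlap(data_dir, classes):
    """Return {class_name: sorted file names present in both train/ and test/}."""
    overlap = {}
    for cls in classes:
        train = set(os.listdir(os.path.join(data_dir, 'train', cls)))
        test = set(os.listdir(os.path.join(data_dir, 'test', cls)))
        common = sorted(train & test)
        if common:  # only report classes that actually overlap
            overlap[cls] = common
    return overlap
```

An empty result means the two sets share no file names.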

3. Create the label files

# coding: utf-8
import os

# Caffe root directory
caffe_root = 'D:/Development/caffe/caffe-master/caffe-master/'
data_dir = caffe_root + 'models/my_models_recognition/data/'
label_dir = caffe_root + 'models/my_models_recognition/labels/'

def write_list(split):
    """Write one '<class folder>/<file name> <label>' line per image in data/<split>/."""
    split_dir = data_dir + split + '/'
    # Sort the class folders so train and test get the same label numbering
    classes = sorted(d for d in os.listdir(split_dir) if os.path.isdir(split_dir + d))
    with open(label_dir + split + '.txt', 'w') as f:  # text mode: we write str, not bytes
        for label, cls in enumerate(classes):         # label = 0, 1, 2, ...
            for name in sorted(os.listdir(split_dir + cls)):
                # relative path + space + label index + newline (forward slashes also work on Windows)
                f.write(cls + '/' + name + ' ' + str(label) + '\n')

if not os.path.exists(label_dir):
    os.makedirs(label_dir)
write_list('train')   # training labels -> labels/train.txt
write_list('test')    # test labels -> labels/test.txt
print('File lists generated successfully')

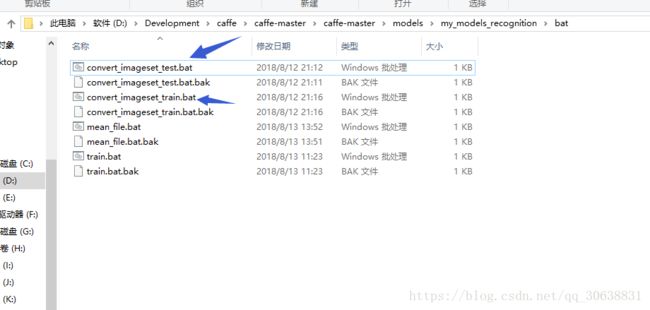
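The test script in step 8.3 reads class names from labels/label.txt, which is not generated above. Line k of that file must name the class whose label is k.

A minimal sketch that writes it, assuming labels were numbered in alphabetical order of the class folders. `write_label_names` is a hypothetical helper.

```python
import os

def write_label_names(split_dir, out_file):
    """Write the class folder names, one per line, so that line k names label k."""
    names = sorted(d for d in os.listdir(split_dir)
                   if os.path.isdir(os.path.join(split_dir, d)))  # skip plain files
    with open(out_file, 'w') as f:
        for name in names:
            f.write(name + '\n')
    return names
```

For example, pass `.../my_models_recognition/data/train/` as `split_dir` and `.../my_models_recognition/labels/label.txt` as `out_file`.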

4. Convert the images to LMDB format

convert_imageset_train.bat:

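REM convert_imageset.exe: the format-conversion executable
REM --resize_height / --resize_width: resize every image to 256 x 256
REM --shuffle: shuffle the image order
REM --backend: output format (lmdb)
REM then, in order: image root folder, label list file, LMDB output folder
REM Note: the output folder should not exist yet; create its parent lmdb\ folder first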

SET GLOG_logtostderr=1

D:\Development\caffe\caffe-master\caffe-master\Build\x64\Release\convert_imageset.exe ^

--resize_height=256 --resize_width=256 ^

--shuffle ^

--backend="lmdb" ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\data\train\ ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\labels\train.txt ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\lmdb\train\

pause

convert_imageset_test.bat:

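REM convert_imageset.exe: the format-conversion executable
REM --resize_height / --resize_width: resize every image to 256 x 256
REM --shuffle: shuffle the image order
REM --backend: output format (lmdb)
REM then, in order: image root folder, label list file, LMDB output folder
SET GLOG_logtostderr=1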

D:\Development\caffe\caffe-master\caffe-master\Build\x64\Release\convert_imageset.exe ^

--resize_height=256 --resize_width=256 ^

--shuffle ^

--backend="lmdb" ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\data\test\ ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\labels\test.txt ^

D:\Development\caffe\caffe-master\caffe-master\models\my_models_recognition\lmdb\test\

pause

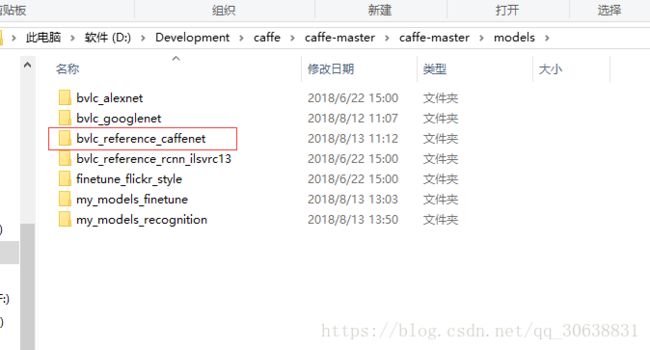
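As far as I know, convert_imageset logs an error and skips images it cannot open. A wrong path in train.txt or test.txt can therefore quietly shrink the LMDB.

The sketch below checks a list file before conversion. It assumes the "<relative path> <label>" format written in step 3, split at the last space. `validate_list` is a hypothetical helper.

```python
import os

def validate_list(list_file, root_folder, num_classes):
    """Return a list of problems found in a '<relative path> <label>' list file."""
    problems = []
    with open(list_file) as f:
        for lineno, line in enumerate(f, 1):
            line = line.strip()
            if not line:
                continue
            path, _, label = line.rpartition(' ')  # label follows the last space
            if not path:
                problems.append('line %d: missing label' % lineno)
                continue
            if not label.isdigit() or int(label) >= num_classes:
                problems.append('line %d: bad label %r' % (lineno, label))
            if not os.path.isfile(os.path.join(root_folder, path)):
                problems.append('line %d: file not found %s' % (lineno, path))
    return problems
```

For example, call `validate_list(label_dir + 'train.txt', data_dir + 'train/', 5)` with the folders from step 3. An empty list means every line looks usable.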

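5. Define the network

The network is CaffeNet, modified for this task: fc6 and fc7 are reduced to 512 outputs, and fc8 has 5 outputs, one per class.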

train_val.prototxt:

name: "CaffeNet"

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

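# transform_param {   # mean subtraction, option 1: mean image file
# mirror: true
# crop_size: 227
# mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"
# }
# mean pixel / channel-wise mean instead of mean image
transform_param {     # mean subtraction, option 2: per-channel mean values
crop_size: 227        # random 227x227 crop
mean_value: 104       # B, G, R channel means computed over a large image set (ImageNet)
mean_value: 117
mean_value: 123
mirror: true          # random horizontal flips
}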

data_param {

source: "D:/Development/caffe/caffe-master/caffe-master/models/my_models_recognition/lmdb/train"

batch_size: 50

backend: LMDB

}

}

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

# transform_param {

# mirror: false

# crop_size: 227

# mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"

# }

# mean pixel / channel-wise mean instead of mean image

transform_param {

crop_size: 227

mean_value: 104

mean_value: 117

mean_value: 123

mirror: false

}

data_param {

source: "D:/Development/caffe/caffe-master/caffe-master/models/my_models_recognition/lmdb/test"

batch_size: 50

backend: LMDB

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm1"

type: "LRN"

bottom: "pool1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "norm1"

top: "conv2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 1

}

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm2"

type: "LRN"

bottom: "pool2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "norm2"

top: "conv3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 1

}

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 1

}

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 512

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 1

}

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 512

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 1

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 5

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "fc8"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc8"

bottom: "label"

top: "loss"

}
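The mean_value lines above use ImageNet channel means. To use the means of your own data instead, average each channel over the training images.

This is a minimal NumPy sketch. It assumes the images are already loaded as HxWx3 RGB arrays (for example with PIL). It returns the values in BGR order, on the assumption that convert_imageset stored the images as OpenCV loads them, i.e. BGR. `channel_mean_bgr` is a hypothetical helper. Caffe's compute_image_mean tool is the built-in way to produce a full mean image for option 1 (mean_file).

```python
import numpy as np

def channel_mean_bgr(images):
    """Per-channel mean of HxWx3 RGB images, returned in BGR order for mean_value."""
    total = np.zeros(3, dtype=np.float64)
    pixels = 0
    for img in images:
        arr = np.asarray(img, dtype=np.float64)
        total += arr.reshape(-1, 3).sum(axis=0)  # sum R, G, B separately
        pixels += arr.shape[0] * arr.shape[1]
    if pixels == 0:
        raise ValueError('no images given')
    return (total / pixels)[::-1]  # RGB -> BGR
```

Round the three results and use them as the three mean_value lines in both data layers.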

6. Edit the solver (hyperparameter) file

solver.prototxt:

net: "D:/Development/caffe/caffe-master/caffe-master/models/my_models_recognition/train_val.prototxt"

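test_iter: 30          # test images / test batch_size = (5 x 300) / 50
test_interval: 200     # run a test pass every 200 training iterations
base_lr: 0.01
lr_policy: "step"      # multiply the learning rate by gamma every stepsize iterations
gamma: 0.1
stepsize: 1000
display: 100           # print the training loss every 100 iterations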

max_iter: 5000

momentum: 0.9

weight_decay: 0.0005

snapshot: 5000

snapshot_prefix: "D:/Development/caffe/caffe-master/caffe-master/models/my_models_recognition/model/model"   # writes model/model_iter_5000.caffemodel; create the model folder first

solver_mode: CPU

7. Write the training batch file

REM caffe.exe train: start training
REM -solver: the solver (hyperparameter) file

D:\Development\caffe\caffe-master\caffe-master\Build\x64\Release\caffe.exe train ^

-solver=D:/Development/caffe/caffe-master/caffe-master/models/my_models_recognition/solver.prototxt

pause

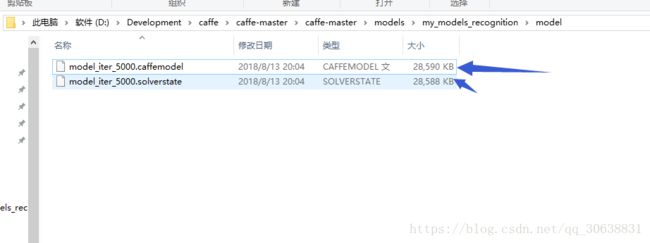

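Training runs for 5000 iterations. The training loss is printed every 100 iterations (display: 100) and the test set is evaluated every 200 (test_interval: 200). The model is saved only once, after the final iteration (snapshot: 5000).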
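Caffe writes its log to stderr, so you can keep it for later by redirecting it (for example, appending `2> train.log` to the caffe.exe line). The sketch below then extracts training loss and test accuracy from it.

The line formats matched here are assumptions, and they vary slightly between Caffe versions. `parse_log` is a hypothetical helper. Caffe's own tools/extra/parse_log.py is a more complete alternative.

```python
import re

# Assumed log line shapes:
#   "... Iteration 100, loss = 1.23"  or  "... Iteration 100 (2.5 iter/s, ...), loss = 1.23"
#   "... Iteration 200, Testing net (#0)"
#   "...     Test net output #0: accuracy = 0.62"
TRAIN_RE = re.compile(r'Iteration (\d+).*?, loss = ([-+\d.eE]+)')
TEST_ITER_RE = re.compile(r'Iteration (\d+), Testing net')
ACC_RE = re.compile(r'Test net output #\d+: accuracy = ([-+\d.eE]+)')

def parse_log(lines):
    """Return ([(iteration, train_loss)], [(iteration, test_accuracy)])."""
    train, test = [], []
    current_test_iter = None
    for line in lines:
        m = TEST_ITER_RE.search(line)
        if m:  # a test pass starts; remember its iteration
            current_test_iter = int(m.group(1))
            continue
        m = ACC_RE.search(line)
        if m and current_test_iter is not None:
            test.append((current_test_iter, float(m.group(1))))
            continue
        m = TRAIN_RE.search(line)
        if m:
            train.append((int(m.group(1)), float(m.group(2))))
    return train, test
```

Typical usage: `with open('train.log') as f: train, test = parse_log(f)`. The two lists can then be plotted with matplotlib.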

8. Test the model

8.1 Write the deploy network file (deploy.prototxt)

name: "CaffeNet"

layer {

name: "data"

type: "Input"

top: "data"

input_param { shape: { dim: 1 dim: 3 dim: 227 dim: 227 } } # first dim (batch size) changed to 1: one image at a time

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm1"

type: "LRN"

bottom: "pool1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "norm1"

top: "conv2"

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm2"

type: "LRN"

bottom: "pool2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "norm2"

top: "conv3"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

inner_product_param {

num_output: 512 # changed from CaffeNet's 4096 because of my machine's limited GPU memory / CPU

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

inner_product_param {

num_output: 512 # changed from CaffeNet's 4096 because of my machine's limited GPU memory / CPU

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

inner_product_param {

num_output: 5 # changed to the number of classes

}

}

layer {

name: "prob"

type: "Softmax"

bottom: "fc8"

top: "prob"

}
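It can help to trace the spatial size through the layers above. This shows why the input must be 227x227 and how much the smaller fc6 saves.

The sketch below uses Caffe's output-size rules: convolution rounds down, floor((size + 2·pad − kernel) / stride) + 1, and pooling rounds up, ceil(...) + 1. The layer table is copied from the deploy file; ReLU, LRN and Dropout do not change the size. The function names are hypothetical.

```python
import math

def conv_out(size, kernel, stride=1, pad=0):
    """Convolution output size (rounds down)."""
    return (size + 2 * pad - kernel) // stride + 1

def pool_out(size, kernel, stride=1, pad=0):
    """Pooling output size (Caffe rounds up)."""
    return int(math.ceil((size + 2 * pad - kernel) / float(stride))) + 1

# (name, kind, kernel, stride, pad, num_output) from deploy.prototxt
LAYERS = [
    ('conv1', 'conv', 11, 4, 0, 96),  ('pool1', 'pool', 3, 2, 0, 96),
    ('conv2', 'conv', 5, 1, 2, 256),  ('pool2', 'pool', 3, 2, 0, 256),
    ('conv3', 'conv', 3, 1, 1, 384),  ('conv4', 'conv', 3, 1, 1, 384),
    ('conv5', 'conv', 3, 1, 1, 256),  ('pool5', 'pool', 3, 2, 0, 256),
]

def walk(input_size=227):
    """Return [(layer name, channels, spatial size)] for a square input."""
    size, out = input_size, []
    for name, kind, k, s, p, channels in LAYERS:
        size = conv_out(size, k, s, p) if kind == 'conv' else pool_out(size, k, s, p)
        out.append((name, channels, size))
    return out

def fc6_inputs(input_size=227):
    """Number of values fc6 receives from pool5."""
    _, channels, size = walk(input_size)[-1]
    return channels * size * size

if __name__ == '__main__':
    for row in walk():
        print(row)
    n = fc6_inputs()
    print('fc6 weights with 512 outputs:', n * 512, 'with 4096:', n * 4096)
```

For a 227x227 input, pool5 is 256x6x6 = 9216 values. fc6 with 512 outputs therefore has about 4.7 million weights, compared with about 37.7 million for CaffeNet's original 4096.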

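8.2 Collect test images

Pick a few images of each class from the dataset, preferably ones not used for training. Put them in models/my_models_recognition/image/, the folder that the script in 8.3 reads.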
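The sketch below copies a few images per class while skipping the files used for training and testing. The default start=800 assumes the sorted 500 + 300 split from step 2; adjust it if your split differs.

`pick_samples` is a hypothetical helper. It prefixes each file with its class name so the copies stay unique.

```python
import os
import shutil

def pick_samples(database_path, classes, out_dir, start=800, per_class=3):
    """Copy per_class images of each class into out_dir, skipping the first `start` files."""
    if not os.path.isdir(out_dir):
        os.makedirs(out_dir)
    copied = []
    for cls in classes:
        files = sorted(os.listdir(os.path.join(database_path, cls)))
        for name in files[start:start + per_class]:
            dst = os.path.join(out_dir, cls + '_' + name)  # class prefix keeps names unique
            shutil.copyfile(os.path.join(database_path, cls, name), dst)
            copied.append(dst)
    return copied
```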

8.3 Write a Python test script

import os
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import caffe

# Caffe root directory; adjust to your own installation
caffe_root = 'D:/Development/caffe/caffe-master/caffe-master/'
# Network description file
deploy_file = caffe_root + 'models/my_models_recognition/deploy.prototxt'
# Trained model
model_file = caffe_root + 'models/my_models_recognition/model/model_iter_5000.caffemodel'

# GPU mode: uncomment these two lines and drop set_mode_cpu() to use GPU 0
# caffe.set_device(0)
# caffe.set_mode_gpu()
caffe.set_mode_cpu()

# Build the classifier
net = caffe.Classifier(deploy_file,                     # deploy file
                       model_file,                      # trained weights
                       mean=np.array([104, 117, 123]),  # same BGR mean_value as in train_val.prototxt
                       channel_swap=(2, 1, 0),          # load_image returns RGB; the net was trained on BGR
                       raw_scale=255,                   # load_image returns [0, 1]; the net expects [0, 255]
                       image_dims=(227, 227))           # resize inputs to 227x227

# Class-name file: one name per line, line k names label k
labels_filename = caffe_root + 'models/my_models_recognition/labels/label.txt'
labels = np.loadtxt(labels_filename, str)

# Walk the image folder and classify every image
for root, dirs, files in os.walk(caffe_root + 'models/my_models_recognition/image/'):
    for name in files:
        # Load the image and print its path
        image_path = os.path.join(root, name)
        print(image_path)
        input_image = caffe.io.load_image(image_path)

        # Show the image
        plt.imshow(Image.open(image_path))
        plt.axis('off')
        plt.show()

        # Predict the class
        prediction = net.predict([input_image])
        print('predicted class: %d' % prediction[0].argmax())

        # Print the top 5 predictions (all 5 classes here), most likely first
        top_k = prediction[0].argsort()[::-1][:5]
        for node_id in top_k:
            human_string = labels[node_id]   # class name
            score = prediction[0][node_id]   # probability of that class
            print('%s (score = %.5f)' % (human_string, score))
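Looking at a few images one by one gives only a rough impression. For a number you can compare between training runs, put the test images in per-class sub-folders and tally the predictions in a confusion matrix.

The NumPy sketch below assumes you have collected the true label (the index of the image's class in label.txt) and the predicted label (`prediction[0].argmax()` from the script above) for each image. The helper names are hypothetical.

```python
import numpy as np

def confusion_matrix(true_labels, pred_labels, num_classes):
    """cm[t, p] = number of images of class t that were predicted as class p."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(true_labels, pred_labels):
        cm[t, p] += 1
    return cm

def accuracy(cm):
    """Fraction of images on the diagonal, i.e. classified correctly."""
    return np.trace(cm) / float(cm.sum())
```

Off-diagonal entries show which classes the model confuses. For example, a large cm[0, 4] would mean many animal images are predicted as plane.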