Understanding Residual Networks + PyTorch Implementation + ResNet-34 Implementation

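Contents

- 1. Residual networks: implementation and applications

- Understanding the residual block

- Applications

- Residual block code (PyTorch implementation)

- 2. ResNet-34 in PyTorch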

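1. Residual networks: implementation and applications

There are many write-ups about residual networks on CSDN, and most of them are translated more or less directly from the paper; readers can look up the paper's own introduction to residual networks. This post covers how the residual block actually works and how it is used in image processing.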

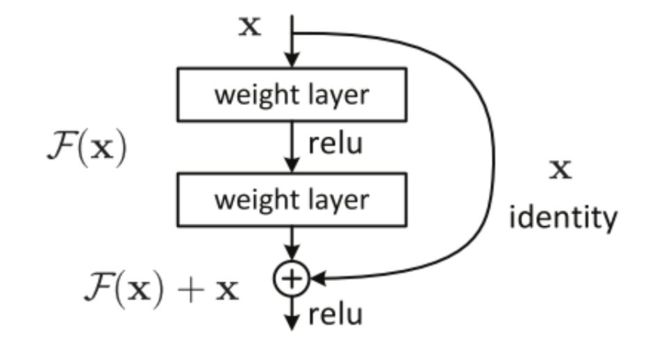

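The figure above shows the residual block structure, excerpted from the paper. To understand how the residual block is used, two concepts need to be clear: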

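1. Identity mapping:

The curved arrow in the figure is called a shortcut connection, and it performs an identity mapping. It adds neither extra parameters nor computational complexity. An identity mapping means that the output equals the input.

2. The role of F(x) in the figure:

F(x) is the branch that performs the convolutions. Its purpose is to extract more features from the image, or features that other layers have not learned.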
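The claim that the shortcut adds no parameters is easy to check. The snippet below is a minimal sketch, not code from the paper: the 16-channel `branch` stands in for F(x), and `count_params` is a helper introduced here for illustration.

```python
import torch
import torch.nn as nn

# Hypothetical residual branch F(x): two 3x3 convolutions on 16 channels.
branch = nn.Sequential(
    nn.Conv2d(16, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, 16, kernel_size=3, padding=1),
)
shortcut = nn.Identity()  # the identity shortcut: output = input


def count_params(module):
    """Total number of learnable values in a module."""
    return sum(p.numel() for p in module.parameters())


x = torch.randn(2, 16, 8, 8)
y = branch(x) + shortcut(x)  # F(x) + x

branch_params = count_params(branch)
shortcut_params = count_params(shortcut)
print(branch_params, shortcut_params)
```

All learnable parameters live in the convolutional branch; the shortcut contributes none, and the only extra work is one element-wise addition.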

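Understanding the residual block

Residual networks were proposed so that very deep networks can still be trained well: the paper targets the degradation problem, where a plain network gets worse as it gets deeper, and the shortcut also gives gradients a direct path backward, which mitigates vanishing gradients. Suppose the mapping we want is H(x). With the structure above, the block actually outputs F(x)+x, so the target the stacked layers have to learn is the residual F(x)=H(x)-x. Looked at another way: if the network is already well optimized and further convolution and pooling cannot extract anything new, then F(x)=0, the block's output equals its input, and the block reduces to an identity mapping. This is why networks built by stacking many residual blocks do not get worse as layers are added: once the earlier blocks have extracted enough features, the later blocks can fall back to identity mappings. Note that the convolutions inside F(x) are still computed on every forward pass, so the gain is in ease of optimization and accuracy, not in run time.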
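The relation between H(x), F(x), and the gradient can be written out as a short derivation. This is a sketch under a simplifying assumption: the ReLU applied after the addition is ignored. The symbols y (block output) and \mathcal{L} (loss) are introduced here and do not appear in the text above.

```latex
\begin{aligned}
y &= F(x) + x, \qquad F(x) = H(x) - x \\
\frac{\partial \mathcal{L}}{\partial x}
  &= \frac{\partial \mathcal{L}}{\partial y}\,\frac{\partial y}{\partial x}
   = \frac{\partial \mathcal{L}}{\partial y}\left(\frac{\partial F}{\partial x} + I\right)
\end{aligned}
```

Even when \partial F / \partial x is very small, the identity term I passes \partial \mathcal{L} / \partial y back unchanged, which is why the shortcut helps against vanishing gradients.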

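Optimization drives F(x)=H(x)-x toward 0 wherever the identity is the best mapping. A block whose F(x) has reached 0 simply passes its input through (identity mapping), and pushing a residual to zero is easier than fitting an identity mapping with a stack of nonlinear layers.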
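The identity-mapping case can be checked numerically. The sketch below uses a hypothetical `TinyResidual` block (not the block from the paper) and forces F(x)=0 by zeroing the last convolution of the branch. The input is non-negative, as it would be after an earlier ReLU, so the ReLU after the addition leaves it unchanged.

```python
import torch
import torch.nn as nn


class TinyResidual(nn.Module):
    """Hypothetical minimal block: output = ReLU(F(x) + x)."""

    def __init__(self, channels):
        super().__init__()
        self.f = nn.Sequential(
            nn.Conv2d(channels, channels, 3, padding=1),
            nn.ReLU(),
            nn.Conv2d(channels, channels, 3, padding=1),
        )

    def forward(self, x):
        return torch.relu(self.f(x) + x)


block = TinyResidual(8)
# Force F(x) = 0 by zeroing the weights and bias of the branch's last conv.
nn.init.zeros_(block.f[2].weight)
nn.init.zeros_(block.f[2].bias)

x = torch.rand(1, 8, 16, 16)  # values in [0, 1), i.e. non-negative
with torch.no_grad():
    y = block(x)

is_identity = torch.equal(y, x)
print(is_identity)
```

With F(x)=0 the block returns its input exactly. The two convolutions are still executed, though; they just contribute nothing to the sum.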

Applications

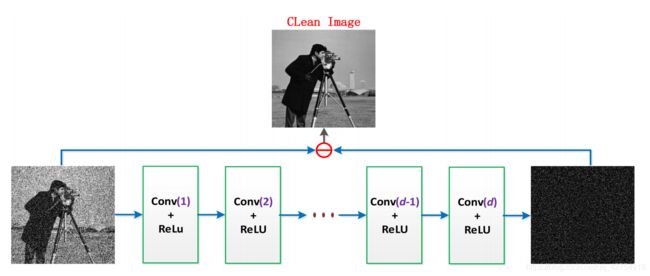

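Residual networks are widely used in image processing, for example in denoising, super-resolution, and deraining. The model below illustrates one such application.

The figure above is a denoising model built on a residual structure. When I was first learning this, I got stuck on how convolution and pooling could possibly pull the noise out of an image. Working backwards makes it clear. Let the noisy input image be I, the clean image be O, and the noise be T, so that I=O+T. The model computes O=I-T, so the only thing left for the convolutional branch to learn is the noise T. This also shows, from another angle, that a residual block learns the difference between its input and its output.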
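The I=O+T argument can be sketched in a few lines. `ResidualDenoiser` below is a hypothetical two-layer stand-in, not the model in the figure; the point is only that subtracting the branch output from the input makes the branch responsible for the noise.

```python
import torch
import torch.nn as nn


class ResidualDenoiser(nn.Module):
    """Hypothetical denoiser: the conv branch predicts T, the output is O = I - T."""

    def __init__(self, channels=1, features=16):
        super().__init__()
        self.noise_branch = nn.Sequential(
            nn.Conv2d(channels, features, 3, padding=1),
            nn.ReLU(),
            nn.Conv2d(features, channels, 3, padding=1),
        )

    def forward(self, noisy):
        predicted_noise = self.noise_branch(noisy)  # estimate of T
        return noisy - predicted_noise              # O = I - T


clean = torch.rand(4, 1, 32, 32)        # O
noise = 0.1 * torch.randn_like(clean)   # T
noisy = clean + noise                   # I = O + T

model = ResidualDenoiser()
with torch.no_grad():
    restored = model(noisy)
    # Error against the clean image vs. error of the branch against the noise.
    loss_on_image = nn.functional.mse_loss(restored, clean)
    loss_on_noise = nn.functional.mse_loss(model.noise_branch(noisy), noise)

print(loss_on_image.item(), loss_on_noise.item())
```

The two losses agree up to floating-point rounding: training the whole model to reproduce the clean image is the same as training the convolutional branch to reproduce the noise.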

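Residual block code (PyTorch implementation)

    import torch.nn as nn


    class Block(nn.Module):
        def __init__(self, Nin, out, ksize=3, stride=1):
            super().__init__()
            # Each convolution gets its own weights and its own BatchNorm;
            # a single shared conv/bn pair would only work when Nin == out.
            self.conv1 = nn.Conv2d(Nin, out, ksize, stride, padding=1)
            self.bn1 = nn.BatchNorm2d(out)
            self.conv2 = nn.Conv2d(out, out, ksize, 1, padding=1)
            self.bn2 = nn.BatchNorm2d(out)
            self.Relu = nn.ReLU()

        def forward(self, input):
            # F(x): conv -> BN -> ReLU -> conv -> BN
            x = self.conv1(input)
            x = self.bn1(x)
            x = self.Relu(x)
            x = self.conv2(x)
            x = self.bn2(x)
            # Identity shortcut: shapes only match when Nin == out and stride == 1
            x = x + input
            output = self.Relu(x)
            return output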

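2. ResNet-34 in PyTorch

Note that at the start of the second, third, and fourth stages the two sides of the skip connection have different dimensions, so the input has to go through a convolution before it can be added.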
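The shape mismatch is easy to see with a dummy tensor. This is a sketch that assumes the 64 x 56 x 56 feature map that enters the second stage for a 224 x 224 input. The names `main` and `projection` are introduced here; the projection uses a 3x3 stride-2 convolution like the `block2` branch in this post's code, whereas the paper's projection shortcut uses a 1x1 convolution.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 56, 56)  # assumed input to the first block of stage 2

# Main branch F(x): the first conv halves the resolution and doubles the channels.
main = nn.Sequential(
    nn.Conv2d(64, 128, 3, stride=2, padding=1),
    nn.BatchNorm2d(128),
    nn.ReLU(),
    nn.Conv2d(128, 128, 3, stride=1, padding=1),
    nn.BatchNorm2d(128),
)
# Projection on the shortcut so that it matches F(x) in channels and size.
projection = nn.Sequential(
    nn.Conv2d(64, 128, 3, stride=2, padding=1),
    nn.BatchNorm2d(128),
)

fx = main(x)
shortcut = projection(x)
out = torch.relu(fx + shortcut)  # fx + x would fail: the shapes differ

print(tuple(x.shape), tuple(fx.shape), tuple(shortcut.shape))
```

Here `x` is (1, 64, 56, 56) while `fx` is (1, 128, 28, 28), so the plain identity shortcut cannot be added; after the projection both sides are (1, 128, 28, 28).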

    import torch
    import torch.nn as nn


    class ResidualBlock(nn.Module):
        def __init__(self, nin, nout, size, stride=1, shortcut=True):
            super(ResidualBlock, self).__init__()
            # Main branch F(x). The order here is Conv -> ReLU -> BN;
            # the paper uses Conv -> BN -> ReLU.
            self.block1 = nn.Sequential(nn.Conv2d(nin, nout, size, stride, 1),
                                        nn.ReLU(inplace=True),
                                        nn.BatchNorm2d(nout),
                                        nn.Conv2d(nout, nout, size, 1, 1),
                                        nn.BatchNorm2d(nout))
            self.shortcut = shortcut
            # Projection branch; forward() only uses it when shortcut is False
            self.block2 = nn.Sequential(nn.Conv2d(nin, nout, size, stride, 1),
                                        nn.BatchNorm2d(nout))

        def forward(self, input):
            x = input
            out = self.block1(x)
            # If input and output dimensions match, add directly;
            # otherwise change the input's dimensions first
            if self.shortcut:
                out = x + out
            else:
                out = out + self.block2(x)
            return torch.relu(out)


    class resnet34(nn.Module):
        def __init__(self):
            super(resnet34, self).__init__()
            self.block = nn.Sequential(nn.Conv2d(3, 64, 7, 2, 3),
                                       nn.BatchNorm2d(64),
                                       nn.ReLU(),
                                       nn.MaxPool2d(3, 2, 1))
            # The stride argument is ignored for d1 because in1 == out1
            self.d1 = self.make_layer(64, 64, 3, 2, 3)
            self.d2 = self.make_layer(64, 128, 3, 2, 4)
            self.d3 = self.make_layer(128, 256, 3, 2, 6)
            self.d4 = self.make_layer(256, 512, 3, 2, 3)
            self.avgp = nn.AvgPool2d(7)
            self.exit = nn.Linear(512, 1000)

        def make_layer(self, in1, out1, ksize, stride, t):
            layers = []
            for i in range(0, t):
                if i == 0 and in1 != out1:
                    # First block of a stage: downsample and project the shortcut
                    layers.append(ResidualBlock(in1, out1, ksize, stride, False))
                else:
                    layers.append(ResidualBlock(out1, out1, ksize, 1, True))
            return nn.Sequential(*layers)

        def forward(self, input):
            x = self.block(input)    # output: 56*56*64
            x = self.d1(x)           # output: 56*56*64
            x = self.d2(x)           # stride 2 at i=0, output: 28*28*128
            x = self.d3(x)           # stride 2 at i=0, output: 14*14*256
            x = self.d4(x)           # stride 2 at i=0, output: 7*7*512
            x = self.avgp(x)         # 1*1*512
            x = torch.flatten(x, 1)  # (N, 512, 1, 1) -> (N, 512) for the linear layer
            output = self.exit(x)
            return output


    net = resnet34()
    print(net)

    D:\anaconda\envs\pytorch\python.exe D:/pycpython/model/resnet.py
    resnet34(
      (block): Sequential(
        (0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
        (3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
      )
      (d1): Sequential(
        (0): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (d2): Sequential(
        (0): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (3): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (d3): Sequential(
        (0): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (3): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (4): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (5): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (d4): Sequential(
        (0): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (block1): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): ReLU(inplace=True)
            (2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (block2): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (avgp): AvgPool2d(kernel_size=7, stride=7, padding=0)
      (exit): Linear(in_features=512, out_features=1000, bias=True)
    )
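The size comments in `forward` (56, 28, 14, 7, 1) can be reproduced with the standard output-size formula for convolution and pooling layers. The sketch below assumes a 224 x 224 input, which the post does not state but which the 56*56 after the stem implies. `conv_out` and `resnet34_sizes` are helper names introduced here; the kernel, stride, and padding values are read off the printed structure.

```python
def conv_out(size, kernel, stride, padding):
    """Output side length of a conv/pool layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet34_sizes(size=224):
    """Spatial size after each stage of the network printed above."""
    sizes = {}
    size = conv_out(size, 7, 2, 3)      # stem conv: 7x7, stride 2, padding 3
    size = conv_out(size, 3, 2, 1)      # max pool: 3x3, stride 2, padding 1
    sizes["block"] = size
    sizes["d1"] = size                  # d1 only has stride-1 3x3 convs
    for name in ("d2", "d3", "d4"):
        size = conv_out(size, 3, 2, 1)  # first block of the stage: stride 2
        sizes[name] = size
    sizes["avgp"] = conv_out(size, 7, 7, 0)  # AvgPool2d(7): kernel 7, stride 7
    return sizes


sizes = resnet34_sizes()
print(sizes)
```

For a 224 x 224 input this gives 56 after the stem and d1, then 28, 14, and 7 after d2, d3, and d4, and 1 after the average pool, matching the comments in `forward`.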