Keras 官方example理解之--pretrained_word_embeddings.py

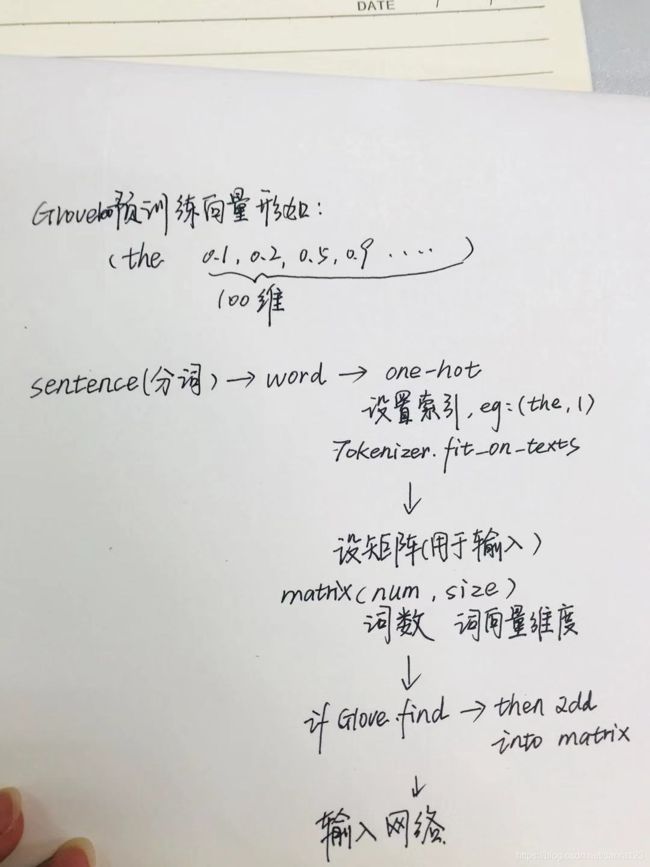

对Embedding一直有点迷迷糊糊的,今天看了关于Embedding层的介绍,大概就是将one-hot转换成紧凑的向量表示吧。下面是理解了下官方pretrained_word_embeddings.py(github)案例后自己的注释和理解,主要是利用 Glove6B 中与训练的词向量对 20_newsgroup 新闻数据进行分类。

1. 预训练向量:

将Glove6B中的100维预训练好的向量取出来,存到参数coefs中。其中Glove6B中每行数据格式为 单词 -0.03814 0.76897 ...(数字共100列)。

BASE_DIR = '../data/'

GLOVE_DIR = os.path.join(BASE_DIR, 'glove.6B')

TEXT_DATA_DIR = os.path.join(BASE_DIR, '20_newsgroup')

MAX_SEQUENCE_LENGTH = 1000

MAX_NUM_WORDS = 20000

EMBEDDING_DIM = 100

VALIDATION_SPLIT = 0.2

# first , build index mapping words in the embedding set

# to theis embedding vector

print ('Indexing word vectors ')

embeddings_index= {}

'''

glove6B中是预先训练好的词向量,.100d是100维词向量,每一行有101个

第一个是英文单词,后面的[1:] 是向量表示

embedding_index 中word是要转换成词向量的词,coefs 是对应的向量

'''

with open(os.path.join(GLOVE_DIR,'glove.6B.100d.txt')) as f:

for line in f:

values = line.split()

word = values[0]

coefs = np.asarray(values[1:],dtype='float32')

embeddings_index[word] = coefs

print('Found %s word vectors.'%len(embeddings_index))

输出:

Indexing word vectors

Found 400000 word vectors.

2.文章处理:

这一段主要是对新闻数据的处理,labels中存放每篇文章的分类(20_newsgroup中每个分类一个文件夹,每个文件夹中存放多篇二进制文章)

print('Processing text dataset\n')

texts = [] #list of text samples

labels_index = {} # dictionary mapping label name to numeric id

labels = [] # list of label ids

# 处理20个新闻文本,获取不同类别的新闻以及对应的类别标签

# 将所有的新闻样本转化为词索引序列,所谓词索引序列就是为每一个词依次分配一个

#整数ID,遍历所有的新闻文本,只保留最常见的20k个词,而且每个新闻文本最多保留1000个词

# name 是10个文件夹的名称(10种新闻)

for name in sorted(os.listdir(TEXT_DATA_DIR)):

path = os.path.join(TEXT_DATA_DIR,name)

# 如果里面还是目录

if os.path.isdir(path):

label_id = len(labels_index)

# 20个新闻名字索引到一个id

labels_index[name] = label_id

print(name,' ',label_id)

for fname in sorted(os.listdir(path)):

if fname.isdigit():

fpath = os.path.join(path,fname)

args = {} if sys.version_info<(3,) else {'encoding':

'latin-1'}

with open(fpath,**args) as f:

t = f.read()

i = t.find('\n\n') # skip header

if 0< i :

t = t[i:]

# 去掉头部文章来源后,将文章放入到texts中

texts.append(t)

# texts append 一篇文章,labels中就append一个文章的分类标识符

labels.append(label_id)

print('Found %s texts. '%len(texts))

# 8179个文档,labels 是每篇文章对应的种类,作为分类的监督y_label值

print('Found %s labels'%len(labels))

print('Found labels.shape%s'%(len(labels)))

print('labels',labels)

输出:

Processing text dataset

alt.atheism 0

comp.graphics 1

comp.os.ms-windows.misc 2

comp.sys.ibm.pc.hardware 3

comp.sys.mac.hardware 4

comp.windows.x 5

misc.forsale 6

rec.autos 7

rec.motorcycles 8

Found 8179 texts.

Found 8179 labels

Found labels.shape8179

labels [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0 # 这里没有复制完,

# 就是8179篇文章,每个对应的label

3. 数据集划分及预训练向量矩阵生成

indices 的输出有点奇怪,就看了好久。或许Jupyter notebook 在array 特别长的时候中间会用省略号代替吗?不确定这点。

# text是一篇文章,texts 是文章的总和,进行分词并对分词后的词 设置索引

# finally, vectorize the text samples into a 2D integer tensor

tokenizer = Tokenizer(num_words=MAX_NUM_WORDS)

tokenizer.fit_on_texts(texts)

sequences = tokenizer.texts_to_sequences(texts)

word_index = tokenizer.word_index

# print('sequences:',sequences)

# print('word_index:',word_index[0])

print('Found %s unique tokens.' % len(word_index))

data = pad_sequences(sequences, maxlen=MAX_SEQUENCE_LENGTH)

labels = to_categorical(np.asarray(labels))

print('Shape of data tensor:', data.shape)

print('Shape of label tensor:', labels.shape)

# split the data into a training set and a validation set

# 看了一个小时终于看懂了,这里就是得出data(shape=(8179,1000))的数据量即8179,随机打乱一下然后将20%的用于测试,剩下的用于训练

# data = data[indices]中indices是一个打乱的数组,将它作为下标,再重新复制给data即将data打乱了。

'''

import numpy as np

indices = np.arange(4)

print(indices)

np.random.shuffle(indices)

print(indices)

data = np.asarray([10,2,3,4,5,6,4])

data = data[indices]

# data 取前四个值呀

print(data)

>>>

[0 1 2 3]

[1 0 3 2]

[ 2 10 4 3]

'''

indices = np.arange(data.shape[0])

np.random.shuffle(indices)

data = data[indices]

labels = labels[indices]

num_validation_samples = int(VALIDATION_SPLIT * data.shape[0])

print('\n\n -')

print('indices.shape:',indices.shape)

print('indices:',indices)

print(len(indices))

print('data.shape[0]:',data.shape[0])

print('data.shape',data.shape)

print('data.type',type(data))

x_train = data[:-num_validation_samples]

y_train = labels[:-num_validation_samples]

x_val = data[-num_validation_samples:]

y_val = labels[-num_validation_samples:]

print('Preparing embedding matrix.')

# 初始化一个 N*din的矩阵,其中N是单词的个数,dim是词向量的维度

# prepare embedding matrix

num_words = min(MAX_NUM_WORDS, len(word_index)) + 1

embedding_matrix = np.zeros((num_words, EMBEDDING_DIM))

# 返回的word是词?i是词的索引?

for word, i in word_index.items():

if i <10:

print('word and i: %s,%s'%(word,i))

if i > MAX_NUM_WORDS:

continue

embedding_vector = embeddings_index.get(word)

if embedding_vector is not None:

# words not found in embedding index will be all-zeros.

embedding_matrix[i] = embedding_vector

输出:

Indexing word vectors.

Found 400000 word vectors.

Processing text dataset

label_index {'alt.atheism': 0, 'comp.graphics': 1, 'comp.os.ms-windows.misc': 2, 'comp.sys.ibm.pc.hardware': 3, 'comp.sys.mac.hardware': 4, 'comp.windows.x': 5, 'misc.forsale': 6, 'rec.autos': 7, 'rec.motorcycles': 8}

Found 8179 texts.

Found 113142 unique tokens.

Shape of data tensor: (8179, 1000)

Shape of label tensor: (8179, 9)

-

indices.shape: (8179,)

indices: [3114 1984 4873 ..., 3182 2946 5193]

8179

data.shape[0]: 8179

data.shape (8179, 1000)

data.type

Preparing embedding matrix.

word and i: the,1

word and i: 'ax,2

word and i: a,3

word and i: to,4

word and i: i,5

word and i: and,6

word and i: of,7

word and i: is,8

word and i: in,9

4. 模型搭建及训练预测

注意这里trainable=False设为False,后续训练中不再变化。试了几次,准确率在55-65之间呢。

# load pre-trained word embeddings into an Embedding layer

# note that we set trainable = False so as to keep the embeddings fixed

embedding_layer = Embedding(num_words,

EMBEDDING_DIM,

embeddings_initializer=Constant(embedding_matrix),

input_length=MAX_SEQUENCE_LENGTH,

trainable=False)

print('Training model.')

# train a 1D convnet with global maxpooling

sequence_input = Input(shape=(MAX_SEQUENCE_LENGTH,), dtype='int32')

embedded_sequences = embedding_layer(sequence_input)

x = Conv1D(128, 5, activation='relu')(embedded_sequences)

x = MaxPooling1D(5)(x)

x = Conv1D(128, 5, activation='relu')(x)

x = MaxPooling1D(5)(x)

x = Conv1D(128, 5, activation='relu')(x)

x = GlobalMaxPooling1D()(x)

x = Dense(128, activation='relu')(x)

preds = Dense(len(labels_index), activation='softmax')(x)

model = Model(sequence_input, preds)

model.compile(loss='categorical_crossentropy',

optimizer='rmsprop',

metrics=['acc'])

model.fit(x_train, y_train,

batch_size=128,

epochs=10,

validation_data=(x_val, y_val))

输出:

Training model.

Train on 6544 samples, validate on 1635 samples

Epoch 1/10

6544/6544 [==============================] - 29s - loss: 2.0645 - acc: 0.1910 - val_loss: 1.9363 - val_acc: 0.2483

Epoch 2/10

6544/6544 [==============================] - 28s - loss: 1.7428 - acc: 0.3070 - val_loss: 1.6016 - val_acc: 0.3621

Epoch 3/10

6544/6544 [==============================] - 28s - loss: 1.5417 - acc: 0.4031 - val_loss: 2.2646 - val_acc: 0.3021

Epoch 4/10

6544/6544 [==============================] - 28s - loss: 1.3385 - acc: 0.4829 - val_loss: 1.2003 - val_acc: 0.5034

Epoch 5/10

6544/6544 [==============================] - 28s - loss: 1.1699 - acc: 0.5504 - val_loss: 1.1473 - val_acc: 0.5584

Epoch 6/10

6544/6544 [==============================] - 28s - loss: 1.0528 - acc: 0.6009 - val_loss: 1.0102 - val_acc: 0.6092

Epoch 7/10

6544/6544 [==============================] - 28s - loss: 0.9276 - acc: 0.6525 - val_loss: 1.6099 - val_acc: 0.4795

Epoch 8/10

6544/6544 [==============================] - 28s - loss: 0.8312 - acc: 0.6881 - val_loss: 0.9994 - val_acc: 0.6379

Epoch 9/10

6544/6544 [==============================] - 28s - loss: 0.7619 - acc: 0.7175 - val_loss: 1.0713 - val_acc: 0.6287

Epoch 10/10

6544/6544 [==============================] - 28s - loss: 0.6822 - acc: 0.7479 - val_loss: 1.5020 - val_acc: 0.5144

参考:

- Keras中Embedding层介绍:

https://blog.csdn.net/jiangpeng59/article/details/77533309 - 在keras中使用Glove预训练的词向量:https://github.com/MoyanZitto/keras-cn/blob/master/docs/legacy/blog/word_embedding.md