Kubernetes: deploying a single-master cluster with kubeadm


- 1. Getting to know Kubernetes

- 2. Cleaning up the previous docker-swarm lab

- 3. Deploying Kubernetes

- 3.1 Preparation

- 3.2 Creating a single-master cluster with kubeadm

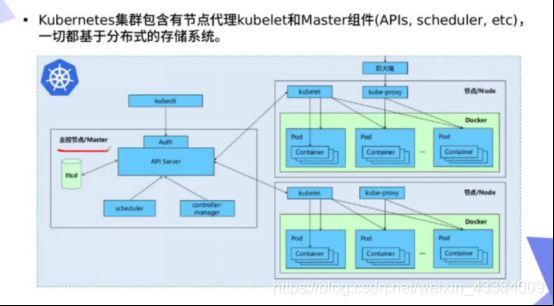

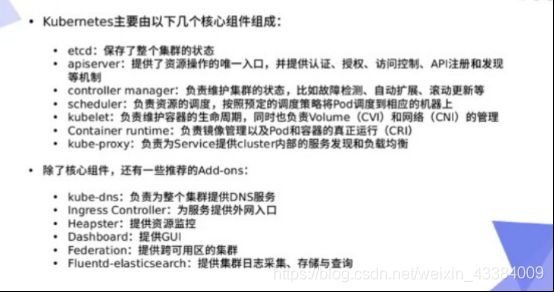

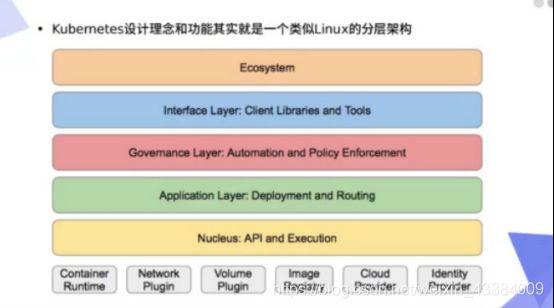

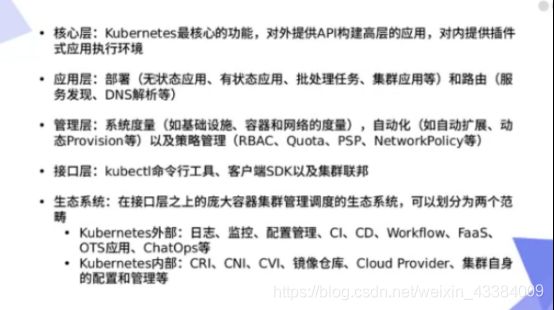

1. Getting to know Kubernetes

2. Cleaning up the previous docker-swarm lab

Take the nodes used in the docker-swarm lab out of the swarm:

[root@server1 ~]#docker node ls

ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
434iwcmh9gsil4bs3rjm0xtnq *   server1    Ready    Active         Leader           19.03.8
6lb9ftc8qm2mntup69ix8jcno     server2    Ready    Active                          19.03.8
tkm4di4em3degw6muvq46jmi2     server3    Ready    Active                          19.03.8

[root@server2 ~]# docker swarm leave

[root@server3 ~]# docker swarm leave

[root@server1 ~]#docker node ls

ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
434iwcmh9gsil4bs3rjm0xtnq *   server1    Ready    Active         Leader           19.03.8
6lb9ftc8qm2mntup69ix8jcno     server2    Down     Active                          19.03.8
tkm4di4em3degw6muvq46jmi2     server3    Down     Active                          19.03.8

Finally, remove the manager node (server1). It is the last manager, so it needs --force:

[root@server1 ~]#docker swarm leave --force

Node left the swarm.

3. Deploying Kubernetes

server1: private registry (reg.westos.org)

server2, server3, server4: the Kubernetes cluster

3.1 Preparation

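- Time synchronization

Option 1:

yum install -y ntpdate

ntpdate time.windows.com

Option 2:

Sync the physical host (foundation60) with Aliyun's NTP server, and allow the lab network 172.25.0.0/16 to use the host as its time source. Add these lines to /etc/chrony.conf:

[root@foundation60 images]# vim /etc/chrony.conf

server ntp1.aliyun.com iburst

allow 172.25/16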

[root@foundation60 images]# systemctl start chronyd

[root@foundation60 images]# chronyc sources -v

210 Number of sources = 1

  .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
 / .- Source state '*' = current synced, '+' = combined , '-' = not combined,
| /   '?' = unreachable, 'x' = time may be in error, '~' = time too variable.
||                                                 .- xxxx [ yyyy ] +/- zzzz
||      Reachability register (octal) -.           |  xxxx = adjusted offset,
||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
||                                \     |          |  zzzz = estimated error.
||                                 |    |           \
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* 120.25.115.20                 2   6    17     0  -6660us[-2489us] +/-   32ms

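[root@foundation60 images]# firewall-config   # allow the ntp service through the firewall (command-line equivalent: firewall-cmd --permanent --add-service=ntp; firewall-cmd --reload)

Sync server2, server3 and server4 with the physical host (run the same steps on all three):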

[root@server4 ~]# cat /etc/hosts

172.25.60.250 foundation60.ilt.example.com

[root@server4 ~]# yum install chrony -y

[root@server4 ~]# vim /etc/chrony.conf

server 172.25.60.250 iburst

[root@server4 ~]# systemctl start chronyd

[root@server4 ~]# systemctl enable chronyd

[root@server4 ~]# chronyc sources -v

210 Number of sources = 1

  .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
 / .- Source state '*' = current synced, '+' = combined , '-' = not combined,
| /   '?' = unreachable, 'x' = time may be in error, '~' = time too variable.
||                                                 .- xxxx [ yyyy ] +/- zzzz
||      Reachability register (octal) -.           |  xxxx = adjusted offset,
||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
||                                \     |          |  zzzz = estimated error.
||                                 |    |           \
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* foundation60.ilt.example>     3   6    17    27   +120ns[  +43us] +/-   37ms
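To check synchronization from a script instead of reading the table by eye, you can test for the `^*` marker, which the legend above defines as the currently synced server. Below is a minimal bash sketch. It assumes chronyc is installed on the node, and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: decide from `chronyc sources` output whether chronyd
# is synchronised, i.e. whether some line starts with "^*".

# Reads `chronyc sources` output on stdin; exits 0 if a synced server is listed.
chrony_synced() {
  grep -q '^\^\*'
}

# Polls chronyc up to N times (default 30), 2 seconds apart.
wait_for_chrony() {
  local tries=${1:-30}
  while [ "$tries" -gt 0 ]; do
    if chronyc sources 2>/dev/null | chrony_synced; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  return 1
}

# Example, on each of server2, server3 and server4:
#   wait_for_chrony && echo "time is synchronised"
```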

- Add name resolution for the private registry on server2, server3 and server4 (run on all three):

[root@server4 ~]# cat /etc/hosts

172.25.60.1 server1 reg.westos.org

172.25.60.2 server2

172.25.60.3 server3

172.25.60.4 server4

172.25.60.5 server5

172.25.60.250 foundation60.ilt.example.com
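The same entries go onto three nodes, so an idempotent helper keeps a repeated run from adding duplicate lines. Here is a sketch using a hypothetical `add_host_entry` function with the IPs and names listed above. It matches on the exact first field rather than a regex:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME..." to a hosts file unless that IP
# already has an entry, so re-running the step does not duplicate lines.
add_host_entry() {
  local file=$1 ip=$2
  shift 2
  # Exact match on the first field, so 172.25.60.1 does not match 172.25.60.10.
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$*" >> "$file"
}

# Example (as root on each node):
#   add_host_entry /etc/hosts 172.25.60.1 server1 reg.westos.org
#   add_host_entry /etc/hosts 172.25.60.2 server2
```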

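3.2 Creating a single-master cluster with kubeadm

- Host requirements

At least 2 GB of RAM per machine

At least 2 CPUs on the master node

Roles: server2 = master, server3 = node1, server4 = node2

- Make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration (on server2, server3 and server4):

[root@server4 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf
> net.bridge.bridge-nf-call-ip6tables = 1
> net.bridge.bridge-nf-call-iptables = 1
> EOF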

[root@server4 ~]# sysctl --system
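kubeadm's preflight checks expect /proc/sys/net/bridge/bridge-nf-call-iptables to be 1. The net.bridge.* keys only exist once the br_netfilter kernel module is loaded. The sketch below writes the sysctl file and also a modules-load.d entry, so the setting should survive a reboot. The function name and the modules-load.d file name are assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist the bridge netfilter settings kubeadm checks.
# The net.bridge.* keys only exist once br_netfilter is loaded, so the
# module is also listed in modules-load.d to be loaded at boot.
write_k8s_sysctl() {
  local sysctl_dir=${1:-/etc/sysctl.d}
  local modules_dir=${2:-/etc/modules-load.d}
  mkdir -p "$sysctl_dir" "$modules_dir" || return 1
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  echo br_netfilter > "$modules_dir/br_netfilter.conf"
}

# Example (as root on each node):
#   write_k8s_sysctl && modprobe br_netfilter && sysctl --system
```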

- Install Docker (on server2, server3 and server4):

# a docker-ce yum repository is served from 172.25.60.250

[root@server4 yum.repos.d]# cat docker-ce.repo

[docker-ce]

name=docker-ce

baseurl=http://172.25.60.250/software

gpgcheck=0

[root@server4 yum.repos.d]# yum install docker-ce

[root@server4 yum.repos.d]# systemctl start docker

[root@server4 ~]# systemctl enable --now docker

- Install the Kubernetes packages from the Aliyun mirror:

https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.3e221b11jhg51C

Add the Kubernetes yum repository (on server2, server3 and server4):

[root@server2 ~]# cd /etc/yum.repos.d/

[root@server2 yum.repos.d]# cat k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@server2 ~]# yum install -y kubelet kubeadm kubectl
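The repository file must be identical on three nodes, so generating it is less error-prone than editing it by hand on each one. The sketch below uses a hypothetical function name. Pinning the packages to 1.18.1, the version used throughout this walkthrough, is a suggestion the original steps did not make:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Aliyun Kubernetes yum repository file.
write_k8s_repo() {
  local path=${1:-/etc/yum.repos.d/k8s.repo}
  cat > "$path" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}

# Example (as root on each node). Pinning the versions keeps every node on
# the same release as the v1.18.1 images used later:
#   write_k8s_repo
#   yum install -y kubelet-1.18.1 kubeadm-1.18.1 kubectl-1.18.1
```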

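kubelet is the node agent that starts and supervises pods, kubeadm bootstraps the cluster, and kubectl is the command-line client that calls the API server.

- Set up passwordless SSH from server2 to the other nodes: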

[root@server2 yum.repos.d]# ssh-keygen

[root@server2 yum.repos.d]# ssh-copy-id server3

[root@server2 yum.repos.d]# ssh-copy-id server4
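Several of the following steps must be run identically on server2, server3 and server4. Once the keys are in place, a small loop on server2 can run them on all three. The sketch below uses a hypothetical `on_nodes` helper. It assumes root SSH access to all three nodes, so you would also run ssh-copy-id server2 to let server2 reach itself:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the same command on every cluster node over SSH.
# NODES and SSH can be overridden, e.g. SSH=echo to print instead of run.
NODES=${NODES:-"server2 server3 server4"}
SSH=${SSH:-ssh}

on_nodes() {
  local node
  # $NODES is left unquoted on purpose so it splits into one name per word.
  for node in $NODES; do
    echo "== $node"
    "$SSH" "root@$node" "$@" || return 1
  done
}

# Example:
#   on_nodes systemctl enable --now docker
```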

- Switch Docker's cgroup driver to systemd (on server2, server3 and server4):

[root@server2 docker]# pwd

/etc/docker

[root@server2 docker]# cat daemon.json

{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

[root@server2 docker]# systemctl daemon-reload

[root@server2 docker]# systemctl restart docker   # the restart fails

Job for docker.service failed because the control process exited with error code. See "systemctl status docker.service" and "journalctl -xe" for details.

# troubleshooting

[root@server2 docker]# systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/docker.service.d

└─10-machine.conf

Active: failed (Result: start-limit) since Fri 2020-04-17 17:57:30 CST; 19s ago

Docs: https://docs.docker.com

Process: 13306 ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --storage-driver overlay2 --tlsverify --tlscacert /etc/docker/ca.pem --tlscert /etc/docker/server.pem --tlskey /etc/docker/server-key.pem --label provider=generic (code=exited, status=1/FAILURE)

Main PID: 13306 (code=exited, status=1/FAILURE)

Apr 17 17:57:28 server2 systemd[1]: docker.service: main process exited, code=exited, status=1/FAILURE

Apr 17 17:57:28 server2 systemd[1]: Failed to start Docker Application Container Engine.

Apr 17 17:57:28 server2 systemd[1]: Unit docker.service entered failed state.

Apr 17 17:57:28 server2 systemd[1]: docker.service failed.

Apr 17 17:57:30 server2 systemd[1]: docker.service holdoff time over, scheduling restart.

Apr 17 17:57:30 server2 systemd[1]: Stopped Docker Application Container Engine.

Apr 17 17:57:30 server2 systemd[1]: start request repeated too quickly for docker.service

Apr 17 17:57:30 server2 systemd[1]: Failed to start Docker Application Container Engine.

Apr 17 17:57:30 server2 systemd[1]: Unit docker.service entered failed state.

Apr 17 17:57:30 server2 systemd[1]: docker.service failed.

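The Drop-In line points to the cause. 10-machine.conf, created earlier by docker-machine, starts dockerd with --storage-driver overlay2, while daemon.json also sets "storage-driver". dockerd refuses to start when the same directive is given both as a command-line flag and in daemon.json. Delete the drop-in:

[root@server2 docker]# cd /etc/systemd/system/docker.service.d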

[root@server2 docker.service.d]# ls

10-machine.conf

[root@server2 docker.service.d]# rm -f 10-machine.conf

[root@server2 docker.service.d]# cd

server3 was set up the same way earlier, so delete its 10-machine.conf too:

[root@server3 yum.repos.d]# cd /etc/systemd/system/docker.service.d

[root@server3 docker.service.d]# ls

10-machine.conf

[root@server3 docker.service.d]# rm -fr 10-machine.conf
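To catch the same conflict on other nodes before restarting Docker, you can scan the drop-in directory for --storage-driver whenever daemon.json also sets storage-driver. The sketch below covers only this one directive. The function name is hypothetical, and the default paths are the ones used above:

```shell
#!/usr/bin/env bash
# Hypothetical check: list systemd drop-ins that pass --storage-driver to
# dockerd while daemon.json also sets "storage-driver". dockerd refuses to
# start when one directive is given both as a flag and in daemon.json.
# Prints the offending files and returns 0 if a conflict exists.
find_storage_driver_conflict() {
  local daemon_json=${1:-/etc/docker/daemon.json}
  local dropin_dir=${2:-/etc/systemd/system/docker.service.d}
  grep -q '"storage-driver"' "$daemon_json" 2>/dev/null || return 1
  # -l prints matching file names; -e lets the pattern start with "--".
  grep -l -e '--storage-driver' "$dropin_dir"/*.conf 2>/dev/null
}

# Example:
#   if find_storage_driver_conflict; then echo "remove or edit the files above"; fi
```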

# now the restart succeeds

[root@server2 ~]# systemctl daemon-reload

[root@server2 ~]# systemctl restart docker

[root@server2 docker]# docker info

Cgroup Driver: systemd
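kubeadm's preflight checks warn when Docker uses the cgroupfs driver and recommend systemd, so it is worth checking every node after the restart. This sketch extracts the driver from docker info output. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cgroup driver from `docker info` output (stdin).
docker_cgroup_driver() {
  awk -F': ' '/Cgroup Driver:/ { print $2; exit }'
}

# Example:
#   [ "$(docker info 2>/dev/null | docker_cgroup_driver)" = systemd ] || echo "still cgroupfs"
```

On Docker 19.03, docker info --format '{{.CgroupDriver}}' should print the same value directly. The text-parsing version above avoids depending on the template field name.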

The cgroup hierarchies, including systemd, are mounted under /sys/fs/cgroup:

[root@server2 cgroup]# pwd

/sys/fs/cgroup

[root@server2 cgroup]# ls

blkio cpu cpuacct cpu,cpuacct cpuset devices freezer hugetlb memory net_cls net_cls,net_prio net_prio perf_event pids systemd

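- Disable swap (on server2, server3 and server4). By default the kubelet does not start while swap is enabled, and swapoff -a only lasts until the next reboot, so also comment out the swap entry in /etc/fstab: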

[root@server2 ~]# swapoff -a

[root@server2 ~]# cat /etc/fstab

# /dev/mapper/rhel-swap swap swap defaults 0 0
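Here is a sketch for commenting out the swap entry without opening an editor on each node. It uses a hypothetical helper that prefixes `#` to any uncommented line whose filesystem type (third field) is swap, and keeps a backup copy:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab file so
# swap stays off after a reboot. The original is kept as <file>.bak.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab}
  cp -p "$fstab" "$fstab.bak" || return 1
  # Prefix '#' to non-comment lines whose third field (fs type) is "swap";
  # every other line is printed unchanged.
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' \
    "$fstab.bak" > "$fstab"
}

# Example (as root):
#   swapoff -a && disable_swap_in_fstab && grep swap /etc/fstab
```

Running it twice is harmless, because lines that are already commented out are left alone.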

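- Enable and start the kubelet (on server2, server3 and server4). Until kubeadm init or kubeadm join gives it a configuration, the kubelet restarts every few seconds; this is expected: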

[root@server2 ~]# systemctl start kubelet

[root@server2 ~]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

- Print kubeadm's default configuration:

[root@server2 ~]# kubeadm config print init-defaults

W0417 18:31:32.834819 13832 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: server2
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

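By default kubeadm pulls its images from k8s.gcr.io, which is not reachable from this network without a proxy, so switch the image repository.

# list the required images from the Aliyun mirror instead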

[root@server2 ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

W0417 18:38:45.037833 14155 version.go:102] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W0417 18:38:45.037880 14155 version.go:103] falling back to the local client version: v1.18.1

W0417 18:38:45.037969 14155 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.1

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.18.1

registry.aliyuncs.com/google_containers/kube-scheduler:v1.18.1

registry.aliyuncs.com/google_containers/kube-proxy:v1.18.1

registry.aliyuncs.com/google_containers/pause:3.2

registry.aliyuncs.com/google_containers/etcd:3.4.3-0

registry.aliyuncs.com/google_containers/coredns:1.6.7

- Pull the images kubeadm needs from the Aliyun mirror:

[root@server2 ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

[root@server2 ~]# docker images | grep registry.aliyuncs.com

registry.aliyuncs.com/google_containers/kube-proxy v1.18.1 4e68534e24f6 8 days ago 117MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.1 d1ccdd18e6ed 8 days ago 162MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.18.1 a595af0107f9 8 days ago 173MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.18.1 6c9320041a7b 8 days ago 95.3MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 2 months ago 683kB

registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 2 months ago 43.8MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 5 months ago 288MB

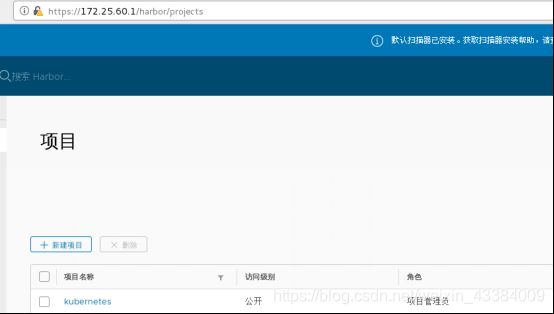

- Push the images kubeadm downloaded to the private registry, after restarting Harbor on server1:

[root@server1 ~/harbor]#docker-compose stop

Stopping harbor-portal ... done

Stopping registryctl ... done

Stopping redis ... done

Stopping registry ... done

Stopping harbor-log ... done

[root@server1 ~/harbor]#docker-compose start

Starting log ... done

Starting registry ... done

Starting registryctl ... done

Starting postgresql ... done

Starting portal ... done

Starting redis ... done

Starting core ... done

Starting jobservice ... done

Starting proxy ... done

Starting notary-signer ... done

Starting notary-server ... done

Starting clair ... done

Starting clair-adapter ... done

Starting chartmuseum ... done

[root@server2 ~]# for i in `docker images | grep registry.aliyuncs.com | awk '{print $1":"$2}'|awk -F / '{print $3}'`;do docker tag registry.aliyuncs.com/google_containers/$i reg.westos.org/kubernetes/$i; done

[root@server2 ~]# docker images | grep reg.westos.org

reg.westos.org/kubernetes/kube-proxy v1.18.1 4e68534e24f6 8 days ago 117MB

reg.westos.org/kubernetes/kube-controller-manager v1.18.1 d1ccdd18e6ed 8 days ago 162MB

reg.westos.org/kubernetes/kube-apiserver v1.18.1 a595af0107f9 8 days ago 173MB

reg.westos.org/kubernetes/kube-scheduler v1.18.1 6c9320041a7b 8 days ago 95.3MB

reg.westos.org/nginx latest ed21b7a8aee9 2 weeks ago 127MB

reg.westos.org/ubuntu latest 4e5021d210f6 3 weeks ago 64.2MB

reg.westos.org/kubernetes/pause 3.2 80d28bedfe5d 2 months ago 683kB

reg.westos.org/kubernetes/coredns 1.6.7 67da37a9a360 2 months ago 43.8MB

reg.westos.org/kubernetes/etcd 3.4.3-0 303ce5db0e90 5 months ago 288MB

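Push the images. Filtering on reg.westos.org/kubernetes keeps the unrelated nginx and ubuntu images listed above out of the loop:

[root@server2 ~]# for i in `docker images | grep reg.westos.org/kubernetes | awk '{print $1":"$2}'`;do docker push $i ;done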
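The tag and push loops can be combined into one script that works from kubeadm's own image list instead of grepping docker images. The sketch below assumes the kubernetes project already exists in Harbor (Harbor rejects pushes into a project that does not exist) and that docker login reg.westos.org has been run. The function names are hypothetical, and setting DOCKER=echo turns it into a dry run:

```shell
#!/usr/bin/env bash
# Sketch: copy kubeadm's control-plane images from the Aliyun mirror into the
# private Harbor project. Assumes the "kubernetes" project exists and that
# `docker login reg.westos.org` has been done. DOCKER=echo gives a dry run.
SRC_REPO=registry.aliyuncs.com/google_containers
DST_REPO=reg.westos.org/kubernetes
DOCKER=${DOCKER:-docker}

# Map e.g. registry.aliyuncs.com/google_containers/pause:3.2
#      to  reg.westos.org/kubernetes/pause:3.2
retarget() {
  printf '%s/%s\n' "$DST_REPO" "${1#"$SRC_REPO"/}"
}

# Reads image references on stdin, one per line; lines that are not images
# from SRC_REPO (e.g. kubeadm warnings) are skipped.
mirror_images() {
  local src dst
  while read -r src; do
    case $src in
      "$SRC_REPO"/*) ;;
      *) continue ;;
    esac
    dst=$(retarget "$src")
    # </dev/null keeps docker from consuming the image list on stdin.
    "$DOCKER" pull "$src" </dev/null &&
      "$DOCKER" tag "$src" "$dst" </dev/null &&
      "$DOCKER" push "$dst" </dev/null || return 1
  done
}

# Example:
#   kubeadm config images list --image-repository "$SRC_REPO" 2>&1 | mirror_images
```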

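- Certificates: server2, server3 and server4 all need the registry's CA certificate under /etc/docker/certs.d. Copy it from server2 to the other nodes: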

[root@server2 docker]# scp -r certs.d server4:/etc/docker

ca.crt

- Install bash completion support:

[root@server4 ~]# yum install bash-* -y

- Test that server4 can pull from the private registry:

[root@server4 ~]# docker pull reg.westos.org/kubernetes/kube-proxy:v1.18.1

v1.18.1: Pulling from kubernetes/kube-proxy

597de8ba0c30: Pull complete

3f0663684f29: Pull complete

e1f7f878905c: Pull complete

3029977cf65d: Pull complete

cc627398eeaa: Pull complete

d3609306ce38: Pull complete

492846b7a550: Pull complete

Digest: sha256:f9c0270095cdeac08d87d20828f3ddbc7cbc24b3cc6569aa9e7022e75c333d18

Status: Downloaded newer image for reg.westos.org/kubernetes/kube-proxy:v1.18.1

reg.westos.org/kubernetes/kube-proxy:v1.18.1

[root@server4 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

reg.westos.org/kubernetes/kube-proxy v1.18.1 4e68534e24f6 8 days ago 117MB

[root@server4 ~]# docker rmi reg.westos.org/kubernetes/kube-proxy:v1.18.1

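- Initialize the cluster on server2 (the master). --pod-network-cidr=10.244.0.0/16 matches the default network in flannel's manifest, and --image-repository points kubeadm at the private registry:

[root@server2 docker]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository reg.westos.org/kubernetes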

W0417 20:29:43.970319 19674 version.go:102] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W0417 20:29:43.970372 19674 version.go:103] falling back to the local client version: v1.18.1

W0417 20:29:43.970499 19674 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [server2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.25.60.2]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [server2 localhost] and IPs [172.25.60.2 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [server2 localhost] and IPs [172.25.60.2 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0417 20:29:50.241610 19674 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0417 20:29:50.243664 19674 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 103.047201 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node server2 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node server2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: cla979.v9j844jwmz0szaog

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.25.60.2:6443 --token cla979.v9j844jwmz0szaog \

--discovery-token-ca-cert-hash sha256:3ba197deeae92fde4822aeccf66c89b9920cf65336514d4b474dbbbed5d62f00
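You can also describe the same init in a kubeadm configuration file instead of passing flags. Pinning kubernetesVersion there should also stop kubeadm from looking up the latest version online, which is what produced the dl.k8s.io timeout warning above. This sketch uses the v1beta2 API shown by kubeadm config print init-defaults. The function name and the file name kubeadm-init.yaml are assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to
#   kubeadm init --pod-network-cidr=10.244.0.0/16 \
#                --image-repository reg.westos.org/kubernetes
# plus a pinned version and the master's advertise address.
write_kubeadm_config() {
  local path=${1:-kubeadm-init.yaml}
  cat > "$path" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.60.2
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.1
imageRepository: reg.westos.org/kubernetes
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}

# Example (on server2, as root):
#   write_kubeadm_config && kubeadm init --config kubeadm-init.yaml
```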

- Join server3 and server4 to the cluster by running the join command printed above on each:

[root@server3 docker]# kubeadm join 172.25.60.2:6443 --token cla979.v9j844jwmz0szaog \

> --discovery-token-ca-cert-hash sha256:3ba197deeae92fde4822aeccf66c89b9920cf65336514d4b474dbbbed5d62f00

W0417 20:42:35.801102 17320 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@server4 docker]# kubeadm join 172.25.60.2:6443 --token cla979.v9j844jwmz0szaog \

> --discovery-token-ca-cert-hash sha256:3ba197deeae92fde4822aeccf66c89b9920cf65336514d4b474dbbbed5d62f00
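The bootstrap token printed by kubeadm init expires after 24 hours (the ttl: 24h0m0s default shown earlier). For nodes joined later, running kubeadm token create --print-join-command on the master prints a fresh join command. The sketch below runs that command on each worker over SSH. The helper name is hypothetical, and you can override KUBEADM and SSH for a dry run:

```shell
#!/usr/bin/env bash
# Hypothetical helper: join worker nodes using a freshly created token.
# Run as root on the master; KUBEADM and SSH can be overridden (SSH=echo
# prints the commands instead of running them).
KUBEADM=${KUBEADM:-kubeadm}
SSH=${SSH:-ssh}

join_workers() {
  local join_cmd node
  # Prints a complete "kubeadm join <ip>:6443 --token ... --discovery-token-ca-cert-hash ..." line.
  join_cmd=$("$KUBEADM" token create --print-join-command) || return 1
  for node in "$@"; do
    echo "== joining $node"
    "$SSH" "root@$node" "$join_cmd" || return 1
  done
}

# Example:
#   join_workers server3 server4
```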

- Create a regular user to run kubectl, with passwordless sudo (the second line below is added in visudo):

[root@server2 ~]# useradd kubeadm

[root@server2 ~]# visudo

root ALL=(ALL) ALL

kubeadm ALL=(ALL) NOPASSWD:ALL

[root@server2 ~]# su - kubeadm

- Set up the kubeconfig for this user:

[kubeadm@server2 ~]$ mkdir -p $HOME/.kube

[kubeadm@server2 ~]$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[kubeadm@server2 ~]$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

[kubeadm@server2 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

server2 NotReady master 30m v1.18.1

server3 NotReady <none> 18m v1.18.1

server4 NotReady <none> 18m v1.18.1

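The nodes are NotReady and the coredns pods below stay Pending because no pod network add-on has been installed yet:

[kubeadm@server2 ~]$ kubectl get pod -n kube-system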

NAME READY STATUS RESTARTS AGE

coredns-7b8f97b6db-5g4hh 0/1 Pending 0 30m

coredns-7b8f97b6db-jxccd 0/1 Pending 0 30m

etcd-server2 1/1 Running 0 30m

kube-apiserver-server2 1/1 Running 0 30m

kube-controller-manager-server2 0/1 CrashLoopBackOff 5 30m

kube-proxy-hb9c7 1/1 Running 0 19m

kube-proxy-jgnk5 1/1 Running 0 30m

kube-proxy-s6nzt 1/1 Running 0 19m

kube-scheduler-server2 1/1 Running 6 30m

- Enable kubectl command completion:

[kubeadm@server2 ~]$ echo "source <(kubectl completion bash)" >> ~/.bashrc

[kubeadm@server2 ~]$ source .bashrc

[kubeadm@server2 ~]$ kubectl

alpha attach completion create edit kustomize plugin run uncordon

annotate auth config delete exec label port-forward scale version

api-resources autoscale convert describe explain logs proxy set wait

api-versions certificate cordon diff expose options replace taint

apply cluster-info cp drain get patch rollout top

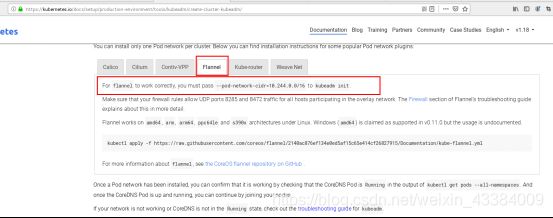

- Install the flannel pod network:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

If that URL cannot be downloaded, copy kube-flannel.yml from the flannel repository on GitHub into a local file:

https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml#L1-L602

[kubeadm@server2 ~]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[kubeadm@server2 ~]$ kubectl get pod -n kube-system -o wide

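(The output showed errors at this point.)

Troubleshooting:

https://github.com/coreos/flannel/releases

Download the flannel image from the releases page and push it to the reg.westos.org/kubernetes repository.

Run docker pull for the flannel image on server3 and server4.

Oddly, this resolved the problem; the root cause was not identified.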
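A flannel image pushed to reg.westos.org is only used if the manifest actually references it. kube-flannel.yml normally points at an upstream registry. This sketch rewrites the image references in a local copy before it is applied. The helper name is hypothetical. Both prefixes are assumptions (the upstream one is taken to be quay.io/coreos/flannel), so check the real references first with grep 'image:' kube-flannel.yml:

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the flannel image references in a local
# kube-flannel.yml at the private registry. Both prefixes are assumptions;
# check the manifest first with: grep 'image:' kube-flannel.yml
use_private_flannel_image() {
  local manifest=${1:-kube-flannel.yml}
  local from=${2:-quay.io/coreos/flannel}
  local to=${3:-reg.westos.org/kubernetes/flannel}
  # Rewrite "image: <from>:<tag>" to "image: <to>:<tag>"; a .bak copy is kept.
  sed -i.bak "s#image: ${from}:#image: ${to}:#" "$manifest"
}

# Example (as the kubeadm user on server2):
#   use_private_flannel_image kube-flannel.yml && kubectl apply -f kube-flannel.yml
```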

[kubeadm@server2 ~]$ kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7b8f97b6db-5g4hh 1/1 Running 1 167m 10.244.0.5 server2 <none> <none>

coredns-7b8f97b6db-jxccd 1/1 Running 1 167m 10.244.0.4 server2 <none> <none>

etcd-server2 1/1 Running 6 168m 172.25.60.2 server2 <none> <none>

kube-apiserver-server2 1/1 Running 10 168m 172.25.60.2 server2 <none> <none>

kube-controller-manager-server2 1/1 Running 21 168m 172.25.60.2 server2 <none> <none>

kube-flannel-ds-amd64-6cglm 1/1 Running 1 80m 172.25.60.2 server2 <none> <none>

kube-flannel-ds-amd64-957jx 1/1 Running 1 80m 172.25.60.3 server3 <none> <none>

kube-flannel-ds-amd64-gknfj 1/1 Running 0 80m 172.25.60.4 server4 <none> <none>

kube-proxy-hb9c7 1/1 Running 4 157m 172.25.60.3 server3 <none> <none>

kube-proxy-jgnk5 1/1 Running 4 167m 172.25.60.2 server2 <none> <none>

kube-proxy-s6nzt 1/1 Running 3 157m 172.25.60.4 server4 <none> <none>

kube-scheduler-server2 1/1 Running 22 168m 172.25.60.2 server2 <none> <none>

[kubeadm@server2 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

server2 Ready master 169m v1.18.1

server3 Ready <none> 157m v1.18.1

server4 Ready <none> 157m v1.18.1
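Instead of rerunning kubectl get nodes by hand until every node turns Ready, you can poll it. This is a minimal sketch with hypothetical function names. It treats a node as ready only when its STATUS column is exactly Ready, so a cordoned node (Ready,SchedulingDisabled) keeps it waiting:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: wait until every node reports STATUS "Ready".
KUBECTL=${KUBECTL:-kubectl}

# Reads `kubectl get nodes --no-headers` output on stdin; exits 0 only if
# there is at least one node and every node's STATUS column is "Ready".
all_nodes_ready() {
  awk 'NF { total++; if ($2 == "Ready") ready++ }
       END { exit !(total > 0 && ready == total) }'
}

# Polls up to N times (default 60), 5 seconds apart.
wait_for_nodes() {
  local tries=${1:-60}
  while [ "$tries" -gt 0 ]; do
    if "$KUBECTL" get nodes --no-headers 2>/dev/null | all_nodes_ready; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 5
  done
  return 1
}

# Example (as the kubeadm user on server2):
#   wait_for_nodes && kubectl get nodes
```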