GMM模型和EM算法

GMM模型和EM算法

高斯混合模型GMM

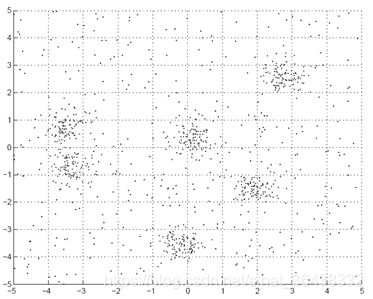

上图的分布很难用一个高斯分布进行模拟。观察上图,大致可以看见6个分布。将6个高斯分布线性加到一起就组成了能描述上图的高斯混合分布。

混高斯合分布的概率密度函数如下:

p ( x ∣ θ ) = ∑ j = 1 K p ( x , z = j ∣ θ ) = p ( z = j ∣ θ ) p ( x ∣ z = j , θ ) = ∑ j = 1 K π j N ( x ; μ j , Σ j ) 其 中 : p ( z = j ∣ θ ) = π j ; p ( x ∣ z = j , θ ) = N ( x ; μ j , Σ j ) θ ≡ { π j , μ j , Σ j ; j = 1 , 2 , … , K } ; j = 1 , 2 , … , K \begin{array}{l}p(x|\theta ) = \sum\limits_{j = 1}^K p (x,z = j|\theta ) = p(z = j|\theta )p(x|z = j,\theta ) = \sum\limits_{j = 1}^K {{\pi _j}} N\left( {x;{\mu _j},{\Sigma _j}} \right)\\ \\ \\其中:p(z = j|\theta ) = {\pi _j};\quad p(x|z = j,\theta ) = N\left( {x;{\mu _j},{\Sigma _j}} \right)\\\theta \equiv \left\{ {{\pi _j},{\mu _j},{\Sigma _j};j = 1,2, \ldots ,K} \right\};j = 1,2, \ldots ,K\end{array} p(x∣θ)=j=1∑Kp(x,z=j∣θ)=p(z=j∣θ)p(x∣z=j,θ)=j=1∑KπjN(x;μj,Σj)其中:p(z=j∣θ)=πj;p(x∣z=j,θ)=N(x;μj,Σj)θ≡{πj,μj,Σj;j=1,2,…,K};j=1,2,…,K

对于高斯混合模型的参数估计,如果利用最大似然估计,即:

θ ∗ = argmax θ ∏ i = 1 N p ( x i ∣ θ ) \theta^{*}=\operatorname{argmax}_{\theta} \prod_{i=1}^{N} p\left(x_{i} | \theta\right) θ∗=argmaxθi=1∏Np(xi∣θ)

对似然函数取对数,求解最优化问题(求导数为0):

l ( θ ; x ) = log p ( x ∣ θ ) = log ∏ i p ( x i ∣ θ ) = ∑ i log p ( x i ∣ θ ) = ∑ i log ∑ z p ( x i , z ∣ θ ) = 0 \begin{aligned} l(\theta ; x)=\log p(x | \theta) &=\log \prod_{i} p\left(x_{i} | \theta\right) \\ &=\sum_{i} \log p\left(x_{i} | \theta\right) \\ &=\sum_{i} \log \sum_{z} p\left(x_{i}, z | \theta\right)=0 \end{aligned} l(θ;x)=logp(x∣θ)=logi∏p(xi∣θ)=i∑logp(xi∣θ)=i∑logz∑p(xi,z∣θ)=0

log函数里面有个求和符号,很难求导来求最优值。因此最大似然估计难以进行GMM的参数估计。

EM算法(Expectation-Maximization Algorithm)

观察上面那副图我们可以大致地看出有6个高斯分布。如果对于上图地每一个点,能够大致的地判断它属于这六个高斯分布中的哪一个,即为这些点贴上“标签”z,然后对于每个高斯分布,利用我们认为属于该分布的点估计参数,这样就能够得出这个高斯混合分布的参数了。这就是EM算法的直观理解。而这些“标签”z可以认为是分布的隐含变量(当然,这些标签可以是“软划分”,即属于某个高斯分布的概率)。

回到上文的对数似然函数:

l ( θ ; x ) = ∑ i log ∑ z p ( x i , z ∣ θ ) \begin{aligned}l(\theta ; x)=\sum_{i} \log \sum_{z} p\left(x_{i}, z | \theta\right)\end{aligned} l(θ;x)=i∑logz∑p(xi,z∣θ)

其中:

log ∑ z p ( x , z ∣ θ ) = log ∑ z q ( z ) p ( x , z ∣ θ ) q ( z ) ≥ ∑ z q ( z ) log p ( x , z ∣ θ ) q ( z ) ( 根 据 J e n s e n 不 等 式 ) {\log \sum\limits_z p (x,z|\theta ) = \log \sum\limits_z q (z)\frac{{p(x,z|\theta )}}{{q(z)}} \ge \sum\limits_z q (z)\log \frac{{p(x,z|\theta )}}{{q(z)}}}(根据Jensen不等式) logz∑p(x,z∣θ)=logz∑q(z)q(z)p(x,z∣θ)≥z∑q(z)logq(z)p(x,z∣θ)(根据Jensen不等式)

为了下面表示方便,令:

L ( θ ; x ) = ∑ i ∑ z q ( z ) log p ( x , z ∣ θ ) q ( z ) l ( θ ; x ) ≥ L ( θ ; x ) L(\theta ; x)=\sum_{i} \sum\limits_z q (z)\log \frac{{p(x,z|\theta )}}{{q(z)}}\\l(\theta ; x) \ge L(\theta ; x) L(θ;x)=i∑z∑q(z)logq(z)p(x,z∣θ)l(θ;x)≥L(θ;x)

如果我们能根据Jensen不等式放缩这个似然函数,求和符号就挪到了log函数外面,这样就能更好求导来求解最优值。Jensen不等式:

Jensen’s inequality: f ( ∑ j λ j x j ) ≥ ∑ j λ j f ( x j ) \text { Jensen's inequality: } f\left(\sum_{j} \lambda_{j} x_{j}\right) \geq \sum_{j} \lambda_{j} f\left(x_{j}\right) Jensen’s inequality: f(j∑λjxj)≥j∑λjf(xj)

但是,这个q(z)该是多少呢?结果证明,q(z)=p(z|x,θ)时最好,因为此时式子的等号成立:

∑ z p ( z ∣ x , θ ) log p ( x , z ∣ θ ) p ( z ∣ x , θ ) = ∑ z p ( z ∣ x , θ ) log p ( x ∣ θ ) = log p ( x ∣ θ ) = ∑ z p ( x i , z ∣ θ ) \begin{array}{l}\sum\limits_z p \left( {z|x,\theta } \right)\log \frac{{p\left( {x,z|\theta } \right)}}{{p\left( {z|x,\theta } \right)}}\\ = \sum\limits_z p \left( {z|x,\theta } \right)\log p\left( {x|\theta } \right)\\ = \log p\left( {x|\theta } \right)\\ = \sum\limits_z p \left( {{x_i},z|\theta } \right)\end{array} z∑p(z∣x,θ)logp(z∣x,θ)p(x,z∣θ)=z∑p(z∣x,θ)logp(x∣θ)=logp(x∣θ)=z∑p(xi,z∣θ)

所以,E步就是利用目前的参数θ来,根据q(z)=p(z|x,θ)来计算q(z),使得(也就是说,当q(z)=p(z|x,θ)时,下式取最优值):

q ( t + 1 ) = arg max q L ( q , θ ( t ) ) q^{(t+1)}=\arg \max _{q} L\left(q, \theta^{(t)}\right) q(t+1)=argqmaxL(q,θ(t))

M步就是利用L(θ|x)来近似l(θ|x),求解最优化问题计算θ:

θ ( t + 1 ) = arg max q L ( q ( t ) , θ ) \theta^{(t+1)}=\arg \max _{q} L\left(q^{(t)},\theta\right) θ(t+1)=argqmaxL(q(t),θ)

L ( q , θ ) = ∑ i = 1 N ∑ z i q ( z i ) log p ( x i , z i ∣ θ ) q ( z i ) = ∑ i = 1 N ∑ z i q ( z i ) log p ( x i z i ∣ θ ) − q ( z i ) log q ( z i ) = ∑ i = 1 N ∑ j = 1 K q i j log [ π j ∗ p ( x i ∣ φ j ) ] + C ( − q l o g q 可 以 看 作 是 常 数 ) \begin{aligned}L(q, \theta) &=\sum_{i=1}^{N} \sum_{z_{i}} q\left(z_{i}\right) \log \frac{p\left(x_{i}, z_{i} | \theta\right)}{q\left(z_{i}\right)} \\&=\sum_{i=1}^{N} \sum_{z_{i}} q\left(z_{i}\right) \log p\left(x_{i} z_{i} | \theta\right)-q\left(z_{i}\right) \log q\left(z_{i}\right) \\&=\sum_{i=1}^{N} \sum_{j=1}^{K} q_{i j} \log \left[\pi_{j} * p\left(x_{i} | \varphi_{j}\right)\right]+C(-qlogq可以看作是常数)\end{aligned} L(q,θ)=i=1∑Nzi∑q(zi)logq(zi)p(xi,zi∣θ)=i=1∑Nzi∑q(zi)logp(xizi∣θ)−q(zi)logq(zi)=i=1∑Nj=1∑Kqijlog[πj∗p(xi∣φj)]+C(−qlogq可以看作是常数)

其中,φj表示混合分布中,第j个高斯分布的参数(μ,Σ),这里为了简便而统一表示。πj即高斯混合分布公式中每个高斯分布的权重,即:

p ( z = j ∣ θ ) = π j ; p ( x ∣ z = j , θ ) = p ( x ∣ φ j ) \begin{aligned}&p(z=j | \theta)=\pi_{j};\\&p(x | z=j, \theta)=p\left(x | \varphi_{j}\right)\end{aligned} p(z=j∣θ)=πj;p(x∣z=j,θ)=p(x∣φj)

对于φj,将L求导等于零即可求得:

∂ L ( q , θ ) ∂ φ j = ∂ { ∑ i = 1 N q i j log p ( x i ∣ φ j ) } ∂ φ j = 0 ⇒ φ j \frac{{\partial L(q,\theta )}}{{\partial {\varphi _j}}} = \frac{{\partial \left\{ {\sum\limits_{i = 1}^N {{q_{ij}}} \log p\left( {{x_i}|{\varphi _j}} \right)} \right\}}}{{\partial {\varphi _j}}} = 0 \Rightarrow {\varphi _j} ∂φj∂L(q,θ)=∂φj∂{i=1∑Nqijlogp(xi∣φj)}=0⇒φj

而对于πj,还满足于一个约束条件:

∑ j = 1 K π j = 1 \sum_{j=1}^{K} \pi_{j}=1 j=1∑Kπj=1

因此需要利用拉格朗日乘子求解。

在回到E步,之前说我们用z表示"标签",对于某一个高斯分布,q(z)=p(z|x,θ)具体公式如下:

q ( z i = c ) = p ( z i = c ∣ x i , θ t ) = p ( x i , z i = c ∣ θ t ) ∑ d p ( x i , z i = d ∣ θ t ) q\left(z_{i}=c\right)=p\left(z_{i}=c | x_{i}, \theta^{t}\right)=\frac{p\left(x_{i}, z_{i}=c | \theta^{t}\right)}{\sum_{d} p\left(x_{i}, z_{i}=d | \theta^{t}\right)} q(zi=c)=p(zi=c∣xi,θt)=∑dp(xi,zi=d∣θt)p(xi,zi=c∣θt)

对于GMM模型来说:

q ( z i = c ) = π ( z i = c ) N ( x i ∣ μ c , σ c ) ∑ d π ( z i = d ) N ( x i ∣ μ d , σ d ) q\left(z_{i}=c\right)=\frac{\pi\left(z_{i}=c\right) N\left(x_{i} | \mu_{c, \sigma_{c}}\right)}{\sum_{d} \pi\left(z_{i}=d\right) N\left(x_{i} | \mu_{d}, \sigma_{d}\right)} q(zi=c)=∑dπ(zi=d)N(xi∣μd,σd)π(zi=c)N(xi∣μc,σc)