Spark Work Notes

package bd.export.ent;

import java.io.BufferedWriter;
import java.io.FileNotFoundException;
import java.io.FileWriter;
import java.io.IOException;
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Calendar;
import java.util.Date;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;

import org.apache.commons.lang3.StringUtils;
import org.apache.spark.SparkConf;
import org.apache.spark.SparkContext;
import org.apache.spark.aliyun.odps.OdpsOps;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.function.Function;
import org.apache.spark.api.java.function.Function2;
import org.apache.spark.api.java.function.PairFunction;

import com.aliyun.odps.TableSchema;
import com.aliyun.odps.data.Record;
import com.jianfeitech.bd.util.crypto.AES;

import scala.Tuple2;

/**
 * @author Liqiang
 * @date Created: 2017-05-24 15:44:24
 * @version 1.0
 */

public class QueryMacFromScanThirtyDaysOriginal {

    private final static String QUERY_TN = "scan_original";
    private final static String PROJECT_NM = "xxx";
    private static String accessId = "xxx";
    private static String accessKey = "xxx";
    private static String tunnelUrl = "xxx";
    private static String odpsUrl = "xxx";
    private static final String PARTITION_NAME = "data_date";
    private static final String RESULT_CSV_PATH = "/home/hadoop/liqiang/QueryMacFromScan/mac_result_my.csv";

    public static void main(String[] args) {
        // Expected arguments: <datadate yyyyMMdd> <apserial> <days>.
        // Elements of args are never null, so check args.length instead
        // (indexing a missing argument would throw ArrayIndexOutOfBoundsException).
        if (args.length < 1) {
            System.out.println("Please Input datadate!");
            return;
        }
        if (args.length < 2) {
            System.out.println("Please Input apserial!");
            return;
        }
        if (args.length < 3) {
            System.out.println("Please Input days!");
            return;
        }

        String inputDataDate = args[0];
        final String INPUT_APSERIAL = args[1];
        final Integer QUERY_DAYS = Integer.valueOf(args[2]);

        System.out.println("Input Params Is===>>>[" + inputDataDate + "],[" + INPUT_APSERIAL + "],[" + QUERY_DAYS + "]");

        SparkConf conf = new SparkConf().setAppName(QueryMacFromScanThirtyDaysOriginal.class.getName());
        SparkContext sc = new SparkContext(conf);
        OdpsOps odpsOps = new OdpsOps(sc, accessId, accessKey, odpsUrl, tunnelUrl);

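        // Input date: distinct decrypted MACs whose apserial contains INPUT_APSERIAL
        JavaRDD<String> oneDayRdd = odpsOps.readTableWithJava(PROJECT_NM, QUERY_TN, PARTITION_NAME + "=" + inputDataDate, new Function2<Record, TableSchema, String>() {
            @Override
            public String call(Record record, TableSchema v2) throws Exception {
                String mac = record.getString("mac");
                String apserial = record.getString("apserial");
                if (apserial == null || apserial.indexOf(INPUT_APSERIAL) == -1) {
                    return null;
                }
                if (StringUtils.isBlank(mac)) {
                    return null;
                }
                return AES.decryptDefault(mac.trim());
            }
        }, 12).filter(new Function<String, Boolean>() {
            @Override
            public Boolean call(String v1) throws Exception {
                return v1 != null;
            }
        }).distinct();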

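        // Accumulates the (mac, date) pairs of all following days
        JavaPairRDD<String, Integer> intersectionAllPair = null;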

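        for (int i = 0; i < QUERY_DAYS; i++) {
            /*JavaPairRDD<String, Integer> intersectionPair = null;
            JavaRDD<String> someDayRdd = null;*/
            String afterNDaysDate = getAfterNDaysDate(inputDataDate, i + 1);
            Integer date = Integer.valueOf(afterNDaysDate);
            final Integer innerDate = date;
            System.out.println("Current Partition Is===>" + PARTITION_NAME + "=" + innerDate);
            try {
                // Read day i+1 with the same apserial/MAC filter, keep only MACs also seen
                // on the input date, and tag each surviving MAC with this day's date
                JavaPairRDD<String, Integer> mapToPair = odpsOps.readTableWithJava(PROJECT_NM, QUERY_TN, PARTITION_NAME + "=" + innerDate, new Function2<Record, TableSchema, String>() {
                    @Override
                    public String call(Record record, TableSchema v2) throws Exception {
                        String mac = record.getString("mac");
                        String apserial = record.getString("apserial");
                        if (apserial == null || apserial.indexOf(INPUT_APSERIAL) == -1) {
                            return null;
                        }
                        if (StringUtils.isBlank(mac)) {
                            return null;
                        }
                        return AES.decryptDefault(mac.trim());
                    }
                }, 12).filter(new Function<String, Boolean>() {
                    @Override
                    public Boolean call(String v1) throws Exception {
                        return v1 != null;
                    }
                }).distinct().intersection(oneDayRdd).mapToPair(new PairFunction<String, String, Integer>() {
                    @Override
                    public Tuple2<String, Integer> call(String mac) throws Exception {
                        return new Tuple2<String, Integer>(mac, innerDate);
                    }
                });
                // Union this day's (mac, date) pairs into the running result
                if (intersectionAllPair == null) {
                    intersectionAllPair = mapToPair;
                } else {
                    intersectionAllPair = intersectionAllPair.union(mapToPair);
                }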

                /* someDayRdd = odpsOps.readTableWithJava(PROJECT_NM, QUERY_TN, PARTITION_NAME + "=" + innerDate, new Function2<Record, TableSchema, String>() {
                    @Override
                    public String call(Record record, TableSchema v2) throws Exception {
                        String mac = record.getString("mac");
                        String apserial = record.getString("apserial");
                        if (apserial == null || apserial.indexOf(INPUT_APSERIAL) == -1) {
                            return null;
                        }
                        if (StringUtils.isBlank(mac)) {
                            return null;
                        }
                        return mac.trim();
                    }
                }, 12).filter(new Function<String, Boolean>() {
                    @Override
                    public Boolean call(String v1) throws Exception {
                        return v1 != null;
                    }
                });
                intersectionPair = oneDayRdd.intersection(someDayRdd).mapToPair(new PairFunction<String, String, Integer>() {
                    @Override
                    public Tuple2<String, Integer> call(String mac) throws Exception {
                        return new Tuple2<String, Integer>(mac, innerDate);
                    }
                });
                if (intersectionAllPair == null) {
                    intersectionAllPair = intersectionPair;
                } else {
                    intersectionAllPair = intersectionAllPair.union(intersectionPair);
                }
                intersectionAllPair.count();*/

            } catch (Exception e) {
                System.out.println("Get Partition " + date + " Error!===>>>" + e);
            }
        }

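        // No partition could be read (or days was 0): nothing to group or write
        if (intersectionAllPair == null) {
            System.out.println("No (mac, date) pairs found, nothing to write!");
            return;
        }

        // Group the (mac, date) pairs by MAC and bring them back to the driver
        List<Tuple2<String, Iterable<Integer>>> result = intersectionAllPair.groupByKey().collect();
        System.out.println("=============================" + result.size());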

        // Build the column-name array: "mac" followed by one yyyyMMdd column per queried day
        String[] columnNames = new String[QUERY_DAYS + 1];
        columnNames[0] = "mac";
        for (int i = 1; i <= QUERY_DAYS; i++) {
            columnNames[i] = getAfterNDaysDate(inputDataDate, i);
        }

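        List<QueryMacFromScanResultDynamicBean> resultList = new ArrayList<QueryMacFromScanResultDynamicBean>();
        for (Tuple2<String, Iterable<Integer>> tuple : result) {
            // Dynamically generate the result bean (every column initialized to "0")
            QueryMacFromScanResultDynamicBean generateResultBean = generateResultBean(columnNames);
            String mac = tuple._1;
            generateResultBean.setValue("mac", mac);
            // Mark every day on which this MAC reappeared with "1"
            Iterable<Integer> dates = tuple._2;
            Iterator<Integer> iterator = dates.iterator();
            while (iterator.hasNext()) {
                Integer date = iterator.next();
                generateResultBean.setValue(String.valueOf(date), "1");
            }
            resultList.add(generateResultBean);
        }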

        System.out.println("resultList size==>>" + resultList.size());
        for (int i = 0; i < columnNames.length; i++) {
            System.out.println("Column name==>>" + columnNames[i]);
        }
        for (QueryMacFromScanResultDynamicBean bean : resultList) {
            System.out.println("Bean mac==>>" + bean.getValue("mac"));
        }

        // Write the CSV
        writeResultToCsv(columnNames, resultList, RESULT_CSV_PATH);
        System.out.println("Written successfully!!!");
    }

    // Returns the yyyyMMdd date that lies `count` days after currentDate, or null if currentDate cannot be parsed
    static String getAfterNDaysDate(String currentDate, int count) {
        try {
            SimpleDateFormat sdf = new SimpleDateFormat("yyyyMMdd");
            Date date = sdf.parse(currentDate);
            Calendar calendar = Calendar.getInstance();
            calendar.setTime(date);
            // add() rolls over month and year boundaries (e.g. 20170531 + 1 -> 20170601)
            calendar.add(Calendar.DATE, count);
            String afterSomeDate = sdf.format(calendar.getTime());
            System.out.println("==>>" + afterSomeDate);
            return afterSomeDate;
        } catch (ParseException e) {
            System.out.println("getAfterNDaysDate Error!" + e);
        }
        return null;
    }

    // Dynamically create a result bean with one String property per column, all initialized to "0"
    static QueryMacFromScanResultDynamicBean generateResultBean(String[] columnNames) {
        try {
            // Define the bean's member properties
            HashMap propertyMap = new HashMap();
            for (int i = 0; i < columnNames.length; i++) {
                propertyMap.put(columnNames[i], Class.forName("java.lang.String"));
            }
            // Generate the dynamic bean
            QueryMacFromScanResultDynamicBean bean = new QueryMacFromScanResultDynamicBean(propertyMap);
            for (int i = 0; i < columnNames.length; i++) {
                bean.setValue(columnNames[i], "0");
            }
            return bean;
        } catch (ClassNotFoundException e) {
            System.out.println("GenerateDynamicBean Error!" + e);
        }
        return null;
    }

    static void writeResultToCsv(String[] columnNames, List<QueryMacFromScanResultDynamicBean> resultList, String filePath) {
        try {
            // // read file content from file
            //
            // FileReader reader = new FileReader(filePath);
            // BufferedReader br = new BufferedReader(reader);
            //
            // String str = null;
            //
            // while ((str = br.readLine()) != null) {
            //     sb.append(str + "\n");
            //
            //     System.out.println(str);
            // }
            //
            // br.close();
            // reader.close();

            // write string to file
            FileWriter writer = new FileWriter(filePath);
            BufferedWriter bw = new BufferedWriter(writer);

            // Header row: the column names, comma-separated
            StringBuffer sbHeader = new StringBuffer("");
            for (int i = 0; i < columnNames.length; i++) {
                if (i == columnNames.length - 1) {
                    sbHeader.append(columnNames[i] + "\n");
                } else {
                    sbHeader.append(columnNames[i] + ",");
                }
            }
            bw.write(sbHeader.toString());

            // One data row per bean, values in column order
            for (QueryMacFromScanResultDynamicBean bean : resultList) {
                StringBuffer sbContent = new StringBuffer("");
                for (int i = 0; i < columnNames.length; i++) {
                    if (i == columnNames.length - 1) {
                        sbContent.append(bean.getValue(columnNames[i]) + "\n");
                    } else {
                        sbContent.append(bean.getValue(columnNames[i]) + ",");
                    }
                }
                bw.write(sbContent.toString());
            }

            bw.close(); // also closes the underlying FileWriter
            writer.close();
        } catch (FileNotFoundException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
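`getAfterNDaysDate` creates a new `SimpleDateFormat` on every call, and `SimpleDateFormat` is lenient by default, so an input such as `20170532` silently rolls over to `20170601`. On Java 8 or later the same arithmetic can be written with `java.time`, whose formatters are immutable and thread-safe. The sketch below is a hypothetical alternative, not part of the original job: the class `DateShift` and the method `afterNDays` are illustrative names. It keeps the original contract of returning `null` for unparseable input, but unlike the original it rejects impossible dates instead of rolling them over.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;

// Hypothetical java.time alternative to getAfterNDaysDate (requires Java 8+).
final class DateShift {

    // yyyyMMdd, e.g. 20170524; DateTimeFormatter is immutable and thread-safe
    private static final DateTimeFormatter YYYYMMDD = DateTimeFormatter.BASIC_ISO_DATE;

    private DateShift() {
    }

    // Returns the yyyyMMdd date `count` days after currentDate, or null if it cannot be parsed
    static String afterNDays(String currentDate, int count) {
        try {
            return LocalDate.parse(currentDate, YYYYMMDD).plusDays(count).format(YYYYMMDD);
        } catch (DateTimeParseException e) {
            return null;
        }
    }
}
```

For example, `DateShift.afterNDays("20170531", 1)` yields `"20170601"`, and `DateShift.afterNDays("20171231", 1)` yields `"20180101"`.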

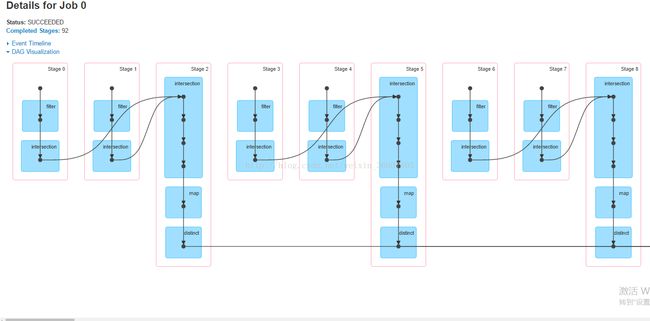

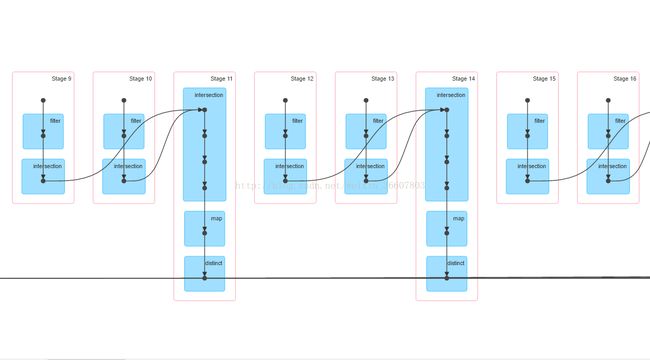

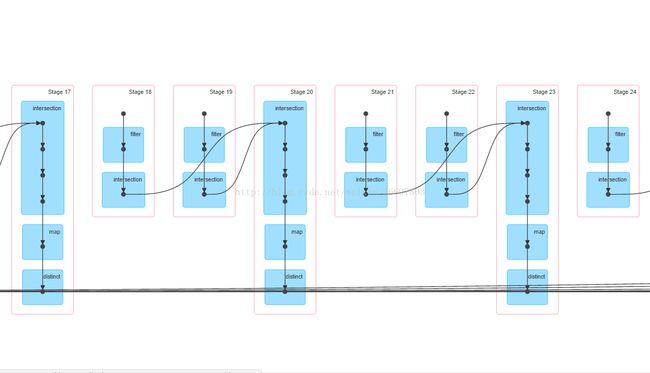

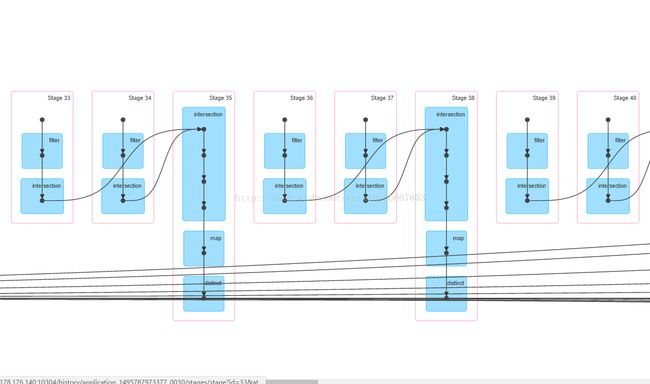

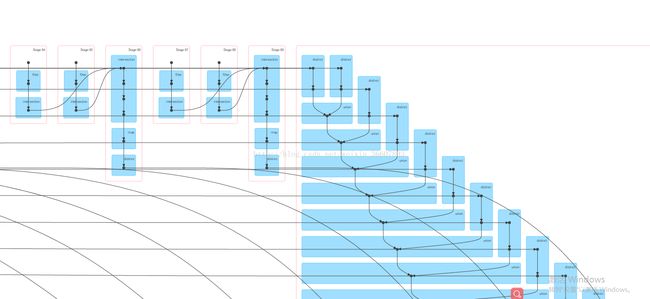

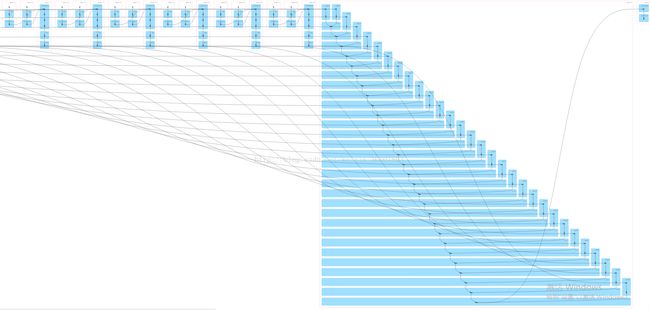

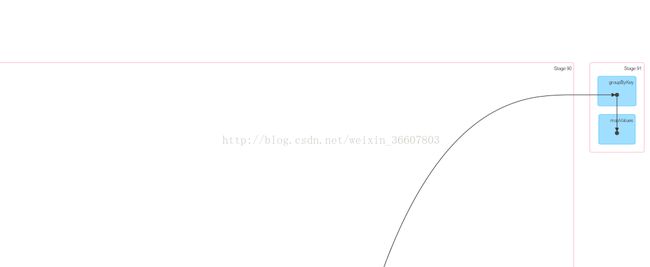

The DAG is shown below:

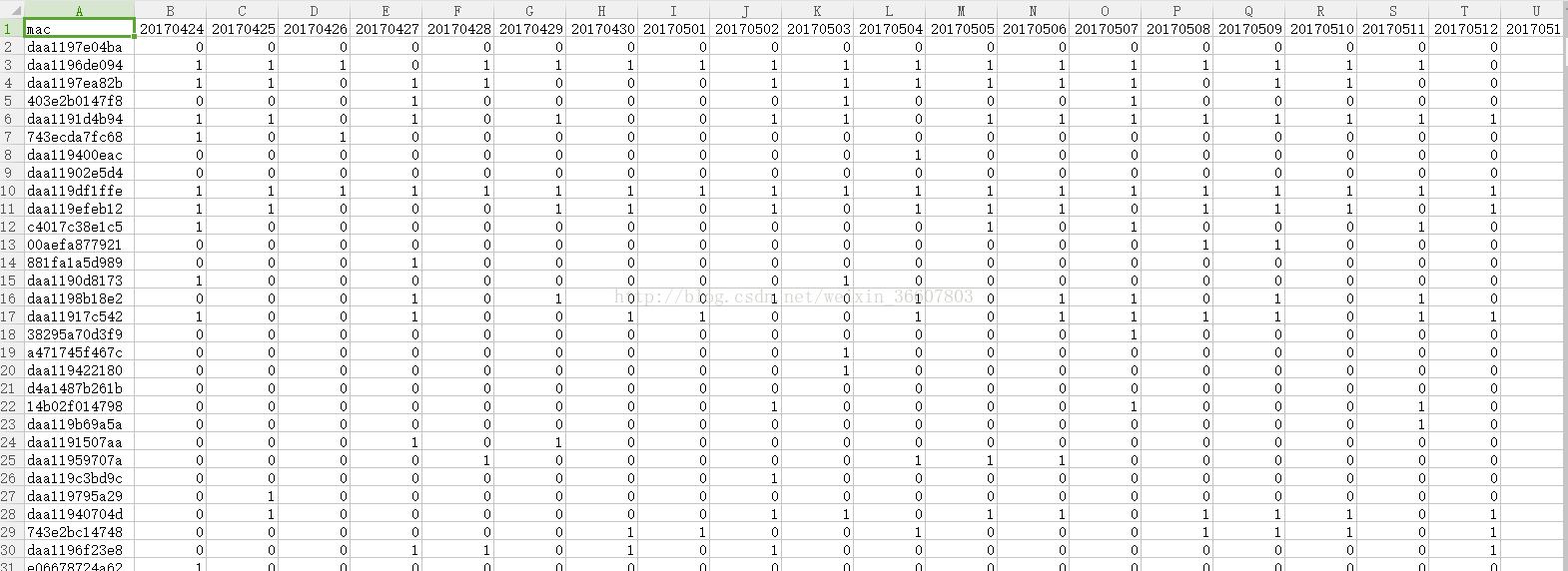
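Once Spark and ODPS are taken away, the transformations in `main` are ordinary set operations: `filter` and `distinct` per partition, `intersection` with the input date, `mapToPair` to tag each MAC with its date, `union` across days, `groupByKey`, and finally the 0/1 pivot written by `writeResultToCsv`. The sketch below replays that chain on in-memory sets so the output layout can be checked without a cluster. `RetentionPivot`, `toCsvLines` and the sample data are hypothetical names for illustration only. Two properties carry over from the job. First, a MAC from the input date that never reappears gets no row, because only intersected pairs reach `groupByKey`. Second, row order carries no meaning in the job (`collect()` after a shuffle makes no ordering promise), so the sketch sorts by MAC to stay deterministic.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical in-memory replay of the job's transformations; no Spark or ODPS involved.
final class RetentionPivot {

    private RetentionPivot() {
    }

    /**
     * baseMacs:  distinct MACs of the input date (the role of oneDayRdd).
     * laterDays: yyyyMMdd date -> distinct MACs of that day; iteration order = column order.
     * Returns CSV lines: a header, then one 0/1 row per MAC seen again on at least one later day.
     */
    static List<String> toCsvLines(Set<String> baseMacs, Map<String, Set<String>> laterDays) {
        // intersection(oneDayRdd) + mapToPair((mac, date)) + union + groupByKey
        Map<String, Set<String>> datesByMac = new TreeMap<>(); // sorted for deterministic output
        for (Map.Entry<String, Set<String>> day : laterDays.entrySet()) {
            Set<String> common = new HashSet<>(day.getValue());
            common.retainAll(baseMacs);
            for (String mac : common) {
                datesByMac.computeIfAbsent(mac, k -> new HashSet<>()).add(day.getKey());
            }
        }

        // Pivot: "mac" column followed by one 0/1 column per later day, as in writeResultToCsv
        List<String> lines = new ArrayList<>();
        StringBuilder header = new StringBuilder("mac");
        for (String date : laterDays.keySet()) {
            header.append(',').append(date);
        }
        lines.add(header.toString());
        for (Map.Entry<String, Set<String>> entry : datesByMac.entrySet()) {
            StringBuilder row = new StringBuilder(entry.getKey());
            for (String date : laterDays.keySet()) {
                row.append(',').append(entry.getValue().contains(date) ? '1' : '0');
            }
            lines.add(row.toString());
        }
        return lines;
    }
}
```

For instance, with base MACs `aa`, `bb`, `cc`, day `20170525` = {`aa`, `zz`} and day `20170526` = {`aa`, `bb`} (passed in a `LinkedHashMap` to fix the column order), the lines are `mac,20170525,20170526`, `aa,1,1` and `bb,0,1`; `cc` never reappears and `zz` was not seen on the input date, so neither gets a row.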

The result is shown below: