optimizer.zero_grad() and loss.backward()

1. The order of optimizer.zero_grad() and loss.backward()

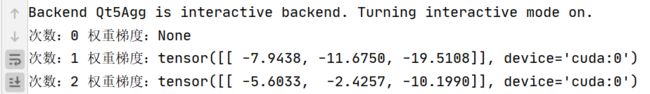

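When I first started learning deep learning, I could not understand why optimizer.zero_grad() (zeroing the gradients) is called even on the very first iteration. Everything I read said that gradients accumulate: each backward pass adds to the previous result rather than replacing it. For example, this is with optimizer.zero_grad():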

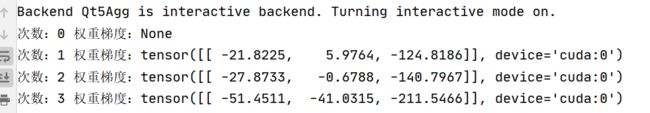

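Without optimizer.zero_grad():

As you can see, without optimizer.zero_grad() the gradient keeps decreasing without bound from one iteration to the next.

That part I now understand: the gradients are accumulated. What I still did not get is why they must be zeroed the first time, when no gradient has been computed yet. (Forgive the nitpicking; it is a beginner's nitpick.)

Every iteration after the first needs the reset, so should I write an if statement just to skip the first one? That is clearly a beginner's workaround.

Full code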
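To make the accumulation concrete, here is a minimal sketch that is not part of the original experiment. It assumes a single made-up parameter `w` and a toy loss `3 * w`, whose gradient is always 3, so any growth in `w.grad` can only come from accumulation.

```python
import torch

# Hypothetical one-element parameter; d(loss)/dw is always 3 for loss = 3 * w.
w = torch.ones(1, requires_grad=True)

print(w.grad)  # None: no backward pass has run yet

# Case 1: never reset the gradient, so every backward() adds to w.grad.
grads_without_reset = []
for _ in range(3):
    loss = (3 * w).sum()
    loss.backward()
    grads_without_reset.append(w.grad.item())

# Case 2: start again from "no gradient" and reset before every backward().
w.grad = None
grads_with_reset = []
for _ in range(3):
    if w.grad is not None:  # nothing to zero on the first pass
        w.grad.zero_()
    loss = (3 * w).sum()
    loss.backward()
    grads_with_reset.append(w.grad.item())

print(grads_without_reset)  # [3.0, 6.0, 9.0]
print(grads_with_reset)     # [3.0, 3.0, 3.0]
```

Without the reset the stored gradient grows by 3 on every pass even though the true gradient never changes, which matches the runaway values in the screenshots.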

# -*- coding: utf-8 -*-
import torch
from torch import nn
import matplotlib.pyplot as plt


# Build the polynomial features
def make_features(x):
    # Builds features i.e. a matrix with columns [x, x^2, x^3].
    x = x.unsqueeze(1)
    return torch.cat([x ** i for i in range(1, 4)], 1)


if __name__ == '__main__':
    count = 0
    # unsqueeze: https://blog.csdn.net/flysky_jay/article/details/81607289
    w_target = torch.FloatTensor([0.5, 3, 2.4]).unsqueeze(1)
    b_target = torch.FloatTensor([0.9])

    # Given a feature matrix x, return the true y
    def f(x):
        return x.mm(w_target) + b_target[0]  # x.mm is matrix multiplication

    # Draw batch_size sample points and turn them into feature/target matrices
    def get_batch(batch_size=32):
        random = torch.randn(batch_size)
        random, _ = torch.sort(random)  # sorted so the fitted curve plots cleanly
        x = make_features(random)
        y = f(x)
        if torch.cuda.is_available():
            return x.cuda(), y.cuda()  # compute on the GPU
        else:
            return x, y

    # create module
    class poly_model(nn.Module):
        def __init__(self):
            super(poly_model, self).__init__()
            self.poly = nn.Linear(3, 1)

        def forward(self, x):
            out = self.poly(x)
            return out

    if torch.cuda.is_available():
        model = poly_model().cuda()  # compute on the GPU
    else:
        model = poly_model()

    criterion = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=1e-3, momentum=0.9)
    epoch = 0
    while True:
        # get data
        batch_x, batch_y = get_batch()
        # Forward pass
        output = model(batch_x)
        loss = criterion(output, batch_y)
        print_loss = loss.item()
        # print(print_loss)
        # gradient left over from the previous iteration (None on the first pass)
        print('iteration: {} weight gradient: {}'.format(count, model.poly.weight.grad))
        count += 1
        # reset gradients
        optimizer.zero_grad()
        # backward pass
        loss.backward()
        # update parameters
        optimizer.step()
        epoch += 1
        if print_loss < 1e-3:
            break

    print(model.poly.weight)
    print('Loss: {:.6f} after {} batches'.format(loss.item(), epoch))
    print('==> Learned function: y = {:.2f} + {:.2f}*x + {:.2f}*x^2 + {:.2f}*x^3'.format(
        model.poly.bias[0].item(),
        model.poly.weight[0][0].item(),
        model.poly.weight[0][1].item(),
        model.poly.weight[0][2].item()))
    print('==> Actual function: y = {:.2f} + {:.2f}*x + {:.2f}*x^2 + {:.2f}*x^3'.format(
        b_target[0].item(),
        w_target[0][0].item(),
        w_target[1][0].item(),
        w_target[2][0].item()))

    # Prediction
    predict = model(batch_x)
    batch_x = batch_x.cpu()
    batch_y = batch_y.cpu()
    x = batch_x.numpy()[:, 0]
    plt.plot(x, batch_y.numpy(), 'ro', label='real curve')
    predict = predict.detach().cpu().numpy()
    plt.plot(x, predict, 'b', label='fitting curve')
    plt.legend()
    plt.show()
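On the ordering question in the heading: what matters is that the gradients are cleared somewhere between one optimizer.step() and the next loss.backward(). The sketch below is an illustration under assumptions, not the author's code: the helper `train`, its `zero_first` flag, and the fixed random data are all hypothetical. It trains two identically seeded models, one zeroing right before backward() and one zeroing right after step(), and compares the resulting weights.

```python
import torch
from torch import nn


def train(zero_first, steps=5):
    """Hypothetical helper: run a few SGD steps with one of two zero_grad placements."""
    torch.manual_seed(0)  # same initial weights and data for both variants
    model = nn.Linear(3, 1)
    optimizer = torch.optim.SGD(model.parameters(), lr=1e-2, momentum=0.9)
    criterion = nn.MSELoss()
    x = torch.randn(8, 3)
    y = torch.randn(8, 1)
    for _ in range(steps):
        loss = criterion(model(x), y)
        if zero_first:
            optimizer.zero_grad()   # clear, then compute, then update
            loss.backward()
            optimizer.step()
        else:
            loss.backward()         # compute, update, then clear for the next pass
            optimizer.step()
            optimizer.zero_grad()
    return model.weight.detach().clone()


w_zero_before_backward = train(zero_first=True)
w_zero_after_step = train(zero_first=False)
same = torch.allclose(w_zero_before_backward, w_zero_after_step)
print(same)
```

Both placements give the same weights here. The one ordering that breaks training is calling zero_grad() between backward() and step(), because it wipes the gradients before they are used.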

A classmate asked about this: when grad is None, zeroing it with grad.data.zero_() fails.

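import torch

x = torch.ones(3, 4, requires_grad=True)
y = x + 2
z = y ** 2
print(y, z)
# No backward pass has run, so z.grad is None
# (z is also a non-leaf tensor, so PyTorch warns when .grad is accessed)
print('before', z.grad)
# Calling z.grad.zero_() directly here raises
# AttributeError: 'NoneType' object has no attribute 'zero_'
if z.grad is not None:
    z.grad.zero_()
print('after', z.grad)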

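In other words, doing the reset through the optimizer avoids the error above; the gradient simply prints as None.

I read through the optim source code: https://zhuanlan.zhihu.com/p/100076702

Then it finally clicked: the whole thing comes down to a single if.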

# This is the built-in code; see the linked article for the full source
def zero_grad(self):
    r"""Clears the gradients of all optimized :class:`torch.Tensor` s."""
    # iterate over each parameter group
    for group in self.param_groups:
        # iterate over every parameter in the current group
        for p in group['params']:
            if p.grad is not None:
                p.grad.detach_()
                p.grad.zero_()
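The effect of that `if p.grad is not None` check can be observed directly. The following is a small sketch rather than the original experiment: the tiny `nn.Linear(3, 1)` model and the all-ones input are assumptions chosen so the gradient is easy to predict. It passes `set_to_none=False` explicitly, because newer PyTorch releases accept that argument and by default set gradients back to None instead of filling them with zeros, which would hide the behavior quoted above.

```python
import torch
from torch import nn

model = nn.Linear(3, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

# Before any backward pass the gradient does not exist yet.
grad_before = model.weight.grad

# First zero_grad(): every p.grad is None, so the `if` skips them all.
optimizer.zero_grad(set_to_none=False)
grad_after_first_zero = model.weight.grad

# One backward pass on a batch of two all-ones rows: each weight gets gradient 2.
loss = model(torch.ones(2, 3)).sum()
loss.backward()
grad_after_backward = model.weight.grad.clone()

# Now there is a gradient tensor, so zero_grad() fills it with zeros in place.
optimizer.zero_grad(set_to_none=False)
grad_after_second_zero = model.weight.grad

print(grad_before, grad_after_first_zero)
print(grad_after_backward, grad_after_second_zero)
```

So no special case is needed for the first iteration: the first call is a harmless no-op, and every later call clears whatever the previous backward() left behind.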