基于GraphCuts图割算法的图像分割----OpenCV代码与实现

转载请注明出处:http://blog.csdn.net/wangyaninglm/article/details/44151213,

来自:shiter编写程序的艺术

1.绪论

图切割算法是组合图论的经典算法之一。近年来,许多学者将其应用到图像和视频分割中,取得了很好的效果。本文简单介绍了图切算法和交互式图像分割技术,以及图切算法在交互式图像分割中的应用。

图像分割指图像分成各具特性的区域并提取出感兴趣目标的技术和过程,它是由图像处理到图像分析的关键步骤,是一种基本的计算机视觉技术。只有在图像分割的基础上才能对目标进行特征提取和参数测量,使得更高层的图像分析和理解成为可能。因此对图像分割方法的研究具有十分重要的意义。

图像分割技术的研究已有几十年的历史,但至今人们并不能找到通用的方法能够适合于所有类型的图像。常用的图像分割技术可划分为四类:特征阈值或聚类、边缘检测、区域生长或区域提取。虽然这些方法分割灰度图像效果较好,但用于彩色图像的分割往往达不到理想的效果。

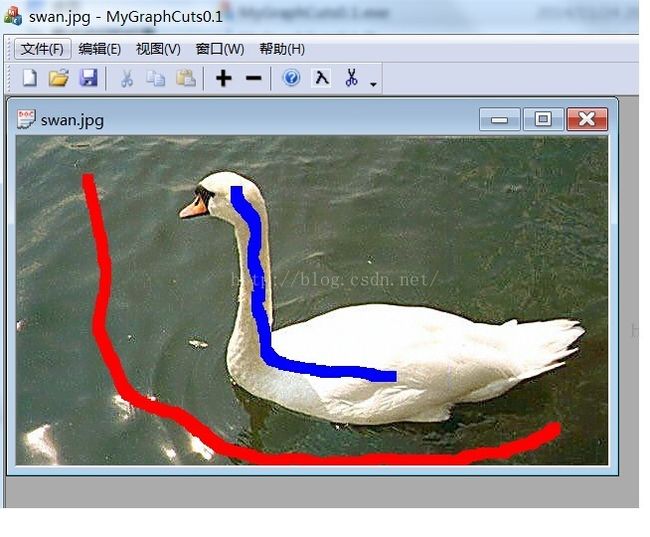

交互式图像分割是指,首先由用户以某种交互手段指定图像的部分前景与部分背景,然后算法以用户的输入作为分割的约束条件自动地计算出满足约束条件下的最佳分割。典型的交互手段包括用一把画刷在前景和背景处各画几笔(如[1][4]等)以及在前景的周围画一个方框(如[2])等。

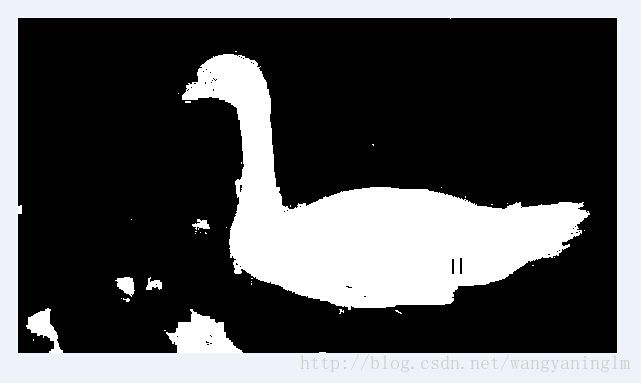

基于图切算法的图像分割技术是近年来国际上图像分割领域的一个新的研究热点。该类方法将图像映射为赋权无向图,把像素视作节点,利用最小切割得到图像的最佳分割。

2.几种改进算法

- Graph Cut[1]算法是一种直接基于图切算法的图像分割技术。它仅需要在前景和背景处各画几笔作为输入,算法将建立各个像素点与前景背景相似度的赋权图,并通过求解最小切割区分前景和背景。

- Grabcut[2]算法方法的用户交互量很少,仅仅需要指定一个包含前景的矩形,随后用基于图切算法在图像中提取前景。

- Lazy Snapping[4]系统则是对[1]的改进。通过预计算和聚类技术,该方法提供了一个即时反馈的平台,方便用户进行交互分割。

文档说明:

http://download.csdn.net/detail/wangyaninglm/8484301

3.代码实现效果

graphcuts代码:

http://download.csdn.net/detail/wangyaninglm/8484243

ICCV'2001论文"Interactive graph cuts for optimal boundary and region segmentation of objects in N-D images"。

Graph Cut方法是基于颜色统计采样的方法,因此对前背景相差较大的图像效果较佳。

同时,比例系数lambda的调节直接影响到最终的分割效果。

grabcut代码:

// Grabcut.cpp : 定义控制台应用程序的入口点。

//

#include "stdafx.h"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include

#include "ComputeTime.h"

#include "windows.h"

using namespace std;

using namespace cv;

static void help()

{

cout << "\nThis program demonstrates GrabCut segmentation -- select an object in a region\n"

"and then grabcut will attempt to segment it out.\n"

"Call:\n"

"./grabcut \n"

"\nSelect a rectangular area around the object you want to segment\n" <<

"\nHot keys: \n"

"\tESC - quit the program\n"

"\tr - restore the original image\n"

"\tn - next iteration\n"

"\n"

"\tleft mouse button - set rectangle\n"

"\n"

"\tCTRL+left mouse button - set GC_BGD pixels\n"

"\tSHIFT+left mouse button - set CG_FGD pixels\n"

"\n"

"\tCTRL+right mouse button - set GC_PR_BGD pixels\n"

"\tSHIFT+right mouse button - set CG_PR_FGD pixels\n" << endl;

}

const Scalar RED = Scalar(0,0,255);

const Scalar PINK = Scalar(230,130,255);

const Scalar BLUE = Scalar(255,0,0);

const Scalar LIGHTBLUE = Scalar(255,255,160);

const Scalar GREEN = Scalar(0,255,0);

const int BGD_KEY = CV_EVENT_FLAG_CTRLKEY; //Ctrl键

const int FGD_KEY = CV_EVENT_FLAG_SHIFTKEY; //Shift键

static void getBinMask( const Mat& comMask, Mat& binMask )

{

if( comMask.empty() || comMask.type()!=CV_8UC1 )

CV_Error( CV_StsBadArg, "comMask is empty or has incorrect type (not CV_8UC1)" );

if( binMask.empty() || binMask.rows!=comMask.rows || binMask.cols!=comMask.cols )

binMask.create( comMask.size(), CV_8UC1 );

binMask = comMask & 1; //得到mask的最低位,实际上是只保留确定的或者有可能的前景点当做mask

}

class GCApplication

{

public:

enum{ NOT_SET = 0, IN_PROCESS = 1, SET = 2 };

static const int radius = 2;

static const int thickness = -1;

void reset();

void setImageAndWinName( const Mat& _image, const string& _winName );

void showImage() const;

void mouseClick( int event, int x, int y, int flags, void* param );

int nextIter();

int getIterCount() const { return iterCount; }

private:

void setRectInMask();

void setLblsInMask( int flags, Point p, bool isPr );

const string* winName;

const Mat* image;

Mat mask;

Mat bgdModel, fgdModel;

uchar rectState, lblsState, prLblsState;

bool isInitialized;

Rect rect;

vector fgdPxls, bgdPxls, prFgdPxls, prBgdPxls;

int iterCount;

};

/*给类的变量赋值*/

void GCApplication::reset()

{

if( !mask.empty() )

mask.setTo(Scalar::all(GC_BGD));

bgdPxls.clear(); fgdPxls.clear();

prBgdPxls.clear(); prFgdPxls.clear();

isInitialized = false;

rectState = NOT_SET; //NOT_SET == 0

lblsState = NOT_SET;

prLblsState = NOT_SET;

iterCount = 0;

}

/*给类的成员变量赋值而已*/

void GCApplication::setImageAndWinName( const Mat& _image, const string& _winName )

{

if( _image.empty() || _winName.empty() )

return;

image = &_image;

winName = &_winName;

mask.create( image->size(), CV_8UC1);

reset();

}

/*显示4个点,一个矩形和图像内容,因为后面的步骤很多地方都要用到这个函数,所以单独拿出来*/

void GCApplication::showImage() const

{

if( image->empty() || winName->empty() )

return;

Mat res;

Mat binMask;

if( !isInitialized )

image->copyTo( res );

else

{

getBinMask( mask, binMask );

image->copyTo( res, binMask ); //按照最低位是0还是1来复制,只保留跟前景有关的图像,比如说可能的前景,可能的背景

}

vector::const_iterator it;

/*下面4句代码是将选中的4个点用不同的颜色显示出来*/

for( it = bgdPxls.begin(); it != bgdPxls.end(); ++it ) //迭代器可以看成是一个指针

circle( res, *it, radius, BLUE, thickness );

for( it = fgdPxls.begin(); it != fgdPxls.end(); ++it ) //确定的前景用红色表示

circle( res, *it, radius, RED, thickness );

for( it = prBgdPxls.begin(); it != prBgdPxls.end(); ++it )

circle( res, *it, radius, LIGHTBLUE, thickness );

for( it = prFgdPxls.begin(); it != prFgdPxls.end(); ++it )

circle( res, *it, radius, PINK, thickness );

/*画矩形*/

if( rectState == IN_PROCESS || rectState == SET )

rectangle( res, Point( rect.x, rect.y ), Point(rect.x + rect.width, rect.y + rect.height ), GREEN, 2);

imshow( *winName, res );

}

/*该步骤完成后,mask图像中rect内部是3,外面全是0*/

void GCApplication::setRectInMask()

{

assert( !mask.empty() );

mask.setTo( GC_BGD ); //GC_BGD == 0

rect.x = max(0, rect.x);

rect.y = max(0, rect.y);

rect.width = min(rect.width, image->cols-rect.x);

rect.height = min(rect.height, image->rows-rect.y);

(mask(rect)).setTo( Scalar(GC_PR_FGD) ); //GC_PR_FGD == 3,矩形内部,为可能的前景点

}

void GCApplication::setLblsInMask( int flags, Point p, bool isPr )

{

vector *bpxls, *fpxls;

uchar bvalue, fvalue;

if( !isPr ) //确定的点

{

bpxls = &bgdPxls;

fpxls = &fgdPxls;

bvalue = GC_BGD; //0

fvalue = GC_FGD; //1

}

else //概率点

{

bpxls = &prBgdPxls;

fpxls = &prFgdPxls;

bvalue = GC_PR_BGD; //2

fvalue = GC_PR_FGD; //3

}

if( flags & BGD_KEY )

{

bpxls->push_back(p);

circle( mask, p, radius, bvalue, thickness ); //该点处为2

}

if( flags & FGD_KEY )

{

fpxls->push_back(p);

circle( mask, p, radius, fvalue, thickness ); //该点处为3

}

}

/*鼠标响应函数,参数flags为CV_EVENT_FLAG的组合*/

void GCApplication::mouseClick( int event, int x, int y, int flags, void* )

{

// TODO add bad args check

switch( event )

{

case CV_EVENT_LBUTTONDOWN: // set rect or GC_BGD(GC_FGD) labels

{

bool isb = (flags & BGD_KEY) != 0,

isf = (flags & FGD_KEY) != 0;

if( rectState == NOT_SET && !isb && !isf )//只有左键按下时

{

rectState = IN_PROCESS; //表示正在画矩形

rect = Rect( x, y, 1, 1 );

}

if ( (isb || isf) && rectState == SET ) //按下了alt键或者shift键,且画好了矩形,表示正在画前景背景点

lblsState = IN_PROCESS;

}

break;

case CV_EVENT_RBUTTONDOWN: // set GC_PR_BGD(GC_PR_FGD) labels

{

bool isb = (flags & BGD_KEY) != 0,

isf = (flags & FGD_KEY) != 0;

if ( (isb || isf) && rectState == SET ) //正在画可能的前景背景点

prLblsState = IN_PROCESS;

}

break;

case CV_EVENT_LBUTTONUP:

if( rectState == IN_PROCESS )

{

rect = Rect( Point(rect.x, rect.y), Point(x,y) ); //矩形结束

rectState = SET;

setRectInMask();

assert( bgdPxls.empty() && fgdPxls.empty() && prBgdPxls.empty() && prFgdPxls.empty() );

showImage();

}

if( lblsState == IN_PROCESS ) //已画了前后景点

{

setLblsInMask(flags, Point(x,y), false); //画出前景点

lblsState = SET;

showImage();

}

break;

case CV_EVENT_RBUTTONUP:

if( prLblsState == IN_PROCESS )

{

setLblsInMask(flags, Point(x,y), true); //画出背景点

prLblsState = SET;

showImage();

}

break;

case CV_EVENT_MOUSEMOVE:

if( rectState == IN_PROCESS )

{

rect = Rect( Point(rect.x, rect.y), Point(x,y) );

assert( bgdPxls.empty() && fgdPxls.empty() && prBgdPxls.empty() && prFgdPxls.empty() );

showImage(); //不断的显示图片

}

else if( lblsState == IN_PROCESS )

{

setLblsInMask(flags, Point(x,y), false);

showImage();

}

else if( prLblsState == IN_PROCESS )

{

setLblsInMask(flags, Point(x,y), true);

showImage();

}

break;

}

}

/*该函数进行grabcut算法,并且返回算法运行迭代的次数*/

int GCApplication::nextIter()

{

if( isInitialized )

//使用grab算法进行一次迭代,参数2为mask,里面存的mask位是:矩形内部除掉那些可能是背景或者已经确定是背景后的所有的点,且mask同时也为输出

//保存的是分割后的前景图像

grabCut( *image, mask, rect, bgdModel, fgdModel, 1 );

else

{

if( rectState != SET )

return iterCount;

if( lblsState == SET || prLblsState == SET )

grabCut( *image, mask, rect, bgdModel, fgdModel, 1, GC_INIT_WITH_MASK );

else

grabCut( *image, mask, rect, bgdModel, fgdModel, 1, GC_INIT_WITH_RECT );

isInitialized = true;

}

iterCount++;

bgdPxls.clear(); fgdPxls.clear();

prBgdPxls.clear(); prFgdPxls.clear();

return iterCount;

}

GCApplication gcapp;

static void on_mouse( int event, int x, int y, int flags, void* param )

{

gcapp.mouseClick( event, x, y, flags, param );

}

int main( int argc, char** argv )

{

string filename;

cout<<" Grabcuts ! \n";

cout<<"input image name: "<>filename;

Mat image = imread( filename, 1 );

if( image.empty() )

{

cout << "\n Durn, couldn't read image filename " << filename << endl;

return 1;

}

help();

const string winName = "image";

cvNamedWindow( winName.c_str(), CV_WINDOW_AUTOSIZE );

cvSetMouseCallback( winName.c_str(), on_mouse, 0 );

gcapp.setImageAndWinName( image, winName );

gcapp.showImage();

for(;;)

{

int c = cvWaitKey(0);

switch( (char) c )

{

case '\x1b':

cout << "Exiting ..." << endl;

goto exit_main;

case 'r':

cout << endl;

gcapp.reset();

gcapp.showImage();

break;

case 'n':

ComputeTime ct ;

ct.Begin();

int iterCount = gcapp.getIterCount();

cout << "<" << iterCount << "... ";

int newIterCount = gcapp.nextIter();

if( newIterCount > iterCount )

{

gcapp.showImage();

cout << iterCount << ">" << endl;

cout<<"运行时间: "<" << endl;

break;

}

}

exit_main:

cvDestroyWindow( winName.c_str() );

return 0;

}

lazy snapping代码实现:

// LazySnapping.cpp : 定义控制台应用程序的入口点。

//

/* author: zhijie Lee

* home page: lzhj.me

* 2012-02-06

*/

#include "stdafx.h"

#include

#include

#include "graph.h"

#include

#include

#include

#include

using namespace std;

typedef Graph GraphType;

class LasySnapping

{

public :

LasySnapping();

~LasySnapping()

{

if(graph)

{

delete graph;

}

};

private :

vector forePts;

vector backPts;

IplImage* image;

// average color of foreground points

unsigned char avgForeColor[3];

// average color of background points

unsigned char avgBackColor[3];

public :

void setImage(IplImage* image)

{

this->image = image;

graph = new GraphType(image->width*image->height,image->width*image->height*2);

}

// include-pen locus

void setForegroundPoints(vector pts)

{

forePts.clear();

for(int i =0; i< pts.size(); i++)

{

if(!isPtInVector(pts[i],forePts))

{

forePts.push_back(pts[i]);

}

}

if(forePts.size() == 0)

{

return;

}

int sum[3] = {0};

for(int i =0; i < forePts.size(); i++)

{

unsigned char* p = (unsigned char*)image->imageData + forePts[i].x * 3

+ forePts[i].y*image->widthStep;

sum[0] += p[0];

sum[1] += p[1];

sum[2] += p[2];

}

cout< pts)

{

backPts.clear();

for(int i =0; i< pts.size(); i++)

{

if(!isPtInVector(pts[i],backPts))

{

backPts.push_back(pts[i]);

}

}

if(backPts.size() == 0)

{

return;

}

int sum[3] = {0};

for(int i =0; i < backPts.size(); i++)

{

unsigned char* p = (unsigned char*)image->imageData + backPts[i].x * 3 +

backPts[i].y*image->widthStep;

sum[0] += p[0];

sum[1] += p[1];

sum[2] += p[2];

}

avgBackColor[0] = sum[0]/backPts.size();

avgBackColor[1] = sum[1]/backPts.size();

avgBackColor[2] = sum[2]/backPts.size();

}

// return maxflow of graph

int runMaxflow();

// get result, a grayscale mast image indicating forground by 255 and background by 0

IplImage* getImageMask();

private :

float colorDistance(unsigned char* color1, unsigned char* color2);

float minDistance(unsigned char* color, vector points);

bool isPtInVector(CvPoint pt, vector points);

void getE1(unsigned char* color,float* energy);

float getE2(unsigned char* color1,unsigned char* color2);

GraphType *graph;

};

LasySnapping::LasySnapping()

{

graph = NULL;

avgForeColor[0] = 0;

avgForeColor[1] = 0;

avgForeColor[2] = 0;

avgBackColor[0] = 0;

avgBackColor[1] = 0;

avgBackColor[2] = 0;

}

float LasySnapping::colorDistance(unsigned char* color1, unsigned char* color2)

{

return sqrt(((float)color1[0]-(float)color2[0])*((float)color1[0]-(float)color2[0])+

((float)color1[1]-(float)color2[1])*((float)color1[1]-(float)color2[1])+

((float)color1[2]-(float)color2[2])*((float)color1[2]-(float)color2[2]));

}

float LasySnapping::minDistance(unsigned char* color, vector points)

{

float distance = -1;

for(int i =0 ; i < points.size(); i++)

{

unsigned char* p = (unsigned char*)image->imageData + points[i].y * image->widthStep +

points[i].x * image->nChannels;

float d = colorDistance(p,color);

if(distance < 0 )

{

distance = d;

}

else

{

if(distance > d)

{

distance = d;

}

}

}

return distance;

}

bool LasySnapping::isPtInVector(CvPoint pt, vector points)

{

for(int i =0 ; i < points.size(); i++)

{

if(pt.x == points[i].x && pt.y == points[i].y)

{

return true;

}

}

return false;

}

void LasySnapping::getE1(unsigned char* color,float* energy)

{

// average distance

float df = colorDistance(color,avgForeColor);

float db = colorDistance(color,avgBackColor);

// min distance from background points and forground points

// float df = minDistance(color,forePts);

// float db = minDistance(color,backPts);

energy[0] = df/(db+df);

energy[1] = db/(db+df);

}

float LasySnapping::getE2(unsigned char* color1,unsigned char* color2)

{

const float EPSILON = 0.01;

float lambda = 100;

return lambda/(EPSILON+

(color1[0]-color2[0])*(color1[0]-color2[0])+

(color1[1]-color2[1])*(color1[1]-color2[1])+

(color1[2]-color2[2])*(color1[2]-color2[2]));

}

int LasySnapping::runMaxflow()

{

const float INFINNITE_MAX = 1e10;

int indexPt = 0;

for(int h = 0; h < image->height; h ++)

{

unsigned char* p = (unsigned char*)image->imageData + h *image->widthStep;

for(int w = 0; w < image->width; w ++)

{

// calculate energe E1

float e1[2]={0};

if(isPtInVector(cvPoint(w,h),forePts))

{

e1[0] =0;

e1[1] = INFINNITE_MAX;

}

else if

(isPtInVector(cvPoint(w,h),backPts))

{

e1[0] = INFINNITE_MAX;

e1[1] = 0;

}

else

{

getE1(p,e1);

}

// add node

graph->add_node();

graph->add_tweights(indexPt, e1[0],e1[1]);

// add edge, 4-connect

if(h > 0 && w > 0)

{

float e2 = getE2(p,p-3);

graph->add_edge(indexPt,indexPt-1,e2,e2);

e2 = getE2(p,p-image->widthStep);

graph->add_edge(indexPt,indexPt-image->width,e2,e2);

}

p+= 3;

indexPt ++;

}

}

return graph->maxflow();

}

IplImage* LasySnapping::getImageMask()

{

IplImage* gray = cvCreateImage(cvGetSize(image),8,1);

int indexPt =0;

for(int h =0; h < image->height; h++)

{

unsigned char* p = (unsigned char*)gray->imageData + h*gray->widthStep;

for(int w =0 ;w width; w++)

{

if (graph->what_segment(indexPt) == GraphType::SOURCE)

{

*p = 0;

}

else

{

*p = 255;

}

p++;

indexPt ++;

}

}

return gray;

}

// global

vector forePts;

vector backPts;

int currentMode = 0;// indicate foreground or background, foreground as default

CvScalar paintColor[2] = {CV_RGB(0,0,255),CV_RGB(255,0,0)};

IplImage* image = NULL;

char* winName = "lazySnapping";

IplImage* imageDraw = NULL;

const int SCALE = 4;

void on_mouse( int event, int x, int y, int flags, void* )

{

if( event == CV_EVENT_LBUTTONUP )

{

if(backPts.size() == 0 && forePts.size() == 0)

{

return;

}

LasySnapping ls;

IplImage* imageLS = cvCreateImage(cvSize(image->width/SCALE,image->height/SCALE),

8,3);

cvResize(image,imageLS);

ls.setImage(imageLS);

ls.setBackgroundPoints(backPts);

ls.setForegroundPoints(forePts);

ls.runMaxflow();

IplImage* mask = ls.getImageMask();

IplImage* gray = cvCreateImage(cvGetSize(image),8,1);

cvResize(mask,gray);

// edge

cvCanny(gray,gray,50,150,3);

IplImage* showImg = cvCloneImage(imageDraw);

for(int h =0; h < image->height; h ++)

{

unsigned char* pgray = (unsigned char*)gray->imageData + gray->widthStep*h;

unsigned char* pimage = (unsigned char*)showImg->imageData + showImg->widthStep*h;

for(int width =0; width < image->width; width++)

{

if(*pgray++ != 0 )

{

pimage[0] = 0;

pimage[1] = 255;

pimage[2] = 0;

}

pimage+=3;

}

}

cvSaveImage("t.bmp",showImg);

cvShowImage(winName,showImg);

cvReleaseImage(&imageLS);

cvReleaseImage(&mask);

cvReleaseImage(&showImg);

cvReleaseImage(&gray);

}

else if( event == CV_EVENT_LBUTTONDOWN )

{

}

else if( event == CV_EVENT_MOUSEMOVE && (flags & CV_EVENT_FLAG_LBUTTON))

{

CvPoint pt = cvPoint(x,y);

if(currentMode == 0)

{//foreground

forePts.push_back(cvPoint(x/SCALE,y/SCALE));

}

else

{//background

backPts.push_back(cvPoint(x/SCALE,y/SCALE));

}

cvCircle(imageDraw,pt,2,paintColor[currentMode]);

cvShowImage(winName,imageDraw);

}

}

int main(int argc, char** argv)

{

//if(argc != 2)

//{

// cout<<"command : lazysnapping inputImage"<>image_name;

cvNamedWindow(winName,1);

cvSetMouseCallback( winName, on_mouse, 0);

image = cvLoadImage(image_name.c_str(),CV_LOAD_IMAGE_COLOR);

imageDraw = cvCloneImage(image);

cvShowImage(winName, image);

for(;;)

{

int c = cvWaitKey(0);

c = (char)c;

if(c == 27)

{//exit

break;

}

else if(c == 'r')

{//reset

image = cvLoadImage(image_name.c_str(),CV_LOAD_IMAGE_COLOR);

imageDraw = cvCloneImage(image);

forePts.clear();

backPts.clear();

currentMode = 0;

cvShowImage(winName, image);

}

else if(c == 'b')

{//change to background selection

currentMode = 1;

}else if(c == 'f')

{//change to foreground selection

currentMode = 0;

}

}

cvReleaseImage(&image);

cvReleaseImage(&imageDraw);

return 0;

}

参考文献

[1] Y. Boykov, and M. P. Jolly, “Interactive graph cuts for optimal boundary and region segmentation ofobjects in N-D images”,Proceeding ofIEEE International Conference on Computer Vision, 1:105~112, July 2001.

[2] C. Rother, A. Blake, and V. Kolmogorov, “Grabcut – interactive foreground extractionusing iterated graph cuts”,Proceedingsof ACM SIGGRAPH 2004, 23(3):307~312, August 2004.

[3] A. Agarwala, M. Dontcheva, M. Agrawala,et al, “Interactive digital photomontage”,Proceedings of ACM SIGGRAPH 2004, 23(3):294~302, August 2004.

[4] Y. Li, J. Sun, C. Tang,et al, “Interacting withimages: Lazy snapping”,Proceedingsof ACM SIGGRAPH 2004, 23(3):303~308, August 2004.

[5] A. Blake, C. Rother, M. Brown,et al, “Interactive ImageSegmentation using an adaptive GMMRF model”.Proceedings of European Conference on Computer Vision, pp. 428~441,May 2004.

[6] V. Kwatra, A. Schodl, I. Essa,et al, “Graphcut Textures:Image and Video Synthesis Using Graph Cuts”.Proceedings of ACM Siggraph 2003, pp.277~286, Augst 2003.

部分代码与文档是早些时候收集的,出处找不到了,还请原作者看到后联系注明。

转载请注明出处:http://blog.csdn.net/wangyaninglm/article/details/44151213,

来自:shiter编写程序的艺术