文本分类——KNN算法

上一篇文章已经描述了朴素贝叶斯算法newgroup的分类实现,这篇文章采用KNN算法实现newgroup的分类。

文中代码参考:http://blog.csdn.net/yangliuy/article/details/7401142

1、KNN算法描述

对于KNN算法,前面有一篇文章介绍其思想,但是按个事例采用的模拟的数值数据。本文将采用KNN进行文本分类。算法步骤如下:

(1)文本预处理,向量化,根据特征词的TF*IDF值计算 (上一篇文章已经处理)

(2)当新文本到达后,根据特征词计算新文本的向量

(3)在训练文本中选出与新文本最相近的K个文本,相似度用向量夹角的余弦值度量。

注:K的值目前没有好的办法确定,只有根据实验来调整K的值

(4)在新文本的K个相似文本中,依此计算每个类的权重,每个类的权重等于K个文本中属于该类的训练样本与测试样本的相似度之和。

(5)比较类的权重,将文本分到权重最大那个类别中

2、KNN算法实现

KNN算法的实现要注意

(1)用TreeMap

(2)注意要以"类目_文件名"作为每个文件的key,才能避免同名不同内容的文件出现

package com.datamine.NaiveBayes;

import java.io.*;

import java.util.*;

/**

* KNN算法的实现类,本程序用向量夹角余弦计算相识度

* @author Administrator

*/

public class KNNClassifier {

/**

* 用knn算法对测试文档集分类,读取测试样例和训练样例集

* @param trainFiles 训练样例的所有向量构成的文件

* @param testFiles 测试样例的所有向量构成的文件

* @param knnResultFile KNN分类结果文件路径

* @throws Exception

*/

private void doProcess(String trainFiles, String testFiles,

String knnResultFile) throws Exception {

/*

* 首先读取训练样本和测试样本,用map>保存测试集和训练集,注意训练样本的类目信息也得保存

* 然后遍历测试样本,对于每一个测试样本去计算它与所有训练样本的相识度,相识度保存到map有序map中

* 然后取钱K个样本,针对这k个样本来给它们所属的类目计算权重得分,对属于同一个类目的权重求和进而得到最大得分的类目

* 就可以判断测试样例属于该类目下,K值可以反复测试,找到分类准确率最高的那个值

* 注意:

* 1、要以"类目_文件名"作为每个文件的key,才能避免同名不同内容的文件出现

* 2、注意设置JM参数,否则会出现JAVA Heap溢出错误

* 3、本程序用向量夹角余弦计算相识度

*/

File trainSample = new File(trainFiles);

BufferedReader trainSampleBR = new BufferedReader(new FileReader(trainSample));

String line;

String[] lineSplitBlock;

//trainFileNameWordTFMap<类名_文件名,map<特征词,特征权重>>

Map> trainFileNameWordTFMap = new TreeMap>();

//trainWordTFMap<特征词,特征权重>

TreeMap trainWordTFMap = new TreeMap();

while((line = trainSampleBR.readLine()) != null){

lineSplitBlock = line.split(" ");

trainWordTFMap.clear();

for(int i =2 ;i tempMap = new TreeMap();

tempMap.putAll(trainWordTFMap);

trainFileNameWordTFMap.put(lineSplitBlock[0]+"_"+lineSplitBlock[1], tempMap);

}

trainSampleBR.close();

File testSample = new File(testFiles);

BufferedReader testSampleBR = new BufferedReader(new FileReader(testSample));

Map> testFileNameWordTFMap = new TreeMap>();

Map testWordTFMap = new TreeMap();

while((line = testSampleBR.readLine()) != null){

lineSplitBlock = line.split(" ");

testWordTFMap.clear();

for(int i =2;i tempMap = new TreeMap();

tempMap.putAll(testWordTFMap);

testFileNameWordTFMap.put(lineSplitBlock[0]+"_"+lineSplitBlock[1], tempMap);

}

testSampleBR.close();

//下面遍历每一个测试样例计算所有训练样本的距离,做分类

String classifyResult;

FileWriter knnClassifyResultWriter = new FileWriter(knnResultFile);

Set>> testFileNameWordTFMapSet = testFileNameWordTFMap.entrySet();

for(Iterator>> it = testFileNameWordTFMapSet.iterator();it.hasNext();){

Map.Entry> me = it.next();

classifyResult = knnComputeCate(me.getKey(),me.getValue(),trainFileNameWordTFMap);

knnClassifyResultWriter.append(me.getKey()+" "+classifyResult+"\n");

knnClassifyResultWriter.flush();

}

knnClassifyResultWriter.close();

}

/**

* 对于每一个测试样本去计算它与所有训练样本的向量夹角余弦相识度

* 相识度保存入map有序map中,然后取前k个样本

* 针对这k个样本来给他们所属的类目计算权重得分,对属于同一个类目的权重求和进而得到最大得分类目

* k值可以反复测试,找到分类准确率最高的那个值

* @param testFileName 测试文件名 "类别名_文件名"

* @param testWordTFMap 测试文件向量 map<特征词,特征权重>

* @param trainFileNameWordTFMap 训练样本<类目_文件名,向量>

* @return K个邻居权重得分最大的类目

*/

private String knnComputeCate(String testFileName, Map testWordTFMap,

Map> trainFileNameWordTFMap) {

//<类目_文件名,距离> 后面需要将该HashMap按照value排序

HashMap simMap = new HashMap();

double similarity;

Set>> trainFileNameTFMapSet = trainFileNameWordTFMap.entrySet();

for(Iterator>> it = trainFileNameTFMapSet.iterator();it.hasNext();){

Map.Entry> me = it.next();

similarity = computeSim(testWordTFMap,me.getValue());

simMap.put(me.getKey(), similarity);

}

//下面对simMap按照value降序排序

ByValueComparator bvc = new ByValueComparator(simMap);

TreeMap sortedSimMap = new TreeMap(bvc);

sortedSimMap.putAll(simMap);

//在disMap中取前K个最近的训练样本对其类别计算距离之和,K的值通过反复试验而得

Map cateSimMap = new TreeMap(); //k个最近训练样本所属类目的距离之和

double K = 20;

double count = 0;

double tempSim ;

Set> simMapSet = sortedSimMap.entrySet();

for(Iterator> it = simMapSet.iterator();it.hasNext();){

Map.Entry me = it.next();

count++;

String categoryName = me.getKey().split("_")[0];

if(cateSimMap.containsKey(categoryName)){

tempSim = cateSimMap.get(categoryName);

cateSimMap.put(categoryName, tempSim+me.getValue());

}else

cateSimMap.put(categoryName, me.getValue());

if(count>K)

break;

}

//下面到cateSimMap里面吧sim最大的那个类目名称找出来

double maxSim = 0;

String bestCate = null;

Set> cateSimMapSet = cateSimMap.entrySet();

for(Iterator> it = cateSimMapSet.iterator();it.hasNext();){

Map.Entry me = it.next();

if(me.getValue() > maxSim){

bestCate = me.getKey();

maxSim = me.getValue();

}

}

return bestCate;

}

/**

* 计算测试样本向量和训练样本向量的相识度

* sim(D1,D2)=(D1*D2)/(|D1|*|D2|)

* 例:D1(a 30;b 20;c 20;d 10) D2(a 40;c 30;d 20; e 10)

* D1*D2 = 30*40 + 20*0 + 20*30 + 10*20 + 0*10 = 2000

* |D1| = sqrt(30*30+20*20+20*20+10*10) = sqrt(1800)

* |D2| = sqrt(40*40+30*30+20*20+10*10) = sqrt(3000)

* sim = 0.86;

* @param testWordTFMap 当前测试文件的<单词,权重>向量

* @param trainWordTFMap 当前训练样本<单词,权重>向量

* @return 向量之间的相识度,以向量夹角余弦计算

*/

private double computeSim(Map testWordTFMap,

TreeMap trainWordTFMap) {

// mul = test*train testAbs = |test| trainAbs = |train|

double mul = 0,testAbs = 0, trainAbs = 0;

Set> testWordTFMapSet = testWordTFMap.entrySet();

for(Iterator> it = testWordTFMapSet.iterator();it.hasNext();){

Map.Entry me = it.next();

if(trainWordTFMap.containsKey(me.getKey())){

mul += me.getValue()*trainWordTFMap.get(me.getKey());

}

testAbs += me.getValue()*me.getValue();

}

testAbs = Math.sqrt(testAbs);

Set> trainWordTFMapSet = trainWordTFMap.entrySet();

for(Iterator> it = trainWordTFMapSet.iterator();it.hasNext();){

Map.Entry me = it.next();

trainAbs += me.getValue()*me.getValue();

}

trainAbs = Math.sqrt(trainAbs);

return mul / (testAbs * trainAbs);

}

/**

* 根据knn算法分类结果文件生成正确类目文件,而正确率和混淆矩阵的计算可以复用贝叶斯算法中的方法

* @param knnResultFile 分类结果文件 <"目录名_文件名",分类结果>

* @param knnRightFile 分类正确类目文件 <"目录名_文件名",正确结果>

* @throws IOException

*/

private void createRightFile(String knnResultFile, String knnRightFile) throws IOException {

String rightCate;

FileReader fileR = new FileReader(knnResultFile);

FileWriter knnRightWriter = new FileWriter(new File(knnRightFile));

BufferedReader fileBR = new BufferedReader(fileR);

String line;

String lineBlock[];

while((line = fileBR.readLine()) != null){

lineBlock = line.split(" ");

rightCate = lineBlock[0].split("_")[0];

knnRightWriter.append(lineBlock[0]+" "+rightCate+"\n");

}

knnRightWriter.flush();

fileBR.close();

knnRightWriter.close();

}

public static void main(String[] args) throws Exception {

//wordMap是所有属性词的词典<单词,在所有文档中出现的次数>

double[] accuracyOfEveryExp = new double[10];

double accuracyAvg,sum=0;

KNNClassifier knnClassifier = new KNNClassifier();

NaiveBayesianClassifier nbClassifier = new NaiveBayesianClassifier();

Map wordMap = new TreeMap();

Map IDFPerWordMap = new TreeMap();

ComputeWordsVector computeWV = new ComputeWordsVector();

wordMap = computeWV.countWords("E:\\DataMiningSample\\processedSample", wordMap);

IDFPerWordMap = computeWV.computeIDF("E:\\DataMiningSample\\processedSampleOnlySpecial", wordMap);

//IDFPerWordMap=null;

computeWV.printWordMap(wordMap);

// 首先生成KNN算法10次试验需要的文档TF矩阵文件

for (int i = 0; i < 1; i++) {

computeWV.computeTFMultiIDF("E:/DataMiningSample/processedSampleOnlySpecial", 0.9, i, IDFPerWordMap, wordMap);

String trainFiles = "E:\\DataMiningSample\\docVector\\wordTFIDFMapTrainSample"+i;

String testFiles = "E:/DataMiningSample/docVector/wordTFIDFMapTestSample"+i;

String knnResultFile = "E:/DataMiningSample/docVector/KNNClassifyResult"+i;

String knnRightFile = "E:/DataMiningSample/docVector/KNNClassifyRight"+i;

knnClassifier.doProcess(trainFiles,testFiles,knnResultFile);

knnClassifier.createRightFile(knnResultFile,knnRightFile);

//计算准确率和混淆矩阵使用朴素贝叶斯中的方法

accuracyOfEveryExp[i] = nbClassifier.computeAccuracy(knnRightFile, knnResultFile);

sum += accuracyOfEveryExp[i];

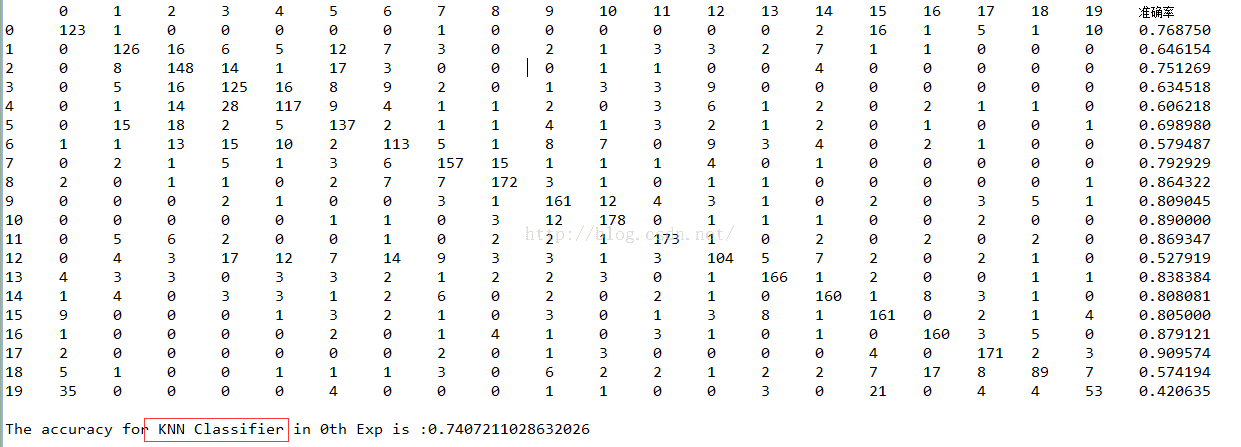

System.out.println("The accuracy for KNN Classifier in "+i+"th Exp is :" + accuracyOfEveryExp[i]);

}

//accuracyAvg = sum / 10;

//System.out.println("The average accuracy for KNN Classifier in all Exps is :" + accuracyAvg);

}

//对hashMap按照value做排序 降序

static class ByValueComparator implements Comparator