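Python Natural Language Processing, Chapter 5: Categorizing and Tagging Words

Goals:

(1) What are lexical categories, and how are they used in natural language processing?

(2) What is a good Python data structure for storing words and their categories?

(3) How can we automatically tag each word of a text with its word class?

Along the way we cover basic techniques including sequence labeling, N-gram models, backoff, and evaluation.

1 Using a Part-of-Speech Tagger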

import nltk

text = nltk.word_tokenize("and now for something completely different")

nltk.pos_tag(text)

[('and', 'CC'),

('now', 'RB'),

('for', 'IN'),

('something', 'NN'),

('completely', 'RB'),

('different', 'JJ')]

2 Tagged Corpora

#Representing tagged tokens

tagged_token = nltk.tag.str2tuple('fly/NN')

tagged_token

('fly', 'NN')

#Reading tagged corpora

Several of the corpora included with NLTK have been tagged for part of speech:

nltk.corpus.brown.tagged_words()

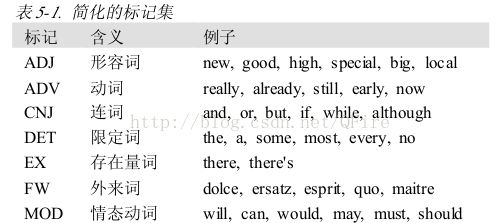
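Tagged text is often stored as plain tokens of the form word/TAG. The sketch below shows a minimal stdlib way to turn such a line into (word, tag) tuples. It splits each token at its last slash, as nltk.tag.str2tuple does. The helper name parse_tagged and the sample line are assumptions for illustration, and the sketch does not read NLTK's corpus files.

```python
def parse_tagged(line, sep="/"):
    """Turn 'word/TAG word/TAG ...' into a list of (word, tag) tuples."""
    pairs = []
    for token in line.split():
        # Split at the LAST separator so words containing '/' (e.g. 1/2) survive
        word, found, tag = token.rpartition(sep)
        if not found or not word:
            # No usable separator: keep the token and mark its tag as unknown
            pairs.append((token, None))
        else:
            pairs.append((word, tag))
    return pairs


sample = "The/AT grand/JJ jury/NN commented/VBD on/IN a/AT number/NN of/IN other/AP topics/NNS ./."
print(parse_tagged(sample))
```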

#A simplified part-of-speech tagset

from nltk.corpus import brown

brown_news_tagged = brown.tagged_words(categories='news',tagset = 'universal')

tag_fd = nltk.FreqDist(tag for (word,tag) in brown_news_tagged)

tag_fd.keys()

[u'ADV',

u'NOUN',

u'ADP',

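u'PRON',

#Nouns: which tags most often precede a noun?

word_tag_pairs = nltk.bigrams(brown_news_tagged)

noun_preceders = [a[1] for (a, b) in word_tag_pairs if b[1] == 'NOUN']

[tag for (tag, _) in nltk.FreqDist(noun_preceders).most_common()]

#Verbs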

wsj = nltk.corpus.treebank.tagged_words(tagset = 'universal')

word_tag_fd = nltk.FreqDist(wsj)

[word + "/" + tag for (word, tag) in word_tag_fd if tag.startswith('V')]

#Adjectives and adverbs

#Unsimplified tags
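The full Brown tagset splits categories into finer tags such as NN, NN$ and NNS. One way to explore them is to group words by each tag that starts with a given prefix and list the most frequent words for that tag. The stdlib sketch below does this with collections.Counter. The function name find_tags and the toy data are hypothetical; in NLTK the same grouping would typically use nltk.ConditionalFreqDist.

```python
from collections import Counter, defaultdict


def find_tags(tag_prefix, tagged_words, n=5):
    """Map each tag starting with tag_prefix to its n most frequent words."""
    counts = defaultdict(Counter)
    for word, tag in tagged_words:
        if tag.startswith(tag_prefix):
            counts[tag][word] += 1
    return {tag: [w for w, _ in c.most_common(n)] for tag, c in counts.items()}


toy = [("The", "AT"), ("jury", "NN"), ("said", "VBD"), ("the", "AT"),
       ("jury's", "NN$"), ("reports", "NNS"), ("jury", "NN"), ("city", "NN"),
       ("reports", "NNS"), ("votes", "NNS")]
print(find_tags("NN", toy))
```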

#Exploring tagged corpora

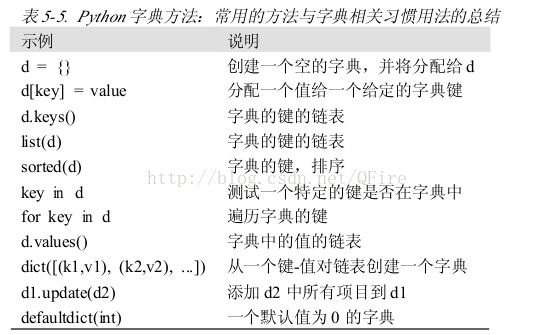
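A typical exploration asks which tags follow a particular word, for example "often". The sketch below pairs each token with the next one using zip, much as nltk.bigrams would, and counts the tags that follow. The function name and toy data are hypothetical.

```python
from collections import Counter


def tags_after(target, tagged_words):
    """Count the tags of tokens that immediately follow `target` (case-insensitive)."""
    following = Counter()
    for (w1, _), (_, t2) in zip(tagged_words, tagged_words[1:]):
        if w1.lower() == target:
            following[t2] += 1
    return following


toy = [("They", "PPSS"), ("often", "RB"), ("go", "VB"), ("there", "RB"),
       (",", ","), ("Often", "RB"), ("late", "RB"), ("and", "CC"),
       ("often", "RB"), ("go", "VB")]
print(tags_after("often", toy).most_common())  # [('VB', 2), ('RB', 1)]
```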

3 Mapping Words to Properties Using Python Dictionaries

#Indexed lists vs. dictionaries

#Python dictionaries

pos = {}

pos['colorless'] = 'ADJ'

pos['ideas'] = 'N'

pos['sleep'] = 'V'

pos['furiously'] = 'ADV'

pos

{'colorless': 'ADJ', 'furiously': 'ADV', 'ideas': 'N', 'sleep': 'V'}

for word in sorted(pos):

    print(word + ":", pos[word])

#Defining dictionaries
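Besides assigning keys one at a time, as with pos above, a dictionary can be defined in a single step. The short sketch below shows the two usual syntaxes and the rule that keys must be immutable (hashable). The phrase key is a hypothetical entry, added only to show that tuples are allowed.

```python
# Brace literal: works with any hashable keys
pos_literal = {'colorless': 'ADJ', 'ideas': 'N', 'sleep': 'V', 'furiously': 'ADV'}

# dict() with keyword arguments: only works when keys are valid identifiers
pos_keywords = dict(colorless='ADJ', ideas='N', sleep='V', furiously='ADV')

print(pos_literal == pos_keywords)  # True

# Tuples are immutable, so they can serve as keys (hypothetical phrase entry)
pos_literal[('green', 'ideas')] = 'NP'

# Lists are mutable and unhashable, so Python rejects them as keys
try:
    pos_literal[['green']] = 'ADJ'
except TypeError as err:
    print("rejected:", err)
```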

#Default dictionaries
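A default dictionary supplies a value for a missing key instead of raising KeyError. In plain Python this is collections.defaultdict. The sketch below shows three common factories; the words are arbitrary examples. Note that merely reading a missing key stores it with the default value.

```python
from collections import defaultdict

# int() returns 0, so unseen keys start at 0
frequency = defaultdict(int)
frequency['colorless'] = 4
print(frequency['ideas'])  # 0

# list() returns [], handy for collecting several values per key
pos = defaultdict(list)
pos['sleep'] = ['NOUN', 'VERB']
print(pos['ideas'])  # []

# A lambda supplies a constant default: any unlisted word maps to 'NOUN'
pos_default = defaultdict(lambda: 'NOUN')
pos_default['colorless'] = 'ADJ'
print(pos_default['blog'])  # NOUN

# Reading a missing key inserted it
print(sorted(pos_default))  # ['blog', 'colorless']
```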

#Incrementally updating a dictionary
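A default dictionary makes incremental updates simple. Each count is increased in place, and the result can then be sorted by value. The tagged list below is hypothetical toy data.

```python
from collections import defaultdict

tagged = [('the', 'DET'), ('jury', 'NOUN'), ('said', 'VERB'), ('the', 'DET'),
          ('city', 'NOUN'), ('voted', 'VERB'), ('the', 'DET')]

# Count tags without first checking whether each key exists
counts = defaultdict(int)
for _, tag in tagged:
    counts[tag] += 1

# Sort (tag, count) pairs by descending count; ties keep insertion order
by_freq = sorted(counts.items(), key=lambda item: item[1], reverse=True)
print(by_freq)  # [('DET', 3), ('NOUN', 2), ('VERB', 2)]

# The same pattern builds an inverted index from tags to words
words_by_tag = defaultdict(list)
for word, tag in tagged:
    words_by_tag[tag].append(word)
print(words_by_tag['NOUN'])  # ['jury', 'city']
```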

4 Automatic Tagging

from nltk.corpus import brown

brown_tagged_sents = brown.tagged_sents(categories='news')

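brown_sents = brown.sents(categories='news')

#The default tagger

The simplest tagger assigns the same tag to every token. To choose that tag, find the most likely tag in the news category: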

tags = [tag for (word,tag) in brown.tagged_words(categories='news')]

nltk.FreqDist(tags).max()

u'NN'

raw = 'I do not like green eggs and ham, I do not like them Sam I am!'

tokens = nltk.word_tokenize(raw)

default_tagger = nltk.DefaultTagger('NN')

default_tagger.tag(tokens)

[('I', 'NN'),

('do', 'NN'),

('not', 'NN'),

('like', 'NN'),

default_tagger.evaluate(brown_tagged_sents)

0.13089484257215028
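The evaluate scores in these notes are token-level accuracy: the proportion of tokens, pooled over all test sentences, whose assigned tag matches the gold-standard tag. The stdlib sketch below computes that score for a default tagger. The function names and the two toy sentences are hypothetical.

```python
def default_tag(words, tag='NN'):
    """Give every word the same tag, as a default tagger does."""
    return [(w, tag) for w in words]


def accuracy(tagger, gold_sents):
    """Share of tokens, pooled over all sentences, whose predicted tag equals the gold tag."""
    correct = total = 0
    for sent in gold_sents:
        words = [w for w, _ in sent]
        for (_, gold), (_, guess) in zip(sent, tagger(words)):
            correct += gold == guess
            total += 1
    return correct / total


gold = [[('I', 'PPSS'), ('like', 'VB'), ('green', 'JJ'), ('eggs', 'NNS')],
        [('Sam', 'NP'), ('is', 'BEZ'), ('a', 'AT'), ('man', 'NN')]]
print(accuracy(default_tag, gold))  # 0.125
```

Only 'man' is really NN, so one of eight tokens is correct. As with the 0.13 above, a default tagger is useful mainly as a last resort for words nothing else can tag.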

#The regular expression tagger

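The regular expression tagger assigns tags to tokens on the basis of matching patterns. For instance, we might guess that any word ending in ed is the past participle of a verb.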

patterns = [

(r'.*ing$', 'VBG'), # gerunds

(r'.*ed$', 'VBD'), # simple past

(r'.*es$', 'VBZ'), # 3rd singular present

(r'.*ould$', 'MD'), # modals

(r'.*\'s$', 'NN$'), # possessive nouns

(r'.*s$', 'NNS'), # plural nouns

(r'^-?[0-9]+(\.[0-9]+)?$', 'CD'), # cardinal numbers (\. matches a literal decimal point)

(r'.*', 'NN') # nouns (default)

]

regexp_tagger = nltk.RegexpTagger(patterns)

regexp_tagger.tag(brown_sents[3])

[(u'``', 'NN'),

(u'Only', 'NN'),

(u'a', 'NN'),

(u'relative', 'NN'),

(u'handful', 'NN'),

regexp_tagger.evaluate(brown_tagged_sents)

0.20326391789486245
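A regular expression tagger tries its patterns in order and uses the first one that matches. The stdlib sketch below implements that first-match rule with the patterns above. The number pattern's dot is escaped (\.) so it matches only a literal decimal point. The function name regexp_tag and the test words are hypothetical.

```python
import re

# Same patterns as above, with the dot in the number pattern escaped
patterns = [
    (r'.*ing$', 'VBG'),                # gerunds
    (r'.*ed$', 'VBD'),                 # simple past
    (r'.*es$', 'VBZ'),                 # 3rd singular present
    (r'.*ould$', 'MD'),                # modals
    (r".*'s$", 'NN$'),                 # possessive nouns
    (r'.*s$', 'NNS'),                  # plural nouns
    (r'^-?[0-9]+(\.[0-9]+)?$', 'CD'),  # cardinal numbers
    (r'.*', 'NN'),                     # nouns (default): matches anything
]
compiled = [(re.compile(p), tag) for p, tag in patterns]


def regexp_tag(tokens):
    """Tag each token with the tag of the first pattern that matches it."""
    tagged = []
    for tok in tokens:
        for regex, tag in compiled:
            if regex.match(tok):
                tagged.append((tok, tag))
                break
    return tagged


words = ['walking', 'walked', 'goes', 'should', "John's", 'dogs', '3.14', 'table']
print(regexp_tag(words))
```

Order matters. Because '.*es$' is tried before '.*s$', goes is tagged VBZ, but so is a plural noun such as classes.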

#The lookup tagger

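A lookup tagger stores the most likely tag for a fixed set of words. Below, the 100 most frequent words of the news category and their most likely tags are collected in a dictionary. That dictionary is passed to nltk.UnigramTagger as its model argument, instead of training the tagger on tagged sentences.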

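**Developing an annotated corpus is a major undertaking. Apart from the data, it generates sophisticated tools, documentation, and practices for ensuring high-quality annotation. Tagsets and other coding schemes inevitably depend on some theoretical position that is not shared by everyone. Nevertheless, corpus creators often go to great lengths to make their work as theory-neutral as possible, in order to maximize its usefulness.**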

fd = nltk.FreqDist(brown.words(categories='news'))

cfd = nltk.ConditionalFreqDist(brown.tagged_words(categories='news'))

most_freq_words = [word for (word, _) in fd.most_common(100)]  # the 100 most frequent words

likely_tags = dict((word,cfd[word].max()) for word in most_freq_words)

baseline_tagger = nltk.UnigramTagger(model=likely_tags)

baseline_tagger.evaluate(brown_tagged_sents)

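0.005171350717027666

This very low score most likely comes from selecting the words with fd.keys()[:100]. In NLTK 3 a FreqDist does not return its keys in frequency order, and under Python 3 keys() cannot be sliced at all. The table therefore covered 100 arbitrary, mostly rare words. When the words are selected with fd.most_common(100) instead, the NLTK book reports an accuracy of about 0.46. Knowing the tags of just the 100 most frequent words is enough to tag nearly half of all tokens correctly.

5 N-gram Tagging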

#Unigram tagging

from nltk.corpus import brown

brown_tagged_sents = brown.tagged_sents(categories='news')

brown_sents = brown.sents(categories='news')

unigram_tagger = nltk.UnigramTagger(brown_tagged_sents)

unigram_tagger.tag(brown_sents[2007])

[(u'Various', u'JJ'),

(u'of', u'IN'),

(u'the', u'AT'),

(u'apartments', u'NNS'),

(u'are', u'BER'),

#Separating the training and testing data

We use 90% of the data for training and the remaining 10% for testing:

size = int(len(brown_tagged_sents) * 0.9)

size

4160

train_sents = brown_tagged_sents[:size]  # first 90% of the sentences, for training

test_sents = brown_tagged_sents[size:]

unigram_tagger = nltk.UnigramTagger(train_sents)  # train only on the training portion

unigram_tagger.evaluate(test_sents)

0.8120203329014253
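A unigram tagger assigns each word the tag it carried most often in the training data. The stdlib sketch below trains on one slice of the data and tests on the rest. The function names and the four toy sentences are hypothetical. The 75/25 split is also an assumption, chosen only because the toy set is tiny; the text above uses 90/10. Unseen words get None, which a backoff tagger can later fill in.

```python
from collections import Counter, defaultdict


def train_unigram(tagged_sents):
    """Map each word to its most frequent tag in the training sentences."""
    counts = defaultdict(Counter)
    for sent in tagged_sents:
        for word, tag in sent:
            counts[word][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def unigram_tag(model, words):
    """Look each word up in the model; words never seen in training get None."""
    return [(w, model.get(w)) for w in words]


data = [
    [('the', 'AT'), ('race', 'NN'), ('is', 'BEZ'), ('on', 'IN')],
    [('they', 'PPSS'), ('race', 'VB'), ('home', 'NR')],
    [('the', 'AT'), ('race', 'NN'), ('ended', 'VBD')],
    [('the', 'AT'), ('race', 'NN'), ('is', 'BEZ'), ('over', 'RP')],
]
size = int(len(data) * 0.75)               # 3 training sentences, 1 test sentence
train_part, test_part = data[:size], data[size:]

model = train_unigram(train_part)
test_words = [w for w, _ in test_part[0]]
predicted = unigram_tag(model, test_words)
print(predicted)  # [('the', 'AT'), ('race', 'NN'), ('is', 'BEZ'), ('over', None)]

gold_tags = [t for _, t in test_part[0]]
acc = sum(g == p for g, (_, p) in zip(gold_tags, predicted)) / len(gold_tags)
print(acc)  # 0.75
```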

#General N-gram tagging

bigram_tagger = nltk.BigramTagger(train_sents)

bigram_tagger.tag(brown_sents[2007])

[(u'Various', u'JJ'),

(u'of', u'IN'),

(u'the', u'AT'),

(u'apartments', u'NNS'),

bigram_tagger.evaluate(test_sents)

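0.10276088906608193

The bigram tagger scores poorly because of sparse data. As soon as it meets a word in a context it never saw during training, such as a new word, it assigns the tag None. That None becomes the previous tag for the next word, which is also a context that never occurred in training, so the tagger usually fails on the rest of the sentence.

#Combining taggers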

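One way to address the trade-off between accuracy and coverage is to use the more accurate algorithms when we can, and to fall back on algorithms with wider coverage when necessary. For example, we can combine a bigram tagger, a unigram tagger, and a default tagger as follows: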

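- Try tagging the token with the bigram tagger.

- If the bigram tagger is unable to find a tag, try the unigram tagger.

- If the unigram tagger is also unable to find a tag, use the default tagger.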
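The three-step chain in the list above can be sketched without NLTK. First look up the (previous tag, word) context in a bigram table, then fall back to a per-word unigram table, and finally to a default tag. The tables here are tiny hand-written stand-ins for trained models (hypothetical). make_backoff_tagger is not an NLTK function; in NLTK the chain is built with the backoff parameter, as in the code that follows.

```python
def make_backoff_tagger(bigram_table, unigram_table, default_tag='NN'):
    """Build a tagger that tries the bigram table, then the unigram table, then a default tag."""
    def tag(words):
        tagged = []
        prev_tag = None  # no previous tag at the start of a sentence
        for word in words:
            t = bigram_table.get((prev_tag, word))  # step 1: previous tag + word
            if t is None:
                t = unigram_table.get(word)         # step 2: word alone
            if t is None:
                t = default_tag                     # step 3: default tag
            tagged.append((word, t))
            prev_tag = t
        return tagged
    return tag


# Hypothetical tables: 'race' after TO is a verb, otherwise usually a noun
bigram_table = {('TO', 'race'): 'VB'}
unigram_table = {'to': 'TO', 'race': 'NN', 'the': 'AT'}
tagger = make_backoff_tagger(bigram_table, unigram_table)
print(tagger(['to', 'race', 'the', 'race', 'tomorrow']))
# [('to', 'TO'), ('race', 'VB'), ('the', 'AT'), ('race', 'NN'), ('tomorrow', 'NN')]
```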

t0 = nltk.DefaultTagger('NN')

t1 = nltk.UnigramTagger(train_sents, backoff=t0)  # back off to the default tagger t0

t2 = nltk.BigramTagger(train_sents, backoff=t1)

t2.evaluate(test_sents)

0.844911791089405

#Tagging unknown words

Unknown words are again handled by backoff: fall back to a regular expression tagger or a default tagger.
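The sketch below shows that idea in plain Python. Known words are looked up in a table, while unseen words fall back to suffix rules and finally to a default tag. The lexicon, the three rules, and the name tag_word are hypothetical stand-ins for a trained tagger with an nltk.RegexpTagger backoff.

```python
import re

# Hypothetical lexicon of known words, standing in for a trained lookup tagger
LEXICON = {'the': 'AT', 'jury': 'NN', 'said': 'VBD'}

# Suffix rules tried in order for words missing from the lexicon
SUFFIX_RULES = [
    (re.compile(r'.*ing$'), 'VBG'),
    (re.compile(r'.*ed$'), 'VBD'),
    (re.compile(r'.*s$'), 'NNS'),
]


def tag_word(word, default='NN'):
    """Lexicon first, then the first matching suffix rule, then the default tag."""
    if word in LEXICON:
        return LEXICON[word]
    for regex, tag in SUFFIX_RULES:
        if regex.match(word):
            return tag
    return default


words = ['the', 'jury', 'deliberated', 'blogging', 'verdicts', 'Atlanta']
print([(w, tag_word(w)) for w in words])
```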

#Storing taggers

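There is no need to retrain a tagger every time it is needed. A trained tagger can be saved to a file and reused later. Here tagger t2 is saved to the file t2.pkl: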
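The same pickle round trip can be shown self-contained by saving a plain dictionary. Here it stands in for a trained tagger's lookup table, which is an assumption for illustration. Only unpickle files you trust, since loading a pickle can execute arbitrary code.

```python
import os
import pickle
import tempfile

# A plain dict standing in for a trained tagger's lookup table (assumption)
model = {'the': 'AT', 'jury': 'NN', 'said': 'VBD'}
path = os.path.join(tempfile.gettempdir(), 'toy_tagger.pkl')  # hypothetical file name

with open(path, 'wb') as out:
    pickle.dump(model, out, -1)  # -1 selects the highest protocol available

with open(path, 'rb') as inp:
    restored = pickle.load(inp)

print(restored == model)  # True
os.remove(path)  # clean up the temporary file
```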

from pickle import dump  # Python 3 module; under Python 2 this was cPickle

output = open('t2.pkl', 'wb')

dump(t2, output, -1)  # -1 selects the highest pickle protocol

output.close()

from pickle import load

infile = open('t2.pkl', 'rb')  # avoid shadowing the built-in input()

tagger = load(infile)

infile.close()

text = """The board's action shows what free enterprise is up against in our complex maze of regulatory laws ."""

tokens = text.split()

tagger.tag(tokens)

[('The', u'AT'),

("board's", u'NN$'),

('action', 'NN'),

('shows', u'NNS'),

('what', u'WDT'),

('free', u'JJ'),

#Performance limitations

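What is the upper limit on the performance of an n-gram tagger? Consider a trigram tagger. How often is its context (the tags of the two preceding words plus the current word) still ambiguous?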
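The ambiguity measure that the next lines compute with nltk.ConditionalFreqDist can be sketched with plain dictionaries. Each context is (tag of word 1, tag of word 2, word 3), and a context counts as ambiguous if word 3 appears with more than one tag in it. The two toy sentences are hypothetical. "The old man the boat" uses man as a verb, so the context (AT, JJ, man) is ambiguous and no trigram tagger can tag both occurrences correctly.

```python
from collections import Counter, defaultdict


def ambiguous_fraction(tagged_sents):
    """Share of trigram tokens whose (tag1, tag2, word3) context occurs with more than one tag."""
    cfd = defaultdict(Counter)
    for sent in tagged_sents:
        for x, y, z in zip(sent, sent[1:], sent[2:]):
            cfd[(x[1], y[1], z[0])][z[1]] += 1
    total = sum(sum(c.values()) for c in cfd.values())
    ambiguous = sum(sum(c.values()) for c in cfd.values() if len(c) > 1)
    return ambiguous / total


sents = [
    [('the', 'AT'), ('old', 'JJ'), ('man', 'NN'), ('left', 'VBD')],
    [('the', 'AT'), ('old', 'JJ'), ('man', 'VB'), ('the', 'AT'), ('boat', 'NN')],
]
print(ambiguous_fraction(sents))  # 0.4
```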

cfd = nltk.ConditionalFreqDist( ((x[1],y[1],z[0]), z[1]) for sent in brown_tagged_sents for x, y, z in nltk.trigrams(sent))

ambiguous_contexts = [c for c in cfd.conditions() if len(cfd[c]) > 1]

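sum(cfd[c].N() for c in ambiguous_contexts) / cfd.N()  # share of ambiguous trigram tokens (true division in Python 3)

A confusion matrix shows which tags a tagger tends to confuse. Here t2, trained on the news category, tags the editorial category, and its output is compared with the gold-standard tags:

test_tags = [tag for sent in brown.sents(categories='editorial') for (word, tag) in t2.tag(sent)]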

gold_tags = [tag for (word,tag) in brown.tagged_words(categories='editorial')]

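print(nltk.ConfusionMatrix(gold_tags, test_tags))

Another way to gauge the upper bound on tagger performance is the level of agreement that human annotators reach after discussing their tagging decisions.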

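#Tagging across sentence boundaries

An n-gram tagger uses the tags of recent tokens to guide its choice. For the first word of a sentence, a trigram tagger would use the tags of the previous two tokens, which normally belong to the end of the previous sentence. Yet the category that ends one sentence has little to do with the one that begins the next. Training, running, and evaluating taggers on lists of tagged sentences, as below, keeps the context from crossing sentence boundaries: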
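The small sketch below shows why the sentence structure matters. It builds the (previous tag, word) contexts a bigram tagger would condition on, either resetting at each sentence start or running straight through. The function name and sentences are hypothetical, and the reset uses None as the "no previous tag" marker.

```python
def bigram_contexts(tagged_sents, per_sentence=True):
    """List the (previous tag, word) context of every token."""
    contexts = []
    prev_tag = None
    for sent in tagged_sents:
        if per_sentence:
            prev_tag = None  # start each sentence with no previous tag
        for word, tag in sent:
            contexts.append((prev_tag, word))
            prev_tag = tag
    return contexts


sents = [[('It', 'PPS'), ('rained', 'VBD'), ('.', '.')],
         [('The', 'AT'), ('game', 'NN'), ('ended', 'VBD')]]
print(bigram_contexts(sents)[3])                      # (None, 'The')
print(bigram_contexts(sents, per_sentence=False)[3])  # ('.', 'The')
```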

brown_tagged_sents = brown.tagged_sents(categories='news')

brown_sents = brown.sents(categories='news')

size = int(len(brown_tagged_sents) * 0.9)

train_sents = brown_tagged_sents[:size]

test_sents = brown_tagged_sents[size:]

t0 = nltk.DefaultTagger('NN')

t1 = nltk.UnigramTagger(train_sents, backoff=t0)

t2 = nltk.BigramTagger(train_sents, backoff=t1)

t2.evaluate(test_sents)

0.844911791089405

6 Transformation-Based Tagging

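A potential issue with n-gram taggers is the size of their n-gram table (or language model). If tagging is to be employed in a variety of language technologies deployed on mobile computing devices, it is important to strike a balance between model size and tagger performance.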

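A second issue concerns context. The only information an n-gram tagger takes from prior context is tags, even though the words themselves might be a useful source of information.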

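In this section we look at Brill tagging, an inductive tagging method that performs very well while using models that are only a tiny fraction of the size of n-gram taggers.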
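Brill tagging starts from an initial tagging, for instance from a unigram tagger, and then applies learned correction rules such as "replace NN with VB when the previous tag is TO". The sketch below applies one such rule by hand. Rule learning, which is the core of the Brill method, is not shown, and the function name and sentence are hypothetical. NLTK's own implementation lives in the nltk.tag.brill and nltk.tag.brill_trainer modules.

```python
def apply_rule(tagged, from_tag, to_tag, prev_tag):
    """Change from_tag to to_tag wherever the preceding token's original tag is prev_tag."""
    result = list(tagged)
    for i in range(1, len(tagged)):
        word, tag = tagged[i]
        # Conditions are checked against the input tagging, not against earlier edits
        if tag == from_tag and tagged[i - 1][1] == prev_tag:
            result[i] = (word, to_tag)
    return result


# Hypothetical initial tagging in which 'race' was tagged as a noun everywhere
initial = [('I', 'PPSS'), ('want', 'VB'), ('to', 'TO'), ('race', 'NN'),
           ('in', 'IN'), ('the', 'AT'), ('race', 'NN')]
print(apply_rule(initial, 'NN', 'VB', 'TO'))
```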

7 How to Determine the Category of a Word

Morphological clues
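Morphological clues can be sketched as suffix checks. -ness typically turns an adjective into a noun (happy, happiness), -ment turns a verb into a noun (govern, government), and -ing marks a present participle. The table and function below are a hypothetical illustration. Suffixes are clues rather than rules, so a word like ring would be misread as a verb form.

```python
SUFFIX_CLUES = [
    ('ness', 'NOUN'),  # adjective + -ness -> noun: happiness, illness
    ('ment', 'NOUN'),  # verb + -ment -> noun: government, establishment
    ('ing', 'VERB'),   # present participle or gerund: falling, eating
]


def morphological_guess(word):
    """Return the category suggested by the first matching suffix, or None."""
    lower = word.lower()
    for suffix, category in SUFFIX_CLUES:
        # Require at least one letter before the suffix
        if lower.endswith(suffix) and len(lower) > len(suffix):
            return category
    return None


for w in ['happiness', 'government', 'falling', 'table']:
    print(w, morphological_guess(w))
```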

Syntactic clues

Semantic clues

New words

Morphology in part-of-speech tagsets