[TensorFlow] Learning Rate Schedules in TensorFlow 1.x

The tf.train module in TensorFlow 1.x provides a variety of learning rate decay schedules.


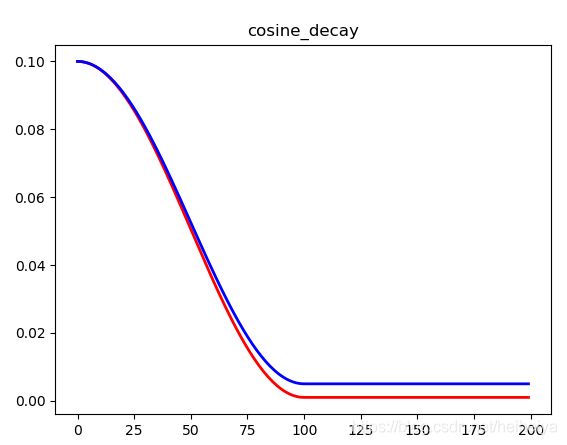

tf.train.cosine_decay

Cosine decay

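`tf.train.cosine_decay(learning_rate, global_step, decay_steps, alpha=0.0, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the number of steps to decay over
- alpha: float, the minimum learning rate, expressed as a fraction of learning_rate
- name: string, the name of the operation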

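The learning rate is computed as:

$$
\begin{aligned}
\text{global\_step} &= \min(\text{global\_step},\ \text{decay\_steps})\\
\text{cosine\_decay} &= 0.5\left(1 + \cos\left(\pi\cdot\frac{\text{global\_step}}{\text{decay\_steps}}\right)\right)\\
\text{decayed} &= (1 - \text{alpha})\cdot\text{cosine\_decay} + \text{alpha}\\
\text{decayed\_learning\_rate} &= \text{learning\_rate}\cdot\text{decayed}
\end{aligned}
$$

Red line: `alpha=0.01`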

Blue line: `alpha=0.05`
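To make the formula concrete, here is a minimal pure-Python sketch that evaluates it step by step. It is not TensorFlow's implementation (which builds graph ops); the function is an ordinary Python function named after the TF API, and the settings `learning_rate=0.1` and `decay_steps=1000` are assumptions chosen only for illustration.

```python
import math


def cosine_decay(learning_rate, global_step, decay_steps, alpha=0.0):
    """Plain-Python evaluation of the cosine_decay formula (not TF code)."""
    # Clip the step: after decay_steps the rate stays at its minimum.
    step = min(global_step, decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    # Rescale so the curve ends at alpha * learning_rate instead of 0.
    decayed = (1.0 - alpha) * cosine + alpha
    return learning_rate * decayed


# Illustrative (assumed) settings: learning_rate=0.1, decay_steps=1000.
for alpha in (0.01, 0.05):
    curve = [cosine_decay(0.1, s, 1000, alpha) for s in range(0, 1500, 250)]
    print(f"alpha={alpha}:", [round(v, 5) for v in curve])
```

Because of the clipping, every step past `decay_steps` returns exactly `alpha * learning_rate`, which is why the curves in the figure flatten out.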


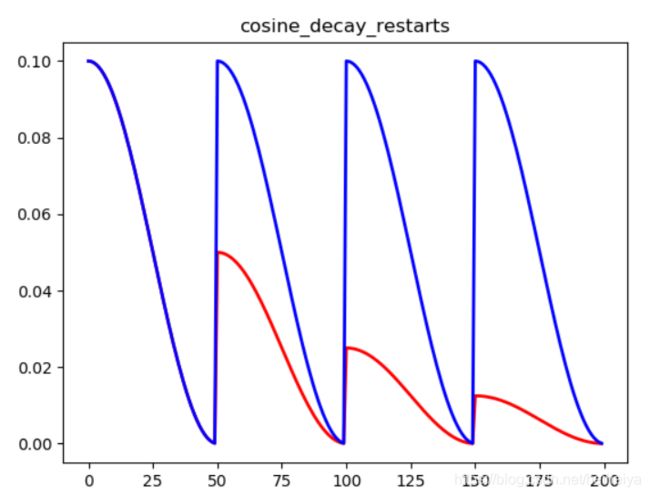

tf.train.cosine_decay_restarts

Cosine decay with warm restarts

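`tf.train.cosine_decay_restarts(learning_rate, global_step, first_decay_steps, t_mul=2.0, m_mul=1.0, alpha=0.0, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- first_decay_steps: int, the number of steps in the first full decay period
- t_mul: float, used to derive the number of steps in the i-th period (each period is t_mul times as long as the previous one)
- m_mul: float, used to derive the initial learning rate of the i-th period (each period starts at m_mul times the previous period's initial rate)
- alpha: float, the minimum learning rate as a fraction of learning_rate; the rate never falls below alpha * learning_rate
- name: string, the name of the operation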

cosine_decay_restarts is the cyclic version of cosine_decay: each time the learning rate has fully decayed, a warm restart raises it again and a new cosine period begins.

Red line: `t_mul=1.0`, `m_mul=0.5`

Blue line: `t_mul=1.0`, `m_mul=1.0`
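The restart bookkeeping is easier to follow in code. Below is a pure-Python sketch, not TensorFlow's source. Finding the current period when `t_mul != 1` by inverting the geometric series of period lengths is my own reconstruction of the behaviour described by the parameters above. The settings `learning_rate=0.1` and `first_decay_steps=100` are assumed for illustration.

```python
import math


def cosine_decay_restarts(learning_rate, global_step, first_decay_steps,
                          t_mul=2.0, m_mul=1.0, alpha=0.0):
    """Plain-Python sketch of cosine decay with warm restarts (not TF code)."""
    # Progress measured in units of the first period's length.
    completed = global_step / first_decay_steps
    if t_mul == 1.0:
        # Every period has the same length, so the period index is a floor.
        i_restart = math.floor(completed)
        completed -= i_restart
    else:
        # Period i lasts first_decay_steps * t_mul**i steps; invert the
        # geometric series sum_{k<i} t_mul**k to find the current period.
        i_restart = math.floor(
            math.log(1.0 - completed * (1.0 - t_mul)) / math.log(t_mul))
        sum_r = (1.0 - t_mul ** i_restart) / (1.0 - t_mul)
        completed = (completed - sum_r) / t_mul ** i_restart
    # Each restart begins at a peak scaled by m_mul**i.
    m_fac = m_mul ** i_restart
    cosine = 0.5 * m_fac * (1.0 + math.cos(math.pi * completed))
    decayed = (1.0 - alpha) * cosine + alpha
    return learning_rate * decayed


# Assumed settings reproducing the two curves: red m_mul=0.5, blue m_mul=1.0.
for m_mul in (0.5, 1.0):
    curve = [cosine_decay_restarts(0.1, s, 100, t_mul=1.0, m_mul=m_mul)
             for s in range(0, 400, 25)]
    print(f"m_mul={m_mul}:", [round(v, 4) for v in curve])
```

In the geometric branch, floating-point rounding exactly at a period boundary can select the neighbouring period, which only affects the single restart step.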


tf.train.exponential_decay

Exponential decay

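`tf.train.exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the decay period in steps
- decay_rate: float, the decay rate
- staircase: bool; if True, the learning rate decays at discrete intervals (a staircase curve)
- name: string, the name of the operation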

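Exponential decay is computed as:

$$
\text{decayed\_learning\_rate} = \text{learning\_rate}\cdot\text{decay\_rate}^{\,\text{global\_step}/\text{decay\_steps}}
$$

If staircase is True, the exponent uses integer division:

$$
\text{decayed\_learning\_rate} = \text{learning\_rate}\cdot\text{decay\_rate}^{\,\lfloor\text{global\_step}/\text{decay\_steps}\rfloor}
$$

Red line: `staircase=False`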

Blue line: `staircase=True`
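A minimal pure-Python sketch of the two variants follows; it mirrors the formulas only and is not TensorFlow's implementation. The settings `learning_rate=0.1`, `decay_steps=100`, and `decay_rate=0.5` are assumptions for illustration.

```python
import math


def exponential_decay(learning_rate, global_step, decay_steps, decay_rate,
                      staircase=False):
    """Plain-Python evaluation of the exponential_decay formula (not TF code)."""
    exponent = global_step / decay_steps
    if staircase:
        # Integer division: the rate drops once every decay_steps steps.
        exponent = math.floor(exponent)
    # No clipping: the rate keeps shrinking as global_step grows.
    return learning_rate * decay_rate ** exponent


# Assumed settings; False/True correspond to the red/blue lines.
for staircase in (False, True):
    print(staircase, [round(exponential_decay(0.1, s, 100, 0.5, staircase), 5)
                      for s in (0, 50, 100, 150, 200)])
```

Both variants agree at multiples of `decay_steps`; in between, the staircase version holds the rate constant.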


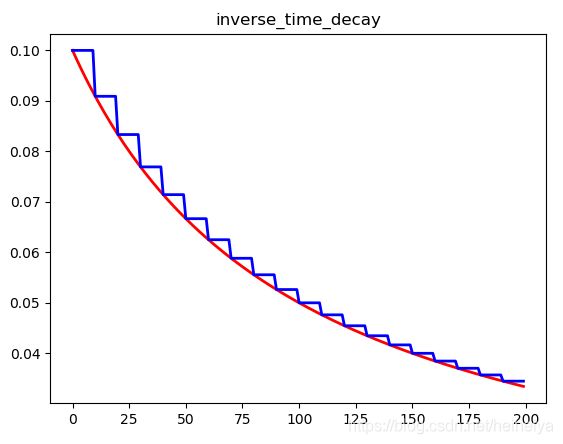

tf.train.inverse_time_decay

Inverse time decay

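`tf.train.inverse_time_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the decay period in steps
- decay_rate: float, the decay rate
- staircase: bool; if True, the learning rate decays at discrete intervals (a staircase curve)
- name: string, the name of the operation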

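The learning rate is computed as:

$$
\text{decayed\_learning\_rate} = \frac{\text{learning\_rate}}{1 + \text{decay\_rate}\cdot\dfrac{\text{global\_step}}{\text{decay\_steps}}}
$$

If staircase is True, it is computed as:

$$
\text{decayed\_learning\_rate} = \frac{\text{learning\_rate}}{1 + \text{decay\_rate}\cdot\left\lfloor\dfrac{\text{global\_step}}{\text{decay\_steps}}\right\rfloor}
$$

Red line: `staircase=False`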

Blue line: `staircase=True`

Judging by the shape of the curve, it looks much like polynomial decay.
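As a quick check of the formula, here is a pure-Python sketch (not TensorFlow's implementation). The settings `learning_rate=0.1`, `decay_steps=100`, and `decay_rate=0.5` are assumed for illustration.

```python
import math


def inverse_time_decay(learning_rate, global_step, decay_steps, decay_rate,
                       staircase=False):
    """Plain-Python evaluation of the inverse_time_decay formula (not TF code)."""
    ratio = global_step / decay_steps
    if staircase:
        # Only count fully completed blocks of decay_steps.
        ratio = math.floor(ratio)
    # Hyperbolic decay: the rate falls like 1 / (1 + k * t).
    return learning_rate / (1.0 + decay_rate * ratio)


# Assumed settings; False/True correspond to the red/blue lines.
for staircase in (False, True):
    print(staircase, [round(inverse_time_decay(0.1, s, 100, 0.5, staircase), 5)
                      for s in (0, 50, 100, 150, 200)])
```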


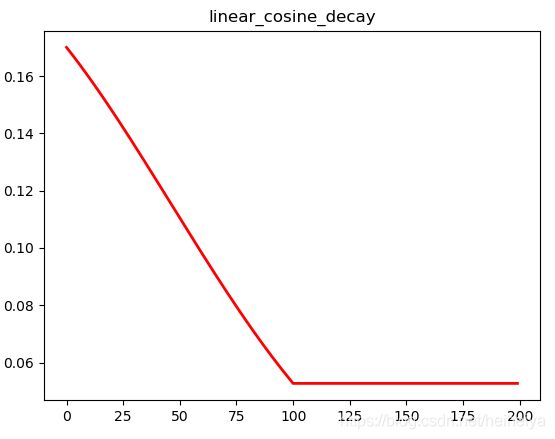

tf.train.linear_cosine_decay

Linear cosine decay

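`tf.train.linear_cosine_decay(learning_rate, global_step, decay_steps, num_periods=0.5, alpha=0.0, beta=0.001, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the number of steps to decay over
- num_periods: float, the number of periods in the cosine part of the decay
- alpha: float, an offset added to the linear term of the decay
- beta: float, a constant added to the final decay factor
- name: string, the name of the operation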

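The learning rate is computed as:

$$
\begin{aligned}
\text{global\_step} &= \min(\text{global\_step},\ \text{decay\_steps})\\
\text{linear\_decay} &= \frac{\text{decay\_steps} - \text{global\_step}}{\text{decay\_steps}}\\
\text{cosine\_decay} &= 0.5\left(1 + \cos\left(\pi\cdot 2\cdot\text{num\_periods}\cdot\frac{\text{global\_step}}{\text{decay\_steps}}\right)\right)\\
\text{decayed} &= (\text{alpha} + \text{linear\_decay})\cdot\text{cosine\_decay} + \text{beta}\\
\text{decayed\_learning\_rate} &= \text{learning\_rate}\cdot\text{decayed}
\end{aligned}
$$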
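The following pure-Python sketch evaluates the linear cosine formula; it is not TensorFlow's implementation. The settings `learning_rate=0.1`, `decay_steps=1000`, and the two `num_periods` values are assumptions chosen to show how the cosine factor oscillates on top of the linear ramp.

```python
import math


def linear_cosine_decay(learning_rate, global_step, decay_steps,
                        num_periods=0.5, alpha=0.0, beta=0.001):
    """Plain-Python evaluation of the linear_cosine_decay formula (not TF code)."""
    step = min(global_step, decay_steps)
    # Linear ramp from 1 down to 0 over decay_steps.
    linear = (decay_steps - step) / decay_steps
    # Cosine factor; num_periods controls how many oscillations occur.
    cosine = 0.5 * (1.0 + math.cos(math.pi * 2 * num_periods * step / decay_steps))
    decayed = (alpha + linear) * cosine + beta
    return learning_rate * decayed


# Assumed settings: learning_rate=0.1, decay_steps=1000.
for periods in (0.5, 2.0):
    print(periods, [round(linear_cosine_decay(0.1, s, 1000, num_periods=periods), 5)
                    for s in range(0, 1001, 125)])
```

With the default `alpha=0.0`, the schedule ends at `beta * learning_rate` rather than at zero.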

tf.train.natural_exp_decay

Natural exponential decay

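`tf.train.natural_exp_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the decay period in steps
- decay_rate: float, the decay rate
- staircase: bool; if True, the learning rate decays at discrete intervals (a staircase curve)
- name: string, the name of the operation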

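The learning rate is computed as:

$$
\text{decayed\_learning\_rate} = \text{learning\_rate}\cdot\exp\left(-\text{decay\_rate}\cdot\frac{\text{global\_step}}{\text{decay\_steps}}\right)
$$

If staircase is True, it is computed as:

$$
\text{decayed\_learning\_rate} = \text{learning\_rate}\cdot\exp\left(-\text{decay\_rate}\cdot\left\lfloor\frac{\text{global\_step}}{\text{decay\_steps}}\right\rfloor\right)
$$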
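A short pure-Python sketch of the formula, again not TensorFlow's implementation; `learning_rate=0.1`, `decay_steps=100`, and `decay_rate=0.5` are assumed values.

```python
import math


def natural_exp_decay(learning_rate, global_step, decay_steps, decay_rate,
                      staircase=False):
    """Plain-Python evaluation of the natural_exp_decay formula (not TF code)."""
    ratio = global_step / decay_steps
    if staircase:
        # Integer division: the rate only changes every decay_steps steps.
        ratio = math.floor(ratio)
    return learning_rate * math.exp(-decay_rate * ratio)


# Assumed settings: learning_rate=0.1, decay_steps=100, decay_rate=0.5.
print([round(natural_exp_decay(0.1, s, 100, 0.5), 5) for s in (0, 100, 200, 300)])
```

Since exp(-r * p) = (e^(-r))^p, this traces the same curve as exponential_decay with its decay_rate set to exp(-decay_rate).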

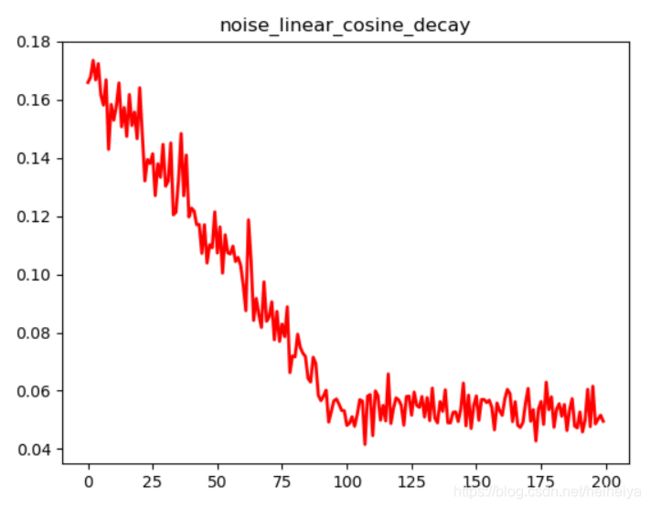

tf.train.noisy_linear_cosine_decay

Noisy linear cosine decay

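`tf.train.noisy_linear_cosine_decay(learning_rate, global_step, decay_steps, initial_variance=1.0, variance_decay=0.55, num_periods=0.5, alpha=0.0, beta=0.001, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the number of steps to decay over
- initial_variance: float, the initial variance of the noise
- variance_decay: float, the decay rate of the noise variance
- num_periods: float, the number of periods in the cosine part of the decay
- alpha: float, an offset added to the linear term of the decay
- beta: float, a constant added to the final decay factor
- name: string, the name of the operation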

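The learning rate is computed as:

$$
\begin{aligned}
\text{global\_step} &= \min(\text{global\_step},\ \text{decay\_steps})\\
\text{linear\_decay} &= \frac{\text{decay\_steps} - \text{global\_step}}{\text{decay\_steps}}\\
\text{cosine\_decay} &= 0.5\left(1 + \cos\left(\pi\cdot 2\cdot\text{num\_periods}\cdot\frac{\text{global\_step}}{\text{decay\_steps}}\right)\right)\\
\text{decayed} &= (\text{alpha} + \text{linear\_decay} + \varepsilon_t)\cdot\text{cosine\_decay} + \text{beta}\\
\text{decayed\_learning\_rate} &= \text{learning\_rate}\cdot\text{decayed}
\end{aligned}
$$

where $\varepsilon_t$ is zero-mean Gaussian noise with variance $\text{initial\_variance} / (1 + \text{global\_step})^{\text{variance\_decay}}$.

With the noise added, the learning rate fluctuates irregularly up and down.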
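A pure-Python sketch of the noisy variant is below; it is not TensorFlow's implementation. The `rng` argument is a hypothetical addition (not part of the TF signature) so results can be reproduced with a seed, and computing the noise variance from the clipped step is this sketch's assumption.

```python
import math
import random


def noisy_linear_cosine_decay(learning_rate, global_step, decay_steps,
                              initial_variance=1.0, variance_decay=0.55,
                              num_periods=0.5, alpha=0.0, beta=0.001,
                              rng=None):
    """Plain-Python sketch of noisy linear cosine decay (not TF code)."""
    # `rng` is a hypothetical extra argument for reproducible noise.
    if rng is None:
        rng = random.Random()
    step = min(global_step, decay_steps)
    linear = (decay_steps - step) / decay_steps
    # eps_t ~ N(0, initial_variance / (1 + step) ** variance_decay);
    # using the clipped step here is an assumption of this sketch.
    std = math.sqrt(initial_variance / (1.0 + step) ** variance_decay)
    eps_t = rng.gauss(0.0, std)
    cosine = 0.5 * (1.0 + math.cos(math.pi * 2 * num_periods * step / decay_steps))
    decayed = (alpha + linear + eps_t) * cosine + beta
    return learning_rate * decayed


# Assumed settings: learning_rate=0.1, decay_steps=1000, fixed seed.
rng = random.Random(42)
print([round(noisy_linear_cosine_decay(0.1, s, 1000, rng=rng), 5)
       for s in range(0, 1001, 100)])
```

Because the noise is multiplied by the cosine factor, it vanishes when that factor reaches zero at the end of the schedule.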


tf.train.piecewise_constant_decay

Piecewise constant decay

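`tf.train.piecewise_constant_decay(x, boundaries, values, name=None)`

- x: int or float, a 0-D scalar that plays the role of global_step in the other functions
- boundaries: list of ints or floats, strictly increasing; the step boundaries between intervals
- values: list of ints or floats giving the learning rate for each interval defined by boundaries; it must have exactly one more element than boundaries
- name: string, the name of the operation

The result is values[0] while x <= boundaries[0], values[i] while boundaries[i-1] < x <= boundaries[i], and values[-1] once x > boundaries[-1].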
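Since this schedule has no closed-form formula, a pure-Python sketch of the interval lookup may help; it is not TensorFlow's implementation, and `piecewise_constant` here is an ordinary Python function. The schedule (1.0 up to step 100, 0.5 up to step 200, then 0.1) is an assumed example.

```python
import bisect


def piecewise_constant(x, boundaries, values):
    """Plain-Python sketch of a piecewise-constant schedule (not TF code).

    Returns values[0] for x <= boundaries[0], values[i] for
    boundaries[i-1] < x <= boundaries[i], and values[-1] for x > boundaries[-1].
    """
    if len(values) != len(boundaries) + 1:
        raise ValueError("values needs exactly one more element than boundaries")
    if any(a >= b for a, b in zip(boundaries, boundaries[1:])):
        raise ValueError("boundaries must be strictly increasing")
    # bisect_left counts the boundaries strictly less than x, which is the
    # index of the interval that x falls into.
    return values[bisect.bisect_left(boundaries, x)]


# Assumed schedule: 1.0 up to step 100, 0.5 up to step 200, then 0.1.
for step in (0, 100, 101, 200, 201, 500):
    print(step, piecewise_constant(step, [100, 200], [1.0, 0.5, 0.1]))
```

Using `bisect_left` rather than `bisect_right` is what makes each boundary value belong to the interval on its left.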


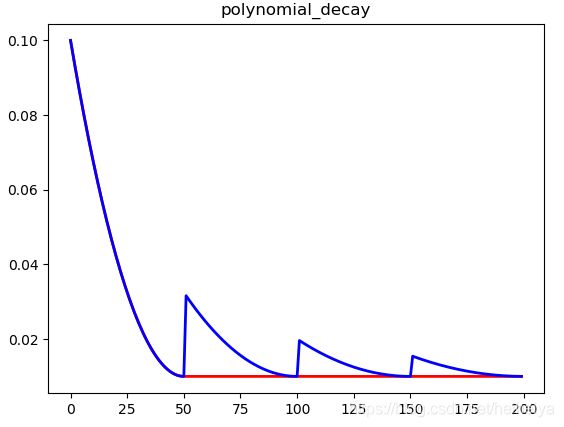

tf.train.polynomial_decay

Polynomial decay

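`tf.train.polynomial_decay(learning_rate, global_step, decay_steps, end_learning_rate=0.0001, power=1.0, cycle=False, name=None)`

- learning_rate: float, the initial learning rate
- global_step: int, the global step used to compute the decay
- decay_steps: int, the number of steps to decay over
- end_learning_rate: float, the minimum (final) learning rate
- power: float, the power of the polynomial
- cycle: bool, whether to start a new cycle after the rate has fully decayed
- name: string, the name of the operation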

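The learning rate is computed as:

$$
\begin{aligned}
\text{global\_step} &= \min(\text{global\_step},\ \text{decay\_steps})\\
\text{decayed\_learning\_rate} &= (\text{learning\_rate} - \text{end\_learning\_rate})\cdot\left(1 - \frac{\text{global\_step}}{\text{decay\_steps}}\right)^{\text{power}} + \text{end\_learning\_rate}
\end{aligned}
$$

If cycle is True, global_step is not clipped; instead decay_steps is first stretched to the next multiple of itself:

$$
\begin{aligned}
\text{decay\_steps} &= \text{decay\_steps}\cdot\left\lceil\frac{\text{global\_step}}{\text{decay\_steps}}\right\rceil\\
\text{decayed\_learning\_rate} &= (\text{learning\_rate} - \text{end\_learning\_rate})\cdot\left(1 - \frac{\text{global\_step}}{\text{decay\_steps}}\right)^{\text{power}} + \text{end\_learning\_rate}
\end{aligned}
$$

Red line: `cycle=False`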

Blue line: `cycle=True`