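Deploying and monitoring a distributed crawler project with scrapyd + scrapydweb (on a single machine)

1. Install and deploy scrapyd

System: CentOS 7.6

Install command:

pip3 install scrapyd (pip3 is used because both Python 2.7+ and Python 3.x are installed on this machine)

After the installation succeeds, create the configuration file: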

sudo mkdir /etc/scrapyd

sudo vim /etc/scrapyd/scrapyd.conf

Write the following into scrapyd.conf (this content comes from https://scrapyd.readthedocs.io/en/stable/config.html):

[scrapyd]

eggs_dir = eggs

logs_dir = logs

items_dir =

jobs_to_keep = 5

dbs_dir = dbs

max_proc = 0

max_proc_per_cpu = 10

finished_to_keep = 100

poll_interval = 5.0

bind_address = 0.0.0.0

http_port = 6800

debug = off

runner = scrapyd.runner

application = scrapyd.app.application

launcher = scrapyd.launcher.Launcher

webroot = scrapyd.website.Root

[services]

schedule.json = scrapyd.webservice.Schedule

cancel.json = scrapyd.webservice.Cancel

addversion.json = scrapyd.webservice.AddVersion

listprojects.json = scrapyd.webservice.ListProjects

listversions.json = scrapyd.webservice.ListVersions

listspiders.json = scrapyd.webservice.ListSpiders

delproject.json = scrapyd.webservice.DeleteProject

delversion.json = scrapyd.webservice.DeleteVersion

listjobs.json = scrapyd.webservice.ListJobs

daemonstatus.json = scrapyd.webservice.DaemonStatus

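The main settings to change:

bind_address = 0.0.0.0 (allows remote access)

max_proc = 0 (the maximum number of concurrent Scrapy processes; when set to 0, the limit is the number of CPUs multiplied by max_proc_per_cpu)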
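The max_proc rule can be checked with a few lines of Python. This is only a sketch of the rule as documented by scrapyd, not scrapyd's own code: the helper name effective_max_proc and the SAMPLE_CONF string are made up for illustration, and the fallback of 4 for max_proc_per_cpu is the default given in the scrapyd documentation.

```python
import configparser
import os

# Hypothetical excerpt of /etc/scrapyd/scrapyd.conf, using the values from this post.
SAMPLE_CONF = """
[scrapyd]
max_proc = 0
max_proc_per_cpu = 10
bind_address = 0.0.0.0
http_port = 6800
"""


def effective_max_proc(conf_text, cpu_count=None):
    """Return the process limit implied by max_proc and max_proc_per_cpu."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    section = parser["scrapyd"]
    max_proc = section.getint("max_proc", fallback=0)
    per_cpu = section.getint("max_proc_per_cpu", fallback=4)
    cpus = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    # 0 (or unset) means "derive the limit from the CPU count".
    return max_proc if max_proc > 0 else cpus * per_cpu


if __name__ == "__main__":
    print("effective process limit on this machine:", effective_max_proc(SAMPLE_CONF))
```

With the configuration above, a 2-core machine would therefore allow up to 20 concurrent Scrapy processes.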

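Running scrapyd starts it in the foreground; pressing Ctrl+C stops the scrapyd process.

Running scrapyd > /root/scrapyd.log & starts it in the background and writes its output to /root/scrapyd.log. (To stop it, find the process with ps -ef | grep scrapyd, then run kill -9 followed by the process ID.)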
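The same start/stop cycle can be sketched in Python with the standard subprocess module. The helper names start_in_background and stop are hypothetical. Unlike the shell command above, this sketch also redirects stderr into the log and tries SIGTERM before falling back to SIGKILL (the equivalent of kill -9); it assumes a POSIX system such as CentOS.

```python
import subprocess


def start_in_background(command, log_path):
    """Start `command` detached from the terminal, appending its output to log_path."""
    log_file = open(log_path, "ab")
    proc = subprocess.Popen(
        command,
        stdout=log_file,
        stderr=subprocess.STDOUT,   # assumption: keep errors in the same log
        start_new_session=True,     # detach from the controlling terminal (POSIX only)
    )
    log_file.close()  # the child process keeps its own copy of the descriptor
    return proc


def stop(proc, timeout=10):
    """Ask the process to exit with SIGTERM; use SIGKILL only if it does not exit in time."""
    proc.terminate()
    try:
        proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()
        proc.wait()
    return proc.returncode
```

For example, start_in_background(["scrapyd"], "/root/scrapyd.log") would mirror the command above, and passing the returned object to stop() replaces the manual ps and kill steps as long as the script that started the process is still running.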

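The ports must be opened in the QingCloud firewall so that the host can be reached through the API: port 6800 for scrapyd (the http_port configured above) and port 5000, the scrapydweb default.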
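Once the port is reachable, the endpoints listed in the [services] section can be called over HTTP. The sketch below uses only the Python standard library; the helper names api_url, daemon_status and schedule are hypothetical, and the host, project and spider values are placeholders to replace with your own.

```python
import json
import urllib.parse
import urllib.request


def api_url(host, endpoint, port=6800):
    """Build the URL of a scrapyd JSON endpoint, e.g. daemonstatus.json."""
    return f"http://{host}:{port}/{endpoint}"


def daemon_status(host, port=6800, timeout=5):
    """GET daemonstatus.json and return the decoded JSON response."""
    url = api_url(host, "daemonstatus.json", port)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def schedule(host, project, spider, port=6800, timeout=5):
    """POST to schedule.json to queue one run of `spider` from `project`."""
    data = urllib.parse.urlencode({"project": project, "spider": spider}).encode()
    url = api_url(host, "schedule.json", port)
    with urllib.request.urlopen(url, data=data, timeout=timeout) as resp:
        return json.load(resp)
```

Calling daemon_status("127.0.0.1") on the server itself is a quick way to confirm scrapyd is up before testing access from outside; schedule() only succeeds after a project has been deployed to scrapyd.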

Reference article: https://www.cnblogs.com/ss-py/p/9661928.html

2. Install and deploy scrapydweb

Install command:

pip install scrapydweb

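A configuration file is generated automatically on the first start:

Running scrapydweb starts it in the foreground; pressing Ctrl+C stops the scrapydweb process.

Running scrapydweb > /root/scrapydweb.log & starts it in the background and writes its output to /root/scrapydweb.log. (To stop it, find the process with ps -ef | grep scrapydweb, then run kill -9 followed by the process ID.)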

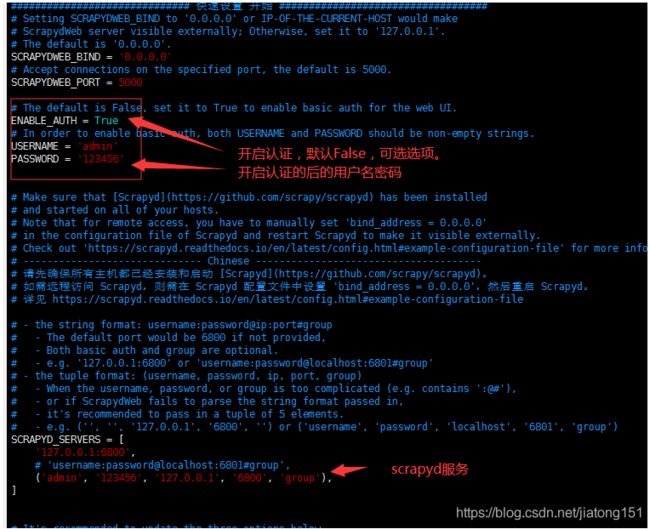

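Edit the configuration file: /usr/local/lib/python3.7/site-packages/scrapydweb/default_settings.py (after installation, the default configuration file is in the site-packages/scrapydweb/ directory of the Python installation)

Edit the configuration file: /usr/local/lib/python3.7/site-packages/scrapydweb/scrapydweb_settings_v10.py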
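The post does not list which settings were changed. As a rough guide only, for a setup where scrapyd and scrapydweb run on the same machine, the values below are the ones most likely to matter. They are assumptions based on the scrapyd configuration shown earlier, not the author's actual file.

```python
# Illustrative excerpt for scrapydweb_settings_v10.py (assumed values, not the original file).

# Address and port the scrapydweb UI listens on; 0.0.0.0 allows remote access.
SCRAPYDWEB_BIND = '0.0.0.0'
SCRAPYDWEB_PORT = 5000

# scrapyd instances to manage; here the single local instance on http_port 6800.
SCRAPYD_SERVERS = [
    '127.0.0.1:6800',
]
```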