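- HTML college website development assignment: traditional-culture web design theme [Ronghua (velvet flowers), 6 pages] HTML+CSS+JavaScript (1)

@码出未来-web网页设计

html, css, javascript

Featured column recommendations; contact details at the end of the article. About the author: a tech blogger who loves turning logical thinking into code. Author homepage: [Homepage: more high-quality source code]. Web front-end final projects: [Graduation project showcase (1000 sets)]. Fun ways for programmers to confess their love: [HTML Qixi Valentine's Day confession web pages (110 sets)]. Super-cool ECharts dashboard visualization source code: [ECharts big-screen big-data platform visualization (150 sets)]. HTML+CSS+JS example code: [HTML+CSS+JS example code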

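- Fine-tuning methods for large models: Delta-tuning

空 白II

large language models, paper walkthroughs, introduction to fine-tuning methods, fine-tuning methods, delta-tuning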

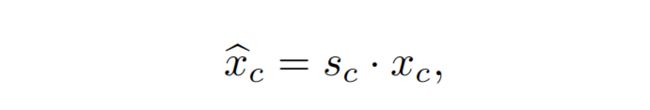

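Fine-tuning methods for large models: Delta-tuning. Since 2023, fine-tuning methods for large models have flourished. In August, a team from Zhejiang University uploaded a survey of large-model fine-tuning methods to arXiv. The paper groups fine-tuning methods into several categories, including …. This post discusses … 1. Business categories of large models: the large-model industry is currently flourishing, spanning natural language processing (NLP), computer vision (CV), audio processing, …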

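- Python regular expressions in depth: from basics to mastery

2201_75491841

python, regular expressions, programming languages

Python regular expressions in depth: from basics to mastery. 1. Introduction: text processing plays an extremely important role across Python programming. Regular expressions are a powerful Python tool for text processing. They help developers efficiently handle complex tasks such as finding, replacing, and extracting strings that match specific patterns. Regular expressions are especially effective in data cleaning, web scraping, log analysis, and natural language processing. This article takes a thorough look at Python regular expressions, starting from the most basic concepts…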
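As a minimal sketch of the three tasks the introduction names (finding, replacing, and extracting patterns), here is Python's standard `re` module applied to one sample string. The log line, the patterns, and the helper names are invented for illustration.

```python
import re

# Hypothetical log text used only for this illustration.
LOG = "2024-03-01 ERROR user=alice ip=10.0.0.7; 2024-03-02 INFO user=bob ip=10.0.0.9"

# Compile once and reuse; named groups make extraction readable.
ENTRY = re.compile(r"(?P<date>\d{4}-\d{2}-\d{2}) (?P<level>[A-Z]+) user=(?P<user>\w+)")


def find_users(text: str) -> list:
    """Find: every user name, in order of appearance."""
    return [m.group("user") for m in ENTRY.finditer(text)]


def mask_ips(text: str) -> str:
    """Replace: hide the last octet of every dotted IPv4-looking address."""
    return re.sub(r"(\d+\.\d+\.\d+)\.\d+", r"\1.x", text)


def extract_dates(text: str) -> list:
    """Extract: with one capturing group, findall returns that group's text."""
    return re.findall(r"(\d{4}-\d{2}-\d{2})", text)


if __name__ == "__main__":
    print(find_users(LOG))     # ['alice', 'bob']
    print(mask_ips(LOG))
    print(extract_dates(LOG))  # ['2024-03-01', '2024-03-02']
```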

- [HTML5 final project] A QQ Music official website clone built with HTML

IT-司马青衫

html, html5, course design

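- Collector's pick! A curated Java SpringBoot source-code collection on special offer, with how to get it

秋野酱

java, springboot, programming languages

Technical scope: SpringBoot, Vue, SSM, HTML, JSP, PHP, Node.js, Python, web scraping, data visualization, mini programs, Android apps, big data, IoT, machine learning, and related design and development. Main services: free feature design, proposal reports, task statements, mid-term review slides, system implementation, coding, thesis writing and tutoring, thesis similarity reduction, ongoing defense Q&A support, one-on-one professional defense coaching over Tencent Meeting, mock defense rehearsals, and help understanding the code logic. Contact details for the source code are at the end of the article.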

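- Xiaomi releases new smart glasses today; Apple CEO Tim Cook visits China and praises DeepSeek | Geek Headlines

CSDN资讯

AI

"Geek Headlines": the news circle for tech people! Hello, CSDN readers. Geek Headlines is here, so let's see which important stories today deserve our attention. Compiled by | Su Mi. Produced by | CSDN (ID: CSDNnews). The news in one minute! Xiaomi launches its new Mijia smart glasses today, billed as "glasses of precision". Unitree's Wang Xingxing on when household humanoid robots will reach the market: not within the next two or three years. SenseTime co-founder Xu Bing reportedly leaving; the company responds that it has not received a resignation so far. He Xiaopeng: expects to achieve L3 full-scenario autonomous driving in the second half of 2025.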

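- Code Caprice algorithm bootcamp, day 41 | hot 65/100 | 33. Search in Rotated Sorted Array, 153. Find Minimum in Rotated Sorted Array, 155. Min Stack, 394. Decode String

boguboji

problem practice, algorithms, leetcode, data structures

33. Search in Rotated Sorted Array. The array can take one of two shapes, such as [2,3,4,5,6,7,1] and [6,7,1,2,3,4,5]. When you split it in half, one of the two halves is always sorted. At each step, check whether the front half or the back half is sorted, and search only in the sorted half. Ignore the unsorted half: if the target is not there, the range is split in two again and the search continues in whichever half is sorted, so the target is eventually found. Note: the key to this problem is getting the boundary conditions right (which comparisons are <, <=, > or >=). The <= / >= cases are needed because, when searching for 1 in [2,1], we need…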
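The approach above fits in a few lines. This is a minimal Python sketch, assuming distinct values as in LeetCode 33. The `<=` in the sortedness check is the boundary condition the excerpt warns about: with `<`, searching for 1 in [2, 1] returns -1.

```python
def search(nums: list, target: int) -> int:
    """LeetCode 33: index of target in a rotated sorted array of distinct
    values, or -1 if it is absent. O(log n)."""
    left, right = 0, len(nums) - 1
    while left <= right:
        mid = (left + right) // 2
        if nums[mid] == target:
            return mid
        if nums[left] <= nums[mid]:
            # Left half [left, mid] is sorted. `<=` matters when left == mid,
            # e.g. searching for 1 in [2, 1].
            if nums[left] <= target < nums[mid]:
                right = mid - 1
            else:
                left = mid + 1
        else:
            # Otherwise the right half [mid, right] is sorted.
            if nums[mid] < target <= nums[right]:
                left = mid + 1
            else:
                right = mid - 1
    return -1
```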

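- Futu Securities C++ interview questions with reference answers

大模型大数据攻城狮

c++, java, backend, interviews, big-tech interviews, Epoll, smart pointers, database indexes

The difference between the heap and the stack in C++. In C++, the heap and the stack are two distinct memory regions that differ in many ways. Consider first how memory is allocated: the stack is allocated and freed automatically by the compiler. When a function is called, its local variables, parameters, and so on are pushed onto the stack, and their memory is released automatically when the function finishes. For example, in the following function: `void func() { int a = 5; /* a is stored on the stack; its stack space is released automatically when func returns */ }` The heap, by contrast, is manually allo… by the programmer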

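- CCF GESP programming proficiency certification: C++ Level 1, 2025-03-22

青岛少儿编程-王老师

#C++ Level 1, c++, java, algorithms

CCF GESP programming proficiency certification, C++ Level 1, 2025-03-22. Multiple-choice questions (2 points each, 30 points in total); true/false questions (2 points each, 20 points in total); programming questions (25 points each, 50 points in total): The Mouse in the Library; Rounding. Multiple-choice questions (2 points each, 30 points in total). 1. Two events during the 2025 Spring Festival caused a worldwide sensation: the sudden arrival of DeepSeek, and the New Year film Ne Zha 2, whose box office was strong enough to enter the global box-office rankings. Which of the following statements about DeepSeek and Ne Zha 2 is correct? ( ) A. Ne Zha 2 is a new kind of …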

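- Two pointers and binary search

打不了嗝

Lanqiao Cup, c++, algorithms

1. Two pointers. 1. Introduction: the two-pointer technique is an optimization of brute-force enumeration, also called the "ruler method" or sliding window. It applies when an algorithm seems to need two nested for loops and neither pointer ever has to move backward. In that case two pointers reduce the time complexity. 2. Worked example. Problem description: entrepreneur Emily has a cool idea: packaging snowflakes for sale. She invented a machine that catches falling snowflakes and packs them, one by one, into a package. Once a package is full, it is sealed and shipped for sale. Emily's company slogan is "pack the…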
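The excerpt stops before the task statement. The sketch below assumes the usual version of this snowflake problem: find the longest run of consecutive snowflakes in which no two are identical. Under that assumption it illustrates the two-pointer idea: `right` advances one step per iteration, and `left` only ever moves forward, so the whole scan is O(n). The function name is hypothetical.

```python
def longest_unique_run(flakes: list) -> int:
    """Length of the longest contiguous run with no repeated value."""
    last_seen = {}   # value -> most recent index where it appeared
    best = left = 0
    for right, value in enumerate(flakes):
        # If value already occurs inside the window [left, right), move left
        # just past that earlier occurrence; left never moves backward.
        if value in last_seen and last_seen[value] >= left:
            left = last_seen[value] + 1
        last_seen[value] = right
        best = max(best, right - left + 1)
    return best
```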

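- Compute rental: the "utilities" revolution of the AI era, with the NVIDIA 4090 as a case study in next-generation compute solutions

algorithm engineering, gpu

Introduction: when AI's demand for compute meets "compute hunger". In 2023, ChatGPT passed 100 million users in just 2 months, and Stable Diffusion turned ordinary people into artists in seconds. Behind this lies a staggering demand for compute: single training runs consuming more than 100,000 GB of memory, and models with hundreds of billions of parameters. As AI companies worldwide fall into "compute hunger", an innovative model called compute rental is reshaping the industry, growing 37% per year (MarketsandMarkets data). This article analyzes this revolutionary service in depth, focusing on the NVIDIA RTX 4…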

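- LeetCode 1092: Shortest Common Supersequence

迪小莫学AI

daily algorithms, leetcode, algorithms, careers and development

LeetCode 1092: Shortest Common Supersequence. Problem description: LeetCode 1092, Shortest Common Supersequence, is a Hard problem. Given two strings str1 and str2, return the shortest string that has both str1 and str2 as subsequences. If there are several answers, return any of them. Details: Input: str1, the first string, containing only lowercase English letters; str2, the second string, containing only lowercase English letters. Output: a shortest string such that str1 and str2 are both its sub…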
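The excerpt does not show the author's solution. A common approach is sketched below in Python as one possible method. It computes longest-common-subsequence (LCS) lengths with dynamic programming, then walks the table, emitting shared LCS characters once and all other characters individually. The result has length len(str1) + len(str2) - LCS.

```python
def shortest_common_supersequence(str1: str, str2: str) -> str:
    m, n = len(str1), len(str2)
    # dp[i][j] = length of the LCS of the suffixes str1[i:] and str2[j:]
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            if str1[i] == str2[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])

    # Merge: a character of the LCS is written once; others are written as-is.
    out = []
    i = j = 0
    while i < m and j < n:
        if str1[i] == str2[j]:
            out.append(str1[i])
            i += 1
            j += 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            out.append(str1[i])   # skipping str1[i] keeps the LCS length
            i += 1
        else:
            out.append(str2[j])
            j += 1
    out.append(str1[i:])          # whatever remains of either string
    out.append(str2[j:])
    return "".join(out)
```

For example, `shortest_common_supersequence("abac", "cab")` returns a string of length 5 such as "cabac".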

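- "Astro 3.0 Islands Architecture in Practice: Building a Million-PV Content Site with 'Zero JS'"

前端极客探险家

architecture, javascript, programming languages

Contents: 1. The performance bind of traditional content sites; 1.1 Performance survey of enterprise projects (N = 200+); 1.2 Astro's core advantage matrix; 2. Building a high-performance content site in ten minutes; 2.1 Project initialization; 2.2 Core configuration files; 3. Six enterprise scenarios in practice; 3.1 Scenario 1: mixing components from multiple frameworks; 3.2 Scenario 2: interaction-enhanced Markdown; 4. Performance optimization in depth; 4.1 Before/after comparison; 4.2 Key optimization strategies; 5. Enterprise architecture; 5.1 Content-site tech stack; 5.2 Handling traffic spikes; 6. Debugging and monitoring; 6.1 Perf…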

- Paper translation: ChatGPT: Bullshit spewer or the end of traditional assessments in higher education?

CSPhD-winston-杨帆

smart education, paper translation, chatgpt

ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? https://journals.sfu.ca/jalt/index.php/jalt/article/download/689/539/3059 Contents: ChatGPT: bullshit spewer or the end of traditional assessments in higher education?; Abstract; Introduction; ChatGPT's capabilities; ChatGPT and teach…

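- Code Caprice algorithm bootcamp, Day 19 | LeetCode 77 Combinations, 216 Combination Sum III, 17 Letter Combinations of a Phone Number

今天也要早睡早起

Code Caprice algorithm bootcamp, follow-along, algorithms, leetcode, c++, data structures, recursion, backtracking

Theory: backtracking is essentially exhaustive search, i.e. brute force, and it is built on recursion. Every problem solved with backtracking can be modeled as a tree. Backtracking recursively searches for subsets of a set: the size of the set is the tree's width, and the recursion depth is the tree's depth (cr. Code Caprice). Applications: backtracking is generally used for the following kinds of problems (cr. Code Caprice). Combination problems: find sets of k numbers among N numbers according to some rule. Partitioning problems: count the ways a string can be cut according to some rule. Subset problems: in a set of N numbers, how many…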
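To make the tree picture concrete, here is a minimal Python sketch for LeetCode 77 (combinations). Each loop iteration picks a child, which sets the tree's width; each recursive call goes one level deeper, which sets its depth. `path.pop()` is the "backtrack" step. The pruning bound is a common optional optimization and is not taken from the excerpt.

```python
def combine(n: int, k: int) -> list:
    """LeetCode 77: all k-element combinations of 1..n via backtracking."""
    result = []
    path = []

    def backtrack(start: int) -> None:
        if len(path) == k:           # reached a leaf: one full combination
            result.append(path.copy())
            return
        need = k - len(path)         # numbers still to choose
        # Pruning: the last useful start value is n - need + 1.
        for i in range(start, n - need + 2):
            path.append(i)           # choose (go down one level)
            backtrack(i + 1)
            path.pop()               # un-choose (backtrack)

    backtrack(1)
    return result
```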

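- Python's indentation rules for if-else

宇寒风暖

python programming, python, programming languages, study notes

In Python, indentation is part of the syntax: it marks the hierarchy of code blocks. The blocks of an if-else statement must be delimited by indentation, and incorrect indentation leads to a syntax error or a logic error. 1. Basic rules of indentation. 1.1 What indentation does: it expresses the hierarchy of code blocks. All statements in the same block must have the same indentation level. Indentation usually uses 4 spaces, the style officially recommended for Python (PEP 8). 1.2 Example:

x = 10
if x > 5:
    print("x is greater than 5")  # indented 4 spaces
    print("This is the if co…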
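A small runnable check of the rules above. The first function shows two statements sharing one indentation level inside the `if` block. The second uses the built-in `compile()` to show that a missing indent is rejected with `IndentationError` (a subclass of `SyntaxError`) before any code runs. Both function names are illustrative.

```python
def classify(x: int) -> str:
    if x > 5:
        # Both statements share one indentation level, so both belong to the if block.
        label = "x is greater than 5"
        label += " (if block)"
    else:
        label = "x is at most 5"
    return label


def check_indentation(source: str) -> str:
    """Compile source text and report whether its indentation is valid."""
    try:
        compile(source, "<demo>", "exec")
        return "ok"
    except IndentationError as err:   # raised at compile time, not at run time
        return f"IndentationError: {err.msg}"


print(classify(10))
print(check_indentation("if True:\npass\n"))   # the body is not indented
```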

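- "Essential for Java Developers: A Hands-on Guide to jstat, jmap, and jstack": mastering the JVM monitoring trio from scratch

admin_Single

java, jvm, programming languages

"Essential for Java Developers: A Hands-on Guide to jstat, jmap, and jstack": mastering the JVM monitoring trio from scratch. Contents: Summary; Core tools and scenarios; Key practices; Diagnostic workflow; Tool-selection decision table; Tuning principles; Future trends; Chapter 1: GC basics: how garbage collection relates to monitoring; 1.1 "Waste sorting" in the memory world: GC …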

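- [Proposal + thesis + source code] A flower shopping mall built on SpringBoot + Vue

编程毕设

springboot, backend, java

Project background and significance: in recent years, as living standards have risen, demand for flowers has grown year after year. However, traditional flower retail suffers from high store rents, high labor costs, unstable supply, and similar problems. These make it hard for merchants to grow steadily and sustainably in a fiercely competitive market. Traditional florists may also be far away, so consumers have to spend time going from shop to shop to choose. Online shoppers, by contrast, can browse and order the flowers they need through a website [2]. An online flower shopping system can therefore remove the geographic limits customers face when buying flowers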

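- Essential knowledge for product managers, web design series (2): how to design an excellent interface

文宇肃然

product operations, course series, quick learning, hands-on application, interface design, product design, product managers, web design

Preface: for part 1, see "Essential knowledge for product managers, web design series (1): creating a great user experience" https://blog.csdn.net/wenyusuran/article/details/108199875. For part 3, see "Essential knowledge for product managers, web design series (3): mobile adaptation, accessibility design and testing" https://wenyusuran.blog.csdn.net/article/details/108199947. When designers and developers build…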

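- [Transport-layer protocols] TCP explained (Part 1)

望舒_233

Linux networking, tcp/ip, networking, servers

Preface: TCP (Transmission Control Protocol) is the core protocol of the TCP/IP stack. As the cornerstone of Internet communication, it is responsible for reliable data delivery. Over two articles in four parts, I will walk through some basic TCP concepts and mechanisms. First come the meanings of the TCP header fields, then the mechanisms TCP uses to guarantee reliability, then TCP's efficiency optimizations, and finally the TCP concepts related to the application layer. This arti…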
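The first part covers the TCP header fields, and one way to see them is to decode a header by hand. This hedged sketch, not taken from the article, unpacks the fixed 20-byte TCP header with Python's `struct` module. It follows the layout in RFC 793 (since updated by RFC 9293). The function name and dictionary keys are illustrative, and only the six original control bits are decoded.

```python
import struct

# Bits 0..5 of the flags field, lowest bit first (ECN/NS bits are ignored here).
TCP_FLAGS = ["FIN", "SYN", "RST", "PSH", "ACK", "URG"]


def parse_tcp_header(segment: bytes) -> dict:
    """Decode the fixed 20-byte part of a TCP header (network byte order)."""
    if len(segment) < 20:
        raise ValueError("need at least 20 bytes")
    (src_port, dst_port, seq, ack,
     offset_flags, window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "seq": seq,                                   # sequence number
        "ack": ack,                                   # acknowledgment number
        "header_len": (offset_flags >> 12) * 4,       # data offset is in 32-bit words
        "flags": [name for bit, name in enumerate(TCP_FLAGS) if offset_flags & (1 << bit)],
        "window": window,                             # receive window (flow control)
        "checksum": checksum,
        "urgent_ptr": urgent,
    }


# A hand-built SYN segment header: 5 words (20 bytes), SYN bit set.
syn = struct.pack("!HHIIHHHH", 12345, 80, 1000, 0, (5 << 12) | 0x02, 65535, 0, 0)
print(parse_tcp_header(syn)["flags"])   # ['SYN']
```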

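- TS variable declarations and syntax details: a TS beginner learning as I go

菥菥爱嘻嘻

beginner learning, ts, typescript, frontend

TypeScript. Projects written in JS are quick to develop but expensive to maintain, and JS doesn't report errors, argh!!! TypeScript builds on JS and adds type syntax to variables; this post covers the syntax and hands-on use (ts + vue3). TypeScript is a superset of JavaScript and supports the ECMAScript 6 standard (ES6 tutorial). It is a free, open-source programming language developed by Microsoft that adds static type checking on top of JavaScript. TypeScript's design…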

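- The 5th MathorCup Mathematical Application Challenge (2024), big data competition: approach and code, updates in progress...

宇哥预测优化代码学习

1024 Programmers' Day

Welcome to this blog! Blogger's strengths: posts aim to be rigorous in thought and clear in logic, for the reader's convenience. Motto: on a hundred-li journey, ninety li is only halfway. Contents: Graduate contest overview; 1. Background and purpose; 2. Organizers and eligible participants; 3. Schedule and process; 4. Requirements and rules; 5. Awards and prizes; 6. Tips for writing the research paper; 7. References and resources: 1. Recommended sites for finding code; 2. Equation editors, flowcharts, paper typesetting; 3. 2024 graduate contest resource downloads; 4. Approaches, Python and Matlab code sharing......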

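- [Unity network synchronization frameworks: a study of Nakama]

归海_一刀

Unity, game engines, Nakama, network synchronization

Unity network synchronization frameworks: a study of Nakama. Introduction: suppose you were asked to research Unity network synchronization frameworks and write a report on them. How would you go about it? I know: with so much AI around now, I'd open DeepSeek right away for a lightning-fast search. So here is part of the feedback it gave on the topic. Mirror. Performance: performs well and suits small to medium-sized multiplayer games. Ease of use: a low learning curve, especially for developers with UNet experience. Feature completeness: fairly comprehensive features, but limited extensibility. …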

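- [Problems] 2024 MathorCup Mathematical Application Challenge: Problem D released

睿森竞赛

mathematical modeling, MathorCup, Mathematical Application Challenge

The 2024 MathorCup Mathematical Application Challenge has officially begun!!! Problem D, "Modeling applications of quantum computing in mining equipment configuration and operations", has been released. We will share solution approaches, references, complete papers, and runnable code for every problem free of charge, to help everyone pick the problem that suits them best as quickly as possible. Good luck to everyone, go, go, go!!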

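- A 10,000-word deep dive: why did DeepSeek-V3 become the "speed king" of the large-model era?

羊不白丶

large models, algorithms

Introduction: in 2024, with the AI arms race at fever pitch, DeepSeek-V3 stunned the industry with its inference speed: 3× faster inference than its predecessor and 70% lower training cost. Behind this is a combination of more than ten breakthrough techniques; this article uncovers the performance secrets of this "AI supercar". DeepSeek-V3's technical path shows that computational efficiency is, at its core, the efficiency of knowledge organization. In its MoE architecture, 2048 experts collaborate dynamically, much like the modular operation of the human brain's neural networks. Each expert is no longer a "laborer" passively executing computation but…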

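- Luogu P3228 [HNOI2013] Sequence

syzyc

number theory, solutions, combinatorics, modular arithmetic

Problem link. Preface: the hardest part of this problem is seeing that [constructing an increasing sequence] can be turned into [constructing a difference sequence]. I certainly didn't see it myself, so I read part of a solution. Even with that idea, after a lot of derivation I only managed the 30-point brute-force formula and code. Reading the full solution made it all click, so I decided to write up both the brute-force and the intended approach. Overall approach: as the preface says, define the difference array $d_i$ of each day's stock price increase, where $d_i \in [1, m]$. Assu…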
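The excerpt stops right after introducing $d_i$. This sketch assumes the standard statement of P3228: K days, each day's increase is an integer in [1, M], every price stays within [1, N], M(K-1) < N is guaranteed, and the count is taken modulo P. For a fixed difference sequence, the valid starting prices number $N - \sum d_i$. Summing over all $M^{K-1}$ sequences, where each $d_i$ takes each value in $[1, M]$ exactly $M^{K-2}$ times, gives

$$\text{ans} = N \cdot M^{K-1} - (K-1)\, M^{K-2} \cdot \frac{M(M+1)}{2} \pmod{P}.$$

The Python check below compares this closed form against direct enumeration on tiny inputs. Both function names are hypothetical.

```python
from itertools import product


def count_closed_form(n: int, k: int, m: int, p: int) -> int:
    """Closed form for the sum over difference sequences of (n - sum(d)).
    Assumes k >= 2 and m * (k - 1) < n."""
    total = pow(m, k - 1, p) * n % p
    # Each d_i takes each value in [1, m] in exactly m^(k-2) sequences.
    # Python integers are exact, so m*(m+1)//2 needs no modular inverse of 2.
    sum_of_sums = (k - 1) * pow(m, k - 2, p) % p * (m * (m + 1) // 2 % p) % p
    return (total - sum_of_sums) % p


def count_brute_force(n: int, k: int, m: int) -> int:
    """Enumerate every difference sequence directly (tiny inputs only)."""
    return sum(n - sum(d) for d in product(range(1, m + 1), repeat=k - 1))
```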

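- k8s: calling services outside the cluster from pods inside the cluster

IT艺术家-rookie

k8s and docker, container technology, kubernetes, containers, cloud native

This post looks at how pods of a Kubernetes service can reach a service running on a computer in the same LAN. Possible solutions include ClusterIP, NodePort, headless Services, HostNetwork, ExternalIPs, or using the pod network directly. Each method suits different scenarios and needs to be analyzed one by one. For example, ClusterIP is the default and is reachable only inside the cluster, so another approach may be needed. NodePort, on every node, …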

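- How frontend requests reach the backend: an in-depth analysis and practical guide

dhfnngte24fhfn

python, django, pygame, virtualenv

How frontend requests reach the backend: an in-depth analysis and practical guide. In web development, communication between the frontend and the backend is essential. The frontend sends requests to fetch data from the backend or to trigger operations, and the backend processes those requests and returns responses. Over several sections, this article examines in depth how frontend requests are sent to the backend and offers practical guidance. Part one: the basics of requests and responses. First, we need to understand the basic concepts of frontend requests and backend responses. The frontend sends requests to the backend over HTTP, and the back…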
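The request/response cycle described above can be reproduced on a single machine. This sketch uses only Python's standard library. A throwaway `http.server` stands in for the backend, and `urllib.request` plays the frontend's role, which in a browser would fall to `fetch` or `XMLHttpRequest`. The handler, the `/api/hello` path, and the JSON body are illustrative assumptions.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Toy backend: answers any GET with a small JSON body."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "message": "hello"}).encode()
        self.send_response(200)                                   # status line
        self.send_header("Content-Type", "application/json")      # headers
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)                                    # response body

    def log_message(self, *args):  # keep the demo quiet
        pass


def round_trip():
    server = HTTPServer(("127.0.0.1", 0), EchoHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/api/hello"
        # Client side: build an HTTP GET request and read the response.
        req = urllib.request.Request(url, headers={"Accept": "application/json"})
        with urllib.request.urlopen(req, timeout=5) as resp:
            return resp.status, json.loads(resp.read())
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(round_trip())
```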

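- Advanced Java: arrays in exhaustive detail

1加1等于

Java, data structures

Arrays are a basic and important data structure used in all kinds of scenarios. This article explores Java arrays in depth and shows how they are used in practice. Contents: 1. Declaring and initializing arrays (1. Declaration; 2. Initialization; 3. Length); 2. Memory management; 3. Traversal and operations (1. Traversal; 2. Filling); 4. Multidimensional arrays; 5. The Arrays utility class; 6. Converting between arrays and collections (1. Array to collection; 2. Collection to array); Summary. 1. Declaring and initializing arrays. 1. Declaration: an array can be declared in two ways: `int[] prod…`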

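- The MCS-51 instruction set and assembly programming

cxz204986

8051 microcontrollers

1. The MCS-51 instruction set contains 111 basic instructions. An instruction is a command that makes the CPU perform an operation as intended; it is written as a mnemonic, an English name or abbreviation. Mastering the MCS-51 assembly instructions is the foundation of assembly programming for the 8051. By size in bytes, MCS-51 instructions fall into three groups: (1) 49 single-byte instructions; (2) 45 two-byte instructions; (3) 17 three-byte instructions. By execution time, they fall into three groups: (1) 64 one-machine-cycle instructions; (2) 45 two-machine-cycle instructions; (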

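- The Java concurrency package: thread pools and atomic counters

lijingyao8206

Java, counting, ThreadPool, concurrency package, thread pools

For business logic that joins large volumes of data, the natural idea is to use the JDK's concurrency package to process the data on multiple threads. A thread pool provides a good way to manage threads and improves thread utilization. The package's atomic counters let us avoid writing synchronization code ourselves in multithreaded code.

Here I'll start with the JDK concurrency package's thread pool executor ThreadPoolExecutor, the atomic counter class AtomicInteger, and the countdown latch C…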
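For readers outside Java, here is a rough Python analog of the same pattern: a pool of worker threads plus a shared counter that the tasks never synchronize by hand. This is not the JDK API. Python has no built-in `AtomicInteger`, so `AtomicCounter` is a hypothetical lock-based stand-in. Leaving the `with` block waits for all tasks, which plays a role similar to a countdown latch reaching zero.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class AtomicCounter:
    """Lock-based stand-in for Java's AtomicInteger (hypothetical helper)."""

    def __init__(self, value: int = 0):
        self._value = value
        self._lock = threading.Lock()

    def increment_and_get(self) -> int:
        with self._lock:
            self._value += 1
            return self._value

    def get(self) -> int:
        with self._lock:
            return self._value


def process_all(records: list, workers: int = 4) -> int:
    """Process records on a thread pool; return how many were processed."""
    processed = AtomicCounter()

    def handle(record: int) -> None:
        _ = record * 2                 # placeholder for real business logic
        processed.increment_and_get()  # no explicit locking in task code

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() drains the results, so any exception in a task is re-raised here.
        list(pool.map(handle, records))
    return processed.get()
```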

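- Thinking in Java: abstract classes and interfaces

百合不是茶

java, abstract classes, interfaces

Interfaces: C++ supports interfaces and inner classes only indirectly, while Java supports them directly.

1. Abstract classes: if a class contains one or more abstract methods, the class itself must be declared abstract (otherwise the compiler reports an error).

Abstract method: a method that has only a declaration and no body.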

package com.wj.Interface;
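For comparison, and as an analogy rather than part of the original Java post, Python's `abc` module enforces the same rule. A class with an unimplemented abstract method cannot be instantiated. Where the Java compiler rejects such code at compile time, Python raises `TypeError` when you try to create the object. `Instrument` and `Wind` are hypothetical names.

```python
from abc import ABC, abstractmethod


class Instrument(ABC):
    """Abstract: play() is declared without a body, so this class can't be instantiated."""

    @abstractmethod
    def play(self, note: str) -> str:
        ...

    def describe(self) -> str:          # abstract classes may still have concrete methods
        return f"{type(self).__name__} instrument"


class Wind(Instrument):
    def play(self, note: str) -> str:   # providing the body makes Wind concrete
        return f"Wind.play() {note}"


print(Wind().play("C"))                 # Wind.play() C
```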

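- [Real estate and big data] A real-estate data-mining system

comsci

data mining

With the breakthrough in one key core technology, we can now develop certain advanced modules independently, but a complete implementation will still take some time...

So besides coding, we also need to pay some attention to events outside the technical field... real estate, for example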

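- Array queues: a summary

沐刃青蛟

array queue

An array queue is an array-like tool whose size can change and whose element type is not fixed in advance. It is more flexible than a plain array: you don't have to worry about out-of-bounds errors, and thanks to generics (similar to templates in C++) it supports all kinds of element types.

Here is the code implementing the array queue: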

import List.Student;

public class
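The Java implementation is cut off here, so its growth strategy is not shown. As a hedged Python sketch of the same idea, the class below is a generic, growable array-backed container. When the raw array is full, it copies the contents into one twice as large; that doubling is an assumed design choice, not the author's. Callers therefore never hit an out-of-bounds error on `add`. The class and method names are hypothetical.

```python
from typing import Generic, TypeVar

T = TypeVar("T")


class ArrayQueue(Generic[T]):
    """Growable, generic array-backed container (the 'array queue' idea)."""

    def __init__(self, capacity: int = 4):
        self._data: list = [None] * max(1, capacity)   # the raw fixed-size array
        self._size = 0

    def add(self, item: T) -> None:
        if self._size == len(self._data):              # full: grow by copying
            bigger = [None] * (2 * len(self._data))
            bigger[: self._size] = self._data
            self._data = bigger
        self._data[self._size] = item
        self._size += 1

    def get(self, index: int) -> T:
        if not 0 <= index < self._size:
            raise IndexError(index)
        return self._data[index]

    def remove(self, index: int) -> T:
        item = self.get(index)
        # Shift the tail left by one slot to close the gap.
        self._data[index : self._size - 1] = self._data[index + 1 : self._size]
        self._size -= 1
        self._data[self._size] = None
        return item

    def __len__(self) -> int:
        return self._size
```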

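- Fixing Oracle stored procedures that fail to compile

IT独行者

oracle, stored procedures

Today a colleague modified some Oracle stored procedures and once again left 2 procedures unable to compile. I (Dave) won't say much here about process and conventions; let's look at how to solve the problem.

1. Check for invalid objects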

XEZF@xezf(qs-xezf-db1)> select object_name,object_type,status from all_objects where status='IN

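- Recovering Oracle after reinstalling the OS

文强chu

oracle

A few days ago I was using my computer without having stopped the various Oracle services.

Suddenly Windows 8.1 crashed and could not be repaired. On every boot it said it was collecting error information and then restarted, looping endlessly.

With no other option, I got out my system USB drive to reinstall, but it unexpectedly failed to boot from the USB drive.

In the evening I found a computer repair shop and had them install a system, and before I could react, the guy

formatted my C drive outright, cleaned the registry, and then installed the system.

The result was that my oracl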

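- Learning Python, part 2 (some basic syntax)

小桔子

python, basic syntax

Picking up right where I left off! I continued the Django official tutorial I didn't get to yesterday and learned Python's basic syntax, which differs a little from C-like languages:

1. Python source files are encoded in UTF-8 (the default in Python 3)

2.

/ division; the result is a float

// floor division; the result is an integer when both operands are integers

% modulo: the remainder

* multiplication

** exponentiation, e.g. 5**2 is 5 to the power of 2, i.e. 25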
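Since the note contrasts Python with C-like languages, here is a quick check of the listed operators. The negative-operand lines are an addition. They show where the languages differ: Python's `//` floors toward negative infinity and `%` takes the sign of the divisor, whereas C99 truncates toward zero.

```python
def operator_demo() -> dict:
    return {
        "7 / 2": 7 / 2,         # true division: always a float -> 3.5
        "7 // 2": 7 // 2,       # floor division, int operands -> int 3
        "-7 // 2": -7 // 2,     # floors toward -infinity -> -4 (C gives -3)
        "7.0 // 2": 7.0 // 2,   # a float operand makes the result a float -> 3.0
        "7 % 2": 7 % 2,         # remainder -> 1
        "-7 % 2": -7 % 2,       # sign follows the divisor -> 1 (C gives -1)
        "7 * 2": 7 * 2,         # multiplication -> 14
        "5 ** 2": 5 ** 2,       # exponentiation -> 25
    }


for expr, value in operator_demo().items():
    print(f"{expr:>9} = {value!r}")
```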

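- Common svn commands

aichenglong

SVN, rolling back versions

1. Rolling back to an earlier svn version

1) In Windows, open the log and, depending on what you want to roll back, choose "revert this version" or "revert changes from this version"

The difference between the two:

revert this version: roll back to the selected version (all versions after it are discarded)

revert changes from this versio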

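- Back from an interview at a small company

alafqq

interviews

First I was asked to fill in a form and do a written test. Pressed for time, I declined. Time is precious.

They keep trying to scare me with the stuff they use on fresh graduates...

The interviewer was tough. Here are a few of the questions he asked:

1. Access scopes... public, private, protected. -- I blew this one.

2. The difference between the hashCode and equals methods, and which one overrides which. He said I had it backwards.

3. The most annoying question: can an abstract class extend an abstract class? (Come on, abstract classes are usually the ones being extended!)

4. stru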

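- Comparing the storage speed of dynamic arrays: the collections framework

百合不是茶

collections framework

Collections framework:

A custom data structure (add, delete, update, query, etc.)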

package 数组;

/**

* Creates a dynamic array

* @author 百合

*

*/

public class ArrayDemo{

// Array that stores the data

String[] src = new String[0];

/**

* Adds an element to the container

* @param s the element to add to the container
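`ArrayDemo` starts from `new String[0]`, and the title compares storage speed, but the add method is cut off. The sketch assumes it grows by allocating a new array one slot larger on every add, a common teaching version. It counts how many element copies that costs compared with doubling the capacity when full. Both function names are hypothetical.

```python
def copies_grow_by_one(n: int) -> int:
    """Element copies when every add allocates an array of size + 1
    and copies the old contents over (assumed strategy)."""
    copies, capacity, size = 0, 0, 0
    for _ in range(n):
        if size == capacity:
            copies += size       # move every existing element
            capacity += 1
        size += 1
    return copies


def copies_doubling(n: int) -> int:
    """Element copies when a full array is replaced by one twice as large."""
    copies, capacity, size = 0, 1, 0
    for _ in range(n):
        if size == capacity:
            copies += size
            capacity *= 2
        size += 1
    return copies


print(copies_grow_by_one(1000), copies_doubling(1000))   # 499500 1023
```

Growing by one costs n(n-1)/2 copies in total, O(n²). Doubling costs fewer than 2n copies, O(n) total or O(1) amortized per add. This gap is the main reason library dynamic arrays grow geometrically.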

- Implementing a JS object with two properties and one method

bijian1013

js objects

<html>

<head>

</head>

<body>

Implement a JS object in JS code: the object has two properties and one method

</body>

<script>

var obj={a:'1234567',b:'bbbbbbbbbb',c:function(x){

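- Exploring JUnit4 extensions: using Rule

bijian1013

java, unit testing, JUnit, Rule

The previous article extended JUnit4 with a Runner, by directly modifying the Test Runner implementation (BlockJUnit4ClassRunner). That approach clearly makes it inconvenient to add or remove extensions flexibly. Below, we implement the same extension with Rule, an extension mechanism introduced in JUnit 4.7.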

1. Rule

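- [Gson 1] Deserializing non-generic POJOs

bit1129

POJO

To deserialize a JSON string into a POJO that is not itself generic, use the Gson.fromJson(String, Class) method. POJOs that are not themselves generic come in two kinds:

1. The POJO has no generic fields

2. The POJO has generic fields, such as generic collections or generic classes

The Data class: a. is not a generic class; b. its List and Map fields are generic; c. it contains no other POJOs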

- [Kafka 5] Basic use of the Kafka Producer and Consumer

bit1129

kafka

0. Kafka server configuration

One broker

One topic

Only one partition in the topic

1. Producer:

package kafka.examples.producers;

import kafka.producer.KeyedMessage;

import kafka.javaapi.producer.Producer;

impor

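- A guide to setting up real-time sync with lsyncd, replacing rsync + inotify

ronin47

1. Comparing the main real-time sync tools

1.1 inotify + rsync

Recently I have been looking for a replacement sync solution for our production servers. We had been using inotify + rsync, but once the number of files grew past 1,000,000, the file list for the directory alone reached 20 MB. When the network is poor or rate-limited, the changed files might be just ten or so, a few MB in total. Yet a 20 MB file list still has to be sent, which badly reduces bandwidth utilization and sync efficiency. More importantly, adding inotify…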

- java-9. Determine whether an integer sequence is the post-order traversal of a binary search tree

bylijinnan

java

public class IsBinTreePostTraverse{

static boolean isBSTPostOrder(int[] a){

if(a==null){

return false;

}

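/* 1. With only one node, it is certainly a BST

* 2. With only two nodes, it is also certainly a BST. For example, {5,6} corresponds to the BST 6 {6,5} corresponds to the BST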
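The Java method is truncated after its base cases. Below is a Python sketch of the usual recursive check, assuming distinct keys. In a post-order sequence the last element is the root. The elements before it must split into a prefix of smaller keys (the left subtree) followed by larger keys (the right subtree). The same test then applies recursively to both parts. The function name is hypothetical.

```python
def is_bst_postorder(seq) -> bool:
    """True if seq can be the post-order traversal of a BST with distinct keys."""
    if seq is None:                 # mirrors the Java version's null check
        return False

    def check(lo: int, hi: int) -> bool:    # inclusive bounds
        if lo >= hi:                # 0 or 1 node: always a valid BST
            return True
        root = seq[hi]
        i = lo
        while i < hi and seq[i] < root:     # left subtree: keys < root
            i += 1
        for j in range(i, hi):              # right subtree: all keys must be > root
            if seq[j] < root:
                return False
        return check(lo, i - 1) and check(i, hi - 1)

    return check(0, len(seq) - 1)
```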

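- The return type of MySQL's SUM function

bylijinnan

java, spring, sql, mysql, jdbc

Today, when the project switched databases, an error occurred.

The database access code looks roughly like this: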

String sql = "select sum(number) as sumNumberOfOneDay from tableName";

List<Map> rows = getJdbcTemplate().queryForList(sql);

for (Map row : rows

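- Java design patterns: the singleton pattern

chicony

java, design patterns

Dr. Yan Hong's book Java and Patterns (《JAVA与模式》) opens its description of the singleton pattern like this:

As an object creational pattern, the singleton pattern ensures that a class has only one instance, creates that instance itself, and provides it to the whole system. Such a class is called a singleton class. Structure of the singleton pattern

Characteristics of the singleton pattern:

A singleton class can have only one instance.

A singleton class must create its own unique instance.

A singleton class must provide this instance to all other objects.

Eager ("hungry-style") singleton class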

publ
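The Java code breaks off at this point. A rough Python analog of the eager ("hungry-style") variant is sketched below. The single instance is created once at module load, before anyone asks for it. Python has no private constructors, so direct construction after that point is refused with a runtime error. `EagerSingleton` and `get_instance` are hypothetical names; in idiomatic Python a plain module-level object usually does this job.

```python
class EagerSingleton:
    """Eager singleton analog: the one instance is built at import time."""

    _instance = None

    def __init__(self):
        # Stand-in for Java's private constructor: refuse a second instance.
        if EagerSingleton._instance is not None:
            raise RuntimeError("use EagerSingleton.get_instance()")
        self.created = True

    @classmethod
    def get_instance(cls) -> "EagerSingleton":
        return cls._instance


# Eager initialization: runs once when the module is loaded.
EagerSingleton._instance = EagerSingleton()
```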

- JavaScript: getting the last day of the current month

ctrain

JavaScript

<!--javascript: get the last day of the current month-->

<script language=javascript>

var current = new Date();

var year = current.getFullYear(); // getYear() is deprecated and returns the year minus 1900

var month = current.getMonth();

showMonthLastDay(year, mont
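The body of `showMonthLastDay` is cut off. A common JavaScript idiom, not necessarily the author's, is `new Date(year, month + 1, 0).getDate()`: day 0 of the following month is the last day of the current one. For comparison, Python's standard library exposes the same information directly through `calendar.monthrange`. Note that Python months are 1 to 12, while JavaScript's `getMonth()` is 0-based.

```python
import calendar
import datetime
from typing import Optional


def month_last_day(year: int, month: int) -> int:
    """Last day (28-31) of `month`, where month is 1-12."""
    # monthrange returns (weekday of the 1st, number of days in the month).
    return calendar.monthrange(year, month)[1]


def current_month_last_day(today: Optional[datetime.date] = None) -> int:
    """Last day of the month containing `today` (defaults to the real date)."""
    today = today or datetime.date.today()
    return month_last_day(today.year, today.month)


print(month_last_day(2024, 2))   # 29 (leap year)
```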

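- The Linux tune2fs command explained

daizj

linux, tune2fs, viewing file system block information

1. Introduction:

tune2fs adjusts and displays the tunable parameters of ext2/ext3 file systems. On Windows, after an unexpected power loss or crash, the system usually runs a disk check on the next boot. Linux also checks its file systems, and the tune2fs command lets you define the check interval and behavior yourself.

2. Usage: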

Usage: tune2fs [-c max_mounts_count] [-e errors_behavior] [-g grou

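- Being a programmer with Chinese characteristics

dcj3sjt126com

programmers

Let's start with publishing. The online works that rank near the top are never too bad. When people dislike a particular one, it is usually because they dislike its genre, not online works as a whole. The reason is that online works let people read for free first, so only the good ones rise to the top. Print works are different: the top of the bestseller list holds both good books and garbage. Many experts wrote blogs first and later published books. These books are not bad either. Some people may think they are, because technical books are not like novels: with a novel you follow a story, while with a technical book you are learning or reviewing knowledge, and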

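- Android: a complete list of TextView attributes

dcj3sjt126com

textview

android:autoLink: sets whether text that is a URL, email address, phone number, or map address is shown as a clickable link. Possible values: none/web/email/phone/map/all. android:autoText: if set, automatically corrects the spelling of input. It has no effect here; when the input method is shown and input…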

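- Installing and configuring Tomcat virtual directories

eksliang

tomcat configuration notes, tomcat deploying web applications, tomcat virtual directory installation

If you repost this, please cite the source: http://eksliang.iteye.com/blog/2097184

1. Tomcat directory structure

conf: holds Tomcat's configuration files

temp: holds temporary files that Tomcat creates while running

work: when a JSP in the application is first accessed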

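- A brief look at the app security vulnerabilities hackers commonly exploit

gg163

APP

First of all, programmers will be no strangers to app security vulnerabilities. Setting aside the issues that come from Android being open source, these vulnerabilities mainly arise from oversights or careless code during development. But the blame can't all be placed on programmers. There are often objective reasons, such as the boss pushing tight deadlines. The CTO of ineice.com (爱内测), a Chinese mobile app security testing team, gives us a brief look at the open-source design and ecosystem of Android.

1. App decompilation vulnerability: APK packages are very easy to decompile into readable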

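- Generating static pages from a URL in C#

hvt

Web, .net, C#, asp.net, hovertree

In the HoverTree open-source project, the Index.aspx file of HoverTreeWeb.HVTPanel is the home page of the admin backend. Its functions include generating the message board home page, displaying the user name, and logging out. The method that generates a page from a URL: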

bool CreateHtmlFile(string url, string path)

{

//http://keleyi.com/a/bjae/3d10wfax.htm

stri

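- SVG tutorial (1)

天梯梦

svg

Introduction to SVG

SVG is a language that uses XML to describe two-dimensional graphics and graphical applications.

What you should already know:

Before you continue, you should have a basic understanding of the following:

HTML

XML basics

If you want to study these first, choose the corresponding tutorial on this site's home page.

What is SVG?

SVG stands for Scalable Vector Graphics

SVG is used to define, for the web, vector-based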
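Because SVG is plain XML, any XML library can produce it. This short sketch uses Python's built-in `xml.etree.ElementTree` to build a minimal SVG document with one circle on a 100×100 canvas. The sizes and color are arbitrary illustration values. The output can be saved as a `.svg` file and opened in a browser.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def make_circle_svg(r: int = 40) -> str:
    """Return a minimal SVG document: one red circle centered on the canvas."""
    svg = ET.Element("svg", xmlns=SVG_NS, width="100", height="100")
    ET.SubElement(svg, "circle", cx="50", cy="50", r=str(r), fill="red")
    return ET.tostring(svg, encoding="unicode")


print(make_circle_svg())
```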

- A simple Java stack

luyulong

java, data structures, stack

public class MyStack {

private long[] arr;

private int top;

public MyStack() {

arr = new long[10];

top = -1;

}

public MyStack(int maxsize) {

arr = new long[maxsize];

top
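The Java class breaks off inside its second constructor. Here is a Python sketch of the same design: a fixed-capacity array plus a `top` index, where -1 means empty. The method names (`push`, `pop`, `peek`, `is_empty`, `is_full`) are assumptions, since the original methods are not shown.

```python
class MyStack:
    """Fixed-capacity stack on a plain array; top == -1 means empty."""

    def __init__(self, maxsize: int = 10):
        self._arr = [0] * maxsize
        self._top = -1

    def push(self, value: int) -> None:
        if self.is_full():
            raise OverflowError("stack is full")
        self._top += 1
        self._arr[self._top] = value

    def pop(self) -> int:
        if self.is_empty():
            raise IndexError("pop from empty stack")
        value = self._arr[self._top]
        self._top -= 1
        return value

    def peek(self) -> int:
        if self.is_empty():
            raise IndexError("peek at empty stack")
        return self._arr[self._top]

    def is_empty(self) -> bool:
        return self._top == -1

    def is_full(self) -> bool:
        return self._top == len(self._arr) - 1
```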

- Basic data structures and algorithms, part 8: Binary search

sunwinner

Algorithm, Binary search

Binary search needs an ordered array so that it can use array indexing to dramatically reduce the number of compares required for each search, using the classic and venerable binary search algori
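A minimal Python sketch of the idea: on a sorted array, each comparison discards half of the remaining interval, so a search needs at most about log2(n) + 1 compares. The function name `rank` is illustrative.

```python
def rank(keys: list, key: int) -> int:
    """Index of key in the sorted list `keys`, or -1 if it is absent."""
    lo, hi = 0, len(keys) - 1
    while lo <= hi:
        mid = lo + (hi - lo) // 2     # middle of the current interval [lo, hi]
        if key < keys[mid]:
            hi = mid - 1              # key can only be in the left half
        elif key > keys[mid]:
            lo = mid + 1              # key can only be in the right half
        else:
            return mid
    return -1
```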

- 12 C interview questions on pointers, processes, operators, structs, functions, and memory: how many can you solve?

刘星宇

c, interviews

1. The gets() function

Q: Find the problem in the following code:

#include<stdio.h>

int main(void)

{

char buff[10];

memset(buff,0,sizeof(buff));

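- ITeye July technical-book trial-reading giveaway: winners announced

ITeye管理员

events, ITeye, trial reading

The July technical-book trial-reading giveaway, co-hosted by ITeye and Turing Education of Posts & Telecom Press, has successfully concluded. Many thanks to everyone who followed and took part in the event.

July trial-reading event recap:

http://webmaster.iteye.com/blog/2092746

The Excellence Award winners and their entries are listed below (there were many excellent articles but only limited places, so not winning does not mean an entry was not excellent):

《Java性能优化权威指南》 (Java Performance Optimization: The Definitive Guide)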