Scrapy Framework

Scrapy

Scrapy is an application framework written for crawling websites and extracting structured data from them.


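Features built into Scrapy:

- High-performance data parsing (XPath)

- High-performance data downloading

- High-performance persistent storage

- Middleware

- Full-site data crawling

- Distributed crawling: Redis (via the scrapy-redis extension)

- Passing data between requests (deep crawling)

- Using Selenium sensibly inside Scrapy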


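Environment setup

- pip install wheel

- Download the Twisted wheel that matches your Python version from: https://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

- Install Twisted: open cmd in the download directory and run pip install <the downloaded Twisted wheel file>

- pip install pywin32

- pip install scrapy

The manual Twisted and pywin32 steps target Windows on older setups; with a recent Python and pip, pip install scrapy normally installs Twisted automatically as a dependency.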

Creating a project

- scrapy startproject ProName

- cd ProName

- scrapy genspider spiderName www.xxx.com (creates the spider file)

- Run: scrapy crawl spiderName

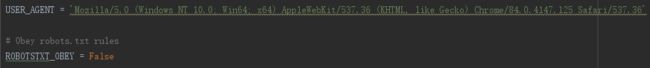

- settings:

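Data parsing in Scrapy

- extract_first(): use when the selector list holds a single element; returns the first matching string (or None if nothing matched)

- extract(): use when the list holds several elements; returns a list of all matching strings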

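Persistent data storage in Scrapy

- Terminal-based:

  - Can only save the return value of the parse method (a list of dicts or items) to a file on disk

  - scrapy crawl first -o file.csv (supported formats include json, jsonlines, csv and xml)

- Pipeline-based: pipelines.py

  - Coding workflow:

    - Parse the data

    - Define the corresponding fields in the Item class

    - Wrap the parsed data in an Item object: item['xxx'] = value

    - Submit the Item object to the pipelines (yield item)

    - The process_item method of the pipeline class receives the item and persists it in any form you like

    - Enable the pipeline in the settings file via ITEM_PIPELINES

  - Each pipeline class represents storing the data to one particular destination (a file, a database, ...)

  - If several pipeline classes are enabled, an item submitted by the spider goes first to the pipeline class with the highest priority (the smallest number in ITEM_PIPELINES)

  - The return item at the end of process_item passes the item on to the next pipeline class to be executed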

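The priority and return item rules can be modelled in plain Python. The sketch below is a simplified illustration of the idea, not Scrapy's actual engine code; the two pipeline classes and run_pipelines are hypothetical.

```python
class UppercaseTitlePipeline:
    """Hypothetical pipeline: cleans up the title."""

    def process_item(self, item, spider):
        item['title'] = item['title'].upper()
        return item  # the return value is what the next pipeline receives


class MemoryPipeline:
    """Hypothetical pipeline: 'stores' items in a list instead of a file or DB."""

    def __init__(self):
        self.saved = []

    def process_item(self, item, spider):
        self.saved.append(dict(item))
        return item


def run_pipelines(item, pipelines, spider=None):
    """Simplified model: call each pipeline in ascending priority-number order,
    feeding each one whatever the previous one returned."""
    for pipe in sorted(pipelines, key=pipelines.get):
        item = pipe.process_item(item, spider)
    return item


memory = MemoryPipeline()
# Same shape as ITEM_PIPELINES: pipeline -> priority (smaller number runs first)
pipelines = {memory: 301, UppercaseTitlePipeline(): 300}
result = run_pipelines({'title': 'hello', 'author': 'someone'}, pipelines)
print(memory.saved)  # [{'title': 'HELLO', 'author': 'someone'}]
```

Although memory is listed first in the dict, it runs second because its priority number is larger, so it already sees the upper-cased title.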

Persistent storage via a terminal command

```python
import scrapy


class FirstSpider(scrapy.Spider):
    name = 'first'
    # allowed_domains = ['www.baidu.com']
    start_urls = ['https://www.huya.com/g/1663']

    # Persistent storage via a terminal command: scrapy crawl first -o file.csv
    def parse(self, response):
        li_list = response.xpath('//*[@id="js-live-list"]/li')
        all_data = []
        for li in li_list:
            title = li.xpath('./a[2]/text()').extract_first()
            author = li.xpath('./span/span[1]/i/text()').extract_first()
            # relative path ("./") so the query is scoped to the current <li>
            hot = li.xpath('./span/span[2]/i[2]/text()').extract_first()
            dic = {
                'title': title,
                'author': author,
                'hot': hot,
            }
            all_data.append(dic)
        # the returned list of dicts is what -o writes to disk
        return all_data
```

Pipeline-based persistent storage

```python
import scrapy

from huya.items import HuyaItem


class FirstSpider(scrapy.Spider):
    name = 'first'
    start_urls = ['https://www.huya.com/g/1663']

    # Pipeline-based persistent storage
    def parse(self, response):
        li_list = response.xpath('//*[@id="js-live-list"]/li')
        for li in li_list:
            title = li.xpath('./a[2]/text()').extract_first()
            author = li.xpath('./span/span[1]/i/text()').extract_first()
            # instantiate an Item object
            item = HuyaItem()
            item['title'] = title
            item['author'] = author
            yield item  # submit the item to the pipelines
```

```python
# pipelines.py
import pymysql


class HuyaPipeline:
    fp = None

    # called once when the spider opens
    def open_spider(self, spider):
        self.fp = open('hy.txt', 'w', encoding='utf-8')

    def process_item(self, item, spider):  # item is the Item object submitted by the spider
        # f-string, so a missing (None) field does not raise TypeError
        self.fp.write(f"{item['title']}:{item['author']}\n")
        print(item['title'])
        return item  # pass the item on to the next pipeline class

    # called once when the spider closes
    def close_spider(self, spider):
        self.fp.close()


# Insert into MySQL
class MysqlPipeline:
    conn = None
    cursor = None

    def open_spider(self, spider):
        self.conn = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='root',
                                    db='spider', charset='utf8')
        # one cursor for the whole crawl, closed in close_spider
        self.cursor = self.conn.cursor()

    def process_item(self, item, spider):  # item is the Item object submitted by the spider
        # parameterized query: pymysql escapes the values (no manual quoting, no SQL injection)
        sql = 'insert into huya values (%s, %s)'
        try:
            self.cursor.execute(sql, (item['title'], item['author']))
            self.conn.commit()
        except Exception as e:
            spider.logger.error('MySQL insert failed: %s', e)
            self.conn.rollback()
        return item

    def close_spider(self, spider):
        self.cursor.close()
        self.conn.close()
```

```python
# settings.py
ITEM_PIPELINES = {
    'huya.pipelines.HuyaPipeline': 300,  # the smaller the value, the higher the priority
    'huya.pipelines.MysqlPipeline': 301,
}
```

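Full-site crawling with the Spider base class

Full-site crawling: crawl the data on every page (all page numbers).

- Sending a (GET) request manually: yield scrapy.Request(url, callback)

- Summary of yield:

  - Submitting an item to the pipelines: yield item

  - Sending a request manually: yield scrapy.Request(url, callback)

  - Sending a POST request manually: yield scrapy.FormRequest(url, formdata, callback), where formdata is the dict of form parameters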

```python
import scrapy

from huya.items import HuyaItem


class HuyazbSpider(scrapy.Spider):
    name = 'huyaZB'
    # allowed_domains = ['www.xxx.com']
    start_urls = ['http://www.huya.com/g/xingxiu']
    # generic URL template; %d is the page number
    url = 'https://www.huya.com/cache.php?m=LiveList&do=getLiveListByPage&gameId=1663&tagAll=0&page=%d'

    def parse(self, response):
        li_list = response.xpath('//*[@id="js-live-list"]/li')
        for li in li_list:
            title = li.xpath('./a[2]/text()').extract_first()
            author = li.xpath('./span/span[1]/i/text()').extract_first()
            hot = li.xpath('./span/span[2]/i[2]/text()').extract_first()
            # instantiate an Item object
            item = HuyaItem()
            item['title'] = title
            item['author'] = author
            item['hot'] = hot
            yield item  # submit the item to the pipelines

        # send the requests for the other pages manually
        for page in range(2, 5):
            new_url = self.url % page
            yield scrapy.Request(url=new_url, callback=self.parse_other)

    # every parse callback must be defined like parse, i.e. with the same parameters
    def parse_other(self, response):
        print(response.text)  # the other pages arrive here; parse them as needed
```

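Passing data between requests in Scrapy (request meta)

- Purpose: deep crawling

- When to use: the data you want to scrape is not all on the same page (for example, part of it is on a list page and part on a detail page)

- Passing the item: yield scrapy.Request(url, callback, meta), where meta is a dict such as {'item': item}

- Receiving the item: response.meta, inside the callback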

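Improving Scrapy crawling efficiency

- Just adjust the relevant options in the settings file:

  - Increase concurrency: by default Scrapy performs at most 16 concurrent requests (it is asynchronous, built on Twisted, rather than thread-based), and this can be raised as appropriate. For example, CONCURRENT_REQUESTS = 100 in settings.py sets the concurrency to 100.

  - Lower the log level: Scrapy prints a large amount of log output while running; to reduce CPU usage, set the log level to INFO or ERROR. In the settings file: LOG_LEVEL = 'INFO'

  - Disable cookies: if you don't really need cookies, disable them while crawling to reduce CPU usage and speed up the crawl. In the settings file: COOKIES_ENABLED = False

  - Disable retries: re-sending failed HTTP requests (retrying) slows down the crawl, so retries can be disabled. In the settings file: RETRY_ENABLED = False

  - Reduce the download timeout: when crawling very slow links, a shorter download timeout lets stuck requests be abandoned quickly, which improves efficiency. In the settings file: DOWNLOAD_TIMEOUT = 3 (a 3-second timeout)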

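Collected in one place, the efficiency settings look like this. The values are the examples from the list, not recommendations for every site. CONCURRENT_REQUESTS_PER_DOMAIN is an addition here: its default of 8 also caps requests to any single domain, so raising CONCURRENT_REQUESTS alone may not increase concurrency against one site (the value 100 for it is an assumption).

```python
# settings.py (excerpt)
CONCURRENT_REQUESTS = 100             # default: 16
CONCURRENT_REQUESTS_PER_DOMAIN = 100  # default: 8; assumed value, limits a single site
LOG_LEVEL = 'ERROR'                   # default: 'DEBUG'
COOKIES_ENABLED = False
RETRY_ENABLED = False
DOWNLOAD_TIMEOUT = 3                  # seconds; default: 180
```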

Request meta example (deep crawling: list page + detail page):

```python
import scrapy

from movie4567.items import Movie4567Item


class MovieSpider(scrapy.Spider):
    name = 'movie'
    # allowed_domains = ['www.xxx.com']
    start_urls = ['https://www.4567kan.com/frim/index6.html']
    # URL template for pages 2, 3, ...
    urls = 'https://www.4567kan.com/frim/index6-%d.html'
    page = 1

    def parse(self, response):
        print(f'Crawling page {self.page}.....')
        li_list = response.xpath('/html/body/div[1]/div/div/div/div[2]/ul/li')
        for li in li_list:
            item = Movie4567Item()
            name = li.xpath('./div/a/@title').extract_first()
            item['name'] = name
            detail_url = 'https://www.4567kan.com' + li.xpath('./div/a/@href').extract_first()
            # send a manual request for the detail page
            # request meta: let the Request hand a value over to its callback
            yield scrapy.Request(detail_url, callback=self.parse_detail, meta={'item': item})

        if self.page < 5:
            self.page += 1
            new_url = self.urls % self.page
            yield scrapy.Request(new_url, callback=self.parse)

    def parse_detail(self, response):
        # receive the data passed via meta (a dict)
        item = response.meta['item']
        desc = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[5]/span[2]/text()').extract_first()
        item['desc'] = desc
        yield item
```

Handling bytes data in Scrapy (downloading images with ImagesPipeline)

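```python
# pipelines.py
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class ImgproPipeline(ImagesPipeline):
    # issue requests for the media resources (the actual download)
    def get_media_requests(self, item, info):
        yield scrapy.Request(item['img_src'])

    # specify the storage path (relative to IMAGES_STORE);
    # Scrapy 2.4+ also passes the source item as a keyword argument
    def file_path(self, request, response=None, info=None, *, item=None):
        return request.url.split('/')[-1]

    # pass the item on to the next pipeline class to be executed
    def item_completed(self, results, item, info):
        return item
```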

```python
# settings.py
# name + path of the folder where the images are stored
IMAGES_STORE = './imgLibs'
```