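Predicting Words with an N-Gram Model

Word Embeddings

Each word must first be mapped to an integer index, one index per word. Only then can it be passed to an Embedding layer and converted into a word vector.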

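import torch
from torch import nn

word_to_ix = {'hello': 0, 'world': 1}
embeds = nn.Embedding(2, 5)  # 2 words in the vocabulary, 5 dimensions per word vector
hello_idx = torch.LongTensor([word_to_ix['hello']])
# Wrapping in Variable is unnecessary since PyTorch 0.4; the tensor can be passed directly.
hello_embed = embeds(hello_idx)
print(hello_embed)

The N-Gram Model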

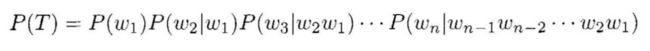

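An N-gram model predicts the next word of a sentence from the few words that precede it.

For a sentence T made up of n words w1, w2, …, wn, the chain rule gives

P(T) = P(w1) P(w2 | w1) … P(wn | w1, …, wn-1)

Drawback: the parameter space is too large, because each word is conditioned on its entire history.

Solution: the Markov assumption, under which a word depends only on the few words immediately before it. With a context of two words, P(wi | w1, …, wi-1) is approximated by P(wi | wi-2, wi-1).

Pipeline: word embedding -> compute the conditional probability -> maximize the conditional probability -> optimize the word vectors -> predict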
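The "compute the conditional probability" and "maximize" steps can be sketched without any framework. In the sketch below the scores and the four-word vocabulary are invented for illustration, and the model is assumed to emit one raw score per vocabulary word. Softmax turns the scores into a distribution over the next word, and maximizing the probability of the true word is the same as minimizing its negative log-probability.

```python
import math

def log_softmax(scores):
    """Turn raw scores (one per vocabulary word) into log-probabilities."""
    peak = max(scores)  # subtract the maximum for numerical stability
    log_norm = peak + math.log(sum(math.exp(s - peak) for s in scores))
    return [s - log_norm for s in scores]

def negative_log_likelihood(scores, target_idx):
    """Loss for one example: minus the log-probability given to the true next word."""
    return -log_softmax(scores)[target_idx]

# Hypothetical scores for a 4-word vocabulary; index 2 is the true next word.
scores = [1.0, 0.5, 3.0, -1.0]
probs = [math.exp(lp) for lp in log_softmax(scores)]
loss = negative_log_likelihood(scores, target_idx=2)

print([round(p, 3) for p in probs])  # a probability for each candidate word
print(round(loss, 4))                # small when the true word gets high probability
```

PyTorch's nn.CrossEntropyLoss applies log-softmax and the negative log-likelihood in one step, which is why a model trained with it returns raw scores rather than probabilities.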

import torch
from torch import nn
import torch.nn.functional as F
from torch.autograd import Variable

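CONTEXT_SIZE = 2  # number of context words: each word is predicted from the 2 words before it
EMBEDDING_DIM = 10  # dimensionality of each word vector

# The training text is a poem by Shakespeare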

test_sentence = """When forty winters shall besiege thy brow,
And dig deep trenches in thy beauty's field,
Thy youth's proud livery so gazed on now,
Will be a totter'd weed of small worth held:
Then being asked, where all thy beauty lies,
Where all the treasure of thy lusty days;
To say, within thine own deep sunken eyes,
Were an all-eating shame, and thriftless praise.
How much more praise deserv'd thy beauty's use,
If thou couldst answer 'This fair child of mine
Shall sum my count, and make my old excuse,'
Proving his beauty by succession thine!
This were to be new made when thou art old,
And see thy blood warm when thou feel'st it cold.""".split()

# Build the training set: slide over test_sentence in windows of three words;
# the first two words are the input and the third is the prediction target.
trigram = [((test_sentence[i], test_sentence[i + 1]), test_sentence[i + 2])
           for i in range(len(test_sentence) - 2)]
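For comparison, the classical way to use such (context, target) pairs is to estimate the conditional probability from raw counts instead of a network. The sketch below is not part of the original code: the toy corpus and the helper `conditional_prob` are invented for illustration.

```python
from collections import Counter, defaultdict

# Made-up toy corpus, used only for this illustration.
tokens = "the cat sat on the mat and the cat sat on the rug".split()

# Same windowing as a trigram training set: two context words, one target word.
triples = [((tokens[i], tokens[i + 1]), tokens[i + 2])
           for i in range(len(tokens) - 2)]

# Count how often each target follows each two-word context.
counts = defaultdict(Counter)
for context, target in triples:
    counts[context][target] += 1

def conditional_prob(context, word):
    """Estimate P(word | context) as count(context, word) / count(context)."""
    total = sum(counts[context].values())
    return counts[context][word] / total if total else 0.0

print(conditional_prob(("on", "the"), "mat"))
print(conditional_prob(("the", "cat"), "sat"))
```

A count-based estimate assigns zero probability to any trigram that never occurred in the corpus, which is one motivation for learning word vectors and a neural scorer instead.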

# Encode each word as an integer index; nn.Embedding only accepts indices
vocb = set(test_sentence)  # set() removes duplicate words; its order can differ between runs
word_to_idx = {word: i for i, word in enumerate(vocb)}  # word -> index
idx_to_word = {word_to_idx[word]: word for word in word_to_idx}  # index -> word

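# The commented-out block below is the book's code, which I have not yet gotten to run;
# the uncommented code that follows is from GitHub and does run.
# Two likely causes are corrected inline: linear2 was applied to emb instead of out,
# and F.log_softmax was misspelled as F.log_sofmax.

# # Define the N-gram model
# class NgramModel(nn.Module):
#     # Three arguments: vocabulary size, number of context words (2 here), embedding dimension
#     def __init__(self, vocb_size, context_size=CONTEXT_SIZE, n_dim=EMBEDDING_DIM):
#         super(NgramModel, self).__init__()
#         self.n_word = vocb_size
#         self.embedding = nn.Embedding(self.n_word, n_dim)
#         self.linear1 = nn.Linear(context_size * n_dim, 128)
#         self.linear2 = nn.Linear(128, self.n_word)
#
#     def forward(self, x):
#         emb = self.embedding(x)
#         emb = emb.view(1, -1)  # flatten the (context_size, n_dim) tensor into a single row
#         out = self.linear1(emb)
#         out = F.relu(out)
#         out = self.linear2(out)  # was linear2(emb); the output size is the vocabulary size
#         log_prob = F.log_softmax(out, dim=1)  # was log_sofmax; pair log-probabilities with nn.NLLLoss
#         return log_prob

# Code from GitHub
# Define the N-gram model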

class n_gram(nn.Module):
    # Three arguments: vocabulary size, number of context words (2 here), embedding dimension
    def __init__(self, vocab_size, context_size=CONTEXT_SIZE, n_dim=EMBEDDING_DIM):
        super(n_gram, self).__init__()
        self.embed = nn.Embedding(vocab_size, n_dim)
        self.classify = nn.Sequential(
            nn.Linear(context_size * n_dim, 128),
            nn.ReLU(True),
            nn.Linear(128, vocab_size)
        )

    def forward(self, x):
        voc_embed = self.embed(x)  # look up the embeddings, shape (context_size, n_dim)
        voc_embed = voc_embed.view(1, -1)  # concatenate the two word vectors into a single row
        out = self.classify(voc_embed)  # one raw score per vocabulary word
        return out
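To make the role of `view(1, -1)` concrete, the sketch below traces tensor shapes through an embedding lookup followed by a single linear layer. It is a simplified stand-in, not the model above: the sizes (2 context words, 10 dimensions, a 7-word vocabulary) and the word indices are chosen only for illustration.

```python
import torch
from torch import nn

context_size, n_dim, vocab_size = 2, 10, 7  # illustrative sizes only

embed = nn.Embedding(vocab_size, n_dim)
classify = nn.Linear(context_size * n_dim, vocab_size)

x = torch.tensor([3, 5])       # indices of the two context words
vectors = embed(x)             # one row per context word
flat = vectors.view(1, -1)     # both rows joined into a single row
scores = classify(flat)        # one raw score per vocabulary word

print(vectors.shape)
print(flat.shape)
print(scores.shape)
```

The first half of the flattened row is the vector of the first context word and the second half is the vector of the second, so the linear layer sees both words at once.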

net = n_gram(len(word_to_idx))
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=1e-2, weight_decay=1e-5)

for e in range(100):

train_loss = 0

for word, label in trigram: # 使用前 100 个作为训练集

word = Variable(torch.LongTensor([word_to_idx[i] for i in word])) # 将两个词作为输入

label = Variable(torch.LongTensor([word_to_idx[label]]))

# 前向传播

out = net(word)

loss = criterion(out, label)

train_loss += loss.item()

# 反向传播

optimizer.zero_grad()

loss.backward()

optimizer.step()

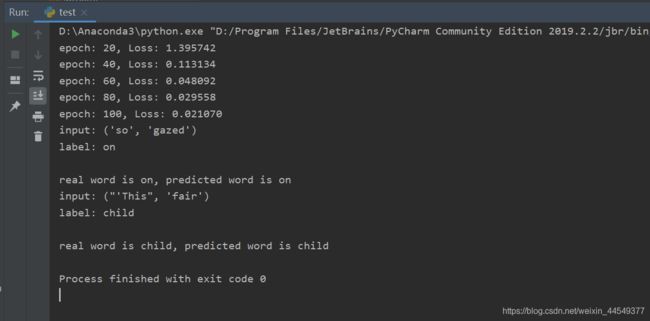

if (e + 1) % 20 == 0:

# print('epoch: {}, Loss: {:.6f}'.format(e + 1, train_loss / len(trigram)))

print('epoch: {}, Loss: {:.6f}'.format(e + 1, loss))

net = net.eval()

# Check the result
word, label = trigram[19]
print('input: {}'.format(word))
print('label: {}'.format(label))
print()
word = Variable(torch.LongTensor([word_to_idx[i] for i in word]))
out = net(word)
# pred_label_idx = out.max(1)[1].data[0]
pred_label_idx = out.max(1)[1].item()  # index of the highest-scoring word
predict_word = idx_to_word[pred_label_idx]
print('real word is {}, predicted word is {}'.format(label, predict_word))

word, label = trigram[75]

print('input: {}'.format(word))

print('label: {}'.format(label))

print()

word = Variable(torch.LongTensor([word_to_idx[i] for i in word]))

out = net(word)

pred_label_idx = out.max(1)[1].item()

predict_word = idx_to_word[pred_label_idx]

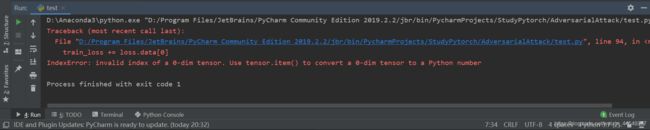

print('real word is {}, predicted word is {}'.format(label, predict_word))其中,有个小问题。代码中,这一类的代码,调用.data[0]时,会报错(以前经常遇到)

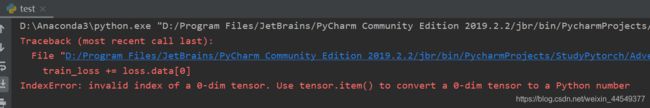

train_loss += loss.data[0]

The loss is a 0-dimensional tensor, and recent PyTorch versions no longer allow indexing it. Fix: replace .data[0] with .item(), as follows:

# pred_label_idx = out.max(1)[1].data[0]
pred_label_idx = out.max(1)[1].item()