Discover supporting concepts, features, and best practices for building great AR experiences.

Overview

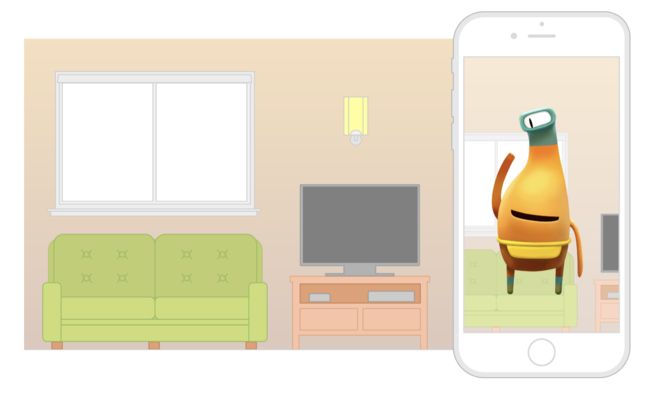

The basic requirement for any AR experience—and the defining feature of ARKit—is the ability to create and track a correspondence between the real-world space the user inhabits and a virtual space where you can model visual content. When your app displays that content together with a live camera image, the user experiences augmented reality: the illusion that your virtual content is part of the real world.

In all AR experiences, ARKit uses world and camera coordinate systems following a right-handed convention: the y-axis points upward, and (when relevant) the z-axis points toward the viewer and the x-axis points toward the viewer's right.
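
To make the convention concrete, here is a minimal, framework-free Swift sketch that runs without ARKit. The Vec3 type is a hypothetical stand-in for the simd vectors ARKit actually uses. A coordinate system is right-handed when x × y = z. Because +z points toward the viewer, content placed in front of the camera has a negative z coordinate.

```swift
// Hypothetical minimal 3D vector for illustration only
// (an ARKit app would use simd_float3 instead).
struct Vec3: Equatable {
    var x: Double, y: Double, z: Double

    // Cross product; in a right-handed system, x × y == z.
    func cross(_ o: Vec3) -> Vec3 {
        Vec3(x: y * o.z - z * o.y,
             y: z * o.x - x * o.z,
             z: x * o.y - y * o.x)
    }
}

let xAxis = Vec3(x: 1, y: 0, z: 0)   // toward the viewer's right
let yAxis = Vec3(x: 0, y: 1, z: 0)   // upward
let zAxis = Vec3(x: 0, y: 0, z: 1)   // toward the viewer

// Right-handedness check: x × y must equal z.
let isRightHanded = xAxis.cross(yAxis) == zAxis

// Since +z points toward the viewer, a point 0.5 m in front of the
// camera lies on the camera's negative z-axis.
let pointInFrontOfCamera = Vec3(x: 0, y: 0, z: -0.5)
```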

Session configurations can change the origin and orientation of the coordinate system with respect to the real world (see worldAlignment). Each anchor in an AR session defines its own local coordinate system, which also follows the right-handed, z-toward-viewer convention; for example, the ARFaceAnchor class defines a system for locating facial features.
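
An anchor's local coordinate system relates to world space through a 4x4 transform; ARAnchor.transform is a simd_float4x4. The following framework-free sketch shows that mapping. It uses a hypothetical Transform type stored in column-major order like simd matrices, and it builds the transform from a rotation about the up (y) axis plus a translation. It illustrates the math only and does not depend on which worldAlignment the session uses.

```swift
import Foundation  // for cos and sin

// Hypothetical 4x4 transform in column-major order: columns[c][r].
struct Transform {
    var columns: [[Double]]

    // Right-handed rotation of `radians` about the y-axis, then a translation.
    static func rotationY(_ radians: Double,
                          translation t: (Double, Double, Double)) -> Transform {
        let c = cos(radians), s = sin(radians)
        return Transform(columns: [
            [c, 0, -s, 0],        // column 0: image of the local x-axis
            [0, 1, 0, 0],         // column 1: image of the local y-axis
            [s, 0, c, 0],         // column 2: image of the local z-axis
            [t.0, t.1, t.2, 1],   // column 3: anchor origin in world space
        ])
    }

    // Maps an anchor-local point (homogeneous w = 1) to world coordinates.
    func apply(_ p: (Double, Double, Double)) -> (Double, Double, Double) {
        let v = [p.0, p.1, p.2, 1.0]
        var out = [0.0, 0.0, 0.0, 0.0]
        for col in 0..<4 {
            for row in 0..<4 {
                out[row] += columns[col][row] * v[col]
            }
        }
        return (out[0], out[1], out[2])
    }
}

// An anchor 2 m in front of the world origin, turned 90 degrees about y.
let anchorTransform = Transform.rotationY(.pi / 2, translation: (0, 0, -2))
// A point 0.1 m along the anchor's local x-axis, expressed in world space.
let worldPoint = anchorTransform.apply((0.1, 0, 0))  // about (0, 0, -2.1)
```

In an ARKit app the same mapping is usually a single simd multiplication of anchor.transform by the homogeneous point simd_float4(localPoint, 1).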

How World Tracking Works

To create a correspondence between real and virtual spaces, ARKit uses a technique called visual-inertial odometry. This process combines information from the iOS device's motion sensing hardware with computer vision analysis of the scene visible to the device's camera. ARKit recognizes notable features in the scene image, tracks differences in the positions of those features across video frames, and compares that information with motion sensing data. The result is a high-precision model of the device's position and motion.
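
The core idea of combining two imperfect sources can be seen in the toy one-dimensional complementary filter below. This is deliberately NOT ARKit's visual-inertial odometry, which is far more sophisticated and is not public. In the toy, motion data (a velocity, standing in for IMU readings) predicts position at a high rate but drifts. An occasional position fix from tracked image features pulls the estimate back. ToyFusion and visionWeight are hypothetical names.

```swift
// Toy 1-D sensor fusion for intuition only; not ARKit's algorithm.
struct ToyFusion {
    var position: Double = 0
    let visionWeight: Double  // 0...1: how strongly a visual fix corrects the estimate

    // Dead reckoning from motion sensing data: fast, but errors accumulate.
    mutating func predict(velocity: Double, dt: Double) {
        position += velocity * dt
    }

    // Blend in a position measured by tracking features across video frames.
    mutating func correct(visualPosition: Double) {
        position += visionWeight * (visualPosition - position)
    }
}

var fusion = ToyFusion(visionWeight: 0.8)
// The device truly moves at 1.0 m/s, but the biased IMU reports 1.1 m/s.
for _ in 0..<10 { fusion.predict(velocity: 1.1, dt: 0.1) }  // drifts to about 1.1 m
fusion.correct(visualPosition: 1.0)                          // vision reports 1.0 m
// fusion.position is now about 1.02 m, much closer to the truth.
```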

World tracking also analyzes and understands the contents of a scene. Use hit-testing methods (see the ARHitTestResult class) to find real-world surfaces corresponding to a point in the camera image. If you enable the planeDetection setting in your session configuration, ARKit detects flat surfaces in the camera image and reports their positions and sizes. You can use hit-test results or detected planes to place or interact with virtual content in your scene.
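
Conceptually, a hit test against a detected horizontal plane casts a ray from the camera and finds where the ray meets that plane. The framework-free sketch below shows only that geometry; ARKit performs hit-testing for you. Ray and hitHorizontalPlane are hypothetical names, and the plane is simplified to an infinite plane at a fixed height, with no extent check.

```swift
// Hypothetical ray type in world coordinates.
struct Ray {
    var origin: (x: Double, y: Double, z: Double)
    var direction: (x: Double, y: Double, z: Double)
}

/// Intersects `ray` with the horizontal plane y = planeHeight.
/// Returns nil if the ray is parallel to the plane or points away from it.
func hitHorizontalPlane(_ ray: Ray, planeHeight: Double) -> (x: Double, y: Double, z: Double)? {
    let dy = ray.direction.y
    guard abs(dy) > 1e-9 else { return nil }      // parallel: no intersection
    let t = (planeHeight - ray.origin.y) / dy      // ray parameter at the plane
    guard t >= 0 else { return nil }               // plane is behind the origin
    return (x: ray.origin.x + t * ray.direction.x,
            y: planeHeight,
            z: ray.origin.z + t * ray.direction.z)
}

// Camera at the world origin looking forward and 45 degrees down;
// a floor plane detected 1.5 m below the origin.
let ray = Ray(origin: (x: 0, y: 0, z: 0), direction: (x: 0, y: -1, z: -1))
let hit = hitHorizontalPlane(ray, planeHeight: -1.5)  // (x: 0, y: -1.5, z: -1.5)
```

In ARKit itself, you would enable plane detection (for example, planeDetection = .horizontal on an ARWorldTrackingConfiguration). You would then call ARFrame.hitTest(_:types:) with the .existingPlaneUsingExtent type. The worldTransform of each returned ARHitTestResult gives the hit position.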

Best Practices and Limitations

World tracking is an inexact science. This process can often produce impressive accuracy, leading to realistic AR experiences. However, it relies on details of the device’s physical environment that are not always consistent or are difficult to measure in real time without some degree of error. To build high-quality AR experiences, be aware of these caveats and tips.

Design AR experiences for predictable lighting conditions. World tracking involves image analysis, which requires a clear image. Tracking quality is reduced when the camera can’t see details, such as when the camera is pointed at a blank wall or the scene is too dark.

Use tracking quality information to provide user feedback. World tracking correlates image analysis with device motion. ARKit develops a better understanding of the scene if the device is moving, even if the device moves only subtly. Excessive motion (moving too far, too fast, or shaking too vigorously) results in a blurred image or in features moving too far between video frames to be tracked, which reduces tracking quality. The ARCamera class reports the tracking state and, when tracking is limited, the reason for it; you can use this information to build UI that tells the user how to resolve low-quality tracking situations.
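
One way to turn tracking state into user guidance is a simple mapping from state to hint text. To keep the sketch runnable off-device, it declares hypothetical enums that mirror ARCamera.TrackingState (notAvailable, limited with a reason, normal) and its limitation reasons. In an app, you would instead switch on camera.trackingState inside the ARSessionObserver callback session(_:cameraDidChangeTrackingState:). The message wording is illustrative only.

```swift
// Hypothetical mirrors of ARCamera.TrackingState and its Reason cases,
// declared here so the logic runs without ARKit.
enum LimitedReason {
    case initializing, excessiveMotion, insufficientFeatures, relocalizing
}

enum TrackingState {
    case notAvailable
    case limited(LimitedReason)
    case normal
}

/// Returns a hint telling the user how to recover, or nil when tracking is normal.
func feedbackMessage(for state: TrackingState) -> String? {
    switch state {
    case .normal:
        return nil
    case .notAvailable:
        return "Tracking unavailable."
    case .limited(.initializing):
        return "Move the device slowly to start tracking."
    case .limited(.excessiveMotion):
        return "Slow down: the device is moving too fast."
    case .limited(.insufficientFeatures):
        return "Point the camera at a well-lit, detailed surface."
    case .limited(.relocalizing):
        return "Return to where you were to resume tracking."
    }
}
```

Newer SDKs may add cases to the real enums, so a production switch over ARKit's types may also need an @unknown default branch.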

Allow time for plane detection to produce clear results, and disable plane detection when you have the results you need. Plane detection results vary over time: when a plane is first detected, its position and extent may be inaccurate. As the plane remains in the scene over time, ARKit refines its estimate of position and extent. When a large flat surface is in the scene, ARKit may continue changing the plane anchor's position, extent, and transform after you've already used the plane to place content.
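
To decide when a plane estimate is "good enough", an app might wait until the reported extent stops changing much. The sketch below uses a hypothetical PlaneSettleDetector that treats a plane as settled once its width and length each change by less than a tolerance (in meters) for a number of consecutive updates. The threshold values are arbitrary assumptions. Once the detector reports true, the app could disable plane detection, for example by running the session again with a configuration whose planeDetection is empty. As noted above, large surfaces may keep changing, so this heuristic can only approximate stability.

```swift
// Hypothetical heuristic: a plane is "settled" once its extent changes by less
// than `tolerance` meters for `requiredStableUpdates` consecutive updates.
struct PlaneSettleDetector {
    let tolerance: Double
    let requiredStableUpdates: Int
    private var lastExtent: (width: Double, length: Double)?
    private var stableCount = 0

    init(tolerance: Double = 0.01, requiredStableUpdates: Int = 5) {
        self.tolerance = tolerance
        self.requiredStableUpdates = requiredStableUpdates
    }

    /// Feed each new extent estimate; returns true once the plane has settled.
    mutating func update(width: Double, length: Double) -> Bool {
        if let last = lastExtent,
           abs(width - last.width) < tolerance,
           abs(length - last.length) < tolerance {
            stableCount += 1   // small change: one more stable update
        } else {
            stableCount = 0    // first estimate or a large change: start over
        }
        lastExtent = (width: width, length: length)
        return stableCount >= requiredStableUpdates
    }
}
```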