MGC TOKEN technical explanation —— Consensus mechanism

MGC Token consensus mechanism

MGC TOKEN uses the POS+POW+DPOS consensus algorithm. The following mainly introduces DPOS.

DPOS algorithm

DPOS (Delegated Proof of Stake) is a consensus algorithm that builds on POW and POS. It was proposed and first applied in April 2014 by Dan Larimer, the lead developer of BitShares (later CTO of EOS). DPOS avoids the excessive energy consumption of POW mining and also the concentration of influence that can arise under POS stake distribution. In DPOS, blocks are produced by trusted accounts (super nodes, for example the top 101 accounts by votes) elected by the community. The elected super nodes, which can be thought of as 101 mining pools, have completely equal rights. Ordinary holders can replace super nodes (mining pools) at any time by voting. Decentralization in DPOS does not mean that every holder takes part in block production directly; instead, holders exercise indirect voting power to ensure that the elected super nodes do not misbehave. Any holder can also campaign for votes to become a super node or a standby super node.

DPOS algorithm principle

The basic principles of the DPOS algorithm:

A. The shareholder exercises voting rights based on the shares held, rather than relying on mining competition.

B. Maximize the profitability of shareholders.

C. Minimize the cost of maintaining network security.

D. Maximize the performance of the network.

E. Minimize the cost of running the network (bandwidth, CPU, etc.), and achieve security and stability of the network without wasting a lot of power.

In the DPOS consensus algorithm, the normal operation of the blockchain depends on the super nodes. The main functions of a super node are:

A. Provide a server to keep the node running normally.

B. Collect the transactions in the network.

C. Verify transactions and package them into blocks.

D. Broadcast the block; other nodes add the block to their own databases after verifying it.

E. Lead and promote the development of the blockchain project.

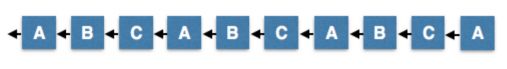

The DPOS algorithm operates as follows:

Assume three block producers, A, B, and C. Because consensus requires 2/3+1 to resolve all cases, this simplified model assumes that producer C is deemed the tie breaker. In the real world there would be 21 or more block producers. As with proof of work, the general rule is that the longest chain wins. Any time an honest peer sees a valid, strictly longer chain, it switches from its current fork to the longer one. The examples below show how DPOS behaves under most conceivable network conditions. They should help you understand why DPOS is robust and hard to break.

Normal operation

Under normal operation, the block producers take turns producing one block every 3 seconds. Assuming no one misses a turn, this produces the longest possible chain. A block produced at any time other than the producer's scheduled slot is invalid.
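The turn-taking rule above can be sketched in Go as follows. This is a minimal illustration, not the MGC TOKEN implementation: the helper names `scheduledProducer` and `isValidSlot` are hypothetical, and a fixed round-robin order is assumed (shuffling is ignored).

```go
package main

import "fmt"

// slotInterval is the block interval from the text (3 seconds).
const slotInterval = 3

// scheduledProducer returns the producer that owns the time slot containing
// unixTime, assuming a fixed round-robin order (hypothetical helper).
func scheduledProducer(producers []string, unixTime int64) string {
	slot := unixTime / slotInterval
	return producers[slot%int64(len(producers))]
}

// isValidSlot reports whether producer is allowed to produce a block at unixTime.
func isValidSlot(producers []string, producer string, unixTime int64) bool {
	return scheduledProducer(producers, unixTime) == producer
}

func main() {
	producers := []string{"A", "B", "C"}
	for t := int64(0); t < 12; t += slotInterval {
		fmt.Printf("t=%2ds -> producer %s\n", t, scheduledProducer(producers, t))
	}
	// B may not produce in the slot that belongs to A.
	fmt.Println(isValidSlot(producers, "B", 0))
}
```

A node receiving a block would call something like `isValidSlot` with the block's timestamp and reject the block if the signer does not own that slot.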

Minority fork

Up to 1/3 of the nodes may be malicious or faulty and create a minority fork. In this case, the minority fork will produce only one block every 9 seconds, while the majority fork will produce two blocks every 9 seconds. Again, the honest 2/3 majority will always have a longer chain than the minority.
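The growth rates quoted above follow directly from the slot schedule. The sketch below counts the blocks a fork gains when only some producers build on it; `blocksProduced` is a hypothetical helper and it assumes the active producers occupy the first slots of each round.

```go
package main

import "fmt"

// blocksProduced counts the blocks a fork gains in `seconds` when only
// `active` of `total` producers build on it, each in its own 3-second slot.
func blocksProduced(active, total, seconds int) int {
	const slotInterval = 3
	slots := seconds / slotInterval
	fullRounds, rest := slots/total, slots%total
	// Assumption: the active producers occupy the first slots of each round.
	extra := rest
	if extra > active {
		extra = active
	}
	return fullRounds*active + extra
}

func main() {
	fmt.Println("majority fork:", blocksProduced(2, 3, 9)) // 2 of 3 producers
	fmt.Println("minority fork:", blocksProduced(1, 3, 9)) // 1 of 3 producers
}
```

With three producers and a 9-second round, the majority fork gains two blocks while the minority fork gains one, so the gap widens by one block per round.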

Multiple forks by a disconnected minority

The minority can attempt to produce an unlimited number of forks, but all of its forks will be shorter than the majority chain, because the minority can only grow its chain more slowly than the majority.

Network fragmentation

In some cases, it is entirely possible for the network to fragment into multiple pieces, none of which contains a majority of the block producers. In this case, the longest chain will fall to the largest minority. When network connectivity is restored, the smaller minorities will naturally switch to the longest chain, and unambiguous consensus will be restored.

There may be 3 forks where the 2 longest forks are the same length. In this case, the producers on the third (shortest) fork will break the tie when they abandon their chain and rejoin the network on one of the two longest forks. The number of producers is odd, so a tie cannot be maintained for long. Producer shuffling, covered later, randomizes the production order to ensure that even if two forks have the same number of producers, the forks will grow at different speeds.

Double production by a connected minority

In this case, minority producer B has generated two or more alternative blocks in its time slot. The next scheduled producer (C) may choose to build on any one of the alternatives produced by B. When this happens, that alternative becomes the longest chain, and all nodes that selected B1 will switch forks. It does not matter how many alternative blocks a minority of bad producers tries to propagate; they will never be part of the longest chain for more than one round.

Last irreversible block

In the case of network fragmentation, multiple forks can continue to grow for a long time. In the long run the longest chain wins, but observers need a means to determine when a block is definitely part of the fastest-growing chain. This can be determined by seeing confirmation from 2/3+1 of the block producers.

In the figure below, block B has been confirmed by C and A, which represents 2/3+1 confirmation, so we can infer that no other chain could be longer if 2/3 of our producers are honest.

Note that this "rule" is similar to Bitcoin's 6-block confirmation "rule". Some clever individuals can devise a sequence of events in which two nodes end up on different last irreversible blocks. This edge case requires an attacker to have complete control over communication delays and to use that control not once but twice, minutes apart. If this happens, the long-term rule of the longest chain still applies. We estimate that the probability of such an attack is close to zero, and the economic consequences so insignificant, that it is not worth worrying about.
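One way to read the 2/3+1 rule is sketched below in Go. It is a simplification under stated assumptions: a block counts as confirmed by its own producer and by every producer that later built on top of it, and `lastIrreversible` is a hypothetical helper rather than code from any DPOS implementation.

```go
package main

import "fmt"

// lastIrreversible returns the index of the newest block that has been built
// on by at least 2n/3+1 distinct producers (its own producer included),
// or -1 if no block qualifies. chain[i] names the producer of block i.
func lastIrreversible(chain []string, n int) int {
	threshold := 2*n/3 + 1
	for i := len(chain) - 1; i >= 0; i-- {
		seen := map[string]bool{}
		for _, p := range chain[i:] {
			seen[p] = true
		}
		if len(seen) >= threshold {
			return i
		}
	}
	return -1
}

func main() {
	// Block 1 was produced by B, then C and A built on top of it.
	chain := []string{"A", "B", "C", "A"}
	fmt.Println(lastIrreversible(chain, 3))
}
```

With three producers the threshold is 3, so in this chain the block produced by B (index 1) is the newest one that B, C, and A have all built on.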

Lack of a producer quorum

In the absence of a clear producer quorum, a minority may continue to produce blocks. In these blocks, stakeholders can include transactions that change their votes. These votes can then select a new set of producers and restore block production to 100%. Once this happens, the minority chain will eventually overtake all other chains that have less than 100% participation.

During this process, all observers will know that the state of the network is in flux until a chain emerges with 67% participation. Those who choose to transact under these conditions take a risk similar to those who accept fewer than 6 confirmations. They do so knowing that there is a small probability that consensus may eventually settle on a different fork. In practice, this situation is much safer than accepting blocks with fewer than 3 Bitcoin confirmations.

Corruption of the majority of producers

If a majority of producers become corrupt, they can produce an unlimited number of forks, each of which appears to advance with a 2/3 majority. In this case, the last irreversible block algorithm reverts to the longest chain algorithm. The longest chain will be the one approved by the largest majority, which will be decided by the minority of remaining honest nodes. This behavior will not last long, because stakeholders will eventually vote to replace these producers.

Transactions as Proof of Stake (TaPoS)

When users sign a transaction, they do so under certain assumptions about the state of the blockchain. These assumptions are based on their view of recent blocks. If the consensus on the longest chain changes, it may invalidate the assumptions under which the signer consented to the transaction.

With TaPoS, every transaction includes the hash of a recent block and is considered invalid if that block does not exist in the chain history. Anyone who signs a transaction while on an orphaned fork will find the transaction invalid and unable to migrate to the main fork.

A side effect of this process is security against long-range attacks that attempt to build alternative chains. Each stakeholder directly confirms the blockchain with every transaction. Over time, all blocks are confirmed by all stakeholders, and this cannot be replicated in a forged chain.
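The TaPoS check can be illustrated with a short Go sketch. The types `Block` and `Transaction` and the function `validOn` are hypothetical and heavily simplified (a real chain would index block hashes and limit how old the referenced block may be).

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// Block is a simplified block used only for this illustration.
type Block struct {
	Height int
	Data   string
}

// Hash returns the SHA-256 digest of the block contents.
func (b Block) Hash() [32]byte {
	return sha256.Sum256([]byte(fmt.Sprintf("%d|%s", b.Height, b.Data)))
}

// Transaction carries the hash of a recent block that the signer saw.
type Transaction struct {
	Payload  string
	RefBlock [32]byte
}

// validOn reports whether tx references a block that exists on the given chain.
func validOn(chain []Block, tx Transaction) bool {
	for _, b := range chain {
		if b.Hash() == tx.RefBlock {
			return true
		}
	}
	return false
}

func main() {
	mainFork := []Block{{1, "genesis"}, {2, "main"}}
	orphanFork := []Block{{1, "genesis"}, {2, "orphan"}}

	// Signed by a user who only saw the orphaned fork.
	tx := Transaction{Payload: "pay 5", RefBlock: orphanFork[1].Hash()}
	fmt.Println("valid on orphaned fork:", validOn(orphanFork, tx))
	fmt.Println("valid on main fork:    ", validOn(mainFork, tx))
}
```

Because the transaction references a block that exists only on the orphaned fork, it cannot be replayed on the main fork.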

Deterministic producer shuffling

In all the examples above, we showed round-robin scheduling of block producers. In reality, the set of block producers is shuffled every n blocks, where n is the number of producers. This randomization ensures that block producer B does not always ignore block producer A, and that whenever there are multiple forks with the same number of producers, the tie is eventually broken.
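A deterministic shuffle can be sketched as below. The choice of seed is an assumption made for illustration (the first 8 bytes of a block hash); the text does not say which value MGC TOKEN or any DPOS chain actually uses, and `shuffleProducers` is a hypothetical helper.

```go
package main

import (
	"crypto/sha256"
	"encoding/binary"
	"fmt"
	"math/rand"
)

// shuffleProducers returns a new production order derived only from the given
// block hash, so every node computes the same order without communicating.
func shuffleProducers(producers []string, blockHash [32]byte) []string {
	seed := int64(binary.BigEndian.Uint64(blockHash[:8]))
	r := rand.New(rand.NewSource(seed))
	out := append([]string(nil), producers...) // copy; leave the input untouched
	r.Shuffle(len(out), func(i, j int) { out[i], out[j] = out[j], out[i] })
	return out
}

func main() {
	producers := []string{"A", "B", "C", "D", "E"}
	h := sha256.Sum256([]byte("last block of the previous round"))
	fmt.Println(shuffleProducers(producers, h))
	fmt.Println(shuffleProducers(producers, h)) // same seed, same order
}
```

Since the order depends only on data every node already has, all honest nodes agree on the schedule for the next round.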

DPOS is robust under every conceivable natural network disruption, and remains secure even when a substantial minority of producers is corrupt. Unlike some competing algorithms, DPOS can continue to function when a majority of producers fail. During that time the community can vote to replace the failed producers until 100% participation is restored. I know of no other consensus algorithm that is robust under such a wide variety of failure conditions. Ultimately, DPOS derives significant security from the process that selects the block producers and verifies that the nodes are of high quality and operated by unique individuals. Approval voting ensures that even someone with 50% of the active voting power cannot select a single producer on their own. DPOS is designed to optimize performance under the nominal condition of 100% participation by honest nodes with robust network connections. This allows DPOS to confirm transactions with 99.9% certainty within seconds on average, while degrading in a graceful, detectable manner that is trivial to recover from. Other consensus algorithms design for the nominal condition of dishonest nodes with poor network conditions. The end result of those alternative designs is a network with slower performance, higher latency, and higher communication overhead that halts completely if 33% of the nodes fail.

DPOS successfully navigated the environment and demonstrated its ability to maintain consistency while handling more transactions.

DPOS penalties for malicious nodes

Registering as a candidate super node requires a deposit (about 10 XTS). It usually takes about two weeks of block production to break even, which promotes the stability of the delegates and ensures that they produce blocks for at least two weeks.

DPOS penalizes a malicious node as follows: a super node that does not produce its block on schedule is voted out in the next round and its deposit is confiscated.

A malicious node can misbehave only for a short period, and a malicious block survives only briefly. The super nodes soon return to the consensus reached by the honest nodes and build the longest chain, which does not contain the malicious block.

Advantages and disadvantages of the DPOS algorithm

Advantages of the DPOS algorithm:

A. Energy consumption advantage, low network operation cost.

B. In theory, it is more decentralized.

C. Faster confirmation. Blocks are produced within seconds and transactions are confirmed within minutes.

Disadvantages of the DPOS algorithm:

A. Bad nodes cannot be dealt with immediately; they can only be removed through an election.

B. Small holders have little incentive to vote.

C. It relies on tokens.

Simple code for DPOS

In the project's main.go, four super nodes are simulated by running four terminals on different ports.

Its main logic is:

- Listen on TCP on the given port.

- Create a new blockchain based on the port number and store it on the super node.

- Get lastHeight and the number of delegates from the newly created blockchain.

- Build a delegate object from nodeVersion, lastHeight, nodeAddress, and numberDelegate.

- Add the newly created delegate object to the database.

- If this is not the first node to start, announce it to the others as a new principal node:

sendDelegates(blockchain,numberDelegate+1,delegate)

- After adding the principal node, start a goroutine that produces blocks in rounds:

go Forks(blockchain)

- Process the incoming TCP connections.

The Delegates structure describes the address of the node itself, records how many principal nodes there are, and stores the block height at the time of the last block.
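The original listing of the structure is not reproduced here, so the following Go sketch is only a guess at its shape. The field names are assumptions derived from the four values named in the text (nodeVersion, lastHeight, nodeAddress, numberDelegate), and `NewDelegate` is a hypothetical constructor.

```go
package main

import "fmt"

// Delegates is a hypothetical reconstruction; the field names are assumptions.
type Delegates struct {
	Version    int    // nodeVersion
	LastHeight int64  // block height when this node last saw a block
	Address    string // nodeAddress, for example "localhost:3000"
	NumPeer    int    // numberDelegate: how many principal nodes are known
}

// NewDelegate builds a delegate record from the four values named in the text.
func NewDelegate(version int, lastHeight int64, address string, numPeer int) *Delegates {
	return &Delegates{
		Version:    version,
		LastHeight: lastHeight,
		Address:    address,
		NumPeer:    numPeer,
	}
}

func main() {
	d := NewDelegate(1, 0, "localhost:3000", 1)
	fmt.Printf("%+v\n", *d)
}
```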

Next, we analyze how a new principal node is added.

The main logic is to obtain the list of existing principal nodes and traverse it. A message is sent to every node other than the one being added.

Sending a message is simple: the message carries the address of the principal node it is sent to, followed by the command that the receiver should execute.

A goroutine in StartServer() listens for these messages and executes the command once a message arrives. Let's see how a new principal node is added on the receiving side.

The main logic is to read the delegate data from the request, deserialize it into a delegate object, and update bestHeight. The new principal node is then written to the database and the latest number of delegates is read back. If the write succeeds, the node broadcasts again, until its LastHeight is synchronized.

Next, focus on how the Forks() method lets the principal nodes take turns producing blocks.

This method runs an infinite loop that executes one block-production cycle after another. A single cycle can be analyzed in detail.

The main logic is as follows:

- Get all the principal nodes and check whether there are more than 3 of them. If there are not enough, wait. This number is configurable.

- Once the principal is ready, get the latest block.

- Determine whether this principal node is the first principal node that was started. If so, it is responsible for producing the block (this could be changed to round-robin production).

if nodeAddress == knownNodes[0] {

- Then check, by block height, whether the other principal nodes have the latest block, and count the proportion of principal nodes that do. If it exceeds 2/3, the block can be produced.

- After the block is finished, it is necessary to broadcast. There is also a traversal update of the principal node state.
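The 2/3 check in the block cycle above can be sketched as follows. The `Delegate` type and `canProduce` function are hypothetical names; the sketch only shows the counting step, not networking or block creation.

```go
package main

import "fmt"

// Delegate is a simplified view of a principal node (assumed fields).
type Delegate struct {
	Address    string
	LastHeight int64
}

// canProduce reports whether more than 2/3 of the delegates have already
// reached bestHeight, the condition the text gives for producing a block.
func canProduce(delegates []Delegate, bestHeight int64) bool {
	synced := 0
	for _, d := range delegates {
		if d.LastHeight == bestHeight {
			synced++
		}
	}
	// Integer form of synced/len > 2/3, avoiding floating point.
	return synced*3 > len(delegates)*2
}

func main() {
	delegates := []Delegate{
		{"localhost:3000", 10},
		{"localhost:3001", 10},
		{"localhost:3002", 10},
		{"localhost:3003", 9}, // still catching up
	}
	fmt.Println(canProduce(delegates, 10))
}
```

Here three of four delegates are at height 10, which exceeds 2/3, so the producing node may go ahead.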

Summary: this code does not strictly implement DPoS. The principal nodes are not shuffled after each cycle, not every principal node gets the right to produce blocks, and there is no mechanism that selects the longest chain according to the order of the principal nodes.

POS consensus algorithm

POS stands for Proof of Stake. It is a consensus mechanism designed as an upgrade to POW. According to the proportion of tokens each node holds and how long it has held them, the mining difficulty is reduced proportionally, which speeds up the search for a valid random number. To address the shortcomings of POW, Sunny King proposed POS in 2012 and released Peercoin (PPCoin), which is based on a hybrid of POW and POS. POS resembles a shareholding system: the more shares a person holds, the greater that person's rights, much like shareholders in a joint-stock company. In a blockchain, however, we do not assign actual company shares to the nodes; instead, something else plays the role of shares and is distributed among the nodes in the chain. The following examples illustrate this concept.

For example, when PoS is applied to a virtual currency, the amount of currency held can be regarded as the number of shares. MGC TOKEN includes a PoS consensus mechanism, so the number of MGC tokens a node owns measures how many shares, and how much say, that node has. Suppose an MGC network has three nodes, A, B, and C. Node A has 10000 MGC tokens, and B and C have 1000 and 2000 respectively. In this network, A's blocks are the most likely to be selected, and A has the greatest say.

As another example, suppose that in the future there is a public blockchain for a physical industry rather than a virtual currency, such as a car chain. Shares could be allocated according to the number of vehicles each owner has and their value, for example with the formula: number of cars * car value = number of shares. In PoS, shares are simply a concept that measures the right to speak.

POS features

The description above explains the concept of the POS consensus algorithm. Because POS measures the right to speak by the amount of something held, any node that holds that thing, such as MGC tokens, has a say even if it holds only one token. Its say may be very small, and it may almost never be selected, but it still has a chance.

In POS, blocks are said to be minted (the concept of "mining" belongs to POW, so that word is avoided when describing proof of stake).

This also has the following characteristics:

Advantages:

- Consensus is reached in less time, so blocks in the chain are agreed on faster;

- Large amounts of energy are no longer needed for mining;

- Cheating does not pay: if a person holding more than 51% of the shares cheats, he harms himself, because as the largest shareholder he loses the most from cheating.

Disadvantages:

- The attack cost is low: any node that holds enough of the staked item, such as tokens, can launch an attack with blocks of dirty data;

- In addition, nodes with many tokens have a higher probability of obtaining the bookkeeping right, so network consensus may be dominated by a few wealthy accounts and lose its fairness.

MGC TOKEN’s POS proof calculation formula is:

Proofhash < currency age x target value

Expand as follows:

Hash(nStakeModifier + txPrev.block.nTime + txPrev.offset + txPrev.nTime + txPrev.vout.n + nTime) < bnTarget x bnCoinDayWeight

Here proofhash is the hash of a set of data, namely Hash(nStakeModifier + txPrev.block.nTime + txPrev.offset + txPrev.nTime + txPrev.vout.n + nTime).

The currency age is bnCoinDayWeight, measured in coin-days: the number of coins held multiplied by the number of days they have been held. The number of days is capped at 90.

The target value, bnTarget, measures the difficulty of POS mining. The target value is inversely proportional to the difficulty: the larger the target value, the lower the difficulty, and vice versa.

Therefore, the more currency is held (and the longer it is held, up to the cap), the greater the chance of minting a block.
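The inequality above can be sketched in Go with `math/big`. This is an illustration under stated assumptions, not the MGC TOKEN source: every kernel field is serialized here as a little-endian 64-bit integer for simplicity, the hash is taken as double SHA-256, and the sample field values and target are made up.

```go
package main

import (
	"crypto/sha256"
	"encoding/binary"
	"fmt"
	"math/big"
)

// kernelHash double-SHA256 hashes the concatenated kernel fields
// (simplified serialization: each field as 8 little-endian bytes).
func kernelHash(fields ...uint64) *big.Int {
	buf := make([]byte, 0, 8*len(fields))
	var tmp [8]byte
	for _, f := range fields {
		binary.LittleEndian.PutUint64(tmp[:], f)
		buf = append(buf, tmp[:]...)
	}
	first := sha256.Sum256(buf)
	second := sha256.Sum256(first[:])
	return new(big.Int).SetBytes(second[:])
}

// coinDayWeight is coins multiplied by days held, with days capped at 90.
func coinDayWeight(coins, daysHeld int64) *big.Int {
	if daysHeld > 90 {
		daysHeld = 90
	}
	return big.NewInt(coins * daysHeld)
}

// meetsTarget checks proofhash < bnTarget x bnCoinDayWeight.
func meetsTarget(hash, target, weight *big.Int) bool {
	limit := new(big.Int).Mul(target, weight)
	return hash.Cmp(limit) < 0
}

func main() {
	// Made-up values for nStakeModifier, block time, offset, tx time, vout index, nTime.
	hash := kernelHash(12345, 1600000000, 80, 1600000100, 0, 1600864000)
	target := new(big.Int).Lsh(big.NewInt(1), 240) // hypothetical bnTarget
	for _, coins := range []int64{1, 100, 10000} {
		w := coinDayWeight(coins, 120) // 120 days is capped to 90
		fmt.Printf("coins=%d weight=%s ok=%v\n", coins, w, meetsTarget(hash, target, w))
	}
}
```

A larger coin-day weight raises the right-hand side of the inequality, so the same hash is more likely to fall below it.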

Write POS code

To make this easier to understand, we implement it in pseudocode.

First, we use a candidate block array to store the block objects that each node generates and broadcasts: candidateBlocks []Block (candidate block array)

Each block structure has a field that records the address of the node that generated the block.

type Block struct {

Timestamp string // time at which the block was generated

Hash string // hash of this block

PrevHash string // hash of the previous block

NodeAddress string // address of the node that generated this block

Data string // data carried by the block

}

Then a separate thread traverses the candidate block array, looks up the token amount for the node address in each block, and allocates the stake accordingly.

stakeRecord := []string{} // lottery pool: one entry per token held

for _, block := range candidateBlocks {

if contains(stakeRecord, block.NodeAddress) { // node is already in the pool

continue // do not add it again

}

coinNum := getCoinBalance(block.NodeAddress) // token amount of the node

for i := 0; i < coinNum; i++ { // add one entry per token

stakeRecord = append(stakeRecord, block.NodeAddress)

}

}

Then select a winner from stakeRecord. The probability of winning is proportional to coinNum above: the more tokens, the greater the chance.

index := rand.Intn(len(stakeRecord)) // random index into the pool

winner := stakeRecord[index] // address of the winning node

Finally, we take the block generated by the winner, append it to the public chain, and broadcast it.

for _, block := range candidateBlocks {

if block.NodeAddress == winner {

// append the block to the chain

}

}

// broadcast the new chain

…

The above is a very simple implementation of the POS mechanism, in which stake is allocated purely by the number of coins. In practice things are more complicated, and stake allocation depends on more than the token amount, so many variations are possible. For example, MGC TOKEN also takes coin age into account, and after a candidate wins, MGC TOKEN deducts the coin age consumed at that step. All of these rules can be changed.
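For readers who want to run the idea, here is a self-contained Go sketch of the token-weighted lottery described in this section. The balances reuse the A/B/C example from earlier; `pickWinner` and the `draw` parameter are hypothetical names, and the random source is injected so the selection can be tested deterministically.

```go
package main

import (
	"fmt"
	"math/rand"
)

// Block is reduced to the fields this sketch needs.
type Block struct {
	NodeAddress string
	Data        string
}

// pickWinner builds a lottery pool with one entry per token held by each
// candidate's node and returns the block whose entry is drawn.
// draw(n) must return an index in [0, n).
func pickWinner(candidates []Block, balance map[string]int, draw func(n int) int) (Block, bool) {
	var pool []int // indexes into candidates
	seen := map[string]bool{}
	for i, b := range candidates {
		if seen[b.NodeAddress] {
			continue // each node enters the pool only once
		}
		seen[b.NodeAddress] = true
		for k := 0; k < balance[b.NodeAddress]; k++ {
			pool = append(pool, i)
		}
	}
	if len(pool) == 0 {
		return Block{}, false // nobody holds any stake
	}
	return candidates[pool[draw(len(pool))]], true
}

func main() {
	balance := map[string]int{"A": 10000, "B": 1000, "C": 2000}
	candidates := []Block{{"A", "block from A"}, {"B", "block from B"}, {"C", "block from C"}}

	r := rand.New(rand.NewSource(1))
	wins := map[string]int{}
	for i := 0; i < 1000; i++ {
		if w, ok := pickWinner(candidates, balance, r.Intn); ok {
			wins[w.NodeAddress]++
		}
	}
	fmt.Println(wins) // A is expected to win most rounds
}
```

Building a pool with one entry per token is easy to follow but memory-hungry; a production design would more likely draw a random number below the total stake and walk the cumulative balances.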

POS mining process

To explain the mining process, we start the analysis at "Step 11: start node" of the AppInit2() function in init.cpp. The source code shows that MGC TOKEN is also based on the Bitcoin source code: the client startup process is the same as Bitcoin's, and so is the code structure. Let's look at the last line of code in step 11, which starts the mining process of MGC TOKEN:

Start POS mining (picture 1)

Here we start POS mining by calling MintStake, we now enter this function in main.cpp.

Call ThreadStakeMinter (Picture 2)

This function creates a new thread ThreadStakeMinter for POS mining.

Call BitcoinMiner (Picture 3)

Here you can see that the BitcoinMiner function is called. There are two parameters, pwallet and true, which represent the current wallet class and POS mining, respectively. Let’s look at this function header:

void BitcoinMiner(CWallet *pwallet, bool fProofOfStake)

fProofOfStake indicates whether POS mining is being performed. MGC TOKEN combines three mining methods: POW, POS, and DPOS. The default after startup is POS, because the value passed for this parameter at startup is true.

BitcoinMiner covers two mining methods, POW and POS. Here we focus on POS. POS mining also starts by building a new block with CreateNewBlock(). This introduces a new concept: Coinstake. To implement POS, MGC TOKEN uses a special transaction called Coinstake, whose design follows Satoshi Nakamoto's Coinbase. In essence, Coinbase and Coinstake are both transactions, but with hard restrictions on their inputs and outputs. Bitcoin stipulates that the first transaction in each block must be the Coinbase, and that a Coinbase cannot appear anywhere else in the block. To preserve this rule, MGC TOKEN requires the Coinstake to be the second transaction, and a Coinstake likewise cannot appear anywhere else. In other words, if the second transaction is a Coinstake, the block is treated as a POS block.

Now let’s go into CreateNewBlock() and look at the code inside. In fact, most of them are the same as Bitcoin:

Create a new block template (image 4)

Create coinStake (Picture 5)

In this code, the condition for creating a CoinStake is that the current search time is later than the time of the previous search (nSearchTime > nLastCoinStakeSearchTime). Because nLastCoinStakeSearchTime is a static variable, it is initialized only once, so the difference between two successive search times can be calculated. The CoinStake is created by the wallet-class function in wallet.cpp, CreateCoinStake(const CKeyStore& keystore, unsigned int nBits, int64 nSearchInterval, CTransaction& txNew). Its parameters are the wallet key store, the mining difficulty value, the search interval, and the transaction object.

Create a transaction (Picture 6)

Cycling mining (Picture 7)

The term "mining" is borrowed here from POW. POS has a similar calculation process, but it is not an unbounded mining loop: it is limited by an interval, namely the difference between the previous search time and the current one, which cannot exceed 60 seconds. The function that decides whether mining has succeeded is CheckStakeKernelHash. Before entering it, we introduce a new concept: the Kernel. As described above, in a POS block the first transaction is still the Coinbase and, to preserve the existing rules, the second transaction is the Coinstake, which marks the block as a POS block. The first input of the Coinstake is called the Kernel, and finding a valid Kernel is the goal of POS mining. Now let's see how CheckStakeKernelHash looks for the Kernel. This function is in Kernel.cpp. Look first at how its arguments are passed. Pay special attention to the sixth parameter, txNew.nTime - n, which is the transaction time minus a number of seconds in the past; this allows the stake to be calculated more precisely.


Call CheckStakeKernelHash (Picture 8)

Calculate hash and equity adjusters (Picture 9)

Here we see the variable nStakeModifier. Under the V03 protocol, the first item in the hashed data is nStakeModifier; otherwise it is nBits. The value of nStakeModifier is obtained by calling GetKernelStakeModifier. nStakeModifier is a modifier designed specifically for POS. Without it, a person who receives coins could, as soon as the transaction is confirmed by the network, calculate in advance when he will be able to mint a block in the future. This is clearly contrary to the design goal: Sunny King wanted POS miners, like POW miners, to search blindly and maintain the blockchain in real time. nStakeModifier is designed to prevent POS miners from calculating ahead of time. It can be understood as an attribute of the POS block: every block has an nStakeModifier value, but the value does not change with every block. Instead, the protocol requires it to be recalculated once every Modifier Interval. The new value depends on the previous nStakeModifier and the latest block hash, so a POS miner cannot calculate it in advance because he does not know future block hashes.

POS mining is based on the balance and the holding time.

Its formula is: Target * Balance > F(Timestamp)

Compared with POW, the search space on the right side of the formula changes from the nonce to the timestamp. The nonce range is practically unlimited, whereas the timestamp is extremely limited: the block time of a valid block must fall within a specified range of the previous block time, and blocks that are too early or too far ahead will not be accepted by other nodes. The target value on the left side of the formula introduces the balance as a multiplicative factor. The larger the balance, the larger the effective target (Target * Balance) and the easier it is to find a block. Because the timestamp is limited, the success rate of minting POS blocks depends mainly on the balance. The final formula in MGC TOKEN is: SHA256D(nStakeModifier + txPrev.block.nTime + txPrev.offset + txPrev.nTime + txPrev.vout.n + nTime) < bnTarget * bnCoinDayWeight

The above is the mining process of MGC TOKEN POS.

POW algorithm

POW (Proof of Work) is also known as mining. Proof of work consists of finding a value (the nonce) by computation such that the hash of the nonce combined with the transaction data falls below a specified upper limit. Because finding such a hash requires a large amount of computation under the current computing model, only a few valid proposals can appear in the system within a given period. Producing a valid proposal proves that its proposer has indeed performed a certain amount of work. Hashcash is a proof-of-work mechanism that Adam Back invented in 1997 to resist denial-of-service attacks and spam.

Principle of POW algorithm

The main feature of proof of work is that the client must do work of a certain difficulty to obtain a result, while the verifier can easily check from the result whether the client has done the work. A core feature of a proof-of-work scheme is asymmetry: the work is moderately hard for the requester but easy for the verifier to check.

Given a base string, append an integer value called the nonce and compute the SHA256 hash of the resulting string. If the hash (in hexadecimal notation) starts with a required prefix (such as "0000"), verification passes. To achieve the proof of work, the nonce is incremented repeatedly and the SHA256 hash of each new string is computed until a match is found.

Because the base string differs from case to case, the number of SHA256 computations needed to find a hash with the required prefix is not fixed for a given base string, but it is a probabilistic event that follows a statistical law.

Since hash values behave pseudo-randomly, about 2^16 attempts are expected to be needed to obtain a hash with four leading hexadecimal zeros.
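The nonce search just described can be written as a short Go program. The function name `mine` and the sample base string are illustrative choices; the nonce is appended in decimal, which is one of several possible encodings.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"strconv"
	"strings"
)

// mine appends an incrementing nonce to base until the hex-encoded SHA-256
// digest starts with prefix, then returns the nonce and the digest.
func mine(base, prefix string) (int, string) {
	for nonce := 0; ; nonce++ {
		sum := sha256.Sum256([]byte(base + strconv.Itoa(nonce)))
		digest := hex.EncodeToString(sum[:])
		if strings.HasPrefix(digest, prefix) {
			return nonce, digest
		}
	}
}

func main() {
	// Four leading hex zeros: about 16^4 = 2^16 attempts are expected.
	nonce, digest := mine("Hello, World!", "0000")
	fmt.Println(nonce, digest)
}
```

Verifying the result takes a single hash of the base string plus the returned nonce, which is the asymmetry the section describes.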

MGC TOKEN’s POW implementation

The MGC TOKEN block consists of the block header and the list of transactions contained in the block. The block header size is 80 bytes, and its composition includes:

4 bytes: version number

32 bytes: the hash value of the previous block

32 bytes: Merkle root hash of the transaction list

4 bytes: current timestamp

4 bytes: current difficulty value

4 bytes: random number Nonce value

The 80-byte block header is the input string of the MGC TOKEN POW algorithm.

The transaction list is appended to the block header. Its first transaction is a special transaction through which the miner receives the block reward and the transaction fees.

The proof-of-work process repeatedly adjusts the nonce and applies a double SHA256 hash to the block header until the resulting hash has the required number of leading zeros. The number of leading zeros depends on the mining difficulty: the more leading zeros required, the harder it is to mine.
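The header layout and the double hash can be sketched in Go as follows. The struct and method names are hypothetical, and little-endian field encoding is assumed because that is what Bitcoin uses; the sample field values are illustrative only.

```go
package main

import (
	"bytes"
	"crypto/sha256"
	"encoding/binary"
	"fmt"
)

// BlockHeader mirrors the six fields listed in the text (80 bytes in total).
type BlockHeader struct {
	Version    int32    // 4 bytes
	PrevHash   [32]byte // 32 bytes
	MerkleRoot [32]byte // 32 bytes
	Timestamp  uint32   // 4 bytes
	Bits       uint32   // 4 bytes: compact difficulty value
	Nonce      uint32   // 4 bytes
}

// Serialize encodes the header as 80 bytes (little-endian integers assumed).
func (h BlockHeader) Serialize() []byte {
	var buf bytes.Buffer
	if err := binary.Write(&buf, binary.LittleEndian, h); err != nil {
		panic(err) // cannot happen for fixed-size fields
	}
	return buf.Bytes()
}

// Hash applies SHA-256 twice to the serialized header.
func (h BlockHeader) Hash() [32]byte {
	first := sha256.Sum256(h.Serialize())
	return sha256.Sum256(first[:])
}

func main() {
	h := BlockHeader{Version: 1, Timestamp: 1231006505, Bits: 0x1d00ffff}
	fmt.Println("header size:", len(h.Serialize()))
	for h.Nonce = 0; h.Nonce < 3; h.Nonce++ {
		fmt.Printf("nonce=%d hash=%x\n", h.Nonce, h.Hash())
	}
}
```

A miner would keep incrementing `Nonce` (and, when it overflows, change the timestamp or the transaction list) until the hash meets the difficulty requirement.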

A new difficulty is calculated after every 2016 blocks, and the next 2016 blocks use the new difficulty. The calculation steps are as follows:

A. Find the first block of the previous 2016 blocks and calculate the time it took to generate these 2016 blocks.

That is, the time difference between the last block and the first block. The time difference is clamped to no less than 3.5 days and no more than 56 days.

B. Calculate the sum of the difficulty of the previous 2016 blocks, that is, the difficulty of a single block x the total time.

C. Calculate the new difficulty: the sum of the difficulty of the 2016 blocks divided by the number of seconds in 14 days, which gives the difficulty value per second.

D. Require that the new difficulty is not less than the minimum difficulty defined by the parameters.
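The adjustment steps can be sketched as below. In Bitcoin-derived code this scaling is applied to the target value (the inverse of difficulty), and the sketch assumes MGC TOKEN does the same; `retarget`, `pow2`, and the sample targets are hypothetical.

```go
package main

import (
	"fmt"
	"math/big"
)

const (
	targetTimespan = 14 * 24 * 60 * 60  // 14 days in seconds
	minTimespan    = targetTimespan / 4 // 3.5 days
	maxTimespan    = targetTimespan * 4 // 56 days
)

// pow2 returns 2^n as a big integer (helper for readable sample targets).
func pow2(n uint) *big.Int {
	return new(big.Int).Lsh(big.NewInt(1), n)
}

// retarget scales the old target by actual/expected time for the last 2016
// blocks, clamps the time to [3.5, 56] days, and caps the result at powLimit
// (the easiest allowed target, i.e. the minimum difficulty).
func retarget(oldTarget, powLimit *big.Int, actualSeconds int64) *big.Int {
	if actualSeconds < minTimespan {
		actualSeconds = minTimespan
	}
	if actualSeconds > maxTimespan {
		actualSeconds = maxTimespan
	}
	t := new(big.Int).Mul(oldTarget, big.NewInt(actualSeconds))
	t.Div(t, big.NewInt(targetTimespan))
	if t.Cmp(powLimit) > 0 {
		t.Set(powLimit)
	}
	return t
}

func main() {
	old, limit := pow2(200), pow2(224)
	// Blocks arrived twice as fast as intended (7 days instead of 14):
	// the target halves, so mining becomes twice as hard.
	fmt.Println(retarget(old, limit, 7*24*60*60).Cmp(pow2(199)) == 0)
}
```

A smaller target means fewer hashes qualify, so halving the target doubles the difficulty.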

Advantages and disadvantages of the POW algorithm

Advantages of the POW algorithm:

A. Complete decentralization

B. Nodes are free to enter and exit

C. High security

Disadvantages of the POW algorithm:

A. Bookkeeping rights become concentrated in the hands of capital.

B. Mining wastes a large amount of resources.

C. Network performance is low: multiple confirmations are required, forks occur easily, and blocks take a long time to reach consensus, which makes it unsuitable for commercial applications.

Principle of POW algorithm

I. Digest algorithm

The first thing to know is what a digest algorithm is. The digest algorithm is generally divided into two steps.

2. Compression

There are several concepts to keep in mind when compressing the values you just got:

a. The digest algorithm is irreversible: the original text cannot be recovered from the digest.

b. To protect the original text, some extra string is usually appended to it before hashing. This process is called salting.

c. The digest algorithm has the avalanche property: a small change to the original text causes a large change in the digest value.

d. The digest values generated from different original texts will generally be different. There is a very small probability that different original texts produce the same digest. This is called a collision.
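Property c is easy to observe with a small experiment. The sketch below hashes two inputs with SHA-256 and counts how many of the 256 digest bits differ; the function name differingBits and the sample strings are made up for the illustration.

```go
package main

import (
	"crypto/sha256"
	"fmt"
	"math/bits"
)

// differingBits returns how many bits differ between the SHA-256 digests
// of a and b.
func differingBits(a, b string) int {
	ha := sha256.Sum256([]byte(a))
	hb := sha256.Sum256([]byte(b))
	n := 0
	for i := range ha {
		n += bits.OnesCount8(ha[i] ^ hb[i])
	}
	return n
}

func main() {
	// The inputs differ in a single character.
	fmt.Println(differingBits("block 1", "block 2"))
	// Identical inputs always give identical digests.
	fmt.Println(differingBits("block 1", "block 1"))
}
```

For a good digest algorithm the first number is typically close to half of the 256 bits, even though only one input character changed.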

II. Generating a special digest value

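package main

import (
	"crypto/sha256"
	"fmt"
	"strings"
)

func main() {
	for i := 0; i < 100; i++ {
		// string(rune(i)) appends the character with code point i, not the
		// decimal digits of i; this keeps the behavior of the original string(i).
		data := "[email protected]" + string(rune(i))
		c := getSha256Code(data)
		// Stop at the first digest whose hex form starts with "0".
		index := strings.Index(c, "0")
		if index == 0 {
			fmt.Println(c, i)
			break
		}
	}
}

// getSha256Code returns the SHA-256 digest of s as a hex string.
func getSha256Code(s string) string {
	h := sha256.New()
	h.Write([]byte(s))
	data := h.Sum(nil)
	hex := fmt.Sprintf("%x", data)
	return hex
}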

Printed output:

069896a4318eca6ef060f14db5b967d5dab38233d6e9ca2a3d8bdaf3a8c88398 28

When i is 28, a digest starting with 0 is generated. Suppose we instead require a digest value starting with six zeros; the computer might then need about ten minutes of calculation. The time this calculation takes reflects the computing power of your computer. A more powerful computer will find such a digest value first, and the PoW algorithm uses this value as the digest of the latest block.

III. The digest chain

The hash generated from the previous hash value + the current block content + a random number is the hash value of the latest block. The chain formed by these hash values is the digest chain. This is roughly the principle behind PoW.
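The chaining rule can be sketched in a few lines. The Block type, the "|" separator and the helper names below are hypothetical and chosen only for the illustration; a real chain hashes a binary header rather than a formatted string.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// Block is a toy block: previous hash + content + nonce determine Hash.
type Block struct {
	PrevHash string
	Content  string
	Nonce    uint64
	Hash     string
}

// hashBlock hashes the previous hash, the content and the nonce together.
func hashBlock(prevHash, content string, nonce uint64) string {
	sum := sha256.Sum256([]byte(fmt.Sprintf("%s|%s|%d", prevHash, content, nonce)))
	return hex.EncodeToString(sum[:])
}

// appendBlock links a new block to the last block of the chain.
func appendBlock(chain []Block, content string, nonce uint64) []Block {
	prev := ""
	if len(chain) > 0 {
		prev = chain[len(chain)-1].Hash
	}
	return append(chain, Block{prev, content, nonce, hashBlock(prev, content, nonce)})
}

// verifyChain recomputes every hash and checks every link.
func verifyChain(chain []Block) bool {
	prev := ""
	for _, b := range chain {
		if b.PrevHash != prev || b.Hash != hashBlock(b.PrevHash, b.Content, b.Nonce) {
			return false
		}
		prev = b.Hash
	}
	return true
}

func main() {
	var chain []Block
	chain = appendBlock(chain, "genesis", 0)
	chain = appendBlock(chain, "tx: a->b", 42)
	fmt.Println(verifyChain(chain)) // true
	chain[0].Content = "tampered"
	fmt.Println(verifyChain(chain)) // false
}
```

Because every hash covers the previous hash, changing any earlier block invalidates all blocks after it, which is what makes the chain tamper-evident.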

MGC TOKEN POW workload proof source code analysis

Consensus engine description

In the CPU mining part, the CpuAgent mine function calls self.engine.Seal when performing the mining operation. The engine here is the consensus engine, and Seal is one of its most important interfaces: it implements the search for the nonce value and the calculation of the hashes. This function is what guarantees that consensus is reached and that blocks cannot be forged.

In the PoW consensus algorithm, the Seal function implements the proof of work. This part of the source code is under consensus/ethash.

Consensus engine interface:

type Engine interface {
	// Author returns the address of the miner of the block, i.e. the coinbase.
	Author(header *types.Header) (common.Address, error)

	// VerifyHeader checks the block header against the consensus rules. The seal can
	// optionally be verified here, or explicitly through the VerifySeal method.
	VerifyHeader(chain ChainReader, header *types.Header, seal bool) error

	// VerifyHeaders is similar to VerifyHeader, but verifies a batch of headers.
	// It returns a quit channel used to abort the asynchronous verification
	// and a channel that delivers the results.
	VerifyHeaders(chain ChainReader, headers []*types.Header, seals []bool) (chan<- struct{}, <-chan error)

	// VerifyUncles verifies that the uncle blocks conform to the rules of the consensus engine.
	VerifyUncles(chain ChainReader, block *types.Block) error

	// VerifySeal checks the seal of the block header according to the rules of the consensus algorithm.
	VerifySeal(chain ChainReader, header *types.Header) error

	// Prepare initializes the consensus fields of the block header according to the
	// consensus engine. The changes are applied in place.
	Prepare(chain ChainReader, header *types.Header) error

	// Finalize applies all remaining state changes and assembles the final block.
	// The block header and state database may be updated here to conform to the
	// consensus rules that apply at finalization.
	Finalize(chain ChainReader, header *types.Header, state *state.StateDB, txs []*types.Transaction,
		uncles []*types.Header, receipts []*types.Receipt) (*types.Block, error)

	// Seal produces a new sealed block from the input block.
	Seal(chain ChainReader, block *types.Block, stop <-chan struct{}) (*types.Block, error)

	// CalcDifficulty is the difficulty adjustment algorithm. It returns the difficulty of the new block.
	CalcDifficulty(chain ChainReader, time uint64, parent *types.Header) *big.Int

	// APIs returns the RPC APIs provided by the consensus engine.
	APIs(chain ChainReader) []rpc.API
}

Ethash implementation analysis

Ethash structure

type Ethash struct {
	config Config

	// caches
	caches *lru // In memory caches to avoid regenerating too often
	// in-memory datasets
	datasets *lru // In memory datasets to avoid regenerating too often

	// Mining related fields
	rand *rand.Rand // Properly seeded random source for nonces
	// number of mining threads
	threads int // Number of threads to mine on if mining
	// channel used to signal updated mining parameters
	update   chan struct{} // Notification channel to update mining parameters
	hashrate metrics.Meter // Meter tracking the average hashrate

	// Test related parameters
	// The fields below are hooks for testing
	shared    *Ethash       // Shared PoW verifier to avoid cache regeneration
	fakeFail  uint64        // Block number which fails PoW check even in fake mode
	fakeDelay time.Duration // Time delay to sleep for before returning from verify

	lock sync.Mutex // Ensures thread safety for the in-memory caches and mining fields
}

Ethash is a concrete implementation of PoW. Since it works with large data sets, it holds two lru pointers. The threads field controls the number of mining threads. In test mode or fake mode, processing is simplified so that results are returned quickly.

The Author method returns the address of the miner who mined the block.

func (ethash *Ethash) Author(header *types.Header) (common.Address, error) {
	return header.Coinbase, nil
}

VerifyHeader is used to verify that the block header information conforms to the ethash consensus engine rules.

// VerifyHeader checks whether a header conforms to the consensus rules of the

// stock Ethereum ethash engine.

func (ethash *Ethash) VerifyHeader(chain consensus.ChainReader, header *types.Header, seal bool) error {
	// In ModeFullFake mode, any header is accepted
	if ethash.config.PowMode == ModeFullFake {
		return nil
	}
	// If the header is known, return without checking.
	number := header.Number.Uint64()
	if chain.GetHeader(header.Hash(), number) != nil {
		return nil
	}
	parent := chain.GetHeader(header.ParentHash, number-1)
	if parent == nil { // Failed to get the parent block
		return consensus.ErrUnknownAncestor
	}
	// further header verification
	return ethash.verifyHeader(chain, header, parent, false, seal)
}

Then look at the implementation of verifyHeader:

func (ethash *Ethash) verifyHeader(chain consensus.ChainReader, header, parent *types.Header, uncle bool, seal bool) error {
	// Make sure the extra data segment has a reasonable length
	if uint64(len(header.Extra)) > params.MaximumExtraDataSize {
		return fmt.Errorf("extra-data too long: %d > %d", len(header.Extra), params.MaximumExtraDataSize)
	}
	// Check the timestamp
	if uncle {
		if header.Time.Cmp(math.MaxBig256) > 0 {
			return errLargeBlockTime
		}
	} else {
		if header.Time.Cmp(big.NewInt(time.Now().Add(allowedFutureBlockTime).Unix())) > 0 {
			return consensus.ErrFutureBlock
		}
	}
	if header.Time.Cmp(parent.Time) <= 0 {
		return errZeroBlockTime
	}
	// Check the difficulty of the block based on the timestamp and the difficulty of the parent block.
	expected := ethash.CalcDifficulty(chain, header.Time.Uint64(), parent)
	if expected.Cmp(header.Difficulty) != 0 {
		return fmt.Errorf("invalid difficulty: have %v, want %v", header.Difficulty, expected)
	}
	// Check gas limit <= 2^63-1
	cap := uint64(0x7fffffffffffffff)
	if header.GasLimit > cap {
		return fmt.Errorf("invalid gasLimit: have %v, max %v", header.GasLimit, cap)
	}
	// Verify gasUsed <= gasLimit
	if header.GasUsed > header.GasLimit {
		return fmt.Errorf("invalid gasUsed: have %d, gasLimit %d", header.GasUsed, header.GasLimit)
	}
	// Is the gas limit within the allowable range?
	diff := int64(parent.GasLimit) - int64(header.GasLimit)
	if diff < 0 {
		diff *= -1
	}
	limit := parent.GasLimit / params.GasLimitBoundDivisor
	if uint64(diff) >= limit || header.GasLimit < params.MinGasLimit {
		return fmt.Errorf("invalid gas limit: have %d, want %d += %d", header.GasLimit, parent.GasLimit, limit)
	}
	// The block number must be the parent block number + 1
	if diff := new(big.Int).Sub(header.Number, parent.Number); diff.Cmp(big.NewInt(1)) != 0 {
		return consensus.ErrInvalidNumber
	}
	// Verify that the seal of the block meets the requirements
	if seal {
		if err := ethash.VerifySeal(chain, header); err != nil {
			return err
		}
	}
	// If all checks pass, validate the special fields of the hard forks.
	if err := misc.VerifyDAOHeaderExtraData(chain.Config(), header); err != nil {
		return err
	}
	if err := misc.VerifyForkHashes(chain.Config(), header, uncle); err != nil {
		return err
	}
	return nil
}

Structure Ethash

Let’s take a look at this structure, which is itself a specific description of the pow algorithm:

// The available mining modes
const (
	ModeNormal Mode = iota
	ModeShared   // shared mode, to avoid cache interference
	ModeTest     // test mode
	ModeFake     // fake mode
	ModeFullFake // full fake mode: no block validation at all, for speed
)

type Config struct {
	CacheDir       string
	CachesInMem    int
	CachesOnDisk   int
	DatasetDir     string
	DatasetsInMem  int
	DatasetsOnDisk int
	PowMode        Mode // the mining mode is selected here
}

type Ethash struct {
	config Config // basic PoW configuration, such as the cache path and dataset path

	caches   *lru // caches kept in memory
	datasets *lru // datasets kept in memory

	// Mining related
	rand     *rand.Rand    // random source for nonces
	threads  int           // number of mining threads
	update   chan struct{} // notifies that the mining parameters were updated
	hashrate metrics.Meter // tracks the average hash rate, for monitoring

	// Remote sealer related fields
	workCh       chan *sealTask   // Notification channel to push new work and relative result channel to remote sealer
	fetchWorkCh  chan *sealWork   // Channel used for remote sealer to fetch mining work
	submitWorkCh chan *mineResult // Channel used for remote sealer to submit their mining result
	fetchRateCh  chan chan uint64 // Channel used to gather submitted hash rate for local or remote sealer.
	submitRateCh chan *hashrate   // Channel used for remote sealer to submit their mining hashrate

	// The following fields are used in tests.
	shared    *Ethash       // Shared PoW verifier to avoid cache regeneration
	fakeFail  uint64        // Block number which fails PoW check even in fake mode
	fakeDelay time.Duration // Time delay to sleep for before returning from verify

	lock      sync.Mutex      // Ensures thread safety for the in-memory caches and mining fields
	closeOnce sync.Once       // Ensures exit channel will not be closed twice.
	exitCh    chan chan error // Notification channel to exiting backend threads
}

Since it works with large data sets, the structure holds two lru pointers, one for the caches and one for the datasets. The threads field controls the number of mining threads. In test mode or fake mode, processing is simplified so that results are returned quickly.

Consensus algorithm interface implementation

The consensus algorithm is implemented through the methods of the Engine interface. That is to say, the structure Ethash has to implement each of those methods. Because it is a concrete implementation of PoW, it also has to implement Hashrate(), a method of the PoW interface.

The specific implementation is in the consensus.go file.

Each method is explained below.

Author()

Returns the address of the miner who mined the current block.

func (ethash *Ethash) Author(header *types.Header) (common.Address, error) {

return header.Coinbase, nil

}

VerifyHeader()

Used to check whether the block header information conforms to the ethash consensus engine rules.

The verification process is summarized first. Batch block verification, uncle block checks and so on all perform similar steps. The summary below is only an outline; see the code analysis further down for details:

Check whether the engine is in ModeFullFake mode. If so, return success; otherwise continue.

Look up the block in the chain by its block number and hash. If it is found, the header is already known, so return; otherwise continue.

Look up the parent block in the chain by the parent hash and parent block number. If it is not found, return an error; otherwise continue. (At this point, the block is a new block.)

After getting the parent block, verify the new block in more detail:

Check whether the length of the extra data (header.Extra) of the block header exceeds the maximum length. (Extra lets the miner add a limited number of characters; it was also used after the DAO fork.)

Determine whether the block is an uncle block (true or false).

If the block is an uncle block, check whether the timestamp exceeds the 256-bit maximum. If so, return an error; otherwise continue.

If the block is not an uncle block, check whether the block timestamp is later than the current time plus 15 seconds (which matches a block interval of roughly 15 seconds). If so, return an error; otherwise continue.

Check whether the timestamp of the block is larger than the timestamp of the parent block. If not, return an error; otherwise continue.

Calculate the expected difficulty from the block timestamp and the parent block, and check whether it matches the difficulty recorded in the block. If it does not match, return an error; otherwise continue.

Check whether gasLimit is greater than 2^63-1. If so, return an error; otherwise continue. (The upper limit of gasLimit is therefore 2^63-1, about 9.2 x 10^18.)

Check whether the gasUsed of the block is <= gasLimit. If not, return an error; otherwise continue.

Make sure that the gasLimit of the current block is within the allowed range relative to the parent block. If it is not, return an error; otherwise continue. (This also shows that gasLimit must not be less than 5000.)

If the block number minus the parent block number is not 1, return an error.

If all checks pass, verify the special fields of the hard forks.
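The three gas-related steps can be isolated into a small, self-contained sketch. The function name checkGas is hypothetical, and the constants 1024 and 5000 are assumed values for params.GasLimitBoundDivisor and params.MinGasLimit (the text above only confirms the lower bound of 5000).

```go
package main

import (
	"errors"
	"fmt"
)

const (
	gasLimitBoundDivisor = 1024 // assumed value of params.GasLimitBoundDivisor
	minGasLimit          = 5000 // assumed value of params.MinGasLimit
	maxGasLimit          = uint64(0x7fffffffffffffff)
)

// checkGas applies the gas checks from the summary above to plain numbers.
func checkGas(parentGasLimit, gasLimit, gasUsed uint64) error {
	// gasLimit <= 2^63-1
	if gasLimit > maxGasLimit {
		return errors.New("gas limit exceeds 2^63-1")
	}
	// gasUsed <= gasLimit
	if gasUsed > gasLimit {
		return fmt.Errorf("gas used %d exceeds gas limit %d", gasUsed, gasLimit)
	}
	// |parentGasLimit - gasLimit| must stay below parentGasLimit / divisor,
	// and gasLimit must not drop below the minimum.
	diff := int64(parentGasLimit) - int64(gasLimit)
	if diff < 0 {
		diff = -diff
	}
	limit := parentGasLimit / gasLimitBoundDivisor
	if uint64(diff) >= limit || gasLimit < minGasLimit {
		return fmt.Errorf("gas limit %d out of range around %d (+-%d)", gasLimit, parentGasLimit, limit)
	}
	return nil
}

func main() {
	fmt.Println(checkGas(8000000, 8000000, 21000)) // <nil>
	fmt.Println(checkGas(8000000, 8100000, 21000)) // out of range
}
```

The range check means the gas limit can only drift by a small fraction of the parent value per block, so it changes gradually instead of jumping.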

Specific code analysis

func (ethash *Ethash) VerifyHeader(chain consensus.ChainReader, header *types.Header, seal bool) error {
	// ModeFullFake mode: do not do any verification
	if ethash.config.PowMode == ModeFullFake {
		return nil
	}
	number := header.Number.Uint64()
	// If the block number and hash can be found in the chain, the block already exists.
	if chain.GetHeader(header.Hash(), number) != nil {
		return nil
	}
	// At this point the block has not been added to the chain yet; it is a newly generated block, so try to get its parent block
	parent := chain.GetHeader(header.ParentHash, number-1)
	if parent == nil { // Unable to get the parent block, return an error
		return consensus.ErrUnknownAncestor
	}
	// After getting the parent block, further verify the block to ensure its correctness.
	// false means the header is not an uncle block
	return ethash.verifyHeader(chain, header, parent, false, seal)
}

The code above verifies the correctness of the block header (in effect, it verifies the block). Its last line calls ethash.verifyHeader(...). Note that verifyHeader starts with a lowercase letter, so it is not the method of the general consensus engine interface. Logically, it is used to verify a newly generated block in detail; its implementation was listed earlier.

This covers the header check. The summary at the beginning describes the whole verification process and can be used as a guide when implementing it. Next we look at the following consensus engine interface.

CalcDifficulty()

CalcDifficulty calculates the difficulty of the next block. Ethereum is divided into different stages (refer to: Ethereum stage description), so the difficulty is calculated differently in each stage.

As the verification method above shows, this method is also used to check whether the difficulty recorded in a block is consistent with the expected value.

This can be seen from the following code:

// The exported method delegates to the package-level function below
func (ethash *Ethash) CalcDifficulty(chain consensus.ChainReader, time uint64, parent *types.Header) *big.Int {
	return CalcDifficulty(chain.Config(), time, parent)
}

// Calculate the difficulty of the next block according to the current stage
func CalcDifficulty(config *params.ChainConfig, time uint64, parent *types.Header) *big.Int {
	next := new(big.Int).Add(parent.Number, big1)
	// Each case below corresponds to a different stage.
	switch {
	case config.IsConstantinople(next): // Third stage, Metropolis: Constantinople phase
		return calcDifficultyConstantinople(time, parent)
	case config.IsByzantium(next): // Third stage, Metropolis: Byzantium phase
		return calcDifficultyByzantium(time, parent)
	case config.IsHomestead(next): // Second stage: Homestead
		return calcDifficultyHomestead(time, parent)
	default:
		return calcDifficultyFrontier(time, parent) // First stage: Frontier
	}
}

The current stable version uses the pure PoW consensus algorithm of the Byzantium phase, which belongs to the third stage, Metropolis. So let's take a closer look at how the difficulty values for this phase are calculated.

Summarize the calculation process

The difficulty is calculated according to the Byzantium phase rules, with the value 3000000 (3 million blocks) passed to the calculator as bombDelay.

Uncle blocks are taken into account: the calculation uses the corresponding formula (given below), which depends on whether the parent block has uncles, and returns the result.

The difficulty value is never less than 131072. If the genesis block does not set a difficulty, this is also the default value.

Specific code analysis

The calculation of the next block's difficulty in the Byzantium phase of the third stage (Metropolis) begins with the following entry:

// For the origin and the rules, see: https://eips.ethereum.org/EIPS/eip-649
// 3000000 is the bombDelay argument, expressed as a number of blocks.
// It applies to what is called the difficulty bomb.
calcDifficultyByzantium = makeDifficultyCalculator(big.NewInt(3000000))

Take a look at the specific calculation code:

// makeDifficultyCalculator returns a function, which in turn returns the difficulty.
// It is the part that deals with the difficulty bomb.
// The parameter bombDelay is the block number indicating which block to start with.
// In the returned function, the first argument is the timestamp of the block, and the second is the parent block.
func makeDifficultyCalculator(bombDelay *big.Int) func(time uint64, parent *types.Header) *big.Int {
	// The calculation works from the parent block, so the delay is shifted by one block.
	bombDelayFromParent := new(big.Int).Sub(bombDelay, big1)
	return func(time uint64, parent *types.Header) *big.Int {
		// Reference: https://github.com/ethereum/EIPs/issues/100.
		…
		…
	}
}

The specific code of the calculation is not listed here. The difficulty calculation implements the following formula:

diff = (parent_diff + (parent_diff / 2048 * max((2 if len(parent.uncles) else 1) - ((timestamp - parent.timestamp) / 9), -99))) + 2^(periodCount - 2)

As you can see from the formula, the uncle blocks of the parent enter the difficulty calculation.

For clarity, the formula is organized as follows:

block_diff = parent_diff + [difficulty adjustment] + [difficulty bomb]

[difficulty adjustment] = parent_diff / 2048 * max((2 if len(parent.uncles) else 1) - (block_timestamp - parent_timestamp) / 9, -99)

[difficulty bomb] = INT(2^(periodCount - 2)), where periodCount = fake_block_number / 100000 and fake_block_number = max(0, block_number - 3000000)

Remark: between blocks 3,000,000 and 3,100,000, [difficulty bomb] = 0
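The formula can be turned into a short, runnable sketch. The function below is a simplified illustration and not the elided project code: it takes plain integers instead of *types.Header, the name nextDifficulty is made up, and it assumes blockTime is later than parentTime (the header check guarantees this).

```go
package main

import (
	"fmt"
	"math/big"
)

var (
	big1          = big.NewInt(1)
	big2          = big.NewInt(2)
	bigMinus99    = big.NewInt(-99)
	big2048       = big.NewInt(2048)   // difficulty bound divisor
	minDifficulty = big.NewInt(131072) // lower bound from the text
)

// nextDifficulty evaluates:
// parent_diff + parent_diff/2048 * max((2 if uncles else 1) - dt/9, -99) + bomb
func nextDifficulty(parentDiff int64, parentTime, blockTime, parentNumber uint64,
	parentHasUncles bool, bombDelay uint64) *big.Int {
	// x = (2 if uncles else 1) - (blockTime - parentTime) / 9, floored at -99
	x := new(big.Int).SetUint64((blockTime - parentTime) / 9)
	if parentHasUncles {
		x.Sub(big2, x)
	} else {
		x.Sub(big1, x)
	}
	if x.Cmp(bigMinus99) < 0 {
		x.Set(bigMinus99)
	}
	// diff = parent_diff + parent_diff / 2048 * x, never below the minimum
	pd := big.NewInt(parentDiff)
	y := new(big.Int).Div(pd, big2048)
	diff := new(big.Int).Add(pd, x.Mul(y, x))
	if diff.Cmp(minDifficulty) < 0 {
		diff.Set(minDifficulty)
	}
	// Difficulty bomb, computed on a block number shifted back by bombDelay.
	var fake uint64
	if bombDelayFromParent := bombDelay - 1; parentNumber >= bombDelayFromParent {
		fake = parentNumber - bombDelayFromParent
	}
	if periodCount := fake / 100000; periodCount > 1 {
		diff.Add(diff, new(big.Int).Lsh(big1, uint(periodCount-2)))
	}
	return diff
}

func main() {
	// Block found after 5 seconds: the difficulty rises by parent_diff/2048.
	fmt.Println(nextDifficulty(2048000, 100, 105, 10, false, 3000000)) // 2049000
	// Block found after 1000 seconds: the adjustment is floored at -99.
	fmt.Println(nextDifficulty(2048000, 100, 1100, 10, false, 3000000)) // 1949000
}
```

Fast blocks push the difficulty up by one step of parent_diff / 2048, slow blocks pull it down by at most 99 steps, and the bomb term only appears once periodCount exceeds 1.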

VerifyHeaders()

This method is similar to the previously mentioned VerifyHeader, except that it verifies headers in bulk.

It uses several goroutines and the amount of code is fairly large, but it is easy to follow.

func (ethash *Ethash) VerifyHeaders(chain consensus.ChainReader, headers []*types.Header, seals []bool) (chan<- struct{}, <-chan error) {
	… // In ModeFullFake nothing is processed and the method returns directly; the code is omitted here

	// Make sure each core takes part in the work
	workers := runtime.GOMAXPROCS(0) // 0 queries the current maximum number of CPUs without changing it
	if len(headers) < workers {
		workers = len(headers)
	}
	// Define the necessary variables
	var (
		inputs = make(chan int)
		done   = make(chan int, workers)
		errors = make([]error, len(headers))
		abort  = make(chan struct{})
	)
	for i := 0; i < workers; i++ { // start all workers
		go func() {
			for index := range inputs { // receive a header index (see the last comment below) and verify it
				errors[index] = ethash.verifyHeaderWorker(chain, headers, seals, index)
				done <- index
			}
		}()
	}
	errorsOut := make(chan error, len(headers)) // receives the stored errors from the select below
	… // A select handles the channel messages here. The code is a bit long but not complicated, so it is not listed.
	// It also feeds the indexes one after another into inputs, up to len(headers)
	return abort, errorsOut
}

The code above organizes the whole process with goroutines and channels. The actual header verification is executed in the ethash.verifyHeaderWorker() method, which mainly calls the verifyHeader() method described earlier and is not detailed here.
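The worker-pool idea can be shown in isolation. The sketch below is a simplified variant and not the elided select loop: it waits for all workers with a sync.WaitGroup and returns the errors as a slice, leaving out the abort channel and the ordered streaming of results. The names verifyAll and mustBeOdd are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"runtime"
	"sync"
)

// verifyAll runs check on every item using a bounded number of goroutines
// and returns the results in input order.
func verifyAll(items []int, check func(int) error) []error {
	workers := runtime.GOMAXPROCS(0)
	if len(items) < workers {
		workers = len(items)
	}
	inputs := make(chan int)
	errs := make([]error, len(items))

	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			// Each worker writes only to the slot of the index it received,
			// so no extra locking is needed.
			for index := range inputs {
				errs[index] = check(items[index])
			}
		}()
	}
	for i := range items {
		inputs <- i // hand out the indexes one by one
	}
	close(inputs)
	wg.Wait()
	return errs
}

// mustBeOdd is a stand-in for the real header check.
func mustBeOdd(n int) error {
	if n%2 == 0 {
		return errors.New("even value")
	}
	return nil
}

func main() {
	fmt.Println(verifyAll([]int{1, 2, 3, 4}, mustBeOdd))
}
```

Passing indexes rather than values through the channel lets every worker store its result at a fixed position, which keeps the output aligned with the input regardless of which worker finishes first.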

VerifyUncles()

What is an uncle block? See the figure:

[Figure: Uncle block]

This method verifies the uncle blocks of the current block. It is very similar to the block header check. See the code directly:

func (ethash *Ethash) VerifyUncles(chain consensus.ChainReader, block *types.Block) error {
	// ModeFullFake: do not verify
	if ethash.config.PowMode == ModeFullFake {
		return nil
	}
	// Currently a block can have at most two uncle blocks
	if len(block.Uncles()) > maxUncles {
		return errTooManyUncles
	}
	// Collect the uncles and ancestors; the ancestors are the blocks preceding the current block
	// ancestors stores the headers of the ancestor blocks, keyed by hash
	// uncles stores the hashes of the uncle blocks of each ancestor
	uncles, ancestors := mapset.NewSet(), make(map[common.Hash]*types.Header)
	number, parent := block.NumberU64()-1, block.ParentHash() // parent block number and hash
	for i := 0; i < 7; i++ {
		ancestor := chain.GetBlock(parent, number)
		if ancestor == nil {
			break
		}
		ancestors[ancestor.Hash()] = ancestor.Header()
		for _, uncle := range ancestor.Uncles() {
			uncles.Add(uncle.Hash())
		}
		parent, number = ancestor.ParentHash(), number-1
	}
	ancestors[block.Hash()] = block.Header() // 8 entries in total, including the block itself
	uncles.Add(block.Hash())                 // uncles is mainly used to ensure that hashes are not repeated

	// Add each uncle hash of the current block to uncles to filter duplicates,
	// then use verifyHeader to verify the uncle further
	for _, uncle := range block.Uncles() {
		// Duplicate hash check
		hash := uncle.Hash()
		if uncles.Contains(hash) {
			return errDuplicateUncle
		}
		uncles.Add(hash)
		// An uncle must not be one of the ancestors
		if ancestors[hash] != nil {
			return errUncleIsAncestor
		}
		// The parent of the uncle must be a known ancestor, but not the parent of the current block
		if ancestors[uncle.ParentHash] == nil || uncle.ParentHash == block.ParentHash() {
			return errDanglingUncle
		}
		// Detailed header check of the uncle
		if err := ethash.verifyHeader(chain, uncle, ancestors[uncle.ParentHash], true, true); err != nil {
			return err
		}
	}
	return nil
}

Here we can learn the following important information:

- The parent of an uncle block must be one of the collected ancestors of the current block, and it must not be the parent of the current block itself;

- One block has at most two uncle blocks;

- The preceding 7 blocks plus the block itself, and the uncle blocks of those ancestors, are collected separately. This helps ensure the correctness of the uncle blocks of the current block, prevents duplicates, makes sure that uncle blocks and normal blocks do not conflict, and guards against malicious operations.

Prepare()

In the pow algorithm, this interface is mainly used to fill the difficulty value in the block header, and currently has no other effect.

func (ethash *Ethash) Prepare(chain consensus.ChainReader, header *types.Header) error {

parent := chain.GetHeader(header.ParentHash, header.Number.Uint64()-1)

if parent == nil {

return consensus.ErrUnknownAncestor

}

header.Difficulty = ethash.CalcDifficulty(chain, header.Time.Uint64(), parent) //Calculate and fill the difficulty

return nil

}

Finalize()

A very important method: it rewards the confirmed block. In layman's terms, the reward is credited to the account of the block's miner and to the accounts of the uncle blocks' miners.

Note that a block can reference up to two uncle blocks. The method finally assembles the new block, which is then recorded in the db.

The code is as follows:

func (ethash *Ethash) Finalize(chain consensus.ChainReader, header *types.Header, state *state.StateDB, txs []*types.Transaction, uncles []*types.Header, receipts []*types.Receipt) (*types.Block, error) {
	// Apply the reward rules for the block and uncle accounts
	accumulateRewards(chain.Config(), state, header, uncles)
	// Compute the state root from the current db state and store it in the header
	// EIP158 hard fork, refer to: https://github.com/ethereum/EIPs/issues/158
	header.Root = state.IntermediateRoot(chain.Config().IsEIP158(header.Number))
	// Return the new block
	return types.NewBlock(header, txs, uncles, receipts), nil
}

// Specific reward implementation
func accumulateRewards(config *params.ChainConfig, state *state.StateDB, header *types.Header, uncles []*types.Header) {
	blockReward := FrontierBlockReward // basic reward, 5 eth
	if config.IsByzantium(header.Number) { // Byzantium stage, 3 eth
		blockReward = ByzantiumBlockReward
	}
	if config.IsConstantinople(header.Number) { // Constantinople stage, 2 eth
		blockReward = ConstantinopleBlockReward
	}
	// The reward logic of the uncle blocks
	reward := new(big.Int).Set(blockReward)
	r := new(big.Int)
	for _, uncle := range uncles {
		// Formula: ((uncle.Number+8)-header.Number)*blockReward/8
		// The larger the block number of the uncle block, the greater its reward
		r.Add(uncle.Number, big8)
		r.Sub(r, header.Number)
		r.Mul(r, blockReward)
		r.Div(r, big8)
		state.AddBalance(uncle.Coinbase, r) // credit the reward to the miner of the uncle block
		// Each included uncle adds an extra 1/32 of the block reward for the block's miner.
		r.Div(blockReward, big32)
		reward.Add(reward, r)
	}
	state.AddBalance(header.Coinbase, reward) // credit the total reward to the miner of the block
}

In the Byzantium stage, a block gives a reward of 3 ETH by default (2 ETH from the Constantinople stage, as the code above shows). For each uncle block included (at most two), the block's miner receives an additional 1/32 of the block reward. Once the rewards are assigned, they are permanently recorded in the chain.
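The reward arithmetic can be checked with a small standalone sketch. It reuses the two formulas from the code above on plain block numbers; the function name rewards and the variable byzantiumBlockReward (3 ETH expressed in wei) are introduced here only for illustration.

```go
package main

import (
	"fmt"
	"math/big"
)

var (
	big8  = big.NewInt(8)
	big32 = big.NewInt(32)
	// 3 ETH in wei, the Byzantium value mentioned in the code comments.
	byzantiumBlockReward = big.NewInt(3e18)
)

// rewards returns the miner reward and one reward per uncle.
// Uncle:  (uncleNumber + 8 - headerNumber) * blockReward / 8
// Miner:  blockReward + blockReward/32 for every uncle included
func rewards(blockReward *big.Int, headerNumber uint64, uncleNumbers []uint64) (*big.Int, []*big.Int) {
	miner := new(big.Int).Set(blockReward)
	header := new(big.Int).SetUint64(headerNumber)
	var uncles []*big.Int
	for _, n := range uncleNumbers {
		r := new(big.Int).SetUint64(n)
		r.Add(r, big8)
		r.Sub(r, header)
		r.Mul(r, blockReward)
		r.Div(r, big8)
		uncles = append(uncles, r)
		miner.Add(miner, new(big.Int).Div(blockReward, big32))
	}
	return miner, uncles
}

func main() {
	// Block 100 includes uncles mined at heights 99 and 98.
	miner, uncles := rewards(byzantiumBlockReward, 100, []uint64{99, 98})
	fmt.Println(miner)  // 3187500000000000000 (3.1875 ETH)
	fmt.Println(uncles) // [2625000000000000000 2250000000000000000]
}
```

An uncle one block behind receives 7/8 of the block reward and one two blocks behind receives 6/8, so older uncles are worth progressively less.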

SealHash()

The method returns the hash of a block header before it is sealed. The code is as follows:

func (ethash *Ethash) SealHash(header *types.Header) (hash common.Hash) {

hasher := sha3.NewKeccak256()

rlp.Encode(hasher, []interface{}{

header.ParentHash,

header.UncleHash,

header.Coinbase,

header.Root,

header.TxHash,

header.ReceiptHash,

header.Bloom,

header.Difficulty,

header.Number,

header.GasLimit,

header.GasUsed,

header.Time,

header.Extra,

})

hasher.Sum(hash[:0])

return hash

}

The method RLP-serializes the listed block header fields and then hashes the result. The Nonce and MixDigest fields are not in the list, since they are only filled in by the seal. Once this hash is generated, the content of the block is fixed.

VerifySeal()

VerifySeal() is based on exactly the same algorithm as Seal(). It determines whether the block has been sealed by verifying that certain attributes of the block (Header.Nonce, Header.MixDigest, etc.) are correct.

Verification can use either the cache or the full DAG dataset; using the DAG makes the validation faster.

The code is as follows:

func (ethash *Ethash) VerifySeal(chain consensus.ChainReader, header *types.Header) error {

return ethash.verifySeal(chain, header, false)

}

The work is delegated directly to the internal method verifySeal; see the comments inside:

// About fulldag: true means using the DAG, false means using the traditional cache mechanism
func (ethash *Ethash) verifySeal(chain consensus.ChainReader, header *types.Header, fulldag bool) error {
	// Fake mode: the most simplified processing, pseudo-verification
	if ethash.config.PowMode == ModeFake || ethash.config.PowMode == ModeFullFake {
		time.Sleep(ethash.fakeDelay)
		if ethash.fakeFail == header.Number.Uint64() {
			return errInvalidPoW
		}
		return nil
	}
	// Shared mode processing; not considered for the time being
	if ethash.shared != nil {
		return ethash.shared.verifySeal(chain, header, fulldag)
	}
	// Make sure the difficulty value is greater than 0
	if header.Difficulty.Sign() <= 0 {
		return errInvalidDifficulty
	}
	// Current block number
	number := header.Number.Uint64()
	var (
		digest []byte
		result []byte
	)
	if fulldag { // Use the DAG: fetch or generate the dataset
		dataset := ethash.dataset(number, true)
		if dataset.generated() {
			digest, result = hashimotoFull(dataset.dataset, ethash.SealHash(header).Bytes(), header.Nonce.Uint64())
			// The dataset is unmapped in a finalizer. Keep it alive until hashimotoFull returns, so it is not unmapped while in use.
			runtime.KeepAlive(dataset)
		} else { // Fall back to the cache
			fulldag = false
		}
	}
	// For normal verification, or if the DAG is not ready yet, use the cache
	if !fulldag {
		cache := ethash.cache(number)
		size := datasetSize(number)
		if ethash.config.PowMode == ModeTest {
			size = 32 * 1024 // The test environment uses a fixed size
		}
		// Call the hash calculation algorithm; this is the core of the hash search
		digest, result = hashimotoLight(size, cache.cache, ethash.SealHash(header).Bytes(), header.Nonce.Uint64())
		// The cache is unmapped in a finalizer. Keep it alive until hashimotoLight returns, so it is not unmapped while in use.
		runtime.KeepAlive(cache)
	}
	// Verify that the calculation results satisfy the header
	if !bytes.Equal(header.MixDigest[:], digest) {
		return errInvalidMixDigest
	}
	target := new(big.Int).Div(two256, header.Difficulty)
	if new(big.Int).SetBytes(result).Cmp(target) > 0 {
		return errInvalidPoW
	}
	return nil
}

This involves a rather complicated hash calculation (hashimotoFull and hashimotoLight). For now this is only a general overview of the overall flow, limited to the implementation of the engine interface. The other ethash methods involved are described later.
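The final comparison in verifySeal is simple enough to try on its own: the result, read as a big-endian number, must not exceed 2^256 divided by the difficulty. The sketch below covers only that comparison and does not implement hashimoto; the name meetsDifficulty and the int64 difficulty parameter are simplifications made for the example.

```go
package main

import (
	"fmt"
	"math/big"
)

// two256 is 2^256, the size of the hash space.
var two256 = new(big.Int).Lsh(big.NewInt(1), 256)

// meetsDifficulty reports whether result <= 2^256 / difficulty.
func meetsDifficulty(result []byte, difficulty int64) bool {
	if difficulty <= 0 {
		return false // mirrors the difficulty sign check in verifySeal
	}
	target := new(big.Int).Div(two256, big.NewInt(difficulty))
	return new(big.Int).SetBytes(result).Cmp(target) <= 0
}

func main() {
	low := make([]byte, 32) // the smallest possible result
	high := make([]byte, 32)
	for i := range high {
		high[i] = 0xff // the largest possible result
	}
	fmt.Println(meetsDifficulty(low, 1000000)) // true
	fmt.Println(meetsDifficulty(high, 2))      // false
}
```

Doubling the difficulty halves the target, so on average twice as many nonces have to be tried before a result falls under it.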

Seal()

Seal() implements proof of work: it tries to find a nonce value that satisfies the block difficulty. What people call mining is essentially a call to this function, which searches for a block that meets the conditions, so it is the core of the entire mining process. The method is implemented in the file sealer.go. It looks long, but it mostly uses goroutines and channels to start the miners and pass their results along:

func (ethash *Ethash) Seal(chain consensus.ChainReader, block *types.Block, results chan<- *types.Block, stop <-chan struct{}) error {
    // Fake mode: skip mining
    …
    // Shared mode: delegate to the shared instance
    …
    // Standard mode
    abort := make(chan struct{})
    ethash.lock.Lock()
    threads := ethash.threads // number of mining threads
    if ethash.rand == nil {
        seed, err := crand.Int(crand.Reader, big.NewInt(math.MaxInt64))
        if err != nil {
            ethash.lock.Unlock()
            return err
        }
        ethash.rand = rand.New(rand.NewSource(seed.Int64())) // random source for the starting nonces
    }
    ethash.lock.Unlock()
    if threads == 0 {
        threads = runtime.NumCPU() // no thread count configured: use one per CPU
    }
    if threads < 0 {
        threads = 0 // a negative value disables local mining
    }
    // Push the task to the remote sealer
    if ethash.workCh != nil {
        ethash.workCh <- &sealTask{block: block, results: results}
    }

    var (
        pend   sync.WaitGroup // used to wait for all mining goroutines to finish
        locals = make(chan *types.Block)
    )
    for i := 0; i < threads; i++ {
        pend.Add(1)
        go func(id int, nonce uint64) {
            defer pend.Done()
            ethash.mine(block, id, nonce, abort, locals) // start mining from a random nonce
        }(i, uint64(ethash.rand.Int63()))
    }

    // Wait until mining is stopped, a valid nonce is found, or the thread count changes
    go func() {
        var result *types.Block
        select {
        case <-stop:
            close(abort) // stop requested from outside: abort all mining goroutines
        case result = <-locals:
            // One miner found a valid block: deliver it and abort the others
            select {
            case results <- result:
            default:
                log.Warn("Sealing result is not read by miner", "mode", "local", "sealhash", ethash.SealHash(block.Header()))
            }
            close(abort)
        case <-ethash.update:
            // The user changed the number of mining threads: stop all miners and start again
            close(abort)
            if err := ethash.Seal(chain, block, results, stop); err != nil {
                log.Error("Failed to restart sealing after update", "err", err)
            }
        }
        // Wait for all miners to finish
        pend.Wait()
    }()
    return nil
}

This code covers the setup before mining and the way mining results are received. The mining itself happens in the mine() method, another method of ethash, which Seal() runs in several goroutines at once.
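The goroutine pattern used by Seal() can be sketched in a small standalone program. This is an illustration under stated assumptions, not the ethash implementation: SHA-256 stands in for the hashimoto function, the "difficulty" is a number of leading zero bytes, the starting nonces are fixed instead of random, and the names valid, mine and seal are chosen for this sketch only.

```go
package main

import (
	"crypto/sha256"
	"encoding/binary"
	"fmt"
	"sync"
)

// valid reports whether SHA-256(data || nonce) starts with zeroBytes zero bytes.
// SHA-256 is only a stand-in for the real PoW hash.
func valid(data []byte, nonce uint64, zeroBytes int) bool {
	buf := make([]byte, len(data)+8)
	copy(buf, data)
	binary.BigEndian.PutUint64(buf[len(data):], nonce)
	sum := sha256.Sum256(buf)
	for i := 0; i < zeroBytes; i++ {
		if sum[i] != 0 {
			return false
		}
	}
	return true
}

// mine tries nonces start, start+1, ... until one is valid or abort is closed.
func mine(data []byte, start uint64, zeroBytes int, abort <-chan struct{}, found chan<- uint64) {
	for nonce := start; ; nonce++ {
		select {
		case <-abort:
			return
		default:
		}
		if valid(data, nonce, zeroBytes) {
			select {
			case found <- nonce: // report the result
			case <-abort: // another worker was faster
			}
			return
		}
	}
}

// seal starts the given number of workers and returns the first valid nonce.
func seal(data []byte, threads, zeroBytes int) uint64 {
	abort := make(chan struct{})
	found := make(chan uint64)
	var pend sync.WaitGroup
	for i := 0; i < threads; i++ {
		pend.Add(1)
		go func(start uint64) {
			defer pend.Done()
			mine(data, start, zeroBytes, abort, found)
		}(uint64(i) << 32) // give each worker its own starting point
	}
	nonce := <-found
	close(abort) // stop the remaining workers
	pend.Wait()  // wait for all of them to exit
	return nonce
}

func main() {
	data := []byte("example header")
	nonce := seal(data, 4, 2)
	fmt.Printf("nonce=%d valid=%v\n", nonce, valid(data, nonce, 2))
}
```

As in Seal(), closing a single abort channel is enough to stop every worker, and the WaitGroup makes sure no goroutine is left running once the result has been taken.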

APIs()

This is the external RPC interface service.

func (ethash *Ethash) APIs(chain consensus.ChainReader) []rpc.API {
    // For compatibility with older versions, the same service is exposed under two namespaces
    return []rpc.API{
        {
            Namespace: "eth",
            Version:   "1.0",
            Service:   &API{ethash},
            Public:    true,
        },
        {
            Namespace: "ethash",
            Version:   "1.0",
            Service:   &API{ethash},
            Public:    true,
        },
    }
}

Hashrate()

This method is specific to the POW algorithm and is not part of the consensus engine interface. It is used to obtain the computing power (hash rate) of the current node.

func (ethash *Ethash) Hashrate() float64 {
    // Outside normal and test mode (for example in fake mode), return only the local meter
    if ethash.config.PowMode != ModeNormal && ethash.config.PowMode != ModeTest {
        return ethash.hashrate.Rate1()
    }
    var res = make(chan uint64, 1)
    select {
    // fetchRateCh is a chan chan uint64: the caller sends res as a reply channel,
    // the collected hash rate is written into res, and <-res reads it back
    case ethash.fetchRateCh <- res: // request the collected hash rate
    case <-ethash.exitCh:
        // The engine is shutting down: return only the local meter
        return ethash.hashrate.Rate1()
    }
    // Return the total computing power: local meter plus the value received through res
    return ethash.hashrate.Rate1() + float64(<-res)
}

The comments above show how the value is obtained using select and channels. This method only reports the computing power; the real accounting is done in the mine() method, where each hash calculation counts toward the computing power.

Close()

This shuts down the engine's background mining threads.

func (ethash *Ethash) Close() error {
    var err error
    ethash.closeOnce.Do(func() { // executed at most once
        // Nothing to do if the exit channel was never allocated
        if ethash.exitCh == nil {
            return
        }
        errc := make(chan error)
        ethash.exitCh <- errc // ask the background loop to stop
        err = <-errc          // wait for its answer
        close(ethash.exitCh)
    })
    return err
}
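The shutdown handshake in Close() can be reproduced with a small example. This is a sketch under assumptions: the engine type, its loop and the cleanups counter are invented for illustration, and the loop does nothing except wait for the shutdown request.

```go
package main

import (
	"fmt"
	"sync"
)

// engine is a hypothetical type with a single background loop.
type engine struct {
	closeOnce sync.Once
	exitCh    chan chan error
	cleanups  int // how many times the loop has cleaned up
}

func newEngine() *engine {
	e := &engine{exitCh: make(chan chan error)}
	go e.loop()
	return e
}

// loop waits for a shutdown request, cleans up, and reports the result.
func (e *engine) loop() {
	errc := <-e.exitCh
	e.cleanups++
	errc <- nil
}

// Close stops the loop. Calling it again is safe and does nothing.
func (e *engine) Close() error {
	var err error
	e.closeOnce.Do(func() {
		if e.exitCh == nil {
			return
		}
		errc := make(chan error)
		e.exitCh <- errc // request shutdown
		err = <-errc     // wait until the loop has finished
		close(e.exitCh)
	})
	return err
}

func main() {
	e := newEngine()
	fmt.Println(e.Close(), e.Close(), e.cleanups)
}
```

Because of sync.Once, the second call returns immediately without touching the already closed channel, so callers do not need to track whether the engine has been closed before.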

The above is a detailed description of the MGC TOKEN POW algorithm.