Introduction to Deep Learning

Neural Network

Deep learning: The term deep learning refers to training neural networks.

Single neuron: A single neuron takes an input x, computes a function of it by itself, and then outputs y.

For example, a single neuron can map the size of a house to its price:

Size (x) -> single neuron -> Price (y)

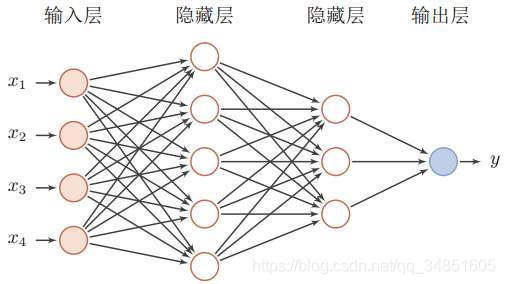
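The neuron's function is only shown in the picture. The sketch below assumes the common housing-price illustration: the neuron computes a linear function of the size and passes it through a ReLU (rectified linear unit), so the predicted price is never negative. The parameters `w` and `b` are hypothetical values chosen for illustration, not learned from data.

```python
def relu(z: float) -> float:
    """Rectified linear unit: returns z if z > 0, else 0."""
    return max(0.0, z)


def single_neuron(size: float, w: float, b: float) -> float:
    """One neuron: a linear function of the size, passed through ReLU."""
    return relu(w * size + b)


# Hypothetical parameters chosen for illustration, not learned from data.
w, b = 0.2, -50.0

print(single_neuron(100.0, w, b))   # 0.0   (w * size + b < 0, clipped by ReLU)
print(single_neuron(1000.0, w, b))  # 150.0
```

In a real model, `w` and `b` would be learned from example (size, price) pairs rather than set by hand.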

Neural network: A neural network is formed by taking many of these single neurons and stacking them together.

For example,

Each hollow circle in the picture is a single neuron, also called a hidden unit of the neural network, and the whole diagram is the neural network. Each hidden unit takes all of the input features as its input and decides by itself which of them to use. That is why we say the middle layer of this neural network is densely connected: every input feature is connected to every one of the circles in the middle.
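A densely connected layer can be sketched in plain Python. This is a minimal illustration, assuming 4 input features, 3 hidden units with ReLU, and one linear output unit; all weights, biases, and feature values are hypothetical. Each hidden unit has one weight per input feature, so it sees every input. A weight of 0 (as in the first hidden unit, which only uses the first feature) is how a unit effectively "decides" to ignore an input.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(z: float) -> float:
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)


def dense_layer(x: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Densely connected layer followed by ReLU.

    Row j of `weights` holds one weight per input feature, so hidden unit j
    receives *every* input; its weights decide how much each input matters.
    """
    return [
        relu(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
        for row, b_j in zip(weights, biases)
    ]


def predict(x: Vector, w1: Matrix, b1: Vector, w2: Vector, b2: float) -> float:
    """Hidden dense layer, then a single linear output unit (e.g. a price)."""
    hidden = dense_layer(x, w1, b1)
    return sum(w * h for w, h in zip(w2, hidden)) + b2


# Hypothetical network: 4 input features -> 3 hidden units -> 1 output.
W1 = [
    [1.0, 0.0, 0.0, 0.0],   # uses only the first feature
    [0.0, 1.0, -1.0, 0.0],  # combines the second and third features
    [0.5, 0.5, 0.5, 0.5],   # uses all four features
]
B1 = [0.0, 0.0, -1.0]
W2 = [1.0, 1.0, 2.0]
B2 = 0.5

x = [2.0, 3.0, 1.0, 0.5]  # hypothetical feature values
print(dense_layer(x, W1, B1))      # [2.0, 2.0, 2.25]
print(predict(x, W1, B1, W2, B2))  # 9.0
```

In practice the weights are not written by hand; training adjusts them so that each hidden unit learns which inputs are useful.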

Supervised Learning

Supervised learning: In supervised learning, you have some input x, and you want to learn a function that maps it to some output y.

For example,

| Input (x) | Output (y) | Application | Model |
|---|---|---|---|
| Home features | Price | Real estate | Standard neural network |
| Ad, user info | Click on ad? (0/1) | Online advertising | Standard neural network |
| Image | Object (1, …, 1000) | Photo tagging | Convolutional neural network |
| Audio | Text transcript | Speech recognition | Recurrent neural network |
| English | Chinese | Machine translation | Recurrent neural network |
| Image, radar info | Position of other cars | Autonomous driving | Custom/hybrid neural network |
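"Learning a function from x to y" can be made concrete with the smallest possible case. The sketch below fits a single linear unit, y_hat = w * x + b, to a few labelled (x, y) pairs by gradient descent on the mean squared error. The data (generated from y = 2x + 1), the learning rate, and the number of steps are all hypothetical choices; the applications in the table use far larger networks and data sets.

```python
from typing import List, Tuple


def fit_linear(xs: List[float], ys: List[float],
               lr: float = 0.05, steps: int = 2000) -> Tuple[float, float]:
    """Learn w, b minimising the mean squared error (1/m) * sum((w*x + b - y)^2)."""
    m = len(xs)
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Prediction error on every labelled training example.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        dw = 2.0 / m * sum(e * x for e, x in zip(errors, xs))
        db = 2.0 / m * sum(errors)
        # Step both parameters against their gradients.
        w -= lr * dw
        b -= lr * db
    return w, b


# Hypothetical labelled examples (x, y) generated by y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = fit_linear(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")  # learned w=2.000, b=1.000
```

The model is never told the rule y = 2x + 1; it recovers it only from the labelled examples, which is the essence of supervised learning.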

Structured Data: Structured data basically means databases of data, where each feature (each column) has a well-defined meaning.

For example,

| User Age | Id | … | number |
|---|---|---|---|
| 41 | 93292 | 3000 | |
| 32 | 93234 | 2170 | |
| 53 | 93123 | 4090 | |
| 14 | 93143 | 1300 | |
| … | … | … | … |

Unstructured Data: In contrast, unstructured data refers to things like raw audio, images (where you might want to recognize what is in the image), or text.

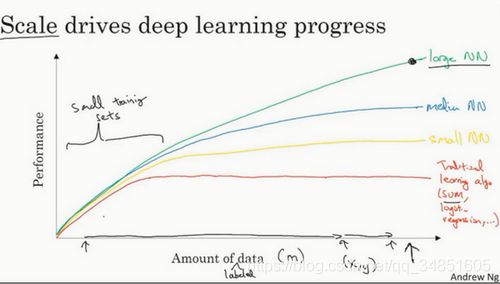
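The difference shows up in how each kind of data is represented as input. In this minimal sketch, a structured record is a mapping from named columns to values, while an image is just a grid of raw pixel intensities that gets flattened into a feature vector. The column names, values, and the tiny 3x3 image are all hypothetical, and scaling pixels to [0, 1] is a common preprocessing choice assumed here, not something the notes prescribe.

```python
from typing import Dict, List

# Structured data: each feature is a named column with a well-defined meaning.
# The column names and values are hypothetical.
structured_row: Dict[str, float] = {"user_age": 41.0, "ad_id": 93292.0}

# Unstructured data: a tiny hypothetical 3x3 grayscale image. Each number is a
# raw pixel intensity (0-255); no single pixel has a meaning on its own.
image: List[List[int]] = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 16],
]


def flatten(img: List[List[int]]) -> List[float]:
    """Turn an image into a feature vector of pixel values scaled to [0, 1]."""
    return [pixel / 255.0 for row in img for pixel in row]


structured_features = list(structured_row.values())
image_features = flatten(image)
print(len(structured_features), len(image_features))  # 2 9
```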

Scale drives deep learning progress

With a small training set, the relative ranking of the various algorithms is actually not very well defined. If you do not have a large training set, performance depends mostly on your skill at feature engineering, and that skill largely determines the final result. For example, with a small training set an SVM may perform better than a large neural network. So on the left side of the graph, the ordering between the algorithms is not clearly defined, and final performance depends more on how well you engineer features and on the details of how each algorithm is implemented. Neural networks have a consistent advantage only in the regime of very large training sets, which is the right side of the graph.

Question

Which of the following are true? (Check all that apply.)

[√] Increasing the training set size generally does not hurt an algorithm's performance, and it may help significantly.

[√] Increasing the size of a neural network generally does not hurt an algorithm's performance, and it may help significantly.

[ ] Decreasing the training set size generally does not hurt an algorithm's performance, and it may help significantly.

[ ] Decreasing the size of a neural network generally does not hurt an algorithm's performance, and it may help significantly.