Fatigue Monitoring with OpenCV

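China has roughly 6 million long-haul truck drivers, and many people in my hometown do this work. The job is exhausting and dangerous. Just a few days before this year's Spring Festival, a truck driver in his thirties from a neighboring village, driving while fatigued, rear-ended a heavy truck that was waiting at a red light and was killed. It made me think that if fatigue detection systems were widely deployed, many lives might be saved. This article explains how to use OpenCV, together with dlib's facial landmark predictor, to detect whether the eyes are closed and raise a fatigue alarm.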

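Song Dandan: How many steps does it take to put an elephant in the refrigerator?

Zhao Benshan: How many?

Song Dandan: Three! One, open the door. Two, stuff the elephant in. Three, close the door.

The code here also takes three steps, and it is just as direct and clear.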

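1. Open the camera, grab each frame, and detect the position of the face.

2. Once a face is detected, extract its facial landmarks (68 points) and pick out the coordinates that belong to the eyes.

3. Compute the eye aspect ratio (height relative to width), which measures how closed the eye is, and decide whether to raise the alarm based on that ratio and how long it persists.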

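In the actual project we implemented this in C++; here, for ease of experimentation, the demonstration uses Python. As an aside, the actual project also took into account how wide the mouth is open, that is, it detected yawning.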
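The yawning check is not shown in this article. As a rough sketch only, and not the C++ implementation mentioned above, one could define a mouth aspect ratio by analogy with the eye aspect ratio. The function name `mouth_aspect_ratio`, the choice of the inner-lip landmarks (indices 60 to 67, 0-based, in the 68-point model), and the threshold `MAR_THRESH` are all assumptions made for illustration.

```python
from math import dist  # Python 3.8+

# Hypothetical threshold; it would need tuning on real footage.
MAR_THRESH = 0.6

def mouth_aspect_ratio(inner_mouth):
    """inner_mouth: the 8 inner-lip points (landmarks 60..67, 0-based).

    Points 0 and 4 are the mouth corners; (1, 7), (2, 6) and (3, 5)
    are assumed to be vertically opposite upper/lower lip points.
    """
    v1 = dist(inner_mouth[1], inner_mouth[7])
    v2 = dist(inner_mouth[2], inner_mouth[6])
    v3 = dist(inner_mouth[3], inner_mouth[5])
    h = dist(inner_mouth[0], inner_mouth[4])
    return (v1 + v2 + v3) / (3.0 * h)

# Synthetic coordinates, made up for the example.
closed_mouth = [(0, 0), (1, 0.1), (2, 0.1), (3, 0.1),
                (4, 0), (3, -0.1), (2, -0.1), (1, -0.1)]
yawning_mouth = [(0, 0), (1, 1.5), (2, 2), (3, 1.5),
                 (4, 0), (3, -1.5), (2, -2), (1, -1.5)]

mar_closed = mouth_aspect_ratio(closed_mouth)
mar_yawn = mouth_aspect_ratio(yawning_mouth)
print(mar_closed < MAR_THRESH, mar_yawn > MAR_THRESH)
```

With real landmarks, the eight points would come from slicing the landmark array at indices 60 to 67, and a yawn would presumably also require the ratio to stay high for a number of frames, as with the eyes.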

Here is the full code for the eye-based detector:

    # USAGE
    # python detect_drowsiness.py --shape-predictor shape_predictor_68_face_landmarks.dat
    # python detect_drowsiness.py --shape-predictor shape_predictor_68_face_landmarks.dat --alarm alarm.wav

    # import the necessary packages
    from scipy.spatial import distance as dist
    from imutils.video import VideoStream
    from imutils import face_utils
    from threading import Thread
    import numpy as np
    import playsound
    import argparse
    import imutils
    import time
    import dlib
    import cv2

    def sound_alarm(path):
        # play an alarm sound
        playsound.playsound(path)

    def eye_aspect_ratio(eye):
        # compute the euclidean distances between the two sets of
        # vertical eye landmarks (x, y)-coordinates
        A = dist.euclidean(eye[1], eye[5])
        B = dist.euclidean(eye[2], eye[4])

        # compute the euclidean distance between the horizontal
        # eye landmark (x, y)-coordinates
        C = dist.euclidean(eye[0], eye[3])

        # compute the eye aspect ratio
        ear = (A + B) / (2.0 * C)

        # return the eye aspect ratio
        return ear

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-p", "--shape-predictor", required=True,
        help="path to facial landmark predictor")
    ap.add_argument("-a", "--alarm", type=str, default="",
        help="path alarm .WAV file")
    ap.add_argument("-w", "--webcam", type=int, default=0,
        help="index of webcam on system")
    args = vars(ap.parse_args())

    # define two constants, one for the eye aspect ratio to indicate
    # blink and then a second constant for the number of consecutive
    # frames the eye must be below the threshold for to set off the
    # alarm
    EYE_AR_THRESH = 0.3
    EYE_AR_CONSEC_FRAMES = 48

    # initialize the frame counter as well as a boolean used to
    # indicate if the alarm is going off
    COUNTER = 0
    ALARM_ON = False

    # initialize dlib's face detector (HOG-based) and then create
    # the facial landmark predictor
    print("[INFO] loading facial landmark predictor...")
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(args["shape_predictor"])

    # grab the indexes of the facial landmarks for the left and
    # right eye, respectively
    (lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
    (rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]

    # start the video stream thread
    print("[INFO] starting video stream thread...")
    vs = VideoStream(src=args["webcam"]).start()
    time.sleep(1.0)

    # loop over frames from the video stream
    while True:
        # grab the frame from the threaded video stream, resize
        # it, and convert it to grayscale
        frame = vs.read()
        frame = imutils.resize(frame, width=500)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # detect faces in the grayscale frame
        rects = detector(gray, 0)

        # loop over the face detections
        for rect in rects:
            # determine the facial landmarks for the face region, then
            # convert the facial landmark (x, y)-coordinates to a NumPy
            # array
            shape = predictor(gray, rect)
            shape = face_utils.shape_to_np(shape)

            # extract the left and right eye coordinates, then use the
            # coordinates to compute the eye aspect ratio for both eyes
            leftEye = shape[lStart:lEnd]
            rightEye = shape[rStart:rEnd]
            leftEAR = eye_aspect_ratio(leftEye)
            rightEAR = eye_aspect_ratio(rightEye)

            # average the eye aspect ratio together for both eyes
            ear = (leftEAR + rightEAR) / 2.0

            # compute the convex hull for the left and right eye, then
            # visualize each of the eyes
            leftEyeHull = cv2.convexHull(leftEye)
            rightEyeHull = cv2.convexHull(rightEye)
            cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)
            cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

            # check to see if the eye aspect ratio is below the blink
            # threshold, and if so, increment the blink frame counter
            if ear < EYE_AR_THRESH:
                COUNTER += 1

                # if the eyes were closed for a sufficient number of
                # frames, then sound the alarm
                if COUNTER >= EYE_AR_CONSEC_FRAMES:
                    # if the alarm is not on, turn it on
                    if not ALARM_ON:
                        ALARM_ON = True

                        # check to see if an alarm file was supplied,
                        # and if so, start a thread to have the alarm
                        # sound played in the background
                        if args["alarm"] != "":
                            t = Thread(target=sound_alarm,
                                args=(args["alarm"],))
                            t.daemon = True
                            t.start()

                    # draw an alarm on the frame
                    cv2.putText(frame, "DROWSINESS ALERT!", (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

            # otherwise, the eye aspect ratio is not below the blink
            # threshold, so reset the counter and alarm
            else:
                COUNTER = 0
                ALARM_ON = False

            # draw the computed eye aspect ratio on the frame to help
            # with debugging and setting the correct eye aspect ratio
            # thresholds and frame counters
            cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30),
                cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

        # show the frame
        cv2.imshow("Frame", frame)
        key = cv2.waitKey(1) & 0xFF

        # if the `q` key was pressed, break from the loop
        if key == ord("q"):
            break

    # do a bit of cleanup
    cv2.destroyAllWindows()
    vs.stop()

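Note: if a module is reported missing at run time, install it with pip install (for example, the cv2 module is provided by the opencv-python package).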

Result:

Code walkthrough:

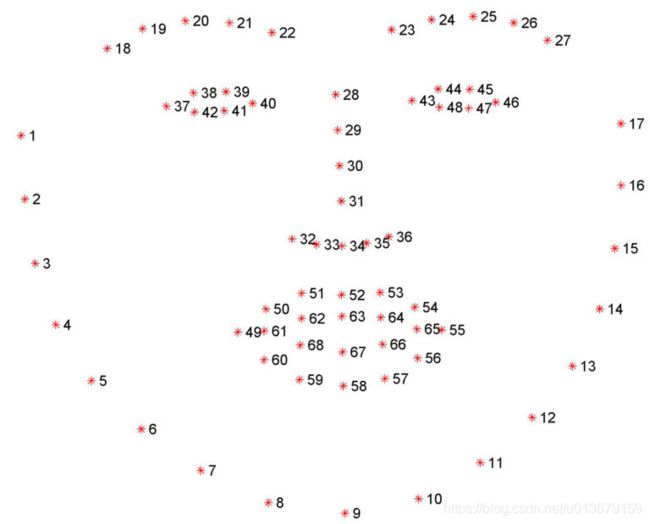

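First, look at how the 68 facial landmarks are distributed.

Using the labels in the figure above, we obtain the landmark coordinates of the left and right eyes: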

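    (lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
    (rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]

The index pairs in imutils are 0-based and end-exclusive: (lStart, lEnd) = (42, 48) and (rStart, rEnd) = (36, 42). In the 1-based numbering of the figure these correspond to points 43 to 48 and 37 to 42, where "left" and "right" are from the subject's point of view. Slicing the landmark array with these pairs yields the six coordinates of each eye.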
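To make the slicing concrete without a camera or a model file, here is a minimal sketch that uses a synthetic array in place of the output of `face_utils.shape_to_np`. The index pairs are hard-coded to the values imutils defines; the array contents are made up so that each row is easy to recognize.

```python
import numpy as np

# Index pairs as defined in imutils (0-based, end-exclusive).
RIGHT_EYE = (36, 42)
LEFT_EYE = (42, 48)

# Stand-in for face_utils.shape_to_np(shape): a (68, 2) array in which
# row i plays the role of the (x, y) coordinates of landmark i.
# Here row i is simply [2*i, 2*i + 1].
shape = np.arange(68 * 2).reshape(68, 2)

(lStart, lEnd) = LEFT_EYE
(rStart, rEnd) = RIGHT_EYE

# Each slice selects six consecutive rows, one per eye landmark.
leftEye = shape[lStart:lEnd]
rightEye = shape[rStart:rEnd]

print(leftEye.shape, rightEye.shape)
print(leftEye[0], rightEye[0])
```

Because the end index is exclusive, `shape[42:48]` contains rows 42 through 47, which is exactly six points.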

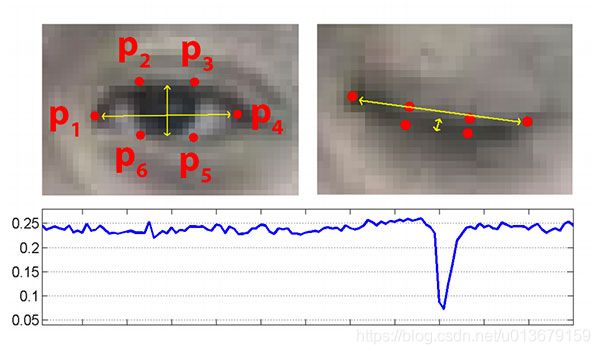

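Next, consider what a blink looks like. As the chart shows, the eye aspect ratio (EAR) over time stays roughly constant at about 0.25 while the eye is open, drops quickly to below 0.1, and then rises quickly back to about 0.25, which completes one blink.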
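The difference between a normal blink and a drowsy eye closure is therefore mainly how long the ratio stays low. A small sketch of that idea follows; the helper `closed_runs`, the threshold of 0.2, and the EAR values in the trace are made up for illustration.

```python
def closed_runs(ear_series, thresh):
    """Return the lengths of consecutive runs of frames with EAR below thresh."""
    runs = []
    current = 0
    for ear in ear_series:
        if ear < thresh:
            current += 1
        elif current:
            # the eye has reopened, so the current run is finished
            runs.append(current)
            current = 0
    if current:
        # the series ended while the eye was still closed
        runs.append(current)
    return runs

# Synthetic trace: open, a 3-frame blink, open, then a 10-frame closure.
series = [0.25] * 5 + [0.08] * 3 + [0.25] * 5 + [0.07] * 10
runs = closed_runs(series, thresh=0.2)
print(runs)  # [3, 10]
```

A short run corresponds to an ordinary blink, while a long run is the pattern a drowsiness alarm should react to.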

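Using the eye coordinates obtained earlier, we compute the eye aspect ratio as follows. Computing the distance between two points in Cartesian coordinates needs no explanation.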

    def eye_aspect_ratio(eye):
        # compute the euclidean distances between the two sets of
        # vertical eye landmarks (x, y)-coordinates
        A = dist.euclidean(eye[1], eye[5])
        B = dist.euclidean(eye[2], eye[4])

        # compute the euclidean distance between the horizontal
        # eye landmark (x, y)-coordinates
        C = dist.euclidean(eye[0], eye[3])

        # compute the eye aspect ratio
        ear = (A + B) / (2.0 * C)

        # return the eye aspect ratio
        return ear

The aspect ratios of the two eyes are then averaged.
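As a quick sanity check of the formula, the following sketch evaluates it on hand-made coordinates for an open and a nearly closed eye. It uses `math.dist` from the standard library instead of scipy so that it runs on its own; the coordinates are invented for the example.

```python
from math import dist  # Python 3.8+

def eye_aspect_ratio(eye):
    # eye: six (x, y) points; 0 and 3 are the corners,
    # (1, 5) and (2, 4) are the vertical pairs
    A = dist(eye[1], eye[5])
    B = dist(eye[2], eye[4])
    C = dist(eye[0], eye[3])
    return (A + B) / (2.0 * C)

# Synthetic eyes, both 3 units wide.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

ear_open = eye_aspect_ratio(open_eye)      # (2 + 2) / (2 * 3)
ear_closed = eye_aspect_ratio(closed_eye)  # (0.2 + 0.2) / (2 * 3)

# Averaging, as the script does for the left and right eye.
ear_avg = (ear_open + ear_closed) / 2.0
print(round(ear_open, 3), round(ear_closed, 3), round(ear_avg, 3))
```

Because the ratio divides by the eye width, it stays roughly the same when the face moves closer to or farther from the camera.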

    # loop over the face detections
    for rect in rects:
        # determine the facial landmarks for the face region, then
        # convert the facial landmark (x, y)-coordinates to a NumPy
        # array
        shape = predictor(gray, rect)
        shape = face_utils.shape_to_np(shape)

        # extract the left and right eye coordinates, then use the
        # coordinates to compute the eye aspect ratio for both eyes
        leftEye = shape[lStart:lEnd]
        rightEye = shape[rStart:rEnd]
        leftEAR = eye_aspect_ratio(leftEye)
        rightEAR = eye_aspect_ratio(rightEye)

        # average the eye aspect ratio together for both eyes
        ear = (leftEAR + rightEAR) / 2.0

Next comes the decision logic based on the thresholds we set. If ear stays below the threshold for 48 or more consecutive frames, the alarm is raised. As soon as ear is not below the threshold in any single frame, the counter is reset to zero, which filters out ordinary blinks. The logic is simple and clear.

    # check to see if the eye aspect ratio is below the blink
    # threshold, and if so, increment the blink frame counter
    if ear < EYE_AR_THRESH:
        COUNTER += 1

        # if the eyes were closed for a sufficient number of
        # frames, then sound the alarm
        if COUNTER >= EYE_AR_CONSEC_FRAMES:
            # if the alarm is not on, turn it on
            if not ALARM_ON:
                ALARM_ON = True

                # check to see if an alarm file was supplied,
                # and if so, start a thread to have the alarm
                # sound played in the background
                if args["alarm"] != "":
                    t = Thread(target=sound_alarm,
                        args=(args["alarm"],))
                    t.daemon = True
                    t.start()

            # draw an alarm on the frame
            cv2.putText(frame, "DROWSINESS ALERT!", (10, 30),
                cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

    # otherwise, the eye aspect ratio is not below the blink
    # threshold, so reset the counter and alarm
    else:
        COUNTER = 0
        ALARM_ON = False

    # draw the computed eye aspect ratio on the frame to help
    # with debugging and setting the correct eye aspect ratio
    # thresholds and frame counters
    cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30),
        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
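The counting logic can be tried out without a camera by feeding it a list of EAR values. The sketch below wraps the same counter-and-reset idea in a small class; the class name `ClosedEyeCounter` and the sample trace are hypothetical, and the frame limit is lowered to 3 only to keep the demonstration short.

```python
class ClosedEyeCounter:
    """Frame-based closed-eye counter mirroring the script's COUNTER logic."""

    def __init__(self, thresh=0.3, consec_frames=48):
        self.thresh = thresh
        self.consec_frames = consec_frames
        self.counter = 0

    def update(self, ear):
        """Feed one per-frame EAR value; return True while the alarm should be on."""
        if ear < self.thresh:
            self.counter += 1
        else:
            # a single frame at or above the threshold resets the count
            self.counter = 0
        return self.counter >= self.consec_frames

# Demo with a small frame limit so the effect is visible in a short trace.
monitor = ClosedEyeCounter(thresh=0.3, consec_frames=3)
trace = [0.35, 0.1, 0.1, 0.35, 0.1, 0.1, 0.1, 0.1, 0.35]
alarms = [monitor.update(e) for e in trace]
print(alarms)
# [False, False, False, False, False, False, True, True, False]
```

Note that a frame count translates into a duration only through the frame rate: assuming a camera that delivers 30 frames per second, 48 frames is 48 / 30 = 1.6 seconds, and a slower processing loop stretches that time accordingly.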

Reference: https://www.pyimagesearch.com/2017/05/08/drowsiness-detection-opencv/