Netty Study Notes

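Table of Contents

- The Five I/O Models

- Netty Components in Detail

- Echo Service

- EventLoop and EventLoopGroup

- Bootstrap

- Channel

- ChannelHandler, ChannelPipeline, ChannelHandlerContext

- ChannelFuture

- Codecs

- Codec Types

- Encoders

- Decoders

- What Are the Packet Splitting and Sticking Problems (Half-Packet Reads and Writes)?

- Solutions to Half-Packet Reads and Writes

- LineBasedFrameDecoder

- DelimiterBasedFrameDecoder

- FixedLengthFrameDecoder

- LengthFieldBasedFrameDecoder

- ByteBuf

- Creating a ByteBuf

- Test Code for One Million Connections on a Single Machine

- Server Performance Tuning (Supporting More Connections)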

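The Five I/O Models

See the separate note on Java's five I/O models.

Netty Components in Detail

Echo Service

This section implements a simple echo service: the server sends every message it receives back to the client.

Server: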

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

public class EchoServer {

private int port;

public EchoServer(int port){

this.port = port;

}

/**

* Startup sequence

*/

public void run() throws InterruptedException {

// Configure the server thread groups: bossGroup accepts connections, workGroup handles their I/O

EventLoopGroup bossGroup = new NioEventLoopGroup();

EventLoopGroup workGroup = new NioEventLoopGroup();

try{

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.group(bossGroup, workGroup)

.channel(NioServerSocketChannel.class)

.childHandler(new ChannelInitializer<SocketChannel>() {

protected void initChannel(SocketChannel ch) throws Exception {

ch.pipeline().addLast(new EchoServerHandler());

}

});

System.out.println("Echo server starting");

// Bind the port and wait synchronously until the bind succeeds

ChannelFuture channelFuture = serverBootstrap.bind(port).sync();

// Block until the server's listening channel is closed

channelFuture.channel().closeFuture().sync();

}finally {

// Shut down gracefully and release the thread pools

workGroup.shutdownGracefully();

bossGroup.shutdownGracefully();

}

}

public static void main(String [] args) throws InterruptedException {

int port = 8080;

if(args.length > 0){

port = Integer.parseInt(args[0]);

}

new EchoServer(port).run();

}

}

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.util.CharsetUtil;

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

ByteBuf data = (ByteBuf) msg;

System.out.println("Server received: " + data.toString(CharsetUtil.UTF_8));

ctx.writeAndFlush(data);

}

@Override

public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {

System.out.println("EchoServerHandler channelReadComplete");

}

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

cause.printStackTrace();

ctx.close();

}

}

Client:

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import java.net.InetSocketAddress;

public class EchoClient {

private String host;

private int port;

public EchoClient(String host, int port){

this.host = host;

this.port = port;

}

public void start() throws InterruptedException {

EventLoopGroup group = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.group(group)

.channel(NioSocketChannel.class)

.remoteAddress(new InetSocketAddress(host, port))

.handler(new ChannelInitializer<SocketChannel>() {

protected void initChannel(SocketChannel ch) throws Exception {

ch.pipeline().addLast(new EchoClientHandler());

}

});

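// Connect to the server. connect() is asynchronous; sync() then blocks until the connection is established

ChannelFuture channelFuture = bootstrap.connect().sync();

// Block until the client channel is closed

channelFuture.channel().closeFuture().sync();

}finally {

// Shut down gracefully and release the NIO thread group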

group.shutdownGracefully();

}

}

public static void main(String []args) throws InterruptedException {

new EchoClient("127.0.0.1",8080).start();

}

}

import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.SimpleChannelInboundHandler;

import io.netty.util.CharsetUtil;

public class EchoClientHandler extends SimpleChannelInboundHandler<ByteBuf> {

protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception {

System.out.println("Client received: " + msg.toString(CharsetUtil.UTF_8));

}

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

System.out.println("Active");

ctx.writeAndFlush(Unpooled.copiedBuffer("小滴课堂 xdclass.net",CharsetUtil.UTF_8));

}

@Override

public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {

System.out.println("EchoClientHandler channelReadComplete");

}

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

cause.printStackTrace();

ctx.close();

}

}

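EventLoop and EventLoopGroup

EventLoop and EventLoopGroup relate to each other roughly like a thread and a thread pool. With the NIO transport, each EventLoop wraps a Selector (see the note on Java NIO multiplexing with Selector), so a single EventLoop can serve many connections. An EventLoopGroup contains multiple EventExecutors. EventExecutor extends EventExecutorGroup, and EventExecutorGroup extends ScheduledExecutorService from the JDK's thread-pool API, so an EventLoopGroup is essentially a thread pool wrapped by Netty. Unless the io.netty.eventLoopThreads system property says otherwise, an EventLoopGroup defaults to 2 * coreNum threads, where coreNum is the number of available processor cores. The relevant source: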

private static final int DEFAULT_EVENT_LOOP_THREADS;

static {

DEFAULT_EVENT_LOOP_THREADS = Math.max(1, SystemPropertyUtil.getInt(

"io.netty.eventLoopThreads", NettyRuntime.availableProcessors() * 2));

if (logger.isDebugEnabled()) {

logger.debug("-Dio.netty.eventLoopThreads: {}", DEFAULT_EVENT_LOOP_THREADS);

}

}
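The sizing rule in that static block can be tried out without Netty on the classpath. The following is only a plain-JDK sketch of the same arithmetic: the class and method names are made up for illustration, and `Integer.getInteger` stands in for Netty's `SystemPropertyUtil.getInt`.

```java
// Plain-JDK sketch of the sizing rule shown above; this is not Netty's own class.
final class EventLoopThreadsSketch {

    /** Returns max(1, configured value), falling back to 2 * cores when nothing is configured. */
    static int defaultThreads(Integer configured, int availableProcessors) {
        int fallback = availableProcessors * 2;
        return Math.max(1, configured != null ? configured : fallback);
    }

    public static void main(String[] args) {
        // Integer.getInteger returns null when the property is unset or not a valid integer.
        Integer configured = Integer.getInteger("io.netty.eventLoopThreads");
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("default event loop threads = " + defaultThreads(configured, cores));
    }
}
```

Running it with `-Dio.netty.eventLoopThreads=3` should report 3, while a value of 0 is raised to 1 by the `Math.max` guard.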

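Bootstrap

Bootstrap (client) and ServerBootstrap (server) are the startup helper classes. Their main methods are:

- group: sets the thread-group model. The Reactor threading models correspond to EventLoopGroup configurations as follows:

1) single-threaded

2) multi-threaded

3) master-worker (boss and worker groups)

For details, see the note "Netty NIO source code walkthrough".

- channel: sets the channel type, for example NioServerSocketChannel or OioServerSocketChannel

- option: applies to the channel the bootstrap itself creates (for ServerBootstrap, the listening server channel) and sets TCP parameters such as:

ChannelOption.SO_BACKLOG: the maximum length of the queue that holds connections which have completed the three-way handshake and are waiting to be accepted.

Background on Linux TCP connection queues:

syn queue: the half-open connection queue, the target of SYN flood attacks; sized by tcp_max_syn_backlog

accept queue: the queue of fully established connections; sized by net.core.somaxconn

The system's somaxconn value must be large enough: if backlog is greater than somaxconn, the kernel uses somaxconn.

ChannelOption.TCP_NODELAY: controls Nagle's algorithm. The socket-level default is false (Nagle enabled), which accumulates small writes into larger segments to reduce the number of sends. For latency-sensitive traffic that must go out as soon as data is available, set it to true to disable Nagle's algorithm. Netty 4.1 enables TCP_NODELAY by default on non-Android platforms, so set it explicitly if you depend on either behavior. On a server this option belongs to the accepted connections, so set it through childOption.

Set an option with serverBootstrap.option(ChannelOption.SO_BACKLOG, 1024).

- childOption: applies to connections after they have been accepted

Set it with serverBootstrap.childOption(ChannelOption.WRITE_SPIN_COUNT, 100).

- childHandler: processes the data on each accepted channel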
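The interaction between SO_BACKLOG and net.core.somaxconn comes down to taking the smaller of the two values. The sketch below only illustrates that rule; the class and helper names are hypothetical, and the fallback of 128 is a placeholder for machines where the /proc file cannot be read.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

// Illustrative helper names; only the "smaller value wins" rule comes from the text above.
final class BacklogSketch {

    /** The accept queue is capped by somaxconn, so the smaller value wins. */
    static int effectiveBacklog(int requestedBacklog, int somaxconn) {
        return Math.min(requestedBacklog, somaxconn);
    }

    /** Reads net.core.somaxconn on Linux; returns the fallback when the file is missing or unreadable. */
    static int readSomaxconn(int fallback) {
        try {
            byte[] raw = Files.readAllBytes(Paths.get("/proc/sys/net/core/somaxconn"));
            return Integer.parseInt(new String(raw).trim());
        } catch (IOException | NumberFormatException e) {
            return fallback;
        }
    }

    public static void main(String[] args) {
        int somaxconn = readSomaxconn(128); // 128 is only a placeholder fallback
        System.out.println("somaxconn = " + somaxconn);
        System.out.println("effective backlog for 1024 = " + effectiveBacklog(1024, somaxconn));
    }
}
```

If the reported effective backlog is lower than the value passed to SO_BACKLOG, raising somaxconn is the setting to look at first.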

Channel

A Channel is the connection established between a client and a server.

ChannelHandler, ChannelPipeline, ChannelHandlerContext

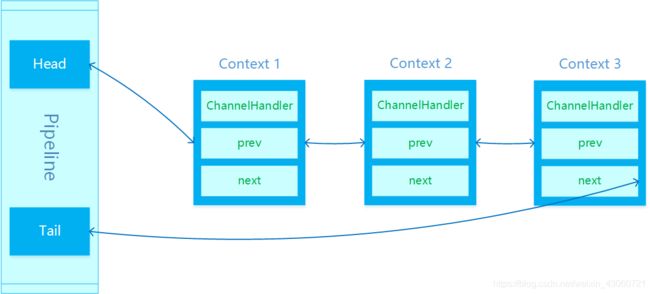

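A handler processes the messages that a channel receives once it is connected. One channel can have several handlers, and these handlers chained together form the pipeline. The relationship resembles an assembly line and its workers, with the channel acting as the point where goods enter the line. ChannelHandlerContext is the bridge between a ChannelHandler and the ChannelPipeline; each ChannelHandlerContext is a node in a doubly linked list.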

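ChannelHandler execution order

The rules for execution order are:

1. An InboundHandler decides whether the next InboundHandler runs by firing the event onward (the fire methods). If any InboundHandler does not fire the event, the rest of the pipeline is cut off.

2. InboundHandlers run in the order in which they were added to the pipeline.

3. OutboundHandlers run in the reverse of the order in which they were added to the pipeline.

4. The "effective" InboundHandler is the last InboundHandler that fire events can reach.

5. For every OutboundHandler to run when a response is written with ctx.writeAndFlush, all OutboundHandlers must be placed before the last effective InboundHandler.

6. The recommended practice is to add all OutboundHandlers with addFirst and then add all InboundHandlers with addLast.

7. OutboundHandlers are chained together through the write method.

8. If any OutboundHandler in the chain does not call write, the message is never sent.

9. ctx.writeAndFlush starts at the current ChannelHandler and runs the OutboundHandlers in front of it, in reverse order.

10. OutboundHandlers located after the ChannelHandler that calls ctx.writeAndFlush are not executed.

11. ctx.channel().writeAndFlush starts at the last OutboundHandler in the pipeline and runs the others in reverse order toward the head. An OutboundHandler is therefore executed even when it is the last handler in the pipeline, behind the InboundHandlers.

12. Never call ctx.channel().writeAndFlush inside an OutboundHandler's write method: it causes an infinite loop.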
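The ordering rules can be made concrete with a toy model. The sketch below is plain Java and not Netty code: a pipeline is just a list of handler names, names starting with "in" count as inbound and names starting with "out" as outbound (a convention of this sketch only), and every handler is assumed to pass the event on.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model of pipeline traversal order; it does not use or reproduce Netty's classes.
final class PipelineOrderSketch {

    static boolean isInbound(String handler)  { return handler.startsWith("in"); }
    static boolean isOutbound(String handler) { return handler.startsWith("out"); }

    /** Inbound events travel head -> tail and visit inbound handlers only. */
    static List<String> fireRead(List<String> pipeline) {
        List<String> visited = new ArrayList<>();
        for (String handler : pipeline) {
            if (isInbound(handler)) {
                visited.add(handler);
            }
        }
        return visited;
    }

    /** Models ctx.write(): start just before the handler at index "from" and walk toward the head. */
    static List<String> ctxWrite(List<String> pipeline, int from) {
        List<String> visited = new ArrayList<>();
        for (int i = from - 1; i >= 0; i--) {
            if (isOutbound(pipeline.get(i))) {
                visited.add(pipeline.get(i));
            }
        }
        return visited;
    }

    /** Models ctx.channel().write(): start from the tail, so every outbound handler is visited. */
    static List<String> channelWrite(List<String> pipeline) {
        return ctxWrite(pipeline, pipeline.size());
    }

    public static void main(String[] args) {
        List<String> pipeline = Arrays.asList("in1", "out1", "in2", "out2");
        System.out.println(fireRead(pipeline));     // [in1, in2]
        System.out.println(ctxWrite(pipeline, 2));  // [out1]  (write issued from in2; out2 is skipped)
        System.out.println(channelWrite(pipeline)); // [out2, out1]
    }
}
```

In this model a write issued from in1 (index 0) reaches no outbound handler at all, which is the situation rules 5 and 10 warn about.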

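ChannelHandler class hierarchy

Custom handlers usually extend ChannelInboundHandlerAdapter and override the methods they need, rather than implementing the ChannelInboundHandler interface directly. This is the adapter pattern. Inbound events are propagated by fire calls, and every default implementation in ChannelInboundHandlerAdapter simply calls the corresponding fire method: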

package io.netty.channel;

import io.netty.channel.ChannelHandlerMask.Skip;

public class ChannelInboundHandlerAdapter extends ChannelHandlerAdapter implements ChannelInboundHandler {

@Skip

@Override

public void channelRegistered(ChannelHandlerContext ctx) throws Exception {

ctx.fireChannelRegistered();

}

...

}

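Likewise, custom outbound handlers usually extend ChannelOutboundHandlerAdapter.

ChannelHandler concurrency:

- If the handler is added as shown below, one EchoServerHandler instance is shared by every channel, so the class must carry the @ChannelHandler.Sharable annotation. With the annotation the handler is effectively a singleton and must be thread-safe. Without it, Netty throws an exception when the second client connects.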

serverBootstrap.childHandler(new EchoServerHandler());

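- If the handler is added as shown below, every channel that connects gets its own new EchoServerHandler, so EchoServerHandler does not need to be thread-safe. The source shows that ChannelInitializer itself carries the @ChannelHandler.Sharable annotation, so the ChannelInitializer is the shared singleton and Netty takes care of its thread safety.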

serverBootstrap.childHandler(new ChannelInitializer<SocketChannel>() {

protected void initChannel(SocketChannel ch) throws Exception {

ch.pipeline().addLast(new EchoServerHandler());

}

});

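Summary: only the first approach requires the @ChannelHandler.Sharable annotation. A class annotated with @ChannelHandler.Sharable must be thread-safe and stateless, because it is shared by multiple channels.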
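What "thread-safe" means for a shared instance can be shown without Netty. The following plain-Java sketch uses hypothetical names: one object is shared by several threads, much as one @Sharable handler is shared by channels running on different event loops, and its only state is an AtomicInteger.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Plain-Java stand-in for a shared handler; the class and method names are illustrative only.
final class SharedCounterSketch {

    // AtomicInteger keeps concurrent increments from being lost.
    private final AtomicInteger active = new AtomicInteger();

    void onActive() { active.incrementAndGet(); }

    int active() { return active.get(); }

    /** Starts several threads that all call the same shared instance and returns the final count. */
    static int run(int threads, int callsPerThread) {
        SharedCounterSketch shared = new SharedCounterSketch(); // one instance for all threads
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < callsPerThread; i++) {
                    shared.onActive();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return shared.active();
    }

    public static void main(String[] args) {
        System.out.println(run(4, 10_000)); // 40000
    }
}
```

With a plain int field instead of AtomicInteger, concurrent increments could be lost, which is why mutable per-connection state does not belong in a shared handler.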

ChannelFuture

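All I/O operations in Netty are asynchronous, which means every I/O call returns immediately. A ChannelFuture provides information about the result or status of the I/O operation, much like Future in the JDK. ChannelFuture is in fact an extension of that Future interface: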

ChannelFuture->io.netty.util.concurrent.Future<Void>->java.util.concurrent.Future<V>
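The two ways of consuming such a future, registering a callback or blocking for the result, can be sketched with the JDK alone. CompletableFuture is used here purely as an analogy: `whenComplete` plays the role of `ChannelFuture.addListener`, and `join` plays the role of `sync`. The class name is hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;

// JDK-only analogy for the listener style and the blocking style; no Netty types are involved.
final class FutureSketch {

    static String demo() {
        CompletableFuture<String> pending = new CompletableFuture<>(); // stands in for an I/O operation in flight
        AtomicReference<String> listenerSaw = new AtomicReference<>("not yet");

        // Non-blocking style: register a callback that runs once the operation finishes.
        pending.whenComplete((result, error) -> listenerSaw.set(error == null ? result : "failed"));

        String before = listenerSaw.get(); // the call above returned immediately; nothing has completed
        pending.complete("connected");     // the "I/O" finishes and the callback runs

        // Blocking style: wait for the result in the calling thread.
        String blockingResult = pending.join();
        return before + " -> " + listenerSaw.get() + " / " + blockingResult;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // not yet -> connected / connected
    }
}
```

In Netty code the listener style is generally preferable inside handlers, because blocking an event-loop thread stalls every channel assigned to it.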

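Codecs

What they solve:

Codecs handle the data protocol: they convert between the raw bytes on the wire and the message objects the application works with.

Codec Types

- Encoder: turns outgoing messages into bytes

- Decoder: turns incoming bytes into messages

- Codec: combines an encoder and a decoder

Encoders

An Encoder is a ChannelOutboundHandler that converts message objects into bytes.

- MessageToByteEncoder

Converts a message into bytes. When write is called, the encoder first checks whether it supports the type of the outgoing message; if it does not, the message is passed through unchanged.

- MessageToMessageEncoder

Encodes one kind of message into another (for example, POJO to POJO).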

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelHandlerContext;

import io.netty.handler.codec.MessageToByteEncoder;

public class CustomEncoder extends MessageToByteEncoder<Integer> {

protected void encode(ChannelHandlerContext ctx, Integer msg, ByteBuf out) throws Exception {

out.writeInt(msg); // write the int value into out

}

}

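Decoders

A Decoder is a ChannelInboundHandler whose main job is to convert bytes into message objects.

Abstract decoders:

- ByteToMessageDecoder

Converts bytes into messages. The implementation must check that the buffer holds enough bytes before reading.

- ReplayingDecoder

Extends ByteToMessageDecoder and removes the need to check whether enough bytes are available. It is slightly slower than ByteToMessageDecoder, and not every ByteBuf operation is supported.

- MessageToMessageDecoder

Decodes one kind of message into another.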

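import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelHandlerContext;

import io.netty.handler.codec.ByteToMessageDecoder;

import java.util.List;

// A custom decoder that decodes int values

public class CustomDecoder extends ByteToMessageDecoder {

@Override

protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {

// An int takes 4 bytes, so check that at least 4 bytes are readable

if(in.readableBytes()>=4){

// Read one int from in and add it to the list of decoded messages

out.add(in.readInt());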

}

}

}

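What Are the Packet Splitting and Sticking Problems (Half-Packet Reads and Writes)?

These problems arise because TCP optimizes for transmission efficiency:

- TCP packet splitting: one complete message may be split by TCP into several packets for sending

- TCP packet sticking: several small messages are combined into one larger packet, so messages that the client sent separately arrive at the server stuck together in a single read

Both the sender and the receiver can cause this:

- Sender side: TCP uses Nagle's algorithm by default

- Receiver side: TCP places received data in a buffer, and the application reads from that buffer

UDP does not have this problem because it is a datagram protocol that preserves message boundaries. TCP is a byte stream with no message boundaries, so the application has to mark them itself, for example with a terminator. Restoring those boundaries is the core job of the frame decoders.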

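Solutions to Half-Packet Reads and Writes

Both problems are solved by recovering the message boundaries. Netty provides the following four decoders for this:

- LineBasedFrameDecoder: uses a line break as the end-of-frame marker

- DelimiterBasedFrameDecoder: uses a specified delimiter as the end-of-frame marker

- FixedLengthFrameDecoder: splits the stream into frames of a fixed length

- LengthFieldBasedFrameDecoder: message = header + body; a general-purpose decoder based on a length field

LineBasedFrameDecoder

A decoder that treats a line break as the end-of-frame marker.

Server side (1024 is the maximum frame length):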

ch.pipeline().addLast(new LineBasedFrameDecoder(1024));

Client side:

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

ByteBuf mes = null;

// Append the platform line separator to the message

byte [] req = ("xdclass.net"+System.getProperty("line.separator")).getBytes();

// Send the message several times in a row

for(int i=0; i< 5; i++){

mes = Unpooled.buffer(req.length);

mes.writeBytes(req);

ctx.writeAndFlush(mes);

}

}
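To see why a line-based decoder fixes both splitting and sticking, it helps to model the receiving side by hand. The sketch below is plain Java, not Netty's LineBasedFrameDecoder: the class name is hypothetical, there is no maximum-length check, and the chunks passed to `feed` simulate arbitrary TCP read boundaries.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Minimal model of line-based framing; it omits the maximum-length check a real decoder needs.
final class LineFramerSketch {

    // Bytes received so far that do not yet form a complete line.
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    /** Feeds one chunk as it might arrive from the socket and returns the lines completed by it. */
    List<String> feed(byte[] chunk) {
        List<String> lines = new ArrayList<>();
        for (byte b : chunk) {
            if (b == '\n') {
                byte[] line = pending.toByteArray();
                pending.reset();
                int length = line.length;
                if (length > 0 && line[length - 1] == '\r') {
                    length--; // accept "\r\n" as well as "\n"
                }
                lines.add(new String(line, 0, length, StandardCharsets.UTF_8));
            } else {
                pending.write(b);
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        LineFramerSketch framer = new LineFramerSketch();
        // Two messages arrive in three chunks whose boundaries do not match the messages.
        String[] chunks = { "xdclass", ".net\nxdcl", "ass.net\r\n" };
        for (String chunk : chunks) {
            System.out.println(framer.feed(chunk.getBytes(StandardCharsets.UTF_8)));
        }
    }
}
```

The first chunk yields no line, and each of the next two chunks completes one "xdclass.net" line, regardless of where the chunk boundaries fall.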

DelimiterBasedFrameDecoder

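A decoder that treats a custom delimiter as the end-of-frame marker.

Server side:

// Use "&_" as the custom delimiter

ByteBuf delimiter = Unpooled.copiedBuffer("&_".getBytes());

// The second argument (stripDelimiter) controls whether the delimiter is removed from each decoded frame: true removes it, false keeps it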

ch.pipeline().addLast(new DelimiterBasedFrameDecoder(1024, true, delimiter));

Client side:

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

String message = "Netty is a NIO client server framework which enables quick&_" +

"and easy development of network applications&_ " +

"such as protocol servers and clients.&_" +

" It greatly simplifies and streamlines&_" +

"network programming such as TCP and UDP socket server.&_";

ByteBuf mes = Unpooled.buffer(message.getBytes().length);

mes.writeBytes(message.getBytes());

ctx.writeAndFlush(mes);

}

FixedLengthFrameDecoder

A fixed-length decoder: every frame is exactly the configured number of bytes.

Server side:

ch.pipeline().addLast(new FixedLengthFrameDecoder(10));

Client side:

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

String message = "Netty is a NIO client server framework which enables quick";

ByteBuf mes = Unpooled.buffer(message.getBytes().length);

mes.writeBytes(message.getBytes());

ctx.writeAndFlush(mes);

}
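With a fixed frame length, the number of frames the server sees follows from simple division, and any remainder stays buffered until more bytes arrive. The sketch below works this out for the message above and a frame length of 10; the class and method names are hypothetical and nothing here calls Netty.

```java
import java.nio.charset.StandardCharsets;

// Arithmetic sketch for fixed-length framing; it does not invoke FixedLengthFrameDecoder.
final class FixedLengthSketch {

    static final String MESSAGE = "Netty is a NIO client server framework which enables quick";

    /** Returns {number of complete frames, number of leftover bytes that stay buffered}. */
    static int[] split(int totalBytes, int frameLength) {
        return new int[] { totalBytes / frameLength, totalBytes % frameLength };
    }

    public static void main(String[] args) {
        int total = MESSAGE.getBytes(StandardCharsets.US_ASCII).length; // 58 bytes
        int[] result = split(total, 10);
        System.out.println(result[0] + " frames, " + result[1] + " bytes still buffered");
        // prints: 5 frames, 8 bytes still buffered
    }
}
```

The last 8 bytes ("es quick") are therefore not delivered to the next handler until at least 2 more bytes are received, which is worth remembering when a message length is not a multiple of the frame length.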

LengthFieldBasedFrameDecoder

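A decoder for the length + body message layout.

Server side:

maxFrameLength: the maximum length of a frame

lengthFieldOffset: the offset of the length field, that is, the number of bytes to skip from the start of the frame before the length field begins

lengthFieldLength: the number of bytes occupied by the length field itself

lengthAdjustment: a correction applied when the value of the length field does not equal the number of bytes that follow it, for example because it also counts the length field itself. The correction is: frame length - length field value - lengthFieldOffset - lengthFieldLength

initialBytesToStrip: the number of leading bytes to discard from the decoded frame, that is, the bytes in front of the payload. For example, with a 4-byte length field at the start of the frame, the value is 4

// Maximum frame length is 1024; the length field is the first field, so its offset is 0;

// the length field occupies 1 byte; its value covers only the payload, so the adjustment is 0;

// the 1-byte length field is discarded, so 1 byte is stripped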

ch.pipeline().addLast(new LengthFieldBasedFrameDecoder(1024,0,1,0,1));

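Client side:

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

String body = "hello world";

byte [] req = body.getBytes();

// One byte for the length field plus the body

ByteBuf mes = Unpooled.buffer(1 + req.length);

// A single byte holds the body length in bytes, so the body can be at most 255 bytes

mes.writeByte(req.length);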

mes.writeBytes(req);

ctx.writeAndFlush(mes);

}
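Both the lengthAdjustment formula and the 1-byte length + body layout can be checked with a small stand-alone model. The sketch below uses java.nio.ByteBuffer instead of Netty's ByteBuf, and all class and method names are hypothetical; it illustrates the idea and is not the decoder's implementation.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Stand-alone model of length-prefixed framing with a 1-byte length field.
final class LengthFieldSketch {

    /** lengthAdjustment = frame length - length field value - lengthFieldOffset - lengthFieldLength. */
    static int lengthAdjustment(int frameLength, int lengthFieldValue,
                                int lengthFieldOffset, int lengthFieldLength) {
        return frameLength - lengthFieldValue - lengthFieldOffset - lengthFieldLength;
    }

    /** Encodes the body as [1-byte length][body], the layout used in the example above. */
    static byte[] encode(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        if (bytes.length > 255) {
            throw new IllegalArgumentException("body too long for a 1-byte length field");
        }
        return ByteBuffer.allocate(1 + bytes.length).put((byte) bytes.length).put(bytes).array();
    }

    /** Returns the body if a complete frame is available, or null when more bytes are needed. */
    static String decode(ByteBuffer in) {
        if (in.remaining() < 1) {
            return null;
        }
        int length = in.get(in.position()) & 0xFF; // peek at the unsigned length byte
        if (in.remaining() < 1 + length) {
            return null;                           // half packet: consume nothing and wait
        }
        in.get();                                  // drop the length byte (initialBytesToStrip = 1)
        byte[] body = new byte[length];
        in.get(body);
        return new String(body, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] frame = encode("hello world");
        System.out.println(decode(ByteBuffer.wrap(frame)));       // hello world
        System.out.println(decode(ByteBuffer.wrap(frame, 0, 5))); // null (only part of the frame arrived)
        System.out.println(lengthAdjustment(12, 11, 0, 1));       // 0: the length counts the body only
        System.out.println(lengthAdjustment(14, 14, 0, 2));       // -2: the length counts the whole frame
    }
}
```

The last line corresponds to a protocol whose 2-byte length field stores the size of the entire 14-byte frame, in which case the decoder would be configured with a lengthAdjustment of -2.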

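ByteBuf

ByteBuf is Netty's data container (a container of bytes).

Comparison with the JDK's native ByteBuffer:

- JDK ByteBuffer

Reading and writing share a single position index, so flip() must be called whenever you switch from writing to reading

Capacity is fixed: growing means allocating a new buffer and copying, and over-allocating wastes memory

- Netty ByteBuf

Reading and writing use separate indexes (readerIndex and writerIndex), so no flip() is needed

Capacity grows automatically as needed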
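The flip() requirement of the JDK ByteBuffer is easiest to see by printing position and limit before and after the call. This short sketch uses only java.nio; the class name is hypothetical.

```java
import java.nio.ByteBuffer;

// Shows how a JDK ByteBuffer's single position index forces flip() between writing and reading.
final class FlipSketch {

    static String demo() {
        ByteBuffer buf = ByteBuffer.allocate(8);
        buf.put((byte) 1).put((byte) 2).put((byte) 3);           // writing: position = 3, limit = 8
        String afterWrite = buf.position() + "/" + buf.limit();

        buf.flip();                                              // reading: position = 0, limit = 3
        String afterFlip = buf.position() + "/" + buf.limit();

        byte first = buf.get();                                  // reads the value 1
        return afterWrite + " " + afterFlip + " " + first;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // 3/8 0/3 1
    }
}
```

Without the flip() call, get() would read from position 3 and return an unwritten zero byte, which is the mistake ByteBuf's separate reader and writer indexes are designed to avoid.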

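Creating a ByteBuf

In terms of memory reuse, a ByteBuf is either pooled or unpooled. Call ByteBufAllocator byteBufAllocator = ctx.alloc(); to obtain the ByteBufAllocator and then create ByteBufs from it. ByteBufAllocator has two implementations, one pooled (PooledByteBufAllocator) and one unpooled (UnpooledByteBufAllocator). The default is pooled on non-Android platforms and unpooled on Android, as the following source shows: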

static {

String allocType = SystemPropertyUtil.get(

"io.netty.allocator.type", PlatformDependent.isAndroid() ? "unpooled" : "pooled"); // non-Android platforms default to "pooled"

allocType = allocType.toLowerCase(Locale.US).trim();

ByteBufAllocator alloc;

if ("unpooled".equals(allocType)) {

alloc = UnpooledByteBufAllocator.DEFAULT;

logger.debug("-Dio.netty.allocator.type: {}", allocType);

} else if ("pooled".equals(allocType)) {

alloc = PooledByteBufAllocator.DEFAULT;

logger.debug("-Dio.netty.allocator.type: {}", allocType);

} else { // unknown values fall back to the pooled allocator

alloc = PooledByteBufAllocator.DEFAULT;

logger.debug("-Dio.netty.allocator.type: pooled (unknown: {})", allocType);

}

DEFAULT_ALLOCATOR = alloc;

...

}

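By memory reuse, buffers are either pooled or unpooled:

- Pooled: PooledByteBufAllocator (the default in recent Netty 4.x versions)

Improves performance and minimizes memory fragmentation

- Unpooled: UnpooledByteBufAllocator

Returns a new instance on every call

By the kind of memory used, buffers are heap buffers, direct buffers, or composite buffers:

- Heap buffer:

Advantage: stored on the JVM heap, so allocation and release are fast

Drawback: for socket I/O the data is first copied into a direct buffer (off-heap memory)

- Direct buffer:

Advantage: allocated in off-heap memory, so it does not take up heap space

Drawback: allocation and release are more expensive than for heap buffers

- Composite buffer:

Combines several ByteBufs, possibly of different kinds, into one; it is only a view over its components

Creating buffers: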

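// Allocate pooled buffers; ctx.alloc() returns the pooled allocator by default

ByteBufAllocator byteBufAllocator = ctx.alloc();

// heapBuffer() always returns a heap buffer; buffer() may return a heap or a direct buffer depending on the platform

ByteBuf pooledHeapBuffer = byteBufAllocator.heapBuffer();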

ByteBuf pooledDirectBuffer = byteBufAllocator.directBuffer();

CompositeByteBuf pooledCompositeBuffer = byteBufAllocator.compositeBuffer();

pooledCompositeBuffer.addComponents(pooledHeapBuffer, pooledDirectBuffer);

// Allocate unpooled buffers

ByteBuf unPooledHeapBuffer = Unpooled.buffer();

ByteBuf unPooledDirectBuffer = Unpooled.directBuffer();

CompositeByteBuf unPooledCompositeBuffer = Unpooled.compositeBuffer();

unPooledCompositeBuffer.addComponents(unPooledHeapBuffer, unPooledDirectBuffer);

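Rule of thumb: use direct buffers for heavy I/O reads and writes, and heap buffers for encoding and decoding business messages.

Test Code for One Million Connections on a Single Machine

Server: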

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelOption;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioServerSocketChannel;

public class NettyServer {

public static void main(String [] args){

new NettyServer().run(Config.BEGIN_PORT, Config.END_PORT);

}

public void run(int beginPort, int endPort){

System.out.println("Server starting");

// Configure the server thread groups

EventLoopGroup bossGroup = new NioEventLoopGroup();

EventLoopGroup workGroup = new NioEventLoopGroup();

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.group(bossGroup, workGroup)

.channel(NioServerSocketChannel.class)

.childOption(ChannelOption.SO_REUSEADDR, true); // allow fast address reuse, because a closed TCP connection can hold its port for a while

serverBootstrap.childHandler(new TcpCountHandler());

for(; beginPort < endPort; beginPort++){

int port = beginPort;

serverBootstrap.bind(port).addListener((ChannelFutureListener) future->{

// Report success only if the bind actually succeeded

if (future.isSuccess()) {

System.out.println("Server bound port = "+port);

}

});

}

}

}

import io.netty.channel.ChannelHandler;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import java.util.concurrent.Executors;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicInteger;

@ChannelHandler.Sharable

public class TcpCountHandler extends ChannelInboundHandlerAdapter {

private AtomicInteger connectCounter = new AtomicInteger(); // current number of connections

private AtomicInteger disConnectCounter = new AtomicInteger(); // number of connections that have closed

public TcpCountHandler(){

Executors.newSingleThreadScheduledExecutor().scheduleAtFixedRate(()->{

System.out.println("Current connections = "+ connectCounter.get());

System.out.println("Disconnected connections = "+ disConnectCounter.get());

}, 0, 3, TimeUnit.SECONDS);

}

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

connectCounter.incrementAndGet();

}

@Override

public void channelInactive(ChannelHandlerContext ctx) throws Exception {

connectCounter.decrementAndGet();

disConnectCounter.incrementAndGet();

}

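// Keep exceptionCaught commented out, otherwise the flood of stack traces makes the output hard to follow; uncomment it when you need to inspect exceptions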

// @Override

// public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

// System.out.println("TcpCountHandler exceptionCaught");

// cause.printStackTrace();

// }

}

Client:

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.ChannelOption;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

public class NettyClient {

private static final String SERVER = "127.0.0.1";

public static void main(String [] args){

new NettyClient().run(Config.BEGIN_PORT, Config.END_PORT);

}

public void run(int beginPort, int endPort){

System.out.println("Client starting");

EventLoopGroup group = new NioEventLoopGroup();

Bootstrap bootstrap = new Bootstrap();

bootstrap.group(group)

.channel(NioSocketChannel.class)

.option(ChannelOption.SO_REUSEADDR, true) // same reason as on the server side

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

}

});

int index = 0 ;

int finalPort ;

while (true){

finalPort = beginPort + index;

try {

bootstrap.connect(SERVER, finalPort).addListener((ChannelFutureListener)future ->{

if(!future.isSuccess()){

System.out.println("Failed to create connection");

}

}).get();

} catch (Exception e) {

//e.printStackTrace();

}

++index;

if(index == (endPort - beginPort)){

index = 0 ;

}

}

}

}

public class Config {

public static final int BEGIN_PORT = 8000; // first port (inclusive)

public static final int END_PORT = 8050; // last port (exclusive)

}

Result:

On my own machine (8 GB RAM, 4-core CPU) the simulation topped out at just under 17,000 connections. If run on a server ...

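Server starting

Current connections = 0

Disconnected connections = 0

Server bound port = 8000

... port binding messages omitted ...

Server bound port = 8049

Current connections = 0

Disconnected connections = 0

Current connections = 2010

Disconnected connections = 0

Current connections = 4538

Disconnected connections = 0

Current connections = 6245

Disconnected connections = 0

Current connections = 7881

Disconnected connections = 0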

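Server Performance Tuning (Supporting More Connections)

On a Unix server the file-handle limits are set at two levels, global and per-process. The global value is the overall ceiling, and the per-process value restricts further within it. Change them as follows:

- Global file-handle limit (the maximum number of files all processes together may open; the default differs between systems, and it can be changed temporarily with echo)

To view the current value:

cat /proc/sys/fs/file-max

To change it permanently, edit /etc/sysctl.conf and then run sysctl -p to apply the change:

vim /etc/sysctl.conf

Add fs.file-max = 1000000

- Per-process file-handle limit (the maximum number of files a single process may open)

ulimit -n shows the maximum number of file descriptors one process may open; the default differs between systems

As root, edit /etc/security/limits.conf:

vim /etc/security/limits.conf

Add the following lines: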

root soft nofile 1000000

root hard nofile 1000000

* soft nofile 1000000

* hard nofile 1000000

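* matches all users except root, which is why root has its own entries. The change applies to new login sessions, so log in again or reboot.

Note: the JVM heap settings also need to be adjusted at startup, for example:

-Xms5g -Xmx5g -XX:NewSize=3g -XX:MaxNewSize=3g

To inspect connections, use the following commands (replace <port> with the port number):

lsof -i:<port>

netstat -tunlp | grep <port>

For details, see the article "How to find out which process is using a port on Linux (lsof -i and netstat)".

Recommended server hardware:

CPU: 16 cores

Memory: 32 GB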