Computer Vision: Eye Disease Image Recognition (iChallenge-PM Dataset)

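Foreword: I am 「虐猫人薛定谔i」, someone born in the 2000s who is not content with the status quo and who has dreams and ambitions.

This blog records and shares what I have learned. Follow it to get updates as soon as they are posted.

Stay true to your original aspiration, and you will see it through.

❤❤❤❤❤❤❤❤❤❤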

Contents

- Data

- Code

- Results

- Summary

Data

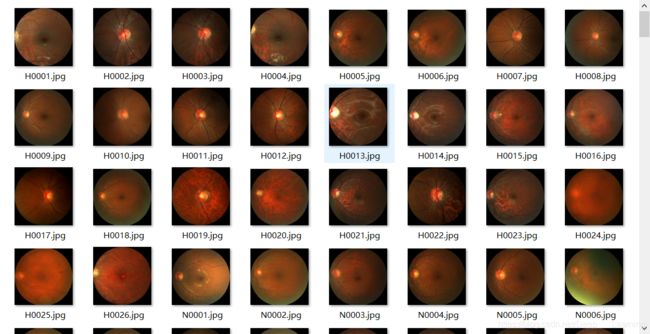

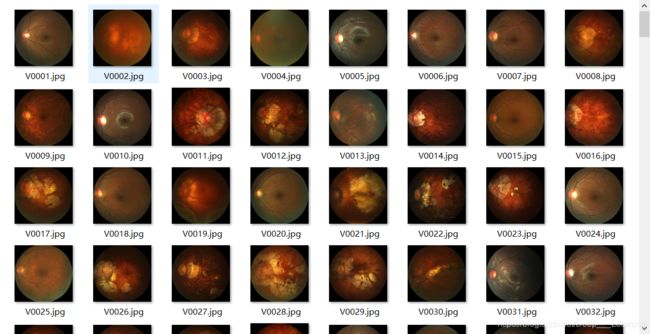

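The iChallenge competition, co-organized by Baidu Brain and the Zhongshan Ophthalmic Center of Sun Yat-sen University, provides a series of medical datasets. One of them, iChallenge-PM, covers pathologic myopia (PM).

The PALM-Training400 folder holds the training images.

The PALM-Validation400 folder holds the validation images.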

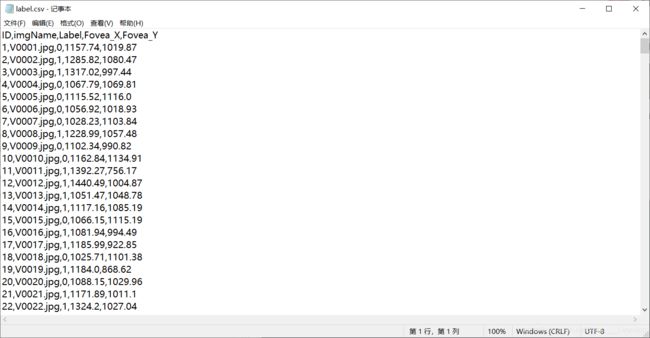

The label.csv file (preprocessed: the original dataset ships an Excel file, which I converted to CSV)
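The two folders get their labels in different ways in the loaders of the code section: training labels come from the first letter of the file name (`P` maps to 1, `H` and `N` map to 0), while validation labels are read from label.csv (file name in column 1, label in column 2, header line skipped). Here is a minimal sketch of both rules. The helper names and the sample CSV content, including its column titles and file names, are hypothetical and only serve as an illustration.

```python
import csv
import io


def label_from_filename(name):
    """Training label from the first letter: 'P' -> 1, 'H' or 'N' -> 0."""
    first = name[0]
    if first == 'P':
        return 1
    if first in ('H', 'N'):
        return 0
    raise ValueError('Unexpected file name: ' + name)


def labels_from_csv(text):
    """Parse label.csv content: skip the header, use column 1 as the
    file name and column 2 as the integer label."""
    rows = list(csv.reader(io.StringIO(text)))
    return [(row[1], int(row[2])) for row in rows[1:] if row]


# Hypothetical sample content, NOT copied from the real label.csv
SAMPLE_CSV = "id,imgName,label\n1,V0001.jpg,0\n2,V0002.jpg,1\n"

print(label_from_filename('P0001.jpg'))
print(labels_from_csv(SAMPLE_CSV))
```

Using the `csv` module instead of `split(',')` is a design choice of this sketch; it behaves the same on simple rows like the ones above.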

Code

LeNet network structure

# Define the LeNet network structure.
# fluid, Conv2D, Pool2D and Linear are imported in the complete code below.
class LeNet(fluid.dygraph.Layer):
    def __init__(self, name_scope, num_classes=1):
        super(LeNet, self).__init__(name_scope)
        # Shape comments assume a 3 x 224 x 224 input
        self.conv1 = Conv2D(num_channels=3,
                            num_filters=6,
                            filter_size=5,
                            act='sigmoid')  # -> 6 x 220 x 220
        self.pool1 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 6 x 110 x 110
        self.conv2 = Conv2D(num_channels=6,
                            num_filters=16,
                            filter_size=5,
                            act='sigmoid')  # -> 16 x 106 x 106
        self.pool2 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 16 x 53 x 53
        self.conv3 = Conv2D(num_channels=16,
                            num_filters=120,
                            filter_size=4,
                            act='sigmoid')  # -> 120 x 50 x 50
        # 120 * 50 * 50 = 300000 flattened features
        self.fc1 = Linear(input_dim=300000, output_dim=64, act='sigmoid')
        # One output logit for binary classification
        self.fc2 = Linear(input_dim=64, output_dim=num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        # Flatten to [batch_size, features] before the fully connected layers
        x = fluid.layers.reshape(x, [x.shape[0], -1])
        x = self.fc1(x)
        x = self.fc2(x)
        return x
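The `input_dim=300000` of `fc1` is not arbitrary: it follows from pushing a 224 x 224 image (the size produced by `transform_img` in the complete code) through the layers above. A small sketch of that arithmetic, assuming no padding, stride 1 for the convolutions and floor rounding for the pooling; `conv_out` and `lenet_flat_features` are hypothetical helper names, not Paddle APIs.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling window (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet_flat_features(side=224):
    side = conv_out(side, 5)            # conv1: 224 -> 220
    side = conv_out(side, 2, stride=2)  # pool1: 220 -> 110
    side = conv_out(side, 5)            # conv2: 110 -> 106
    side = conv_out(side, 2, stride=2)  # pool2: 106 -> 53
    side = conv_out(side, 4)            # conv3: 53 -> 50
    return 120 * side * side            # 120 output channels from conv3


print(lenet_flat_features())  # 300000
```

If the input size changes, `fc1` has to change with it, which is why this value looks so different from the classic 32 x 32 LeNet.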

AlexNet network structure

# Define the AlexNet network structure.
# fluid, Conv2D, Pool2D and Linear are imported in the complete code below.
class AlexNet(fluid.dygraph.Layer):
    def __init__(self, name_scope, num_classes=1):
        super(AlexNet, self).__init__(name_scope)
        # Shape comments assume a 3 x 224 x 224 input
        self.conv1 = Conv2D(num_channels=3,
                            num_filters=96,
                            filter_size=11,
                            stride=4,
                            padding=5,
                            act='relu')  # -> 96 x 56 x 56
        self.pool1 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 96 x 28 x 28
        self.conv2 = Conv2D(num_channels=96,
                            num_filters=256,
                            filter_size=5,
                            stride=1,
                            padding=2,
                            act='relu')  # -> 256 x 28 x 28
        self.pool2 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 256 x 14 x 14
        self.conv3 = Conv2D(num_channels=256,
                            num_filters=384,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 384 x 14 x 14
        self.conv4 = Conv2D(num_channels=384,
                            num_filters=384,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 384 x 14 x 14
        self.conv5 = Conv2D(num_channels=384,
                            num_filters=256,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 256 x 14 x 14
        self.pool5 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 256 x 7 x 7
        # 256 * 7 * 7 = 12544 flattened features
        self.fc1 = Linear(input_dim=12544, output_dim=4096, act='relu')
        self.drop_ratio1 = 0.5
        self.fc2 = Linear(input_dim=4096, output_dim=4096, act='relu')
        self.drop_ratio2 = 0.5
        # One output logit for binary classification
        self.fc3 = Linear(input_dim=4096, output_dim=num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool5(x)
        # Flatten to [batch_size, features] before the fully connected layers
        x = fluid.layers.reshape(x, [x.shape[0], -1])
        x = self.fc1(x)
        # Dropout after each of the first two fully connected layers
        x = fluid.layers.dropout(x, self.drop_ratio1)
        x = self.fc2(x)
        x = fluid.layers.dropout(x, self.drop_ratio2)
        x = self.fc3(x)
        return x
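The same kind of bookkeeping explains `input_dim=12544` for the first fully connected layer of AlexNet. This is only a sketch of the arithmetic for a 224 x 224 input, using the kernel sizes, strides and paddings from the class above; the helper names are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling window (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def alexnet_flat_features(side=224):
    side = conv_out(side, 11, stride=4, padding=5)  # conv1: 224 -> 56
    side = conv_out(side, 2, stride=2)              # pool1: 56 -> 28
    side = conv_out(side, 5, padding=2)             # conv2: 28 -> 28
    side = conv_out(side, 2, stride=2)              # pool2: 28 -> 14
    for _ in range(3):                              # conv3, conv4, conv5 keep 14
        side = conv_out(side, 3, padding=1)
    side = conv_out(side, 2, stride=2)              # pool5: 14 -> 7
    return 256 * side * side                        # 256 output channels from conv5


print(alexnet_flat_features())  # 12544
```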

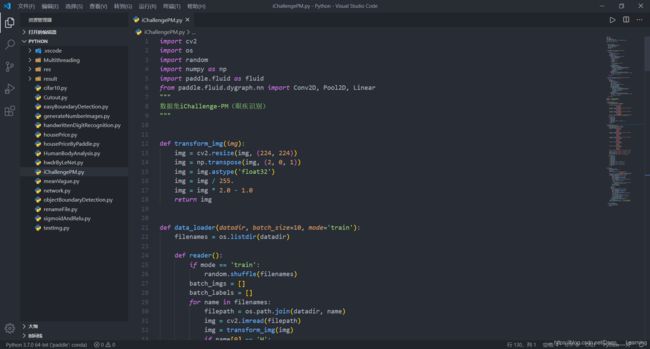

Complete code

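"""
iChallenge-PM dataset (eye disease recognition)
"""
import cv2
import os
import random
import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph.nn import Conv2D, Pool2D, Linear


def transform_img(img):
    # Resize to the 224 x 224 input size expected by the networks
    img = cv2.resize(img, (224, 224))
    # Convert from [H, W, C] to [C, H, W]
    img = np.transpose(img, (2, 0, 1))
    img = img.astype('float32')
    # Scale pixel values from [0, 255] to [-1.0, 1.0]
    img = img / 255.
    img = img * 2.0 - 1.0
    return img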

def data_loader(datadir, batch_size=10, mode='train'):
    filenames = os.listdir(datadir)

    def reader():
        # Shuffle the file order for every pass over the training set
        if mode == 'train':
            random.shuffle(filenames)
        batch_imgs = []
        batch_labels = []
        for name in filenames:
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            # The label comes from the first letter of the file name:
            # 'H' and 'N' are negative samples (0), 'P' is positive (1)
            if name[0] == 'H':
                label = 0
            elif name[0] == 'N':
                label = 0
            elif name[0] == 'P':
                label = 1
            else:
                raise ValueError('Unexpected file name: ' + name)
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # Emit one full batch
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype(
                    'float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Emit the remaining samples as a final, smaller batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(
                -1, 1)
            yield imgs_array, labels_array

    return reader

def valid_data_loader(datadir, csvfile, batch_size=10, mode='valid'):
    # Read all lines up front; the first line is the header
    with open(csvfile) as f:
        filelists = f.readlines()

    def reader():
        batch_imgs = []
        batch_labels = []
        for line in filelists[1:]:
            line = line.strip().split(',')
            # Column 1 is the file name, column 2 is the label
            name = line[1]
            label = int(line[2])
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # Emit one full batch
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype(
                    'float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Emit the remaining samples as a final, smaller batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(
                -1, 1)
            yield imgs_array, labels_array

    return reader

DATADIR = './res/PALM-Training400'

DATADIR2 = './res/PALM-Validation400'

CSCVFILE = './res/label.csv'

# Define the LeNet network structure
class LeNet(fluid.dygraph.Layer):
    def __init__(self, name_scope, num_classes=1):
        super(LeNet, self).__init__(name_scope)
        # Shape comments assume a 3 x 224 x 224 input
        self.conv1 = Conv2D(num_channels=3,
                            num_filters=6,
                            filter_size=5,
                            act='sigmoid')  # -> 6 x 220 x 220
        self.pool1 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 6 x 110 x 110
        self.conv2 = Conv2D(num_channels=6,
                            num_filters=16,
                            filter_size=5,
                            act='sigmoid')  # -> 16 x 106 x 106
        self.pool2 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 16 x 53 x 53
        self.conv3 = Conv2D(num_channels=16,
                            num_filters=120,
                            filter_size=4,
                            act='sigmoid')  # -> 120 x 50 x 50
        # 120 * 50 * 50 = 300000 flattened features
        self.fc1 = Linear(input_dim=300000, output_dim=64, act='sigmoid')
        # One output logit for binary classification
        self.fc2 = Linear(input_dim=64, output_dim=num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        # Flatten to [batch_size, features] before the fully connected layers
        x = fluid.layers.reshape(x, [x.shape[0], -1])
        x = self.fc1(x)
        x = self.fc2(x)
        return x

# Define the AlexNet network structure
class AlexNet(fluid.dygraph.Layer):
    def __init__(self, name_scope, num_classes=1):
        super(AlexNet, self).__init__(name_scope)
        # Shape comments assume a 3 x 224 x 224 input
        self.conv1 = Conv2D(num_channels=3,
                            num_filters=96,
                            filter_size=11,
                            stride=4,
                            padding=5,
                            act='relu')  # -> 96 x 56 x 56
        self.pool1 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 96 x 28 x 28
        self.conv2 = Conv2D(num_channels=96,
                            num_filters=256,
                            filter_size=5,
                            stride=1,
                            padding=2,
                            act='relu')  # -> 256 x 28 x 28
        self.pool2 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 256 x 14 x 14
        self.conv3 = Conv2D(num_channels=256,
                            num_filters=384,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 384 x 14 x 14
        self.conv4 = Conv2D(num_channels=384,
                            num_filters=384,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 384 x 14 x 14
        self.conv5 = Conv2D(num_channels=384,
                            num_filters=256,
                            filter_size=3,
                            stride=1,
                            padding=1,
                            act='relu')  # -> 256 x 14 x 14
        self.pool5 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')  # -> 256 x 7 x 7
        # 256 * 7 * 7 = 12544 flattened features
        self.fc1 = Linear(input_dim=12544, output_dim=4096, act='relu')
        self.drop_ratio1 = 0.5
        self.fc2 = Linear(input_dim=4096, output_dim=4096, act='relu')
        self.drop_ratio2 = 0.5
        # One output logit for binary classification
        self.fc3 = Linear(input_dim=4096, output_dim=num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool5(x)
        # Flatten to [batch_size, features] before the fully connected layers
        x = fluid.layers.reshape(x, [x.shape[0], -1])
        x = self.fc1(x)
        # Dropout after each of the first two fully connected layers
        x = fluid.layers.dropout(x, self.drop_ratio1)
        x = self.fc2(x)
        x = fluid.layers.dropout(x, self.drop_ratio2)
        x = self.fc3(x)
        return x

# Define the training procedure
def train(model):
    with fluid.dygraph.guard():
        print("---- start training ----")
        model.train()
        epoch_num = 5
        opt = fluid.optimizer.Momentum(learning_rate=0.001,
                                       momentum=0.9,
                                       parameter_list=model.parameters())
        train_loader = data_loader(DATADIR, batch_size=10, mode='train')
        valid_loader = valid_data_loader(DATADIR2, CSCVFILE)
        for epoch in range(epoch_num):
            for batch_id, data in enumerate(train_loader()):
                x_data, y_data = data
                img = fluid.dygraph.to_variable(x_data)
                label = fluid.dygraph.to_variable(y_data)
                # Forward pass: the model outputs one logit per image
                logits = model(img)
                loss = fluid.layers.sigmoid_cross_entropy_with_logits(
                    logits, label)
                avg_loss = fluid.layers.mean(loss)
                if batch_id % 10 == 0:
                    print("epoch: {}, batch_id: {}, loss is: {}".format(
                        epoch, batch_id, avg_loss.numpy()))
                # Backward pass and parameter update
                avg_loss.backward()
                opt.minimize(avg_loss)
                model.clear_gradients()

            # Evaluate on the validation set after every epoch
            model.eval()
            accuracies = []
            losses = []
            for batch_id, data in enumerate(valid_loader()):
                x_data, y_data = data
                img = fluid.dygraph.to_variable(x_data)
                label = fluid.dygraph.to_variable(y_data)
                logits = model(img)
                # Probability of the positive class
                pred = fluid.layers.sigmoid(logits)
                loss = fluid.layers.sigmoid_cross_entropy_with_logits(
                    logits, label)
                # Build [P(class 0), P(class 1)] so accuracy() can compare
                # the most likely class with the integer label
                pred2 = pred * (-1.0) + 1.0
                pred = fluid.layers.concat([pred2, pred], axis=1)
                acc = fluid.layers.accuracy(
                    pred, fluid.layers.cast(label, dtype='int64'))
                accuracies.append(acc.numpy())
                losses.append(loss.numpy())
            print("[validation accuracy/loss: {}/{}]".format(
                np.mean(accuracies), np.mean(losses)))
            model.train()

        # Save the model parameters and the optimizer state
        fluid.save_dygraph(model.state_dict(), './result/iChallengePM')
        fluid.save_dygraph(opt.state_dict(), './result/iChallengePM')

if __name__ == "__main__":
    with fluid.dygraph.guard():
        # model = LeNet("LeNet")
        model = AlexNet("AlexNet")
        train(model)
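Two steps in `train` are easy to skim past: the loss is computed directly from logits, and accuracy is computed by turning the single sigmoid output into two class probabilities. Below is a plain-Python sketch of both ideas, without Paddle. As far as I know, the stable form `max(x, 0) - x * z + log(1 + exp(-|x|))` is mathematically equivalent to the usual binary cross-entropy; the function names here are hypothetical and are not Paddle APIs.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def stable_sigmoid_bce(logit, label):
    """Binary cross-entropy from a logit, written so that exp() never
    receives a large positive argument."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def two_class_accuracy(logits, labels):
    """Build [P(class 0), P(class 1)] per sample, pick the larger one,
    and compare it with the integer label."""
    correct = 0
    for logit, label in zip(logits, labels):
        p1 = sigmoid(logit)
        probs = [1.0 - p1, p1]
        predicted = probs.index(max(probs))
        correct += int(predicted == int(label))
    return correct / len(labels)


print(stable_sigmoid_bce(2.0, 1.0))
print(two_class_accuracy([2.0, -1.0, 0.5, -3.0], [1, 0, 0, 0]))  # 0.75
```

In the second call the third sample has a positive logit but label 0, so three of the four predictions are correct.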

Results

LeNet training results

---- start training ----

epoch: 0, batch_id: 0, loss is: [0.63386595]

epoch: 0, batch_id: 10, loss is: [0.69357824]

epoch: 0, batch_id: 20, loss is: [0.8262283]

epoch: 0, batch_id: 30, loss is: [0.70314413]

[validation accuracy/loss: 0.4725000262260437/0.6942468881607056]

epoch: 1, batch_id: 0, loss is: [0.69328773]

epoch: 1, batch_id: 10, loss is: [0.6822144]

epoch: 1, batch_id: 20, loss is: [0.6788982]

epoch: 1, batch_id: 30, loss is: [0.679153]

[validation accuracy/loss: 0.5275000333786011/0.6917198300361633]

epoch: 2, batch_id: 0, loss is: [0.66813517]

epoch: 2, batch_id: 10, loss is: [0.6962514]

epoch: 2, batch_id: 20, loss is: [0.6779094]

epoch: 2, batch_id: 30, loss is: [0.72074294]

[validation accuracy/loss: 0.5275000333786011/0.6916546821594238]

epoch: 3, batch_id: 0, loss is: [0.68267685]

epoch: 3, batch_id: 10, loss is: [0.69080985]

epoch: 3, batch_id: 20, loss is: [0.69051874]

epoch: 3, batch_id: 30, loss is: [0.7030691]

[validation accuracy/loss: 0.5275000333786011/0.6916455626487732]

epoch: 4, batch_id: 0, loss is: [0.70706123]

epoch: 4, batch_id: 10, loss is: [0.7118827]

epoch: 4, batch_id: 20, loss is: [0.72799003]

epoch: 4, batch_id: 30, loss is: [0.7123946]

[validation accuracy/loss: 0.5275000333786011/0.6917262077331543]
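A quick sanity check on these numbers: a binary classifier that outputs a probability of 0.5 for every image has a cross-entropy loss of ln 2, about 0.6931. The LeNet validation loss above stays at roughly 0.6917, which is consistent with the network predicting almost the same value for every image. The sketch below only reproduces that reference value; `binary_cross_entropy` is a hypothetical helper, not part of the training code.

```python
import math


def binary_cross_entropy(p, label):
    """Cross-entropy of predicted probability p against a 0/1 label."""
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


chance_loss = binary_cross_entropy(0.5, 1)
print(round(chance_loss, 4))  # 0.6931
```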

AlexNet training results

---- start training ----

epoch: 0, batch_id: 0, loss is: [0.71437234]

epoch: 0, batch_id: 10, loss is: [0.66942436]

epoch: 0, batch_id: 20, loss is: [0.57675594]

epoch: 0, batch_id: 30, loss is: [0.5789426]

[validation accuracy/loss: 0.9100000262260437/0.5783206224441528]

epoch: 1, batch_id: 0, loss is: [0.59212446]

epoch: 1, batch_id: 10, loss is: [0.5880574]

epoch: 1, batch_id: 20, loss is: [0.4672559]

epoch: 1, batch_id: 30, loss is: [0.5209719]

[validation accuracy/loss: 0.9325000643730164/0.29782530665397644]

epoch: 2, batch_id: 0, loss is: [0.59152406]

epoch: 2, batch_id: 10, loss is: [0.2935087]

epoch: 2, batch_id: 20, loss is: [0.3406319]

epoch: 2, batch_id: 30, loss is: [0.21688469]

[validation accuracy/loss: 0.9375/0.21106351912021637]

epoch: 3, batch_id: 0, loss is: [0.09478948]

epoch: 3, batch_id: 10, loss is: [0.39397192]

epoch: 3, batch_id: 20, loss is: [0.34152466]

epoch: 3, batch_id: 30, loss is: [0.24933481]

[validation accuracy/loss: 0.9399999380111694/0.2010333091020584]

epoch: 4, batch_id: 0, loss is: [0.43089372]

epoch: 4, batch_id: 10, loss is: [0.06475895]

epoch: 4, batch_id: 20, loss is: [0.33812967]

epoch: 4, batch_id: 30, loss is: [0.12363017]

[validation accuracy/loss: 0.8949999809265137/0.282415509223938]

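Summary

Compared with LeNet, AlexNet has more convolutional layers, uses ReLU as its activation function, and applies dropout to help prevent overfitting.

The results show that on the iChallenge-PM eye disease screening dataset, LeNet's loss barely decreases and the model does not converge: its validation accuracy stays at about 0.53 and its validation loss at about 0.69. AlexNet's loss decreases effectively, and its validation accuracy is above 90% in four of the five epochs, peaking at about 0.94 (it ends at about 0.895 after the final epoch).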
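Since the summary mentions dropout, here is a toy plain-Python version of the idea. It assumes the `downgrade_in_infer` behaviour, which to my understanding is the default of `fluid.layers.dropout`: during training each value is zeroed with probability `drop_prob` and kept unchanged otherwise, and at inference every value is scaled by `1 - drop_prob`. The `dropout` function below is a hypothetical stand-in for illustration, not the Paddle implementation.

```python
import random


def dropout(values, drop_prob, is_test, rng=random):
    """Toy dropout on a list of floats (assumed 'downgrade_in_infer' style)."""
    if is_test:
        # Inference: keep every value, scaled by the keep probability
        return [v * (1.0 - drop_prob) for v in values]
    # Training: zero each value independently with probability drop_prob
    return [0.0 if rng.random() < drop_prob else v for v in values]


activations = [1.0, 2.0, 3.0, 4.0]
print(dropout(activations, 0.5, is_test=False, rng=random.Random(0)))
print(dropout(activations, 0.5, is_test=True))  # [0.5, 1.0, 1.5, 2.0]
```

Because a different random subset of units is silenced on every training step, the network cannot rely too heavily on any single unit, which is the usual intuition for why dropout reduces overfitting.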

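Writing a blog is not easy for a beginner like me. My knowledge is limited and this post was written in a hurry, so mistakes and omissions are inevitable. Corrections and criticism from readers and experts are welcome.

If you repost this article, please credit the author and link to the original. Thank you very much.

Name: 虐猫人薛定谔i

Blog: https://blog.csdn.net/Deep___Learning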