2020 cs231n 作业1笔记 svm

目录

Multiclass Support Vector Machine loss(SVM loss)

求loss:

求梯度:

svm_loss_naive

svm_loss_vectorized

SGD

tune hyperparameters

Multiclass Support Vector Machine loss(SVM loss)

求loss:

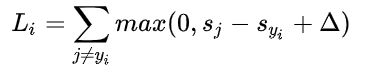

SVM的损失函数想要SVM在正确分类上的得分始终比不正确分类上的得分高出一个边界值![]() 。

。

如果正确分类得分比不正确分类得分高出![]() ,则损失为0,否则为:正确分类得分 - 不正确分类得分 +

,则损失为0,否则为:正确分类得分 - 不正确分类得分 + ![]()

损失函数的公式:

计算例子:

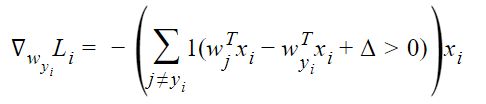

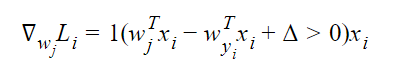

求梯度:

参考:Computing the gradient analytically with Calculus

直观理解根据损失函数的公式两边求导,

svm_loss_naive

首先完成cs231n/classifiers/linear_svm.py的svm_loss_naive(W, X, y, reg)函数,使用L2 Regularization

def svm_loss_naive(W, X, y, reg):

"""

Structured SVM loss function, naive implementation (with loops).

Inputs have dimension D, there are C classes, and we operate on minibatches

of N examples.

Inputs:

- W: A numpy array of shape (D, C) containing weights.

- X: A numpy array of shape (N, D) containing a minibatch of data.

- y: A numpy array of shape (N,) containing training labels; y[i] = c means

that X[i] has label c, where 0 <= c < C.

- reg: (float) regularization strength

Returns a tuple of:

- loss as single float

- gradient with respect to weights W; an array of same shape as W

"""

dW = np.zeros(W.shape) # initialize the gradient as zero

# compute the loss and the gradient

num_classes = W.shape[1]

num_train = X.shape[0]

loss = 0.0

for i in range(num_train):

scores = X[i].dot(W)

correct_class_score = scores[y[i]]

for j in range(num_classes):

if j == y[i]:

continue

margin = scores[j] - correct_class_score + 1 # note delta = 1

if margin > 0:

loss += margin

dW[:,j] += X[i,:]

dW[:,y[i]] -= X[i,:]

# Right now the loss is a sum over all training examples, but we want it

# to be an average instead so we divide by num_train.

loss /= num_train

dW /= num_train

# Add regularization to the loss.

loss += reg * np.sum(W * W)

dW += 2 * reg * W

#############################################################################

# TODO: #

# Compute the gradient of the loss function and store it dW. #

# Rather than first computing the loss and then computing the derivative, #

# it may be simpler to compute the derivative at the same time that the #

# loss is being computed. As a result you may need to modify some of the #

# code above to compute the gradient. #

#############################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return loss, dWsvm_loss_vectorized

然后是向量化实现:svm_loss_vectorized(W, X, y, reg)

def svm_loss_vectorized(W, X, y, reg):

"""

Structured SVM loss function, vectorized implementation.

Inputs and outputs are the same as svm_loss_naive.

"""

loss = 0.0

dW = np.zeros(W.shape) # initialize the gradient as zero

#############################################################################

# TODO: #

# Implement a vectorized version of the structured SVM loss, storing the #

# result in loss. #

#############################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

num_train = X.shape[0]

score = np.dot(X, W)

margin = score - score[range(num_train),y].reshape(-1,1) + 1

margin[range(num_train),y] = 0

margin = (margin > 0) * margin

loss = np.sum(margin) / num_train

loss += reg * np.sum(W * W)

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

#############################################################################

# TODO: #

# Implement a vectorized version of the gradient for the structured SVM #

# loss, storing the result in dW. #

# #

# Hint: Instead of computing the gradient from scratch, it may be easier #

# to reuse some of the intermediate values that you used to compute the #

# loss. #

#############################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

flag = (margin > 0).astype(int)

#统计每行margin值>0的个数n,用于下一步dW[:,y[i]] -= n * X[i,:]

flag[range(num_train), y] = - np.sum(flag, axis = 1)

#dW[:,j] += X[i,:]

#dW[:,y[i]] -= n * X[i,:]

#两步一起做

dW = np.dot(X.T, flag) / num_train

dW += 2 * reg * W

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return loss, dW

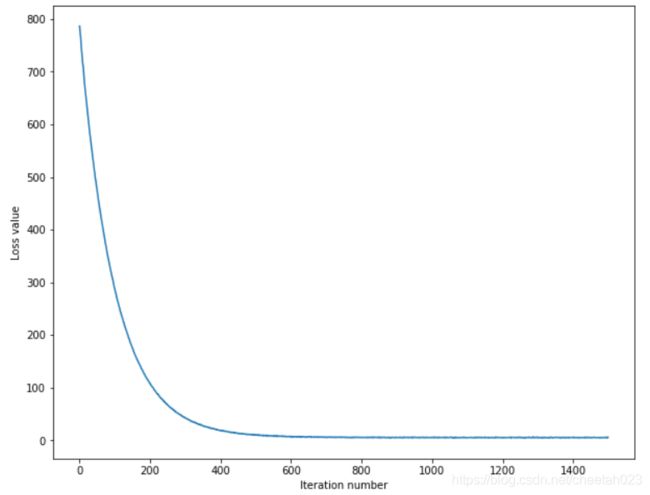

SGD

接下来就是使用SGD训练减少loss,优化参数

流程大概就是:

随机初始化W

for(迭代次数):

{

随机选batch_size个训练样本

计算loss ,gradient

W -= learning_rate * gradient

}

def train(self, X, y, learning_rate=1e-3, reg=1e-5, num_iters=100,

batch_size=200, verbose=False):

"""

Train this linear classifier using stochastic gradient descent.

Inputs:

- X: A numpy array of shape (N, D) containing training data; there are N

training samples each of dimension D.

- y: A numpy array of shape (N,) containing training labels; y[i] = c

means that X[i] has label 0 <= c < C for C classes.

- learning_rate: (float) learning rate for optimization.

- reg: (float) regularization strength.

- num_iters: (integer) number of steps to take when optimizing

- batch_size: (integer) number of training examples to use at each step.

- verbose: (boolean) If true, print progress during optimization.

Outputs:

A list containing the value of the loss function at each training iteration.

"""

num_train, dim = X.shape

num_classes = np.max(y) + 1 # assume y takes values 0...K-1 where K is number of classes

if self.W is None:

# lazily initialize W

self.W = 0.001 * np.random.randn(dim, num_classes)

# Run stochastic gradient descent to optimize W

loss_history = []

for it in range(num_iters):

X_batch = None

y_batch = None

#########################################################################

# TODO: #

# Sample batch_size elements from the training data and their #

# corresponding labels to use in this round of gradient descent. #

# Store the data in X_batch and their corresponding labels in #

# y_batch; after sampling X_batch should have shape (batch_size, dim) #

# and y_batch should have shape (batch_size,) #

# #

# Hint: Use np.random.choice to generate indices. Sampling with #

# replacement is faster than sampling without replacement. #

#########################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

lists = np.random.choice(num_train, batch_size)

X_batch = X[lists,:]

y_batch = y[lists]

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

# evaluate loss and gradient

loss, grad = self.loss(X_batch, y_batch, reg)

loss_history.append(loss)

# perform parameter update

#########################################################################

# TODO: #

# Update the weights using the gradient and the learning rate. #

#########################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

self.W -= learning_rate * grad

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

if verbose and it % 100 == 0:

print('iteration %d / %d: loss %f' % (it, num_iters, loss))

return loss_history可以得到loss曲线和accuracy:

training accuracy: 0.374776

validation accuracy: 0.385000

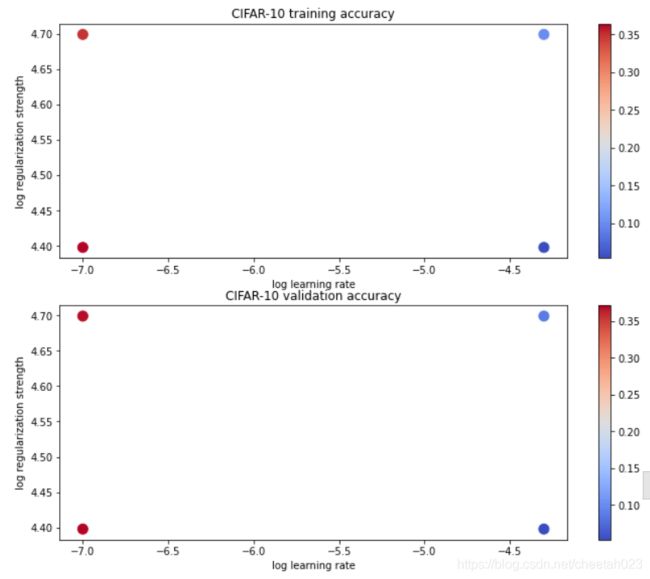

tune hyperparameters

最后是调超参数,找到validation accuracy最高的超参数(learning_rate 和 reg)

# Provided as a reference. You may or may not want to change these hyperparameters

learning_rates = [1e-7, 5e-5]

regularization_strengths = [2.5e4, 5e4]

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

for lr in learning_rates:

for reg in regularization_strengths:

svm = LinearSVM()

svm.train(X_train, y_train, learning_rate=lr, reg=reg, num_iters=1500)

y_train_pred = svm.predict(X_train)

train_accuracy = np.mean(y_train == y_train_pred)

y_val_pred = svm.predict(X_val)

val_accuracy = np.mean(y_val == y_val_pred)

results[(lr,reg)] = (train_accuracy, val_accuracy)

if val_accuracy > best_val:

best_val = val_accuracy

best_svm = svm

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****打印结果:

lr 1.000000e-07 reg 2.500000e+04 train accuracy: 0.363878 val accuracy: 0.371000

lr 1.000000e-07 reg 5.000000e+04 train accuracy: 0.349000 val accuracy: 0.368000

lr 5.000000e-05 reg 2.500000e+04 train accuracy: 0.054041 val accuracy: 0.053000

lr 5.000000e-05 reg 5.000000e+04 train accuracy: 0.100265 val accuracy: 0.087000

best validation accuracy achieved during cross-validation: 0.371000

在test测试集上的accuracy:

linear SVM on raw pixels final test set accuracy: 0.361000