TextCNN文本分类详解--使用TensorFlow一步步带你实现简单TextCNN

TextCNN文本分类详解–使用TensorFlow一步步带你实现简单TextCNN

前言

近期在项目组工作中,使用TextCNN对文本分类取得了不错的准确率,为了更清晰地了解TextCNN的结构,特翻译TensorFlow实现的TextCNN一文。

一、什么是TextCNN

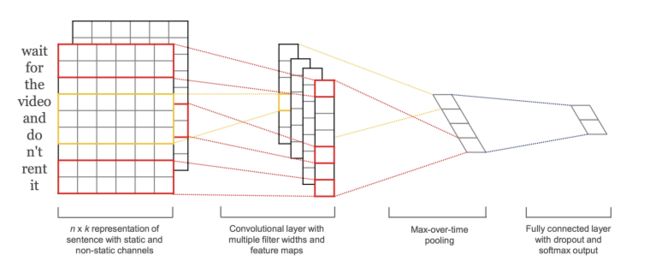

TextCNN 是利用卷积神经网络对文本进行分类的算法,由 Yoon Kim 在 《Convolutional Neural Networks for Sentence Classification》 中提出.

第一层将单词嵌入到低维矢量中。下一层使用多个过滤器大小对嵌入的单词向量执行卷积。例如,一次滑动3,4或5个单词。接下来,将卷积层的结果最大池化为一个长特征向量,添加dropout正则,并使用softmax对结果进行分类。

二、一步一步带你实现简单的TextCNN

代码使用dennybritz实现的[Github]cnn-text-classification-tf,下面将对其代码内容进行详解

- TEXTCNN类

TextCNN类的初始化方法如下:

class TextCNN(object):

def __init__(self,sequence_length, num_classes, vocab_size,

embedding_size, filter_sizes, num_filters,l2_reg_lambda=0.0):

各参数的介绍:

各参数的介绍:

| 参数名 | 意义 | 例 |

|---|---|---|

| sequence_length | 句子长度--输入句子的最大长度 | 20 |

| num_classes | 分类数 | 2400 |

| vocab_size | 单词数 | 3000 |

| embedding_size | 单词嵌入维度 | 128 |

| filter_sizes | 卷积过滤器的大小 | [3, 4, 5] -- 使用大小分别为3,4,5的过滤器,每个过滤器有一个与之对应的num_filters,本例共有3*[num_filters]个过滤器 |

| num_filters | 每个过滤器大小的过滤器数量 | |

| l2_reg_lambda | l2正则权值 | 0.0 |

# Placeholders for input, output and dropout

self.input_x = tf.placeholder(tf.int32, [None, sequence_length], name="input_x")

self.input_y = tf.placeholder(tf.float32, [None, num_classes], name="input_y")

self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

这里,我们创建了placeholder变量作为训练的输入和测试的输入,placeholder的第二项变量中第一维为batch_size,None意味着该维度可为任意值,使用None将该维度交给网络自由决定。

将神经元保存在dropout层中的概率也作为网络的输入,因为我们在测试时不使用dropout。

1.2 Embedding层

Embedding层将单词向量使用更低维向量表示。

with tf.device('/cpu:0'), tf.name_scope("embedding"):

W = tf.Variable(

tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0),

name="W")

self.embedded_chars = tf.nn.embedding_lookup(W, self.input_x)

self.embedded_chars_expanded = tf.expand_dims(self.embedded_chars, -1)

这里我们使用了一些特殊的特性:

tf.device(’/cpu:0’)将Embedding操作交给cpu执行。默认情况下TensorFlow会将该操作交给gpu执行(前提是有gpu),但是当前embedding在gpu中执行会报错。

tf.name_scope(“embedding”):本操作将embedding加入到命名空间(name scope)中。命名空间将所有操作加入到名为embedding的顶层节点中,因此在使用TensorBoard进行网络可视化时能有一个良好的层次结构。

W是我们在训练中学习的嵌入矩阵。 我们使用随机均匀分布来初始化它。 tf.nn.embedding_lookup创建实际的嵌入操作。 嵌入操作的结果是形状为[None,sequence_length,embedding_size]的三维张量。

TensorFlow的卷积转换操作具有对应于批次,宽度,高度和通道的尺寸的4维张量。 我们嵌入的结果不包含通道尺寸,所以我们手动添加,留下一层shape为[None,sequence_length,embedding_size,1]。

1.3 Convolution and Max-Pooling Layers

下面开始构建卷积层,再进行max-pooling。因为每个卷积产生不同形状的张量,因此为他们中的每一个创建一个层,然后合并结果为一个大的特征向量。

pooled_outputs = [] # 池化输出结果

for i, filter_size in enumerate(filter_sizes):

# 遍历多个filter_size

with tf.name_scope("conv-maxpool-%s" % filter_size):

# Convolution Layer

filter_shape = [filter_size, embedding_size, 1, num_filters]

W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1), name="W")

b = tf.Variable(tf.constant(0.1, shape=[num_filters]), name="b")

conv = tf.nn.conv2d(

self.embedded_chars_expanded,

W,

strides=[1, 1, 1, 1],

padding="VALID",

name="conv")

# Apply nonlinearity

h = tf.nn.relu(tf.nn.bias_add(conv, b), name="relu")

# Max-pooling over the outputs

pooled = tf.nn.max_pool(

h,

ksize=[1, sequence_length - filter_size + 1, 1, 1],

strides=[1, 1, 1, 1],

padding='VALID',

name="pool")

pooled_outputs.append(pooled)

# Combine all the pooled features

num_filters_total = num_filters * len(filter_sizes)

self.h_pool = tf.concat(3, pooled_outputs)

self.h_pool_flat = tf.reshape(self.h_pool, [-1, num_filters_total])

这里,W是过滤器矩阵,h是将非线性应用于卷积输出的结果。 每个过滤器在整个嵌入中滑动,但是它涵盖的字数有所不同。 VALID填充意味着我们在没有填充边缘的情况下将过滤器滑过我们的句子,执行给我们输出形状[1,sequence_length - filter_size + 1,1,1]的窄卷积。 在特定过滤器大小的输出上执行最大值池将留下一张张量的形状[batch_size,1,num_filters]。 这本质上是一个特征向量,其中最后一个维度对应于我们的特征。 一旦我们从每个过滤器大小得到所有的汇总输出张量,我们将它们组合成一个长形特征向量[batch_size,num_filters_total]。 在tf.reshape中使用-1可以告诉TensorFlow在可能的情况下平坦化维度。

1.4 Dropout层

Dropout是使卷积神经网络正则化的最受欢迎的方法,Dropout的想法很简单:Dropout层随机“禁用”神经元的一部分,这可以防止神经元共同适应并迫使他们独立学习有用的特征。神经元中启用的比例是由初始化参数中的dropout_keep_prob决定的,训练时我们将它定义为0.5,而在测试时定义为1(禁用Dropout)。

# Add dropout

with tf.name_scope("dropout"):

self.h_drop = tf.nn.dropout(self.h_pool_flat, self.dropout_keep_prob)

1.5 评估和预测

使用从max-pooling中得到的特征向量(带Dropout),我们可以通过矩阵乘法生成预测并选择得分最高的分类,我们使用softmax将原分数转换为归一化概率,但它并不会改变预测结果。

with tf.name_scope("output"):

W = tf.Variable(tf.truncated_normal([num_filters_total, num_classes], stddev=0.1), name="W")

b = tf.Variable(tf.constant(0.1, shape=[num_classes]), name="b")

self.scores = tf.nn.xw_plus_b(self.h_drop, W, b, name="scores")

self.predictions = tf.argmax(self.scores, 1, name="predictions")

其中,tf.nn.xw_plus是一个实现 W x + b Wx+b Wx+b矩阵乘法的一个封装方法。

1.6 loss和准确率计算

我们可以使用1.5得到的score来定义loss function。分类问题的标准损失方程为交叉熵损失方程。

# Calculate mean cross-entropy loss

with tf.name_scope("loss"):

losses = tf.nn.softmax_cross_entropy_with_logits(self.scores, self.input_y)

self.loss = tf.reduce_mean(losses)

其中,tf.nn.softmax_cross_entropy_with_logits是一个对每个分类计算交叉熵损失的封装方法,通过score和正确分类作为参数,我们可以得到每一类的loss,对其求平均值,可以得到平均损失。

我们也定义了准确率函数。

# Calculate Accuracy

with tf.name_scope("accuracy"):

correct_predictions = tf.equal(self.predictions, tf.argmax(self.input_y, 1))

self.accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"), name="accuracy")

1.7 网络的可视化

到这里,我们已经完成了网络的构建,为了得到网络的可视化图,我们可以使用TensorBoard对网络进行可视化。

图4 网络可视化图

1.8 训练

FLAGS = tf.flags.FLAGS

with tf.Graph().as_default():

session_conf = tf.ConfigProto(

allow_soft_placement=FLAGS.allow_soft_placement,

log_device_placement=FLAGS.log_device_placement

)

sess = tf.Session(config=session_conf)

with sess.as_default():

显式创建graph便于训练结束后释放资源,

1.9 实例化CNN和最小化损失

当我们实例化我们的TextCNN模型时,所有定义的变量和操作将被放置在上面创建的默认图和会话中。

cnn = TextCNN(

sequence_length=x_train.shape[1],

num_classes=y_train.shape[1],

vocab_size=len(vocab_processor.vocabulary)

embedding_size=FLAGS.num_filters,

filter_sizes = map(int, FLAGS.filter_sizes.split(",")),

num_filters = FLAGS.num_filters)

接下来,我们定义如何优化网络的损失函数。 TensorFlow有几个内置优化器。 我们正在使用Adam优化器。

# Define Training procedure

global_step = tf.Variable(0,name="global_step",trainable=False)

optimizer = tf.train.AdamOptimizer(1e-4)

grads_and_vars = optimizer.compute_gradients(cnn.loss)

train_op = optimizer.apply_gradients(grads_and_vars,global_step=global_step)

1.10 概览(SUMMARIES)

TensorFlow有一个概述(summaries),可以在训练和评估过程中跟踪和查看各种数值。 例如,您可能希望跟踪您的损失和准确性随时间的变化。您还可以跟踪更复杂的数值,例如图层激活的直方图。 summaries是序列化对象,并使用SummaryWriter写入磁盘。

# Output directory for models and summaries

timestamp = str(int(time.time()))

out_dir = os.path.abspath(os.path.join(os.path.curdir, "runs", timestamp))

print("Writing to {}\n".format(out_dir))

# Summaries for loss and accuracy

loss_summary = tf.scalar_summary("loss", cnn.loss)

acc_summary = tf.scalar_summary("accuracy", cnn.accuracy)

# Train Summaries

train_summary_op = tf.merge_summary([loss_summary, acc_summary])

train_summary_dir = os.path.join(out_dir, "summaries", "train")

train_summary_writer = tf.train.SummaryWriter(train_summary_dir, sess.graph_def)

# Dev summaries

dev_summary_op = tf.merge_summary([loss_summary, acc_summary])

dev_summary_dir = os.path.join(out_dir, "summaries", "dev")

dev_summary_writer = tf.train.SummaryWriter(dev_summary_dir, sess.graph_def)

在这里,我们分别跟踪培训和评估的总结。 在我们的情况下,这些数值是相同的,但是您可能只有在训练过程中跟踪的数值(如参数更新值)。 tf.merge_summary是将多个摘要操作合并到可以执行的单个操作中的便利函数。

1.11 CHECKPOINTING

通常使用TensorFlow的另一个功能是checkpointing- 保存模型的参数以便稍后恢复。Checkpoints 可用于在以后的时间继续训练,或使用 early stopping选择最佳参数设置。 使用Saver对象创建 Checkpoints。

# Checkpointing

checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))

checkpoint_prefix = os.path.join(checkpoint_dir, "model")

# Tensorflow assumes this directory already exists so we need to create it

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

saver = tf.train.Saver(tf.all_variables())

1.12 初始化变量

在训练模型前,我们需要初始化变量。

# Initialize all variables

sess.run(tf.global_variables_initializer())

1.13 定义单步训练函数

现在我们来定义一个训练步骤的函数,评估一批数据上的模型并更新模型参数。

def train_step(x_batch,y_batch):

"""

A single training step

"""

feed_dict = {

cnn.input_x:x_batch,

cnn.input_y:y_batch,

cnn.dropout_keep_prob:FLAGS.dropout_keep_prob

}

_,step,summaries,loss,accuracy = sess.run(

[train_op,global_step,train_summary_op,cnn.loss,cnn.accuracy],feed_dict

)

time_str = datetime.datetime.now().isoformat()

print("{}:step{},loss{:g},acc{:g}".format(time_str,step,loss,accuracy))

train_summary_writer.add_summary(summaries,step)

def dev_step(x_batch, y_batch, writer=None):

"""

Evaluates model on a dev set

"""

feed_dict = {

cnn.input_x: x_batch,

cnn.input_y: y_batch,

cnn.dropout_keep_prob: 1.0

}

step, summaries, loss, accuracy = sess.run(

[global_step, dev_summary_op, cnn.loss, cnn.accuracy],

feed_dict)

time_str = datetime.datetime.now().isoformat()

print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))

if writer:

writer.add_summary(summaries, step)

1.14 TRAINING LOOP

下面写训练循环。

# Generate batches

batches = data_helpers.batch_iter(

zip(x_train, y_train), FLAGS.batch_size, FLAGS.num_epochs)

# Training loop. For each batch...

for batch in batches:

x_batch, y_batch = zip(*batch)

train_step(x_batch, y_batch)

current_step = tf.train.global_step(sess, global_step)

if current_step % FLAGS.evaluate_every == 0:

print("\nEvaluation:")

dev_step(x_dev, y_dev, writer=dev_summary_writer)

print("")

if current_step % FLAGS.checkpoint_every == 0:

path = saver.save(sess, checkpoint_prefix, global_step=current_step)

print("Saved model checkpoint to {}\n".format(path))

- 模型可优化内容

使用word2vec词向量初始化embedding层,为了达到提升,你需要使用300维以上的词向量。

限制最后一层权重向量的L2范数,就像原始文献一样。你可以通过定义一个新的操作,在每次训练步骤之后更新权重值。

将L2正则化添加到网络以防止过拟合,同时也提高dropout比率。(Github上的代码已经包括L2正则化,但默认情况下禁用)

添加权重更新和图层操作的直方图summaries,并在TensorBoard中进行可视化。

后续内容待更

参考文献

Convolutional Neural Networks for Sentence Classification

Implementing a CNN for Text Classification in TensorFlow

cnn-text-classification-tf

2018.5.5更新:使用word2vec作为输入进行训练

使用Word2Vec

使用word2vec训练其实早已写完代码,一直没有整理上来。先将修改后的代码放到这里,以后有时间再介绍修改过程。

text_cnn.py

import tensorflow as tf

import numpy as np

class TextCNN(object):

def __init__(

self, sequence_length, num_classes, vocab_size,

embedding_size, filter_sizes, num_filters, l2_reg_lambda=0.0):

# Placeholders for input, output and dropout

self.input_x = tf.placeholder(tf.int32, [None, sequence_length], name="input_x")

self.input_y = tf.placeholder(tf.float32, [None, num_classes], name="input_y")

self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

self.learning_rate = tf.placeholder(tf.float32, name='learning_rate')

# Keeping track of l2 regularization loss (optional)

l2_loss = tf.constant(0.0)

# Embedding layer

with tf.device('/cpu:0'), tf.name_scope("embedding"):

self.W = tf.Variable(

tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0),

name="W")

self.embedded_chars = tf.nn.embedding_lookup(self.W, self.input_x)

self.embedded_chars_expanded = tf.expand_dims(self.embedded_chars, -1)

# Create a convolution + maxpool layer for each filter size

pooled_outputs = []

for i, filter_size in enumerate(filter_sizes):

with tf.name_scope("conv-maxpool-%s" % filter_size):

# Convolution Layer

filter_shape = [filter_size, embedding_size, 1, num_filters]

W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1), name="W")

b = tf.Variable(tf.constant(0.1, shape=[num_filters]), name="b")

conv = tf.nn.conv2d(

self.embedded_chars_expanded,

W,

strides=[1, 1, 1, 1],

padding="VALID",

name="conv")

# Apply nonlinearity

h = tf.nn.relu(tf.nn.bias_add(conv, b), name="relu")

# Maxpooling over the outputs

pooled = tf.nn.max_pool(

h,

ksize=[1, sequence_length - filter_size + 1, 1, 1],

strides=[1, 1, 1, 1],

padding='VALID',

name="pool")

pooled_outputs.append(pooled)

# Combine all the pooled features

num_filters_total = num_filters * len(filter_sizes)

self.h_pool = tf.concat(pooled_outputs, 3)

self.h_pool_flat = tf.reshape(self.h_pool, [-1, num_filters_total])

# Add dropout

with tf.name_scope("dropout"):

self.h_drop = tf.nn.dropout(self.h_pool_flat, self.dropout_keep_prob)

# Final (unnormalized) scores and predictions

with tf.name_scope("output"):

W = tf.get_variable(

"W",

shape=[num_filters_total, num_classes],

initializer=tf.contrib.layers.xavier_initializer())

b = tf.Variable(tf.constant(0.1, shape=[num_classes]), name="b")

l2_loss += tf.nn.l2_loss(W)

l2_loss += tf.nn.l2_loss(b)

self.scores = tf.nn.xw_plus_b(self.h_drop, W, b, name="scores")

self.predictions = tf.argmax(self.scores, 1, name="predictions")

# CalculateMean cross-entropy loss

with tf.name_scope("loss"):

losses = tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels=self.input_y)

self.loss = tf.reduce_mean(losses) + l2_reg_lambda * l2_loss

# Accuracy

with tf.name_scope("accuracy"):

correct_predictions = tf.equal(self.predictions, tf.argmax(self.input_y, 1))

self.accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"), name="accuracy")

data_helpers.py

import numpy as np

import re

import jieba

import itertools

from collections import Counter

from tensorflow.contrib import learn

from gensim.models import Word2Vec

def clean_str(string):

"""

Tokenization/string cleaning for all datasets except for SST.

Original taken from https://github.com/yoonkim/CNN_sentence/blob/master/process_data.py

"""

string = re.sub(r"[^A-Za-z0-9(),!?\'\`]", " ", string)

string = re.sub(r"\'s", " \'s", string)

string = re.sub(r"\'ve", " \'ve", string)

string = re.sub(r"n\'t", " n\'t", string)

string = re.sub(r"\'re", " \'re", string)

string = re.sub(r"\'d", " \'d", string)

string = re.sub(r"\'ll", " \'ll", string)

string = re.sub(r",", " , ", string)

string = re.sub(r"!", " ! ", string)

string = re.sub(r"

train.py

#! /usr/bin/env python

import tensorflow as tf

import numpy as np

import os

import time

import datetime

import data_helpers

from text_cnn import TextCNN

from tensorflow.contrib import learn

import yaml

# Parameters

# ==================================================

# Data loading params

tf.app.flags.DEFINE_float("dev_sample_percentage", .1, "Percentage of the training data to use for validation")

tf.app.flags.DEFINE_string("train_file", "../data/train_data.txt", "Train file source.")

tf.app.flags.DEFINE_string("test_file", "../data/test_data.txt", "Test file source.")

# Model Hyperparameters

tf.app.flags.DEFINE_integer("embedding_dim", 128, "Dimensionality of character embedding (default: 128)")

tf.app.flags.DEFINE_string("filter_sizes", "3,4,5", "Comma-separated filter sizes (default: '3,4,5')")

tf.app.flags.DEFINE_integer("num_filters", 128, "Number of filters per filter size (default: 128)")

tf.app.flags.DEFINE_float("dropout_keep_prob", 0.5, "Dropout keep probability (default: 0.5)")

tf.app.flags.DEFINE_float("l2_reg_lambda", 0.0, "L2 regularization lambda (default: 0.0)")

# Training parameters

tf.app.flags.DEFINE_integer("batch_size", 128, "Batch Size (default: 64)")

tf.app.flags.DEFINE_integer("num_epochs", 100, "Number of training epochs (default: 200)")

tf.app.flags.DEFINE_integer("evaluate_every", 100, "Evaluate model on dev set after this many steps (default: 100)")

tf.app.flags.DEFINE_integer("checkpoint_every", 1000, "Save model after this many steps (default: 100)")

tf.app.flags.DEFINE_integer("num_checkpoints", 5, "Number of checkpoints to store (default: 5)")

# Misc Parameters

tf.app.flags.DEFINE_boolean("allow_soft_placement", True, "Allow device soft device placement")

tf.app.flags.DEFINE_boolean("log_device_placement", False, "Log placement of ops on devices")

FLAGS = tf.app.flags.FLAGS

print("\nParameters:")

for attr, value in sorted(FLAGS.__flags.items()):

print("{}={}".format(attr.upper(), value))

print("")

# Data Preparation

# ==================================================

# Load data

with open("config.yml", 'r') as ymlfile:

cfg = yaml.load(ymlfile)

print("Loading data...")

x_train, y_train, x_test, y_test, vocab_processor = data_helpers.load_train_dev_data(FLAGS.train_file, FLAGS.test_file)

embedding_name = cfg['word_embeddings']['default']

embedding_dimension = cfg['word_embeddings'][embedding_name]['dimension']

# Training

# ==================================================

with tf.Graph().as_default():

session_conf = tf.ConfigProto(

allow_soft_placement=FLAGS.allow_soft_placement,

log_device_placement=FLAGS.log_device_placement)

sess = tf.Session(config=session_conf)

with sess.as_default():

cnn = TextCNN(

sequence_length=x_train.shape[1],

num_classes=y_train.shape[1],

vocab_size=len(vocab_processor.vocabulary_),

embedding_size=embedding_dimension,

filter_sizes=list(map(int, FLAGS.filter_sizes.split(","))),

num_filters=FLAGS.num_filters,

l2_reg_lambda=FLAGS.l2_reg_lambda)

cnn.learning_rate = 0.01

# Define Training procedure

global_step = tf.Variable(0, name="global_step", trainable=False)

optimizer = tf.train.AdamOptimizer(cnn.learning_rate)

grads_and_vars = optimizer.compute_gradients(cnn.loss)

train_op = optimizer.apply_gradients(grads_and_vars, global_step=global_step)

# Keep track of gradient values and sparsity (optional)

grad_summaries = []

for g, v in grads_and_vars:

if g is not None:

grad_hist_summary = tf.summary.histogram("{}/grad/hist".format(v.name), g)

sparsity_summary = tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))

grad_summaries.append(grad_hist_summary)

grad_summaries.append(sparsity_summary)

grad_summaries_merged = tf.summary.merge(grad_summaries)

# Output directory for models and summaries

timestamp = str(int(time.time()))

out_dir = os.path.abspath(os.path.join(os.path.curdir, "runs", timestamp))

print("Writing to {}\n".format(out_dir))

# Summaries for loss and accuracy

loss_summary = tf.summary.scalar("loss", cnn.loss)

acc_summary = tf.summary.scalar("accuracy", cnn.accuracy)

# Train Summaries

train_summary_op = tf.summary.merge([loss_summary, acc_summary, grad_summaries_merged])

train_summary_dir = os.path.join(out_dir, "summaries", "train")

train_summary_writer = tf.summary.FileWriter(train_summary_dir, sess.graph)

# Dev summaries

dev_summary_op = tf.summary.merge([loss_summary, acc_summary])

dev_summary_dir = os.path.join(out_dir, "summaries", "dev")

dev_summary_writer = tf.summary.FileWriter(dev_summary_dir, sess.graph)

# Checkpoint directory. Tensorflow assumes this directory already exists so we need to create it

checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))

checkpoint_prefix = os.path.join(checkpoint_dir, "model")

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

saver = tf.train.Saver(tf.global_variables(), max_to_keep=FLAGS.num_checkpoints)

# Write vocabulary

vocab_processor.save(os.path.join(out_dir, "vocab"))

# Initialize all variables

sess.run(tf.global_variables_initializer())

vocabulary = vocab_processor.vocabulary_

initW = data_helpers.load_embedding_vectors_word2vec(vocabulary,

cfg['word_embeddings']['word2vec']['path'],

cfg['word_embeddings']['word2vec']['binary'])

print(initW.shape)

sess.run(cnn.W.assign(initW))

def train_step(x_batch, y_batch):

"""

A single training step

"""

feed_dict = {

cnn.input_x: x_batch,

cnn.input_y: y_batch,

cnn.dropout_keep_prob: FLAGS.dropout_keep_prob

}

_, step, summaries, loss, accuracy = sess.run(

[train_op, global_step, train_summary_op, cnn.loss, cnn.accuracy],

feed_dict)

time_str = datetime.datetime.now().isoformat()

print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))

train_summary_writer.add_summary(summaries, step)

def dev_step(x_batch, y_batch, writer=None):

"""

Evaluates model on a dev set

"""

feed_dict = {

cnn.input_x: x_batch,

cnn.input_y: y_batch,

cnn.dropout_keep_prob: 1.0

}

step, summaries, loss, accuracy = sess.run(

[global_step, dev_summary_op, cnn.loss, cnn.accuracy],

feed_dict)

time_str = datetime.datetime.now().isoformat()

print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))

if writer:

writer.add_summary(summaries, step)

if step % FLAGS.batch_size == 0:

print('epoch ', step % FLAGS.batch_size)

# Generate batches

batches = data_helpers.batch_iter(

list(zip(x_train, y_train)), FLAGS.batch_size, FLAGS.num_epochs)

# Training loop. For each batch...

for batch in batches:

x_batch, y_batch = zip(*batch)

train_step(x_batch, y_batch)

current_step = tf.train.global_step(sess, global_step)

if current_step % FLAGS.evaluate_every == 0:

print("\nEvaluation:")

dev_step(x_test, y_test, writer=dev_summary_writer)

print("")

if current_step % FLAGS.checkpoint_every == 0:

path = saver.save(sess, checkpoint_prefix, global_step=current_step)

print("Saved model checkpoint to {}\n".format(path))