Kaggle Competition Series: (5) Mercari Price Suggestion Challenge (product price prediction)

keras+RNN

I. Learning the sklearn package

from sklearn.preprocessing import LabelEncoder,MinMaxScaler

from sklearn.model_selection import train_test_split

1. LabelEncoder from sklearn.preprocessing ==> label encoding

le = LabelEncoder()  # LabelEncoder is a class; remember the parentheses to instantiate it

le.fit(np.hstack([train.category_name, test.category_name]))

train.category_name = le.transform(train.category_name)

test.category_name = le.transform(test.category_name)

le.fit(np.hstack([train.brand_name, test.brand_name]))

train.brand_name = le.transform(train.brand_name)

test.brand_name = le.transform(test.brand_name)

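del le  # throwaway variable; remember to delete it manually

Call fit() first, then transform(). This converts a multi-class string attribute into integer codes. Note that, for the same category to map to the same integer in both the training and test sets, the encoder has to be fitted on both columns joined with np.hstack.

(1) np.vstack: stacks along axis 0; (2) np.hstack: stacks along axis 1 (for 1-D inputs such as the columns above, it simply joins them end to end); (3) np.dstack: stacks along axis 2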
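
To make the stacking axes concrete, here is a small sketch on made-up arrays (a, b, col_train and col_test are illustrative names, not competition data). It shows the resulting shapes for 2-D inputs, and that np.hstack on two 1-D columns gives one longer 1-D array, which is what the encoder is fitted on.

```python
import numpy as np

a = np.zeros((2, 3))
b = np.ones((2, 3))

shape_v = np.vstack([a, b]).shape  # rows are appended (axis 0)
shape_h = np.hstack([a, b]).shape  # columns are appended (axis 1)
shape_d = np.dstack([a, b]).shape  # a new third axis is filled (axis 2)

# Toy 1-D "columns": hstack joins them end to end.
col_train = np.array(["shoes", "bags"])
col_test = np.array(["bags", "hats"])
combined = np.hstack([col_train, col_test])

print(shape_v, shape_h, shape_d, combined.shape)
```

Because the combined array holds every category seen in either set, an encoder fitted on it can transform both columns without meeting an unknown label.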

2. MinMaxScaler ==> min-max scaling to a fixed range

#SCALE target variable

train["target"] = np.log(train.price+1)  # price is heavily skewed, so np.log brings it closer to a normal distribution; the +1 avoids log(0) = -infinity

target_scaler = MinMaxScaler(feature_range=(-1, 1))  # feature_range sets the output range

train["target"] = target_scaler.fit_transform(np.array(train.target).reshape(-1,1))  # fit() and transform() combined into fit_transform(); only an ndarray supports reshape(), so the Series is converted with np.array first

pd.DataFrame(train.target).hist()  # plot a histogram
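
The target transformation has to be undone in the reverse order at prediction time: first the scaling, then the logarithm. Below is a minimal round-trip sketch in plain Python. The helper fit_min_max is a hand-written stand-in for MinMaxScaler (an assumption for illustration, not sklearn's code), and the prices are toy values.

```python
import math

def fit_min_max(values, low=-1.0, high=1.0):
    """Return (scale, inverse) functions mapping [min, max] of values onto [low, high]."""
    v_min, v_max = min(values), max(values)
    span = v_max - v_min  # assumed non-zero in this sketch

    def scale(v):
        return low + (v - v_min) * (high - low) / span

    def inverse(s):
        return v_min + (s - low) * span / (high - low)

    return scale, inverse

prices = [0.0, 3.0, 10.0, 26.0, 2000.0]  # toy values, not competition data

log_prices = [math.log(p + 1) for p in prices]   # forward step 1: log(price + 1)
scale, inverse = fit_min_max(log_prices)
targets = [scale(v) for v in log_prices]         # forward step 2: scale into [-1, 1]

# Backward: undo the scaling, then undo the log with exp(.) - 1.
recovered = [math.exp(inverse(t)) - 1 for t in targets]
print(targets)
print(recovered)
```

Since the forward step adds 1 before the log, the backward step has to subtract 1 after the exponential; using +1 there would shift every recovered price by 2.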

3. train_test_split from sklearn.model_selection ==> splits the training set into a training set and a validation set

#EXTRACT DEVELOPMENT TEST

dtrain, dvalid = train_test_split(train, random_state=123, train_size=0.99)

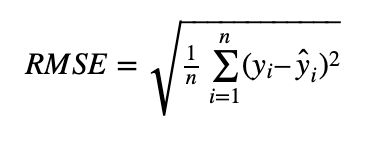

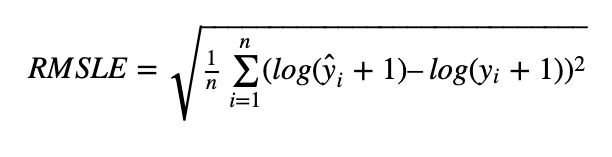

II. The RMSLE loss function (both metrics measure the distance between predicted and true values)

RMSE (Root Mean Squared Error) and RMSLE (Root Mean Squared Logarithmic Error)
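
Written out, with y_i the true value, \hat{y}_i the prediction and n the number of samples (the symbols are chosen here for illustration; the +1 inside the logarithm matches the code implementation later in this section), the two metrics are:

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2},
\qquad
\mathrm{RMSLE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\log(\hat{y}_i + 1) - \log(y_i + 1)\right)^2}
```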

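1. RMSLE penalizes under-prediction more than over-prediction, which suits scenarios where under-predicting is the costlier mistake, such as forecasting demand for shared bikes.

Suppose the true value is 1000. If the prediction is 600, then RMSE=400 and RMSLE=0.510

Suppose the true value is 1000. If the prediction is 1400, then RMSE=400 and RMSLE=0.336

So for the same RMSE, the case where the prediction is below the true value has the larger RMSLE, i.e. under-prediction is penalized more heavily.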
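
The two figures can be reproduced with a few lines of plain Python. This is a minimal sketch for single-sample inputs; the function names rmse and rmsle are local to the example.

```python
import math

def rmse(y_true, y_pred):
    n = len(y_true)
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / n)

def rmsle(y_true, y_pred):
    n = len(y_true)
    squared = [(math.log(p + 1) - math.log(t + 1)) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / n)

# (RMSE, RMSLE) for an under-prediction and an over-prediction of the same size.
under = (rmse([1000], [600]), rmsle([1000], [600]))
over = (rmse([1000], [1400]), rmsle([1000], [1400]))
print(under)
print(over)
```

Both cases are 400 away from the truth, but in log space 600 is further from 1000 than 1400 is, which is where the asymmetry comes from.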

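2. When the values to predict span a wide range, RMSE is dominated by the large ones. Even if many small values are predicted accurately, a single badly predicted large value makes RMSE large. Conversely, a worse algorithm that is somewhat more accurate on that one large value but off on many of the small ones may end up with a smaller RMSE than the first. Taking the log before computing RMSE mitigates this to some extent. RMSE is generally only reasonable when the target values are spread fairly evenly over a limited range.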
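
A small made-up example shows the effect. The targets and the two prediction sets below are invented for illustration: pred_a gets every small value exactly right but misses the large one by 10%, while pred_b is off by 100% on every small value but misses the large one by only 5%.

```python
import math

def rms(errors):
    """Root of the mean of the squared errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

y_true = [10, 10, 10, 10, 10000]   # made-up targets with one large value
pred_a = [10, 10, 10, 10, 9000]    # small values exact, large value off by 10%
pred_b = [20, 20, 20, 20, 9500]    # small values off by 100%, large value off by 5%

def rmse(pred):
    return rms([p - t for p, t in zip(pred, y_true)])

def rmsle(pred):
    return rms([math.log(p + 1) - math.log(t + 1) for p, t in zip(pred, y_true)])

scores = {name: (rmse(p), rmsle(p)) for name, p in [("a", pred_a), ("b", pred_b)]}
print(scores)
```

RMSE ranks pred_b ahead because only the large value matters to it, whereas RMSLE ranks pred_a ahead because it compares relative errors.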

Reference: https://blog.csdn.net/qq_24671941/article/details/95868747

Implementation:

import math

def rmsle(y,y_pred):

    assert len(y) == len(y_pred)  # assertion: both inputs must have the same length

    to_sum = [(math.log(y_pred[i] + 1) - math.log(y[i] + 1)) ** 2.0 for i,pred in enumerate(y_pred)]

    return (sum(to_sum) * (1.0/len(y))) ** 0.5

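III. Filling missing values

In both the training and test sets, the three fields category_name, brand_name and item_description contain missing values. Since all three hold strings and there is no way to tell how the missing entries relate to the present ones, the missing values are simply replaced with the string "missing".

def handle_missing(dataset):

    # assign the filled column back instead of relying on inplace=True through attribute access

    dataset["category_name"] = dataset["category_name"].fillna('missing')

    dataset["brand_name"] = dataset["brand_name"].fillna('missing')

    dataset["item_description"] = dataset["item_description"].fillna('missing')

    return dataset  # without this return, train and test below would become None

train = handle_missing(train)

test = handle_missing(test)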

IV. Converting string attributes to numeric form (free-text strings)

The name and item_description attributes

from keras.preprocessing.text import Tokenizer  # sklearn also has a preprocessing module

raw_text = np.hstack([train.item_description.str.lower(), train.name.str.lower()])

tok_raw = Tokenizer()  # must be instantiated first

tok_raw.fit_on_texts(raw_text)  # fit: learn the vocabulary

train["seq_item_description"] = tok_raw.texts_to_sequences(train.item_description.str.lower())  # apply it, the equivalent of transform()

test["seq_item_description"] = tok_raw.texts_to_sequences(test.item_description.str.lower())

train["seq_name"] = tok_raw.texts_to_sequences(train.name.str.lower())

test["seq_name"] = tok_raw.texts_to_sequences(test.name.str.lower())

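Note: although raw_text is built only from the item_description and name columns of train, the fitted tokenizer can still be applied directly to test. Even so, I (leaf) think the two test columns should also go into np.hstack and be fitted together, so that test does not contain words never seen in train. Also mind letter case: lower() converts everything to lowercase.

Key functions: fit_on_texts() and texts_to_sequences()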
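
To show what these two calls do, and what happens to a word that was not seen during fitting, here is a plain-Python stand-in. It is a simplified sketch, not the Keras implementation: it only splits on whitespace, whereas the real Tokenizer also filters punctuation. The function names mirror the Keras methods, but the code and data are illustrative.

```python
from collections import Counter

def fit_on_texts(texts):
    """Build a word -> index map; more frequent words get smaller indices, starting at 1."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # sorted() is stable, so words with equal counts keep their first-seen order
    ranked = sorted(counts.items(), key=lambda kv: -kv[1])
    return {word: i + 1 for i, (word, _) in enumerate(ranked)}

def texts_to_sequences(word_index, texts):
    """Map each text to a list of indices, silently skipping unknown words."""
    return [[word_index[w] for w in text.lower().split() if w in word_index]
            for text in texts]

train_texts = ["Red shoes", "red leather bag"]  # toy data
test_texts = ["Blue shoes"]                     # "blue" never appears in train_texts

# Fitted on train only: "blue" is dropped from the test sequence.
index_train_only = fit_on_texts(train_texts)
seq_train_only = texts_to_sequences(index_train_only, test_texts)

# Fitted on train and test together: "blue" gets its own index.
index_both = fit_on_texts(train_texts + test_texts)
seq_both = texts_to_sequences(index_both, test_texts)

print(seq_train_only, seq_both)
```

With the vocabulary learned from train alone, the test title loses a word; fitting on both sets keeps it, which is the behavior the note above argues for.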

max_name_seq = np.max([np.max(train.seq_name.apply(lambda x:len(x))), np.max(test.seq_name.apply(lambda x:len(x)))])

max_seq_item_description = np.max([np.max(train.seq_item_description.apply(lambda x: len(x)))

, np.max(test.seq_item_description.apply(lambda x: len(x)))])

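Note that len(train.seq_name) cannot be used here: it returns the number of records in train (1482535), not the length of seq_name within each record. For record-by-record operations, use the form train.seq_name.apply(lambda ...); apply with a lambda is a convenient way to run the same operation on every row.

V. Setting up the Keras model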

#KERAS DATA DEFINITION

from keras.preprocessing.sequence import pad_sequences

def get_keras_data(dataset):

    X = {

        'name': pad_sequences(dataset.seq_name, maxlen=MAX_NAME_SEQ)

        ,'item_desc': pad_sequences(dataset.seq_item_description, maxlen=MAX_ITEM_DESC_SEQ)

        ,'brand_name': np.array(dataset.brand_name)

        ,'category_name': np.array(dataset.category_name)

        ,'item_condition': np.array(dataset.item_condition_id)

        ,'num_vars': np.array(dataset[["shipping"]])

    }

    return X

X_train = get_keras_data(dtrain)

X_valid = get_keras_data(dvalid)

X_test = get_keras_data(test)

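pad_sequences: Keras only accepts input sequences of equal length, so when the sequences vary in length they need to go through pad_sequences(). The function turns them into new sequences of one common length by padding (and truncating where needed). The other inputs need to be converted to np.ndarray.

keras.preprocessing.sequence.pad_sequences(sequences,

maxlen=None,

dtype='int32',

padding='pre',

truncating='pre',

value=0.)

sequences: a two-level nested list of floats or integers

Example: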

list_1 = [[2,3,4]]

keras.preprocessing.sequence.pad_sequences(list_1, maxlen=10)

array([[0, 0, 0, 0, 0, 0, 0, 2, 3, 4]], dtype=int32)
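
The default 'pre' behavior for both padding and truncating can be sketched in plain Python. The helper pad_pre below is hypothetical and only mimics those defaults on lists; it assumes maxlen is at least 1 and is not the Keras implementation.

```python
def pad_pre(sequences, maxlen, value=0):
    """Left-pad with `value`; for long sequences keep only the last `maxlen` items."""
    padded = []
    for seq in sequences:
        kept = list(seq)[-maxlen:]                             # truncating='pre': drop from the front
        padded.append([value] * (maxlen - len(kept)) + kept)   # padding='pre': pad at the front
    return padded

short = pad_pre([[2, 3, 4]], maxlen=10)         # shorter than maxlen: zeros are added on the left
long_ = pad_pre([[1, 2, 3, 4, 5, 6]], maxlen=4) # longer than maxlen: leading items are dropped

print(short)
print(long_)
```

With these defaults a long item description keeps its final words and loses its opening ones, which is worth keeping in mind when picking maxlen.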

VI. Keras modeling (Model class, RNN)

#KERAS MODEL DEFINITION

from keras.layers import Input, Dropout, Dense, BatchNormalization, Activation, concatenate, GRU, Embedding, Flatten

from keras.models import Model

from keras.callbacks import ModelCheckpoint, Callback, EarlyStopping

from keras import backend as K

def get_callbacks(filepath, patience=2):

    es = EarlyStopping('val_loss', patience=patience, mode="min")

    msave = ModelCheckpoint(filepath, save_best_only=True)

    return [es, msave]

def rmsle_cust(y_true, y_pred):

    first_log = K.log(K.clip(y_pred, K.epsilon(), None) + 1.)

    second_log = K.log(K.clip(y_true, K.epsilon(), None) + 1.)

    return K.sqrt(K.mean(K.square(first_log - second_log), axis=-1))

def get_model():

    #params

    dr_r = 0.1

    #Inputs

    name = Input(shape=[X_train["name"].shape[1]], name="name")

    item_desc = Input(shape=[X_train["item_desc"].shape[1]], name="item_desc")

    brand_name = Input(shape=[1], name="brand_name")

    category_name = Input(shape=[1], name="category_name")

    item_condition = Input(shape=[1], name="item_condition")

    num_vars = Input(shape=[X_train["num_vars"].shape[1]], name="num_vars")

    #Embeddings layers

    emb_name = Embedding(MAX_TEXT, 50)(name)

    emb_item_desc = Embedding(MAX_TEXT, 50)(item_desc)

    emb_brand_name = Embedding(MAX_BRAND, 10)(brand_name)

    emb_category_name = Embedding(MAX_CATEGORY, 10)(category_name)

    emb_item_condition = Embedding(MAX_CONDITION, 5)(item_condition)

    #rnn layer

    rnn_layer1 = GRU(16) (emb_item_desc)

    rnn_layer2 = GRU(8) (emb_name)

    #main layer

    main_l = concatenate([

        Flatten() (emb_brand_name)

        , Flatten() (emb_category_name)

        , Flatten() (emb_item_condition)

        , rnn_layer1

        , rnn_layer2

        , num_vars

    ])

    main_l = Dropout(dr_r) (Dense(128) (main_l))

    main_l = Dropout(dr_r) (Dense(64) (main_l))

    #output

    output = Dense(1, activation="linear") (main_l)

    #model

    model = Model([name, item_desc, brand_name

        , category_name, item_condition, num_vars], output)

    model.compile(loss="mse", optimizer="adam", metrics=["mae", rmsle_cust])

    return model

model = get_model()

model.summary()
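
The constants MAX_TEXT, MAX_BRAND, MAX_CATEGORY and MAX_CONDITION are used in get_model() but their definitions are not shown in these notes. The first argument of an Embedding layer is the vocabulary size, which must be larger than the largest integer index fed into it. The sketch below illustrates only that constraint on toy data; the helper vocab_size and all values are hypothetical, and this is not necessarily how the original constants were set.

```python
# Toy stand-ins for the integer-encoded columns (hypothetical values).
seq_name = [[1, 4], [2]]
seq_item_description = [[3, 5, 1], [6]]
brand_codes = [0, 2, 1]

def vocab_size(max_index):
    """Smallest Embedding input size that can hold the indices 0..max_index."""
    return max_index + 1

# Largest token index across both text columns; index 0 is left for padding.
max_token = max(i for seq in seq_name + seq_item_description for i in seq)

text_vocab = vocab_size(max_token)          # covers indices 0 through 6
brand_vocab = vocab_size(max(brand_codes))  # covers codes 0, 1 and 2

print(text_vocab, brand_vocab)
```

Choosing a size that is too small makes the layer receive an index it has no row for, so it is safer to compute these sizes from the encoded data (over train and test together) than to hard-code them.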

Training

#FITTING THE MODEL

BATCH_SIZE = 20000

epochs = 5

model = get_model()

model.fit(X_train, dtrain.target, epochs=epochs, batch_size=BATCH_SIZE, validation_data=(X_valid, dvalid.target), verbose=1)

Train on 1467709 samples, validate on 14826 samples

Epoch 1/5

1467709/1467709 [==============================] - 99s 67us/step - loss: 2.0515 - mean_absolute_error: 1.0567 - rmsle_cust: 0.3344 - val_loss: 0.3265 - val_mean_absolute_error: 0.4356 - val_rmsle_cust: 0.1105

Epoch 2/5

1467709/1467709 [==============================] - 97s 66us/step - loss: 0.3238 - mean_absolute_error: 0.4343 - rmsle_cust: 0.1100 - val_loss: 0.2671 - val_mean_absolute_error: 0.3909 - val_rmsle_cust: 0.0994

Epoch 3/5

1467709/1467709 [==============================] - 97s 66us/step - loss: 0.2849 - mean_absolute_error: 0.4062 - rmsle_cust: 0.1030 - val_loss: 0.2521 - val_mean_absolute_error: 0.3792 - val_rmsle_cust: 0.0965

Epoch 4/5

1467709/1467709 [==============================] - 98s 67us/step - loss: 0.2641 - mean_absolute_error: 0.3903 - rmsle_cust: 0.0991 - val_loss: 0.2434 - val_mean_absolute_error: 0.3720 - val_rmsle_cust: 0.0947

Epoch 5/5

1467709/1467709 [==============================] - 99s 67us/step - loss: 0.2488 - mean_absolute_error: 0.3783 - rmsle_cust: 0.0961 - val_loss: 0.2383 - val_mean_absolute_error: 0.3686 - val_rmsle_cust: 0.0939

Validation-set prediction

#EVALUATE THE MODEL ON DEV TEST: What is it doing?

val_preds = model.predict(X_valid)

val_preds = target_scaler.inverse_transform(val_preds)

val_preds = np.exp(val_preds)-1  # inverts np.log(price+1), so 1 is subtracted, not added

#mean_absolute_error, mean_squared_log_error

y_true = np.array(dvalid.price.values)

y_pred = val_preds[:,0]

v_rmsle = rmsle(y_true, y_pred)

print(" RMSLE error on dev test: "+str(v_rmsle))

Test-set prediction

#CREATE PREDICTIONS

preds = model.predict(X_test, batch_size=BATCH_SIZE)

preds = target_scaler.inverse_transform(preds)

preds = np.exp(preds)-1

submission = test[["test_id"]].copy()  # copy so that adding a column does not act on a slice of test

submission["price"] = preds

submission.to_csv("./myNNsubmission.csv", index=False)

submission.price.hist()

VII. Tricks

- all_data.drop(['train_id', 'test_id', 'price'], axis=1, inplace=True) drops several columns at once.

- dataset_na = dataset_na.drop(dataset_na[dataset_na == 0].index).sort_values(ascending=False) drops the selected rows and sorts what is left. Note the pattern: dataset.drop( dataset[dataset == 0].index )

- train = train.drop(index=(train.loc[(train.price.isnull())].index)) drops the selected rows, here those whose price is null, via index=(train.loc[(train.price.isnull())].index)