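House Price Prediction 2

References:

https://blog.csdn.net/u012063773/article/details/79349256

https://www.cnblogs.com/massquantity/p/8640991.html

https://zhuanlan.zhihu.com/p/39429689

A detailed walkthrough of the stacking process

In House Price Prediction 1 I dropped some outliers. Later, when splitting the data back into train and test, the split went wrong because I had dropped those rows without thinking about the consequences.

When handling outliers, either modify the values, or, if you delete rows, make sure the row's target label SalePrice is deleted along with it, and keep an eye on the dataset's index.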
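A minimal sketch of the second option, on a made-up toy frame (the values and the 4000 threshold are illustrative assumptions, not the data or rule used in this post). Features and label stay in one DataFrame so they are dropped together, and the index is reset so that later row-based splits still line up.

```python
import pandas as pd

# Toy training frame; values are made up for illustration.
train = pd.DataFrame({
    "GrLivArea": [1500, 4700, 1800, 5600, 2000],
    "SalePrice": [200000, 180000, 230000, 160000, 250000],
})

# Hypothetical outlier rule: very large living area.
outlier_mask = train["GrLivArea"] > 4000

# Dropping rows from the combined frame removes feature and label together;
# reset_index(drop=True) makes the index contiguous again (0..n-1).
train_clean = train.loc[~outlier_mask].reset_index(drop=True)

X = train_clean.drop(columns=["SalePrice"])
y = train_clean["SalePrice"]
```

Separating X and y only after the rows are removed keeps the two aligned without any extra bookkeeping.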

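Contents

Feature extraction

Numeric features

Time features

Categorical data

Model training

Splitting the dataset

Blended model 1

LASSO MODEL

ELASTIC NET

XGBOOST

Blending

Submission

Blended model 2

Model selection (untuned)

Tuning method

Lasso

Ridge

SVR

KernelRidge

ElasticNet

BayesianRidge

Ensembling

Stacking

Submission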

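Feature extraction

Numeric features

# Add a total-area feature
full_1['2ndFlrSF'] = full['2ndFlrSF']
full_1['TotalSF'] = full_1['TotalBsmtSF'] + full_1['1stFlrSF'] + full_1['2ndFlrSF']

# For quantitative variables with skewness above 0.15, transform them so they are closer to a normal distribution, which improves data quality
import numpy as np
from scipy.special import boxcox1p

# log1p seems to work better for SalePrice (why? perhaps because it is the prediction target?)
full_1['SalePrice'] = np.log1p(full_1['SalePrice'])

lam = 0.15
t = ['GrLivArea', 'GarageArea', 'TotalBsmtSF', '1stFlrSF', 'LotFrontage', '2ndFlrSF']
# The other continuous variables use boxcox1p
for feat in t:
    full_1[feat] = boxcox1p(full_1[feat], lam)

I did not fully understand why the original author chose log1p for SalePrice and boxcox1p for the others; it seems boxcox1p could be used for all of them.

Related post: "Using log1p"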
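One practical difference is how each transform is undone: log1p is inverted exactly by np.expm1, which is what the prediction code later in this post relies on. The sketch below writes the Box-Cox formula out by hand (the helper name boxcox1p_manual is hypothetical; it mirrors the formula but is not scipy's implementation) to show that the transform with a lambda near 0 approaches log1p. The prices are made up.

```python
import numpy as np

def boxcox1p_manual(x, lam):
    """Box-Cox transform of 1 + x: ((1 + x)**lam - 1) / lam, or log1p(x) when lam == 0."""
    x = np.asarray(x, dtype=float)
    if lam == 0:
        return np.log1p(x)
    return ((1.0 + x) ** lam - 1.0) / lam

prices = np.array([34900.0, 163000.0, 755000.0])  # made-up sale prices

# log1p / expm1 round trip: the inverse used to turn predictions back into prices.
log_prices = np.log1p(prices)
recovered = np.expm1(log_prices)

# With a tiny lambda the Box-Cox transform is numerically close to log1p.
near_log = boxcox1p_manual(prices, 1e-8)

# With lam = 0.15 large values are compressed less strongly than by log1p.
bc = boxcox1p_manual(prices, 0.15)
```

So using boxcox1p everywhere would work too, as long as the matching inverse is applied to the predictions instead of expm1.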

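Time features

# Add the year and month sold
full_1['YrSold'] = full['YrSold']
full_1['MoSold'] = full['MoSold']

# Whether the house has been remodeled
full_1['hasRemod'] = (full_1['YearBuilt'] != full_1['YearRemodAdd']) * 1

# Age of the house when sold
full_1['houseAge'] = full_1['YrSold'].astype(int) - full_1['YearBuilt'].astype(int)

# Years since remodeling when sold
full_1['RemodAge'] = full_1['YrSold'].astype(int) - full_1['YearRemodAdd'].astype(int)

Originally I used astype here to convert the year and month to str, but the XGBoost model used later builds CART trees and only accepts numeric input.

Related post: "Handling categorical features in XGBoost"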
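A quick way to catch this before training is to list the non-numeric columns. The sketch below uses a made-up frame (the values are illustrative); it shows that astype(str) produces a non-numeric column and that astype(int) makes it numeric again.

```python
import pandas as pd

df = pd.DataFrame({"YrSold": [2008, 2010], "MoSold": [2, 11], "YearBuilt": [2003, 1976]})

# Converting to str yields a non-numeric column, which a model
# expecting numeric input cannot consume directly.
df["MoSold"] = df["MoSold"].astype(str)
non_numeric = df.select_dtypes(exclude="number").columns.tolist()

# Convert back to integers so every column is numeric again.
df["MoSold"] = df["MoSold"].astype(int)
remaining = df.select_dtypes(exclude="number").columns.tolist()

# Derived numeric feature, computed the same way as houseAge.
df["houseAge"] = df["YrSold"] - df["YearBuilt"]
```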

Categorical data

Fields graded on a scale such as Ex, Gd and so on are mapped to integers.

def QualToInt(x):
    # Map quality / condition grades from several fields onto a shared 0-5 scale
    if x in ('Ex', 'GLQ', 'GdPrv'):
        r = 5
    elif x in ('Gd', 'ALQ', 'MnPrv'):
        r = 4
    elif x in ('TA', 'Av', 'BLQ', 'GdWo'):
        r = 3
    elif x in ('Fa', 'Mn', 'Rec', 'MnWw'):
        r = 2
    elif x in ('Po', 'No', 'LwQ', 'Unf'):
        r = 1
    else:
        r = 0
    return r

full_2 = full_1.copy()  # copy so that full_1 is not modified in place
qual_cols = ['BsmtCond', 'BsmtQual', 'BsmtExposure', 'BsmtFinType1', 'BsmtFinType2', 'GarageCond',
             'GarageQual', 'PoolQC', 'KitchenQual', 'Fence', 'FireplaceQu']
for col in qual_cols:
    full_2[col] = full_1[col].apply(QualToInt)

The remaining categorical fields are one-hot encoded with get_dummies.

# MSZoning: zoning classification
MSZoning = pd.get_dummies(full_3['MSZoning'], prefix='MSZoning')
MSZoning.head()

# Append the dummy variables produced by one-hot encoding
full_3 = pd.concat([full_3, MSZoning], axis=1)
# Drop the original column, which the dummies replace
full_3.drop('MSZoning', axis=1, inplace=True)
full_3.head()

value_counts shows that Utilities takes only two values, so it is mapped directly to 0/1.

full_3['Utilities'] = full_3['Utilities'].map({'AllPub': 1, 'NoSeWa': 0})

This finally yields 100+ features.
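The per-column pattern used for MSZoning (get_dummies, concat, drop) can also be done in one call, because pd.get_dummies accepts a columns argument and replaces the listed columns itself. A small sketch on made-up data (the frame and the choice of columns are illustrative assumptions):

```python
import pandas as pd

df = pd.DataFrame({
    "MSZoning": ["RL", "RM", "RL"],
    "Street": ["Pave", "Pave", "Grvl"],
    "LotArea": [8450, 9600, 11250],
})

# One-hot encode several categorical columns at once; the originals are
# dropped and the new columns are named <column>_<category>.
encoded = pd.get_dummies(df, columns=["MSZoning", "Street"])
```

Columns not listed, such as LotArea here, pass through unchanged.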

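Model training

Splitting the dataset

# The cleaned training data has 1460 rows
trainRow = 1460

'''
trainRow is known from before the datasets were merged: the original training set has 1460 records in total.
When taking the first 1460 rows from full_3 we subtract 1, because row labels start at 0 and .loc slicing includes the end label.
'''

# Original training set: features
train_X = full_3.loc[0:trainRow-1, :]
# Original training set: labels
train_y = full_3.loc[0:trainRow-1, 'SalePrice']
# Prediction set: features
pred_X = full_3.loc[trainRow:, :]

train_y.shape[0]

Drop SalePrice from the X datasets after the split:

# The split feature sets still contain SalePrice, so remove it
train_X = train_X.drop(['SalePrice'], axis=1)
pred_X = pred_X.drop(['SalePrice'], axis=1)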
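This label-based split is where dropped rows can bite, as mentioned at the top of the post. The sketch below, on a made-up frame, shows how a .loc slice silently returns too few rows once a row has been dropped without resetting the index, while a position-based .iloc slice does not depend on the labels. The names full, n_train and the sizes are illustrative assumptions.

```python
import pandas as pd

# Toy stand-in for the merged frame: 5 "train" rows followed by 2 "test" rows.
full = pd.DataFrame({"x": range(7)})
n_train = 5

# Drop one training row without resetting the index (label 2 disappears).
full = full.drop(index=2)
n_train -= 1  # one fewer training row now

# Label-based slice: selects labels 0..3, so only 3 rows (0, 1, 3)
# come back instead of the 4 remaining training rows.
by_label = full.loc[0:n_train - 1]

# Position-based slice: takes the first n_train rows whatever their labels are.
by_position = full.iloc[:n_train]
test_part = full.iloc[n_train:]
```

Either reset the index after dropping rows or split by position; both avoid the mismatch.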

Blended model 1

Define the cross-validation scoring function:

import numpy as np
from sklearn.linear_model import Ridge
from sklearn.linear_model import Lasso
from sklearn.linear_model import ElasticNet
from sklearn.ensemble import GradientBoostingRegressor
import lightgbm as lgb
from sklearn.svm import SVR
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import RobustScaler
from sklearn.model_selection import KFold, GridSearchCV, cross_val_score
from xgboost.sklearn import XGBRegressor
from sklearn.kernel_ridge import KernelRidge
from sklearn.linear_model import BayesianRidge, LinearRegression
from sklearn.ensemble import RandomForestRegressor
from sklearn.svm import LinearSVR

def rmse_cv(model):
    # 5-fold cross-validated RMSE on the log-transformed training target
    rmse = np.sqrt(-cross_val_score(model, train_X, train_y, scoring="neg_mean_squared_error", cv=5))
    return rmse

When choosing models, reusing earlier code, or writing the validation function, check carefully whether you are using a regression model or a classification model.

(Though probably nobody else would overlook something this basic the way I did.)
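For reference, here is a self-contained variant of the scoring function that takes X and y as arguments, run on synthetic data. The name rmse_cv_xy, the data and the Ridge settings are illustrative assumptions. scikit-learn reports the mean squared error negated (so that higher is always better), which is why the sign is flipped before taking the square root.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score

def rmse_cv_xy(model, X, y, cv=5):
    """Per-fold RMSE from sklearn's negated MSE scores."""
    neg_mse = cross_val_score(model, X, y, scoring="neg_mean_squared_error", cv=cv)
    return np.sqrt(-neg_mse)

# Synthetic regression data (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = X @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + rng.normal(scale=0.1, size=200)

scores = rmse_cv_xy(Ridge(alpha=1.0), X, y)
print("Ridge RMSE: {:.4f} ({:.4f})".format(scores.mean(), scores.std()))
```

Passing a classifier here would fail or produce meaningless scores, which is the mix-up the note above warns about.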

LASSO MODEL

clf1 = Lasso()  # default alpha=1.0
clf1.fit(train_X, train_y)
# Predictions are on the log1p scale; expm1 converts them back to prices
lasso_pred = np.expm1(clf1.predict(pred_X))
score1 = rmse_cv(clf1)
print("\nLasso score: {:.4f} ({:.4f})\n".format(score1.mean(), score1.std()))

Lasso score: 0.2102 (0.0357)

ELASTIC NET

clf2 = ElasticNet(alpha=0.0005, l1_ratio=0.9)
clf2.fit(train_X, train_y)
elas_pred = np.expm1(clf2.predict(pred_X))
score2 = rmse_cv(clf2)
print("\nElasticNet score: {:.4f} ({:.4f})\n".format(score2.mean(), score2.std()))

ElasticNet score: 0.1315 (0.0159)

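XGBOOST

# n_estimators: number of boosting rounds (trees), default 100
# General parameters: depend on the booster, usually a tree or linear model
# Booster parameters: depend on the booster chosen
# learning_rate: in [0, 1], default 0.3
# gamma: minimum loss reduction required to split a leaf further, default 0; larger values make overfitting less likely
# max_depth: maximum depth of each tree, default 6; larger values make overfitting more likely
# min_child_weight: minimum sum of instance weights in a leaf, default 1; larger values make overfitting less likely
# subsample: row sampling ratio, in (0, 1], default 1
# colsample_bytree: column sampling ratio, in (0, 1], default 1; features are sampled once per tree
# lambda: L2 regularization term, >= 0, default 1; larger values make overfitting less likely
# alpha: L1 regularization term, >= 0, default 0; larger values make overfitting less likely
# Learning task parameters: depend on the learning task and its objective
# Lower RMSE is better
clf3 = XGBRegressor(max_depth=10,
                    min_child_weight=2,
                    subsample=0.9,
                    colsample_bytree=0.6,
                    n_estimators=100,
                    gamma=0.05)
clf3.fit(train_X, train_y)
xgb_pred = np.expm1(clf3.predict(pred_X))
score3 = rmse_cv(clf3)
print("\nxgb score: {:.4f} ({:.4f})\n".format(score3.mean(), score3.std()))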

Blending

# Weighted average of the three CV scores, used as a rough estimate for the blend
final_score = 0.05*score1.mean() + 0.8*score2.mean() + 0.15*score3.mean()
final_score

0.13591452419859584

Submission

final_result = 0.05*lasso_pred + 0.8*elas_pred + 0.15*xgb_pred
solution = pd.DataFrame({"id": test.index+1461, "SalePrice": final_result}, columns=['id', 'SalePrice'])
solution.to_csv("result1.csv", index=False)
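Note that final_score averages the three models' CV scores, which is not the same thing as scoring the blended prediction. For non-negative weights that sum to 1, the RMSE of the blend can never exceed the weighted average of the individual RMSEs (triangle inequality), so final_score is a pessimistic proxy. A sketch on made-up data, with three hypothetical models whose errors are independent:

```python
import numpy as np

def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

rng = np.random.default_rng(42)
y_true = rng.normal(loc=12.0, scale=0.4, size=500)  # made-up log-prices

# Three hypothetical models with independent errors of different sizes.
preds = np.stack([
    y_true + rng.normal(scale=0.20, size=500),
    y_true + rng.normal(scale=0.13, size=500),
    y_true + rng.normal(scale=0.14, size=500),
])
weights = np.array([0.05, 0.80, 0.15])  # same weights as the blend above

# Weighted average of the individual scores (what final_score computes).
avg_of_scores = float(weights @ np.array([rmse(y_true, p) for p in preds]))

# Score of the blended prediction itself.
blend_pred = weights @ preds
score_of_blend = rmse(y_true, blend_pred)

print(avg_of_scores, score_of_blend)
```

On real data the fair comparison would score the blended out-of-fold predictions, since the models' errors are usually correlated and the gain is smaller than in this toy setup.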

Blended model 2

Model selection (untuned)

models = [LinearRegression(), Ridge(), Lasso(alpha=0.01, max_iter=10000), RandomForestRegressor(), GradientBoostingRegressor(), SVR(), LinearSVR(),
          ElasticNet(alpha=0.001, max_iter=10000), BayesianRidge(), KernelRidge(alpha=0.6, kernel='polynomial', degree=2, coef0=2.5),
          XGBRegressor()]

# One name per model, in the same order (zip pairs them by position)
names = ["LR", "Ridge", "Lasso", "RF", "GBR", "SVR", "LinSVR", "Ela", "Bay", "Ker", "Xgb"]
for name, model in zip(names, models):
    score = rmse_cv(model)
    print("{}: {:.6f}, {:.4f}".format(name, score.mean(), score.std()))

Tuning method

class grid():
    def __init__(self, model):
        self.model = model

    def grid_get(self, X, y, param_grid):
        grid_search = GridSearchCV(self.model, param_grid, cv=5, scoring="neg_mean_squared_error")
        grid_search.fit(X, y)
        # Best parameters and the corresponding RMSE
        print(grid_search.best_params_, np.sqrt(-grid_search.best_score_))
        # Convert each candidate's mean negative MSE to RMSE before printing
        grid_search.cv_results_['mean_test_score'] = np.sqrt(-grid_search.cv_results_['mean_test_score'])
        print(pd.DataFrame(grid_search.cv_results_)[['params', 'mean_test_score', 'std_test_score']])

Lasso

Related post: "Lasso regression in sklearn and how to tune it"

# alpha: regularization coefficient, greater than 0
# max_iter: int, maximum number of iterations
grid(Lasso()).grid_get(train_X, train_y, {'alpha': [0.0004, 0.0005, 0.0007, 0.0006, 0.0009, 0.0008], 'max_iter': [10000]})
# 'alpha': 0.0005, 'max_iter': 10000
# 0.132566 0.004286

Ridge

# alpha: regularization coefficient, greater than 0
grid(Ridge()).grid_get(train_X, train_y, {'alpha': range(5, 100, 5)})
# 'alpha': 5
# 0.131947 0.004043

SVR

Related post: "An introduction to the parameters of sklearn.svm.SVR"

grid(SVR()).grid_get(train_X, train_y, {'gamma': [0.0001, 0.0002, 0.0003, 0.0004, 0.0005]})
# 'gamma': 0.0001
# 0.191549 0.003529

# gamma: float, optional (default 'auto' in older scikit-learn releases, 'scale' since 0.22)
# C: penalty coefficient
# epsilon: float, optional (default 0.1)
grid(SVR()).grid_get(train_X, train_y, {'gamma': [0.0002, 0.0003, 0.0004, 0.0005], 'C': range(10, 20, 2), 'epsilon': np.arange(0.1, 1.5, 0.2)})
# 'gamma': 0.0002, 'C': 12, 'epsilon': 0.1
# 0.204583 0.004987

grid(SVR()).grid_get(train_X, train_y, {'gamma': [0.0002], 'C': [12], 'epsilon': [0.005, 0.009, 0.01, 0.013, 0.015], 'kernel': ['rbf']})
# 'gamma': 0.0002, 'C': 12, 'epsilon': 0.01, 'kernel': 'rbf'
# 0.204224 0.005218

KernelRidge

sklearn浅析(六)——Kernel Ridge Regression

这里使用kernel不同导致分数出了问题

#alpha float或者list(当y是多目标矩阵时

#degree poly核中的参数d,使用其他核时无效

#coef0 poly和sigmoid核中的0参数的替代值,使用其他核时无效

grid(KernelRidge()).grid_get(train_X,train_y,{'alpha':np.arange(0.1,1,0.1),'degree':range(1,15,2),'coef0':np.arange(0.1,1.5,0.2)})

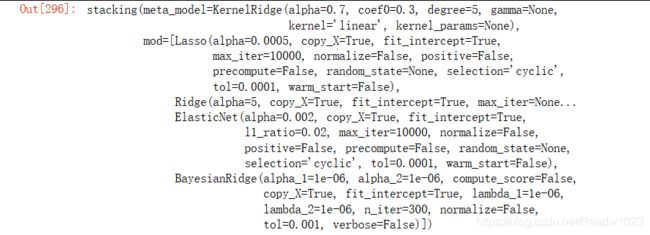

#'alpha': 0.7 degree:5 coef0:0.3

#0.132994 0.003998

ElasticNet

Related post: "Elastic Net"

# alpha: float, constant that multiplies the penalty terms
# l1_ratio: float between 0 and 1 that sets the mix of the L1 and L2 penalties (their convex combination)
# max_iter: maximum number of iterations
grid(ElasticNet()).grid_get(train_X, train_y, {'alpha': np.arange(0.001, 0.01, 0.001), 'l1_ratio': np.arange(0.01, 0.1, 0.01), 'max_iter': [10000]})
# 'alpha': 0.002, 'l1_ratio': 0.02, 'max_iter': 10000
# 0.131996 0.003997

BayesianRidge

Ensembling

Next, the original author uses stacking to combine the models in two layers.

But my submitted result was about the same as with the first blending approach.

Possibly my PCA dimensionality reduction was not done well, or the model overfits, or my feature engineering is weak (the original author ended up with 400+ features).

I have been busy the last few weeks, so I am pasting the code here first and will study it later.

from sklearn.base import BaseEstimator, TransformerMixin, RegressorMixin, clone
from sklearn.model_selection import KFold, cross_val_score, train_test_split

# Weighted average of the base models' predictions
class AverageWeight(BaseEstimator, RegressorMixin):
    def __init__(self, mod, weight):
        self.mod = mod
        self.weight = weight

    def fit(self, X, y):
        self.models_ = [clone(x) for x in self.mod]
        for model in self.models_:
            model.fit(X, y)
        return self

    def predict(self, X):
        w = list()
        pred = np.array([model.predict(X) for model in self.models_])
        # for every data point, single model prediction times weight, then add them together
        for data in range(pred.shape[1]):
            single = [pred[model, data]*weight for model, weight in zip(range(pred.shape[0]), self.weight)]
            w.append(np.sum(single))
        return w

lasso = Lasso(alpha=0.0005, max_iter=10000)
ridge = Ridge(alpha=5)
svr = SVR(gamma=0.0002, kernel='rbf', C=12, epsilon=0.01)
ker = KernelRidge(alpha=0.7, degree=5, coef0=0.3)
ela = ElasticNet(alpha=0.002, l1_ratio=0.02, max_iter=10000)
bay = BayesianRidge()

# assign weights based on their gridsearch score
w1 = 0.15
w2 = 0.2
w3 = 0.05
w4 = 0.2
w5 = 0.2
w6 = 0.2

weight_avg = AverageWeight(mod=[lasso, ridge, svr, ker, ela, bay], weight=[w1, w2, w3, w4, w5, w6])

score = rmse_cv(weight_avg)
print(score.mean())
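The per-point loop in AverageWeight.predict can be written as a single vectorized call. The sketch below compares the loop with np.average on made-up predictions (the numbers and weights are illustrative). np.average divides by the sum of the weights, so the two forms agree only when the weights sum to 1, as they do for w1..w6.

```python
import numpy as np

# Rows are models, columns are data points (made-up predictions).
pred = np.array([
    [12.0, 11.5, 12.4],
    [12.2, 11.7, 12.1],
    [11.8, 11.6, 12.3],
])
weights = [0.5, 0.3, 0.2]

# Loop form, mirroring AverageWeight.predict.
looped = []
for data in range(pred.shape[1]):
    single = [pred[m, data] * w for m, w in zip(range(pred.shape[0]), weights)]
    looped.append(np.sum(single))

# Vectorized form: weighted average over the model axis.
vectorized = np.average(pred, axis=0, weights=weights)
```

The vectorized form also returns a NumPy array rather than a Python list, which is what most scikit-learn utilities expect from predict.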

Stacking

class stacking(BaseEstimator, RegressorMixin, TransformerMixin):
    def __init__(self, mod, meta_model):
        self.mod = mod
        self.meta_model = meta_model
        self.kf = KFold(n_splits=5, random_state=42, shuffle=True)

    def fit(self, X, y):
        self.saved_model = [list() for i in self.mod]
        # Out-of-fold predictions of each base model become the meta-model's training features
        oof_train = np.zeros((X.shape[0], len(self.mod)))

        for i, model in enumerate(self.mod):
            for train_index, val_index in self.kf.split(X, y):
                renew_model = clone(model)
                renew_model.fit(X[train_index], y[train_index])
                self.saved_model[i].append(renew_model)
                oof_train[val_index, i] = renew_model.predict(X[val_index])

        self.meta_model.fit(oof_train, y)
        return self

    def predict(self, X):
        # For each base model, average the predictions of its fold models
        # (a list, not a generator, is passed to np.column_stack; recent NumPy rejects generators)
        whole_test = np.column_stack([np.column_stack([model.predict(X) for model in single_model]).mean(axis=1)
                                      for single_model in self.saved_model])
        return self.meta_model.predict(whole_test)

    def get_oof(self, X, y, test_X):
        oof = np.zeros((X.shape[0], len(self.mod)))
        test_single = np.zeros((test_X.shape[0], self.kf.get_n_splits()))
        test_mean = np.zeros((test_X.shape[0], len(self.mod)))
        for i, model in enumerate(self.mod):
            for j, (train_index, val_index) in enumerate(self.kf.split(X, y)):
                clone_model = clone(model)
                clone_model.fit(X[train_index], y[train_index])
                oof[val_index, i] = clone_model.predict(X[val_index])
                test_single[:, j] = clone_model.predict(test_X)
            test_mean[:, i] = test_single.mean(axis=1)
        return oof, test_mean

# SimpleImputer replaces sklearn.preprocessing.Imputer, which was removed in scikit-learn 0.22
from sklearn.impute import SimpleImputer

# The imputer has to run first or stacking fails. A likely reason: it returns NumPy arrays,
# and stacking indexes rows with X[train_index], which does not work on a pandas DataFrame.
a = SimpleImputer().fit_transform(train_X)
b = SimpleImputer().fit_transform(train_y.values.reshape(-1, 1)).ravel()

# rmse_cv2 is not defined in this post; assumed here to be rmse_cv with X and y passed explicitly
def rmse_cv2(model, X, y):
    return np.sqrt(-cross_val_score(model, X, y, scoring="neg_mean_squared_error", cv=5))

stack_model = stacking(mod=[lasso, ridge, svr, ker, ela, bay], meta_model=ker)

score = rmse_cv2(stack_model, a, b)
print(score.mean())

# Next we extract the features generated from stacking
# then combine them with original features.
X_train_stack, X_test_stack = stack_model.get_oof(a, b, pred_X)

X_train_stack.shape, a.shape

X_train_add = np.hstack((a, X_train_stack))
X_test_add = np.hstack((pred_X, X_test_stack))
X_train_add.shape, X_test_add.shape

score = rmse_cv2(stack_model, X_train_add, b)
print(score.mean())
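For comparison, scikit-learn (0.22 and later) ships a built-in StackingRegressor that follows the same two-layer idea. The sketch below runs it on synthetic data; the data, the choice of two base models and the final estimator are illustrative assumptions, not the setup used in this post. One difference from the hand-written class: as far as the scikit-learn documentation describes it, the built-in version trains the final estimator on cross-validated predictions but refits each base model on the full training set for prediction, instead of averaging the fold models.

```python
import numpy as np
from sklearn.ensemble import StackingRegressor
from sklearn.kernel_ridge import KernelRidge
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import cross_val_score

# Synthetic regression data (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(150, 6))
y = X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 2] ** 2 + rng.normal(scale=0.1, size=150)

# Base models are fitted on cross-validation folds; their out-of-fold
# predictions become the training features of the final estimator.
stack = StackingRegressor(
    estimators=[
        ("lasso", Lasso(alpha=0.0005, max_iter=10000)),
        ("ridge", Ridge(alpha=5)),
    ],
    final_estimator=KernelRidge(alpha=0.7),
    cv=5,
)

neg_mse = cross_val_score(stack, X, y, scoring="neg_mean_squared_error", cv=5)
rmse = np.sqrt(-neg_mse)

stack.fit(X, y)
pred = stack.predict(X[:3])
```

It accepts pandas DataFrames directly, so the conversion to NumPy arrays needed by the hand-written class is not required.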

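Submission

stack_model = stacking(mod=[lasso, ridge, svr, ker, ela, bay], meta_model=ker)
stack_model.fit(a, b)

# SalePrice was transformed with log1p, so expm1 is the matching inverse
pred = np.expm1(stack_model.predict(pred_X))

result = pd.DataFrame({'Id': test.Id, 'SalePrice': pred})
result.to_csv("result3.csv", index=False)

Next steps:

Revisit PCA dimensionality reduction and stacking when I have time

Practice problems to brush up on SQL

Start the technical preparation for my graduation project

When I have even more free time, learn Hive/wind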