Binder Source Code Analysis

1 Binder

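Binder is a mechanism for inter-process communication (that is, cross-process calls). Using BluetoothService as an example, this article analyzes how the Bluetooth service is registered and obtained.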

ServiceManager: manages all services, chiefly their registration and lookup. It runs in a process of its own and is started by init.

BluetoothService: like other system services, it is started by SystemServer and runs in the process that hosts framework-res.apk (the system_server process).

The code itself is not difficult, but keep the following points in mind:

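1. A service is registered so that other processes can obtain and use it. Registering a service is like setting up a locked room: anyone who wants to use the room needs the matching key. In the example above:

when the Bluetooth service is registered, the key is the String constant BluetoothAdapter.BLUETOOTH_MANAGER_SERVICE.

2. To obtain the service, a client simply presents the same key; in the end this calls ServiceManager's getService method.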
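The "room and key" idea boils down to a name-to-object map. The following is a minimal, hypothetical C++ model (ServiceRegistry, Service and BluetoothLikeService are invented names); it leaves out IPC, permission checks and the real servicemanager implementation, and only shows that registration and lookup must use the same string key.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for a service object; real services are Binder objects.
struct Service {
    virtual ~Service() = default;
    virtual std::string describe() const = 0;
};

// Hypothetical name-keyed registry: the string name is the "key",
// the registered object is the "room". No IPC, no permission checks.
class ServiceRegistry {
public:
    // Registers (or replaces) a service under the given name.
    void addService(const std::string& name, std::shared_ptr<Service> service) {
        mServices[name] = std::move(service);
    }

    // Returns the service registered under the key, or nullptr if there is none.
    std::shared_ptr<Service> getService(const std::string& name) const {
        auto it = mServices.find(name);
        return it == mServices.end() ? nullptr : it->second;
    }

private:
    std::map<std::string, std::shared_ptr<Service>> mServices;
};

// Hypothetical example service used only for illustration.
struct BluetoothLikeService : Service {
    std::string describe() const override { return "bluetooth"; }
};
```

A lookup with any other string finds nothing, which is the analogue of arriving without the right key.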

2 Java/JNI Analysis

2.1 JNI Initialization

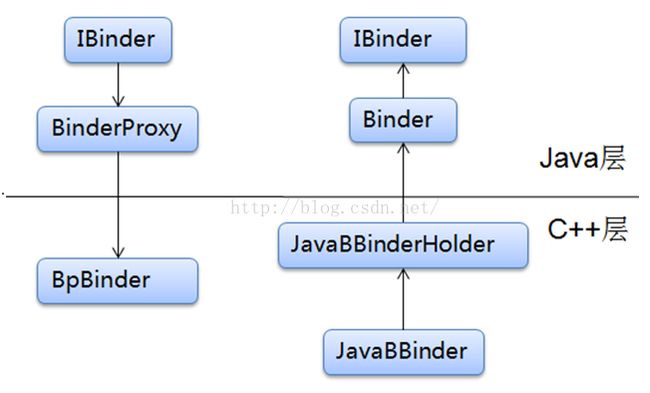

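As the figure shows, the three Java-layer classes all correspond to a single C/C++ file, android_util_Binder.cpp. BinderProxy is declared in Binder.java alongside Binder, but as a separate package-private class rather than an inner class, which is why its JNI path is android/os/BinderProxy.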

The registration steps are as follows:

```cpp
int register_android_os_Binder(JNIEnv* env)
{
    if (int_register_android_os_Binder(env) < 0)
        return -1;
    if (int_register_android_os_BinderInternal(env) < 0)
        return -1;
    if (int_register_android_os_BinderProxy(env) < 0)
        return -1;

    ···

    return 0;
}
```

These calls initialize the Binder, BinderInternal and BinderProxy classes respectively. Let's take BinderProxy as the example and walk through the details.

```cpp
const char* const kBinderProxyPathName = "android/os/BinderProxy";

static int int_register_android_os_BinderProxy(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, "java/lang/Error");
    gErrorOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

    clazz = FindClassOrDie(env, kBinderProxyPathName); // the Java BinderProxy class
    gBinderProxyOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gBinderProxyOffsets.mConstructor = GetMethodIDOrDie(env, clazz, "
    gBinderProxyOffsets.mSendDeathNotice = GetStaticMethodIDOrDie(env, clazz,
            "sendDeathNotice", "(Landroid/os/IBinder$DeathRecipient;)V");
    gBinderProxyOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");
    gBinderProxyOffsets.mSelf = GetFieldIDOrDie(env, clazz, "mSelf",
            "Ljava/lang/ref/WeakReference;");
    gBinderProxyOffsets.mOrgue = GetFieldIDOrDie(env, clazz, "mOrgue", "J");

    clazz = FindClassOrDie(env, "java/lang/Class");
    gClassOffsets.mGetName = GetMethodIDOrDie(env, clazz, "getName",
            "()Ljava/lang/String;");

    return RegisterMethodsOrDie(
            env, kBinderProxyPathName,
            gBinderProxyMethods, NELEM(gBinderProxyMethods));
}
```

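It does two main things:

1. Like almost every JNI setup, it registers the native methods with RegisterMethodsOrDie so that Java can call into C/C++.

2. It initializes the gBinderProxyOffsets struct.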

```cpp
static struct binderproxy_offsets_t
{
    // Class state.
    jclass mClass;
    jmethodID mConstructor;
    jmethodID mSendDeathNotice;

    // Object state.
    jfieldID mObject;
    jfieldID mSelf;
    jfieldID mOrgue;

} gBinderProxyOffsets;
```

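gBinderProxyOffsets has two important members, mClass and mObject.

gBinderProxyOffsets.mClass refers to the Java-layer BinderProxy class, and gBinderProxyOffsets.mObject is the field ID of BinderProxy's long field mObject (signature "J"), which will hold a native pointer.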

2.2 Mapping ServiceManagerProxy

ServiceManagerProxy.java has to be associated with BinderProxy.java, and BinderProxy.java in turn with the C++ class BpBinder (BpBinder.cpp); only then can cross-process communication take place.

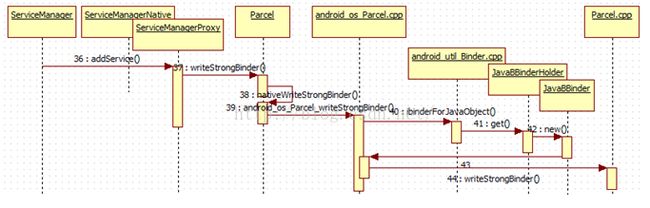

Flowchart

```java
public static void addService(String name, IBinder service) {
    try {
        getIServiceManager().addService(name, service, false);
    } catch (RemoteException e) {
        Log.e(TAG, "error in addService", e);
    }
}
```

First, let's see what getIServiceManager returns:

```java
private static IServiceManager sServiceManager;

private static IServiceManager getIServiceManager() {
    if (sServiceManager != null) {
        return sServiceManager;
    }

    // Find the service manager
    sServiceManager = ServiceManagerNative.asInterface(BinderInternal.getContextObject());
    return sServiceManager;
}
```

There are two crucial functions in here; skip them at your peril.

```java
static public IServiceManager asInterface(IBinder obj)
{
    if (obj == null) {
        return null;
    }
    IServiceManager in = (IServiceManager) obj.queryLocalInterface(descriptor);
    if (in != null) {
        return in;
    }
    return new ServiceManagerProxy(obj);
}
```

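So the IServiceManager object is really a ServiceManagerProxy: BinderInternal.getContextObject() returns a BinderProxy, whose queryLocalInterface() returns null, so asInterface() wraps it in a new ServiceManagerProxy. Calling IServiceManager methods therefore means calling ServiceManagerProxy's methods. Next, look at getContextObject(): it is a native method implemented directly by android_os_BinderInternal_getContextObject.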
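The decision made by asInterface() can be modelled without any Binder machinery. In this hypothetical sketch, IBinderLike, LocalServiceManager, RemoteBinderProxy and ServiceManagerProxyLike are invented stand-ins: a local object answers queryLocalInterface() with itself, while a remote proxy answers null (as BinderProxy does), so asInterface() falls back to wrapping it in a proxy.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical interfaces shaped like IInterface/IBinder; not the real libbinder API.
struct IServiceManagerLike {
    virtual ~IServiceManagerLike() = default;
    virtual std::string kind() const = 0;
};

struct IBinderLike {
    virtual ~IBinderLike() = default;
    // Local objects return themselves as the interface; proxies return nullptr.
    virtual IServiceManagerLike* queryLocalInterface(const std::string& descriptor) = 0;
};

// An object living in the caller's own process.
struct LocalServiceManager : IBinderLike, IServiceManagerLike {
    IServiceManagerLike* queryLocalInterface(const std::string& d) override {
        return d == "android.os.IServiceManager" ? this : nullptr;
    }
    std::string kind() const override { return "local"; }
};

// A reference to an object in another process: never has a local interface.
struct RemoteBinderProxy : IBinderLike {
    IServiceManagerLike* queryLocalInterface(const std::string&) override { return nullptr; }
};

// Hypothetical proxy that would marshal each call into mRemote->transact().
struct ServiceManagerProxyLike : IServiceManagerLike {
    explicit ServiceManagerProxyLike(std::shared_ptr<IBinderLike> remote)
        : mRemote(std::move(remote)) {}
    std::string kind() const override { return "proxy"; }
    std::shared_ptr<IBinderLike> mRemote;
};

// Same decision as asInterface(): reuse the local object if there is one,
// otherwise wrap the binder in a proxy.
std::shared_ptr<IServiceManagerLike> asInterface(const std::shared_ptr<IBinderLike>& obj) {
    if (!obj) return nullptr;
    if (IServiceManagerLike* in = obj->queryLocalInterface("android.os.IServiceManager")) {
        // Aliasing constructor: shares ownership with obj but points at the interface.
        return std::shared_ptr<IServiceManagerLike>(obj, in);
    }
    return std::make_shared<ServiceManagerProxyLike>(obj);
}
```

Because ServiceManager always lives in another process, the proxy branch is the one taken in the flow described here.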

```cpp
static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)
{
    sp
    return javaObjectForIBinder(env, b); // constructs and returns a BinderProxy object
}
```

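Once again the analysis splits into two paths: obtaining the native binder object (getContextObject(), which ends up in getStrongProxyForHandle(0)), and wrapping it in a Java object (javaObjectForIBinder()).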

```cpp
sp
{
    return getStrongProxyForHandle(0);
}

sp
{
    mLock.lock();
    sp
        mContexts.indexOfKey(name) >= 0 ? mContexts.valueFor(name) : NULL);
    mLock.unlock();

    //printf("Getting context object %s for %p\n", String8(name).string(), caller.get());

    if (object != NULL) return object;

    // Don't attempt to retrieve contexts if we manage them
    if (mManagesContexts) {
        ALOGE("getContextObject(%s) failed, but we manage the contexts!\n",
            String8(name).string());
        return NULL;
    }

    IPCThreadState* ipc = IPCThreadState::self();
    {
        Parcel data, reply;
        // no interface token on this magic transaction
        data.writeString16(name);
        data.writeStrongBinder(caller);
        status_t result = ipc->transact(0 /*magic*/, 0, data, &reply, 0);
        if (result == NO_ERROR) {
            object = reply.readStrongBinder();
        }
    }

    ipc->flushCommands();

    if (object != NULL) setContextObject(object, name);
    return object;
}
```

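In Android, the proxy handle for the special Service Manager Service is fixed in advance at 0, so new BpBinder(0) directly yields a valid BpBinder whose peer is the "Service Manager Service entity" (at least, that is a good enough mental model for now). What handle values do the proxies for other "service entities" have, then, and how does a client obtain them? We certainly can't just guess a handle. As described earlier, to get the BpBinder for a given service we rely on the Service Manager Service to look up a valid Binder handle, and then create a valid BpBinder from it.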

```cpp
sp
{
    sp
    AutoMutex _l(mLock);
    handle_entry* e = lookupHandleLocked(handle);

    if (e != NULL) {
        IBinder* b = e->binder;
        if (b == NULL || !e->refs->attemptIncWeak(this)) {
            ···
            b = new BpBinder(handle);
            e->binder = b;
            if (b) e->refs = b->getWeakRefs();
            result = b;
        } else {
            result.force_set(b);
            e->refs->decWeak(this);
        }
    }

    return result;
}
```
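The lookup-or-create logic of getStrongProxyForHandle() can be sketched with standard C++ smart pointers. This is a simplified model under stated assumptions: HandleTable and ProxyLike are hypothetical names, std::weak_ptr::lock() stands in for the attemptIncWeak()/force_set() handling on RefBase, and the vector plays the role of mHandleToObject. Handle 0 is just another slot here, mirroring the fact that the ServiceManager proxy needs no prior lookup.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical stand-in for BpBinder: a proxy that only remembers its handle.
struct ProxyLike {
    explicit ProxyLike(int32_t h) : handle(h) {}
    int32_t handle;
};

// Hypothetical per-process handle table in the spirit of mHandleToObject.
// Entries are weak, so the table never keeps a proxy alive by itself.
class HandleTable {
public:
    std::shared_ptr<ProxyLike> getStrongProxyForHandle(int32_t handle) {
        std::lock_guard<std::mutex> lock(mLock);
        if (handle < 0) return nullptr;
        const size_t index = static_cast<size_t>(handle);
        if (index >= mEntries.size())
            mEntries.resize(index + 1);                 // grow on demand, like lookupHandleLocked()
        std::weak_ptr<ProxyLike>& entry = mEntries[index];
        if (auto existing = entry.lock()) return existing;  // reuse a live proxy
        auto created = std::make_shared<ProxyLike>(handle); // otherwise build a new one
        entry = created;
        return created;
    }

private:
    std::mutex mLock;
    std::vector<std::weak_ptr<ProxyLike>> mEntries;
};
```

Once every user drops a proxy, the next lookup for the same handle builds a fresh one, which is the behaviour the attemptIncWeak() check guards in the original.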

```cpp
jobject javaObjectForIBinder(JNIEnv* env, const sp
{
    ···

    object = env->NewObject(gBinderProxyOffsets.mClass, gBinderProxyOffsets.mConstructor);
    if (object != NULL) {
        env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());
        val->incStrong((void*)javaObjectForIBinder);
        ···
    }

    return object;
}
```

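1. Finally, the moment we've been waiting for: gBinderProxyOffsets.mClass refers to the Java BinderProxy class, so a BinderProxy object is instantiated and returned.

2. env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());

This line stores a pointer to the BpBinder constructed above in the new BinderProxy object's mObject field (gBinderProxyOffsets.mObject is the field ID used to locate that field).

3. In the ServiceManagerProxy constructor, mRemote is the BinderProxy object returned here.

In this way ServiceManagerProxy, BinderProxy and BpBinder are linked together.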
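The link between a Java BinderProxy and its native BpBinder is nothing more than a pointer stored in a 64-bit integer field. The sketch below models only that round trip; JavaBinderProxyLike, NativeProxy, attachNative() and nativeFrom() are hypothetical names standing in for the Java object, BpBinder, SetLongField() and the GetLongField() cast used later in android_os_BinderProxy_transact. It omits the incStrong() call that keeps the real native object alive.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical native proxy (stands in for BpBinder).
struct NativeProxy {
    int32_t handle;
};

// Hypothetical stand-in for a Java BinderProxy instance: all it keeps of the
// native side is a 64-bit integer field (the "J"/jlong field mObject).
struct JavaBinderProxyLike {
    int64_t mObject = 0;
};

// Roughly what SetLongField(object, mObject, (jlong)val.get()) amounts to.
void attachNative(JavaBinderProxyLike& javaObj, NativeProxy* native) {
    javaObj.mObject = static_cast<int64_t>(reinterpret_cast<intptr_t>(native));
}

// Roughly what GetLongField(obj, mObject) followed by a pointer cast does.
NativeProxy* nativeFrom(const JavaBinderProxyLike& javaObj) {
    return reinterpret_cast<NativeProxy*>(static_cast<intptr_t>(javaObj.mObject));
}
```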

2.3 transact Analysis

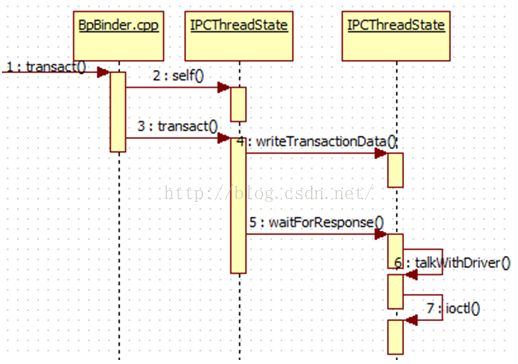

After all this groundwork, addService is finally called (steps 71 to 76 in the flowchart):

```java
public void addService(String name, IBinder service, boolean allowIsolated)
        throws RemoteException {
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    data.writeInterfaceToken(IServiceManager.descriptor);
    data.writeString(name);
    data.writeStrongBinder(service);
    data.writeInt(allowIsolated ? 1 : 0);
    mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);
    reply.recycle();
    data.recycle();
}
```

Here mRemote is the BinderProxy object.

```java
public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
    Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
    return transactNative(code, data, reply, flags);
}
```

```cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException
{
    ···
    IBinder* target = (IBinder*)
        env->GetLongField(obj, gBinderProxyOffsets.mObject);
    ···
    status_t err = target->transact(code, *data, reply, flags);
    ···

    if (err == NO_ERROR) {
        return JNI_TRUE;
    } else if (err == UNKNOWN_TRANSACTION) {
        return JNI_FALSE;
    }

    signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/, data->dataSize());
    return JNI_FALSE;
}
```

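It first retrieves the IBinder pointer. As analyzed above, the BinderProxy's mObject field (located through gBinderProxyOffsets.mObject) holds the BpBinder, so the call continues into BpBinder's transact method.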

2.4 Native Counterparts of Concrete Services

2.4.1 Initialization

```cpp
const char* const kBinderPathName = "android/os/Binder";

static int int_register_android_os_Binder(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, kBinderPathName);

    gBinderOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");
    gBinderOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");

    return RegisterMethodsOrDie(
            env, kBinderPathName,
            gBinderMethods, NELEM(gBinderMethods));
}
```

Likewise, gBinderOffsets is a struct, and gBinderOffsets.mClass refers to the Java-layer Binder class.

```cpp
static struct bindernative_offsets_t
{
    // Class state.
    jclass mClass;
    jmethodID mExecTransact;

    // Object state.
    jfieldID mObject;

} gBinderOffsets;
```

In Binder.java, the Binder constructor calls init(), which is implemented natively by android_os_Binder_init.

```cpp
static void android_os_Binder_init(JNIEnv* env, jobject obj)
{
    JavaBBinderHolder* jbh = new JavaBBinderHolder();
    if (jbh == NULL) {
        jniThrowException(env, "java/lang/OutOfMemoryError", NULL);
        return;
    }
    ALOGV("Java Binder %p: acquiring first ref on holder %p", obj, jbh);
    jbh->incStrong((void*)android_os_Binder_init);
    env->SetLongField(obj, gBinderOffsets.mObject, (jlong)jbh);
}
```

Therefore the Java Binder object's mObject field (located through gBinderOffsets.mObject) points to a JavaBBinderHolder, a class defined in android_util_Binder.cpp.

2.4.2 Flow Analysis

```java
public void addService(String name, IBinder service, boolean allowIsolated)
        throws RemoteException {
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    data.writeInterfaceToken(IServiceManager.descriptor);
    data.writeString(name);
    data.writeStrongBinder(service);
    data.writeInt(allowIsolated ? 1 : 0);
    mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);
    reply.recycle();
    data.recycle();
}

public final void writeStrongBinder(IBinder val) {
    nativeWriteStrongBinder(mNativePtr, val);
}
```

```cpp
static void android_os_Parcel_writeStrongBinder(JNIEnv* env, jclass clazz, jlong nativePtr, jobject object)
{
    Parcel* parcel = reinterpret_cast
    if (parcel != NULL) {
        const status_t err = parcel->writeStrongBinder(ibinderForJavaObject(env, object));
        if (err != NO_ERROR) {
            signalExceptionForError(env, clazz, err);
        }
    }
}
```

First, look at ibinderForJavaObject() in android_util_Binder.cpp:

```cpp
sp
{
    if (obj == NULL) return NULL;

    if (env->IsInstanceOf(obj, gBinderOffsets.mClass)) {
        JavaBBinderHolder* jbh = (JavaBBinderHolder*)
            env->GetLongField(obj, gBinderOffsets.mObject);
        return jbh != NULL ? jbh->get(env, obj) : NULL;
    }

    if (env->IsInstanceOf(obj, gBinderProxyOffsets.mClass)) {
        return (IBinder*)
            env->GetLongField(obj, gBinderProxyOffsets.mObject);
    }

    ALOGW("ibinderForJavaObject: %p is not a Binder object", obj);
    return NULL;
}
```

Next, JavaBBinderHolder's get() method:

```cpp
sp
{
    AutoMutex _l(mLock);
    sp
    if (b == NULL) {
        b = new JavaBBinder(env, obj);
        mBinder = b;
        ALOGV("Creating JavaBinder %p (refs %p) for Object %p, weakCount=%" PRId32 "\n",
             b.get(), b->getWeakRefs(), obj, b->getWeakRefs()->getWeakCount());
    }

    return b;
}
```

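So when a service is added, the concrete service (for example PMS, the PackageManagerService) is represented in C/C++ by a JavaBBinder object, which get() creates the first time the service is written into a Parcel.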
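The two branches of ibinderForJavaObject(), together with the lazy creation in JavaBBinderHolder::get(), can be modelled as follows. All type names are hypothetical stand-ins: a bool replaces IsInstanceOf(), shared pointers replace the jlong fields, and holding the cached binder through a weak reference is an assumption of this sketch rather than something the listing above shows in full.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <string>

// Hypothetical native types; names echo libbinder but these are simplified stand-ins.
struct IBinderLike {
    virtual ~IBinderLike() = default;
    virtual std::string kind() const = 0;
};
struct JavaBBinderLike : IBinderLike { std::string kind() const override { return "JavaBBinder"; } };
struct BpBinderLike : IBinderLike { std::string kind() const override { return "BpBinder"; } };

// Created when a Java Binder is constructed; builds the native binder lazily.
class JavaBBinderHolderLike {
public:
    std::shared_ptr<IBinderLike> get() {
        std::lock_guard<std::mutex> lock(mLock);
        auto b = mBinder.lock();
        if (!b) {                       // first use (or the old one was released)
            b = std::make_shared<JavaBBinderLike>();
            mBinder = b;
        }
        return b;
    }
private:
    std::mutex mLock;
    std::weak_ptr<JavaBBinderLike> mBinder;
};

// A Java object is either a local Binder (mObject -> holder)
// or a BinderProxy (mObject -> BpBinder).
struct JavaObjectLike {
    bool isBinder;                                  // plays the role of IsInstanceOf(obj, Binder)
    std::shared_ptr<JavaBBinderHolderLike> holder;  // used when isBinder
    std::shared_ptr<BpBinderLike> proxy;            // used otherwise
};

// Same two-way dispatch as ibinderForJavaObject().
std::shared_ptr<IBinderLike> ibinderForJavaObjectLike(const JavaObjectLike* obj) {
    if (obj == nullptr) return nullptr;
    if (obj->isBinder) return obj->holder ? obj->holder->get() : nullptr;
    return obj->proxy;
}
```

Writing the same local service twice yields the same native object, while a proxy is simply handed through unchanged.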

2.5 Summary

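BpBinder is the client-side proxy in the C++ layer: when a client obtains a service from another process, what it actually receives underneath is a BpBinder.

In the C++ layer, a concrete service is represented by JavaBBinder.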

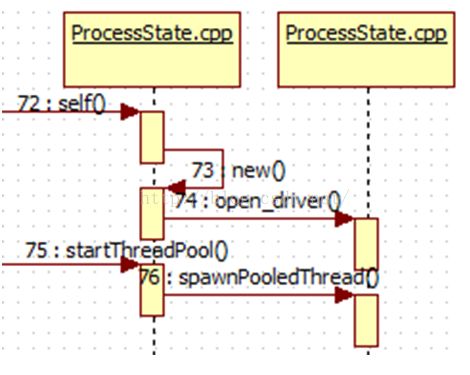

3 ProcessState

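ProcessState, as its name suggests, holds per-process state. Each process has exactly one ProcessState object. All Android application processes are forked from Zygote; when an application process is forked, onZygoteInit() is called to initialize its Binder environment.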

3.1 Initialization

```cpp
sp
proc->startThreadPool(); // start the thread pool
```

Initialization does two main things: it opens the binder driver and starts the thread pool.

In addition, mHandleToObject is a vector that records every BpBinder in the process, indexed by handle; each entry has the following type:

```cpp
struct handle_entry {
    IBinder* binder;
    RefBase::weakref_type* refs;
};
```

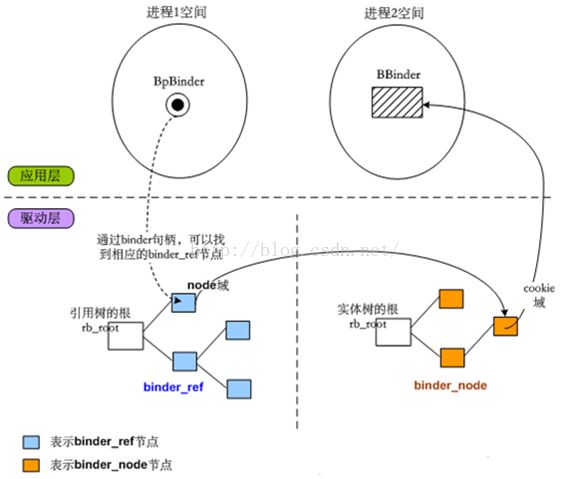

3.2 Red-Black Trees

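Many applications in an Android system communicate across processes. Each application has its own process with many threads, and in a cross-process call the client and the server sit in different processes. So how does a call reach exactly the right object? This is done with red-black trees that the driver maintains for each process.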

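When ProcessState is constructed and opens the binder driver, the driver's binder_open() function is invoked, and that is where the binder_proc is created. The newly created binder_proc is inserted as a node into a global list (binder_procs).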

kernel/drivers/staging/android/binder.c

kernel/include/linux/list.h

```c
static int binder_open(struct inode *nodp, struct file *filp)
{
    struct binder_proc *proc;

    ···
    hlist_add_head(&proc->proc_node, &binder_procs);
    ···

    return 0;
}
```

The binder_proc struct is defined as follows:

```c
struct binder_proc {
    struct hlist_node proc_node;
    struct rb_root threads;
    struct rb_root nodes;         // the nodes tree records binder entities
    struct rb_root refs_by_desc;  // binder references (proxies), keyed by handle
    struct rb_root refs_by_node;  // binder references (proxies), keyed by node
    ···
};
```

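For every binder entity in a process that other processes call into, the process's nodes tree contains one red-black tree node. Conversely, for every binder entity in another process that a process wants to access, it must hold a corresponding reference node in its refs_by_desc tree.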

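The four trees use different node types: the threads tree holds binder_thread nodes, the nodes tree holds binder_node nodes, and refs_by_desc and refs_by_node both hold binder_ref nodes. Each of these node types embeds an rb_node sub-structure, which is what actually links the nodes into the tree.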

binder_node and binder_ref are defined as follows:

```c
struct binder_node {
    int debug_id;
    struct binder_work work;
    union {
        struct rb_node rb_node;
        struct hlist_node dead_node;
    };
    struct binder_proc *proc;
    struct hlist_head refs;
    int internal_strong_refs;
    int local_weak_refs;
    int local_strong_refs;
    binder_uintptr_t ptr;
    binder_uintptr_t cookie;
    unsigned has_strong_ref:1;
    unsigned pending_strong_ref:1;
    unsigned has_weak_ref:1;
    unsigned pending_weak_ref:1;
    unsigned has_async_transaction:1;
    unsigned accept_fds:1;
    unsigned min_priority:8;
    struct list_head async_todo;
};

struct binder_ref {
    /* Lookups needed: */
    /*   node + proc => ref (transaction) */
    /*   desc + proc => ref (transaction, inc/decref) */
    /*   node => refs + procs (proc exit) */
    int debug_id;
    struct rb_node rb_node_desc;
    struct rb_node rb_node_node;
    struct hlist_node node_entry;
    struct binder_proc *proc;
    struct binder_node *node;
    uint32_t desc;
    int strong;
    int weak;
    struct binder_ref_death *death;
};
```

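Now we can explain more precisely what a binder handle does. Suppose a BpBinder in process 1 initiates a cross-process call and passes its recorded handle value to the binder driver. The driver searches the reference tree of process 1's binder_proc for the binder_ref whose descriptor matches that handle. Once it finds the binder_ref, its node field leads to the corresponding binder_node. That target binder_node of course belongs to process 2's binder_proc, but that is no problem: binder_ref and binder_node both live in the binder driver's address space, so one can point directly to the other. The target binder_node's cookie field actually holds the address of the BBinder in process 2; the driver only needs to pass that value back up to user space, and user space then has the BBinder directly. That, roughly, is how Binder hits exactly the right target.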
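The handle-to-BBinder walk just described can be modelled in ordinary user-space C++. BinderProcLike, BinderNodeLike and BinderRefLike are hypothetical, heavily trimmed mirrors of binder_proc, binder_node and binder_ref; std::map stands in for the kernel's rb_root (standard library implementations typically back it with a red-black tree), and the cookie is just a number here rather than a real BBinder address.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical, simplified mirrors of the driver structures (not kernel code).
struct BinderProcLike;

struct BinderNodeLike {
    BinderProcLike* proc;  // owning process ("process 2" in the text)
    uintptr_t cookie;      // user-space address of the BBinder in that process
};

struct BinderRefLike {
    uint32_t desc;         // the handle value the client process uses
    BinderNodeLike* node;  // points straight at the target node (same address space)
};

struct BinderProcLike {
    std::map<uintptr_t, BinderNodeLike> nodes;      // keyed by ptr, like binder_proc.nodes
    std::map<uint32_t, BinderRefLike> refs_by_desc; // keyed by handle
};

// handle -> binder_ref -> binder_node -> cookie.
// Returns 0 when the client holds no reference with that handle.
uintptr_t resolveHandle(const BinderProcLike& client, uint32_t handle) {
    auto it = client.refs_by_desc.find(handle);
    if (it == client.refs_by_desc.end() || it->second.node == nullptr) return 0;
    return it->second.node->cookie;
}
```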

Another interesting field of ProcessState is mHandleToObject, the per-process table of BpBinders introduced in section 3.1.

4 Driver Layer

4.1 How Red-Black Tree Nodes Are Created

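Keep in mind that when the binder driver transfers data, it does not simply deliver it: it parses the data, finds any binder objects recorded in it, and creates the corresponding tree nodes. If a binder entity is being transferred, the driver not only adds a binder_node to the nodes tree of the sending side, but also adds a binder_ref to the refs_by_desc and refs_by_node trees of the receiving side, and points that binder_ref's node field at the binder_node.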

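But how does the driver know how many binder objects the data contains, and exactly where they are? When a service is added, writeStrongBinder "flattens" the binder entity and writes it into the Parcel.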

```cpp
status_t Parcel::writeStrongBinder(const sp
{
    return flatten_binder(ProcessState::self(), val, this);
}

status_t flatten_binder(const sp
    const sp
{
    flat_binder_object obj;

    obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;
    if (binder != NULL) {
        IBinder *local = binder->localBinder();
        if (!local) { // binder proxy
            BpBinder *proxy = binder->remoteBinder();
            if (proxy == NULL) {
                ALOGE("null proxy");
            }
            const int32_t handle = proxy ? proxy->handle() : 0;
            obj.type = BINDER_TYPE_HANDLE;
            obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */
            obj.handle = handle;
            obj.cookie = 0;
        } else { // binder entity
            obj.type = BINDER_TYPE_BINDER;
            obj.binder = reinterpret_cast
            obj.cookie = reinterpret_cast
        }
    } else {
        obj.type = BINDER_TYPE_BINDER;
        obj.binder = 0;
        obj.cookie = 0;
    }

    return finish_flatten_binder(binder, obj, out);
}
```

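"Flattening" means packing a binder object into a flat_binder_object:

1. If a binder entity is being flattened, flat_binder_object stores the entity pointer, i.e. the BBinder pointer, in its cookie field.

2. If a binder proxy is being flattened, flat_binder_object stores the proxy's handle value in its handle field.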
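The branch structure of flatten_binder() fits in a few lines. FlatType, FlatBinderObjectLike, BinderLike and flattenLike() are hypothetical; unlike the real flat_binder_object, binder and handle are separate fields here rather than a union, and for an entity this sketch stores the object address in both binder and cookie, whereas the real code puts the weak-reference object in binder.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, simplified type tags and layout for a flattened binder.
enum class FlatType : uint32_t { Binder, Handle };

struct FlatBinderObjectLike {
    FlatType type;
    uintptr_t binder;  // entity: object address (simplified)
    uintptr_t cookie;  // entity: the BBinder pointer
    int32_t handle;    // proxy: the handle value
};

// Hypothetical binder: either a local entity or a proxy with a handle.
struct BinderLike {
    bool isLocal;      // true = entity (BBinder), false = proxy (BpBinder)
    int32_t handle;    // only meaningful for proxies
};

// Same three cases as flatten_binder(): null, proxy, entity.
FlatBinderObjectLike flattenLike(const BinderLike* b) {
    FlatBinderObjectLike obj{FlatType::Binder, 0, 0, 0};
    if (b == nullptr) return obj;          // null binder: an empty BINDER entry
    if (!b->isLocal) {                     // proxy: only the handle travels
        obj.type = FlatType::Handle;
        obj.handle = b->handle;
    } else {                               // entity: record its address
        obj.binder = reinterpret_cast<uintptr_t>(b);
        obj.cookie = reinterpret_cast<uintptr_t>(b);
    }
    return obj;
}
```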

```cpp
inline static status_t finish_flatten_binder(
    const sp
{
    return out->writeObject(flat, false); // simply calls writeObject()
}

status_t Parcel::writeObject(const flat_binder_object& val, bool nullMetaData)
{
    const bool enoughData = (mDataPos + sizeof(val)) <= mDataCapacity;
    const bool enoughObjects = mObjectsSize < mObjectsCapacity;
    if (enoughData && enoughObjects) {
restart_write:
        *reinterpret_cast

        // remember if it's a file descriptor
        if (val.type == BINDER_TYPE_FD) {
            if (!mAllowFds) {
                // fail before modifying our object index
                return FDS_NOT_ALLOWED;
            }
            mHasFds = mFdsKnown = true;
        }

        // Need to write meta-data?
        if (nullMetaData || val.binder != 0) {
            mObjects[mObjectsSize] = mDataPos;
            acquire_object(ProcessState::self(), val, this, &mOpenAshmemSize);
            mObjectsSize++;
        }

        return finishWrite(sizeof(flat_binder_object));
    }

    if (!enoughData) {
        const status_t err = growData(sizeof(val));
        if (err != NO_ERROR) return err;
    }
    if (!enoughObjects) {
        size_t newSize = ((mObjectsSize + 2) * 3) / 2;
        if (newSize < mObjectsSize) return NO_MEMORY; // overflow
        binder_size_t* objects = (binder_size_t*)realloc(mObjects, newSize * sizeof(binder_size_t));
        if (objects == NULL) return NO_MEMORY;
        mObjects = objects;
        mObjectsCapacity = newSize;
    }

    goto restart_write;
}
```

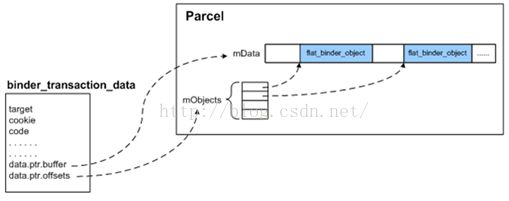

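A Parcel holds an internal buffer containing all of its flattened data. Some of that data is ordinary data, while other entries describe binder objects. The Parcel therefore keeps a separate mObjects array that records the offsets at which the flattened binder objects sit.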
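The "buffer plus offsets" layout can be shown with a toy Parcel. MiniParcel and FlatObj are hypothetical names; std::vector takes care of growth here, while nextObjectsCapacity() just reproduces the ((mObjectsSize+2)*3)/2 rule from writeObject() above for comparison.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical flattened object (no padding: two 64-bit fields).
struct FlatObj { uint64_t type; uint64_t value; };

// Growth rule for the offsets array, as in Parcel::writeObject().
inline size_t nextObjectsCapacity(size_t currentSize) { return ((currentSize + 2) * 3) / 2; }

// Hypothetical mini-Parcel: a flat byte buffer plus an offsets array (like mObjects)
// that remembers where each flattened object starts.
class MiniParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof(v)); }

    void writeObject(const FlatObj& obj) {
        mObjects.push_back(mData.size());  // record the offset before writing
        append(&obj, sizeof(obj));
    }

    const std::vector<size_t>& objects() const { return mObjects; }

    // Reads back object i through its recorded offset.
    FlatObj objectAt(size_t i) const {
        FlatObj out;
        std::memcpy(&out, mData.data() + mObjects.at(i), sizeof(out));
        return out;
    }

private:
    void append(const void* p, size_t n) {
        const uint8_t* bytes = static_cast<const uint8_t*>(p);
        mData.insert(mData.end(), bytes, bytes + n);
    }
    std::vector<uint8_t> mData;
    std::vector<size_t> mObjects;
};
```

With the offsets array in hand, a reader (the driver, in the real system) can jump straight to each binder object without parsing the ordinary data around it.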

4.2 transact Analysis

4.2.1 User Space

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}

status_t IPCThreadState::transact(int32_t handle, uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    ···
    if (err == NO_ERROR) {
        LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),
            (flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONEWAY");
        err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
    }

    ···
    err = waitForResponse(reply);

    return err;
}
```

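Let's start with writeTransactionData(): it merely writes the data into mOut. BC_TRANSACTION is a command code sent from the application to the Binder driver; codes sent back from the driver to the application start with BR_. These codes are defined in binder_module.h.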

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;

    tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */
    tr.target.handle = handle;
    tr.code = code;
    tr.flags = binderFlags;
    tr.cookie = 0;
    tr.sender_pid = 0;
    tr.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr.data_size = data.ipcDataSize();  // the data to transfer
        tr.data.ptr.buffer = data.ipcData();
        // where the binder objects sit inside the data
        tr.offsets_size = data.ipcObjectsCount() * sizeof(binder_size_t);
        tr.data.ptr.offsets = data.ipcObjects();
    } else if (statusBuffer) {
        tr.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr.data_size = sizeof(status_t);
        tr.data.ptr.buffer = reinterpret_cast
        tr.offsets_size = 0;
        tr.data.ptr.offsets = 0;
    } else {
        return (mLastError = err);
    }

    mOut.writeInt32(cmd);
    mOut.write(&tr, sizeof(tr));

    return NO_ERROR;
}
```

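So when the binder_transaction_data reaches the driver, the driver can tell exactly how many binder objects the data contains and process each of them, creating the appropriate red-black tree nodes. If the node created is a binder_node, its cookie field is set to the cookie carried by the flat_binder_object, i.e. the user-space BBinder address. Once inserted into the tree, this new binder_node stands ready; later, when it becomes the target of some other transaction, its cookie field comes into play: the cookie value is passed back up to user space, which thereby obtains the BBinder object.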
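On the user-space side, all writeTransactionData() adds to mOut is a 32-bit command word followed by the transaction struct. The sketch below reproduces that framing with hypothetical types: TransactionDataLike is a trimmed stand-in for binder_transaction_data, offsets are assumed to be 64-bit, and kFakeBcTransaction is an arbitrary value, not the real BC_TRANSACTION constant (which is built with ioctl encoding macros).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command code; NOT the real BC_TRANSACTION value.
constexpr uint32_t kFakeBcTransaction = 1;

// Hypothetical, trimmed stand-in for binder_transaction_data (24 bytes, no padding).
struct TransactionDataLike {
    uint32_t handle;       // target.handle
    uint32_t code;         // method code chosen by the proxy
    uint64_t dataSize;     // bytes of payload
    uint64_t offsetsSize;  // bytes of the offsets array (count * offset size)
};

// Appends "command word + struct" to the out-buffer, in the same order as
// mOut.writeInt32(cmd); mOut.write(&tr, sizeof(tr)).
void writeTransactionDataLike(std::vector<uint8_t>& out, uint32_t cmd, uint32_t handle,
                              uint32_t code, size_t dataSize, size_t objectCount) {
    TransactionDataLike tr{};
    tr.handle = handle;
    tr.code = code;
    tr.dataSize = dataSize;
    tr.offsetsSize = objectCount * sizeof(uint64_t);
    const uint8_t* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const uint8_t* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}
```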

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;

    while (1) {
        // talkWithDriver() carries out the cross-process transaction
        if ((err = talkWithDriver()) < NO_ERROR) break;
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;
        // the reply to the transaction is recorded in mIn, so parse it below
        cmd = (uint32_t)mIn.readInt32();

        IF_LOG_COMMANDS() {
            alog << "Processing waitForResponse Command: "
                << getReturnString(cmd) << endl;
        }

        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;

        case BR_DEAD_REPLY:
            err = DEAD_OBJECT;
            goto finish;

        case BR_FAILED_REPLY:
            err = FAILED_TRANSACTION;
            goto finish;

        case BR_ACQUIRE_RESULT:
            {
                ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");
                const int32_t result = mIn.readInt32();
                if (!acquireResult) continue;
                *acquireResult = result ? NO_ERROR : INVALID_OPERATION;
            }
            goto finish;

        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                if (err != NO_ERROR) goto finish;

                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        reply->ipcSetDataReference(
                            reinterpret_cast
                            tr.data_size,
                            reinterpret_cast
                            tr.offsets_size/sizeof(binder_size_t),
                            freeBuffer, this);
                    } else {
                        err = *reinterpret_cast
                        freeBuffer(NULL,
                            reinterpret_cast
                            tr.data_size,
                            reinterpret_cast
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    freeBuffer(NULL,
                        reinterpret_cast
                        tr.data_size,
                        reinterpret_cast
                        tr.offsets_size/sizeof(binder_size_t), this);
                    continue;
                }
            }
            goto finish;

        default:
            err = executeCommand(cmd);
            if (err != NO_ERROR) goto finish;
            break;
        }
    }

finish:
    if (err != NO_ERROR) {
        if (acquireResult) *acquireResult = err;
        if (reply) reply->setError(err);
        mLastError = err;
    }

    return err;
}
```

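waitForResponse() talks to the binder driver through talkWithDriver(), which ultimately calls ioctl(). When ioctl() carries the BINDER_WRITE_READ command it uses both an "input buffer" and an "output buffer", so IPCThreadState keeps two Parcel members for this purpose: mIn and mOut. In short, the contents of mOut are sent out, and whatever comes back is written into mIn.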
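The bookkeeping around the ioctl() call can be simulated without a driver. In this hypothetical sketch, fakeDriverLike() replaces ioctl(BINDER_WRITE_READ) by consuming at most budget bytes of the write buffer and copying a canned reply into the read buffer; talkWithDriverLike() then trims what was sent from mOut and sizes mIn to what was received, as the code after ioctl() in talkWithDriver() does. WriteReadLike mirrors only the four size fields of binder_write_read, and the 64-byte read buffer is arbitrary.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the size fields of struct binder_write_read.
struct WriteReadLike {
    size_t write_size = 0, write_consumed = 0;
    size_t read_size = 0, read_consumed = 0;
};

// Hypothetical "driver": accepts up to `budget` bytes and returns `reply`.
void fakeDriverLike(WriteReadLike& bwr, uint8_t* readBuf, size_t budget,
                    const std::vector<uint8_t>& reply) {
    bwr.write_consumed = std::min(bwr.write_size, budget);
    const size_t n = std::min(reply.size(), bwr.read_size);
    std::copy(reply.begin(), reply.begin() + static_cast<std::ptrdiff_t>(n), readBuf);
    bwr.read_consumed = n;
}

// One round trip: send mOut, receive into mIn, then do the post-ioctl bookkeeping.
void talkWithDriverLike(std::vector<uint8_t>& mOut, std::vector<uint8_t>& mIn,
                        size_t budget, const std::vector<uint8_t>& reply) {
    WriteReadLike bwr;
    bwr.write_size = mOut.size();
    mIn.assign(64, 0);                  // read buffer capacity for the sketch
    bwr.read_size = mIn.size();
    fakeDriverLike(bwr, mIn.data(), budget, reply);
    if (bwr.write_consumed > 0) {       // like mOut.remove() / mOut.setDataSize(0)
        if (bwr.write_consumed < mOut.size())
            mOut.erase(mOut.begin(), mOut.begin() + static_cast<std::ptrdiff_t>(bwr.write_consumed));
        else
            mOut.clear();
    }
    mIn.resize(bwr.read_consumed);      // like mIn.setDataSize(read_consumed)
}
```

Unsent bytes stay at the front of mOut for the next round, which is why the real code removes only write_consumed bytes instead of clearing the buffer unconditionally.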

```cpp
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    if (mProcess->mDriverFD <= 0) {
        return -EBADF;
    }

    binder_write_read bwr;

    // Is the read buffer empty?
    const bool needRead = mIn.dataPosition() >= mIn.dataSize();

    // We don't want to write anything if we are still reading
    // from data left in the input buffer and the caller
    // has requested to read the next data.
    const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

    bwr.write_size = outAvail;
    bwr.write_buffer = (uintptr_t)mOut.data();

    // This is what we'll read.
    if (doReceive && needRead) {
        bwr.read_size = mIn.dataCapacity();
        bwr.read_buffer = (uintptr_t)mIn.data();
    } else {
        bwr.read_size = 0;
        bwr.read_buffer = 0;
    }

    IF_LOG_COMMANDS() {
        TextOutput::Bundle _b(alog);
        if (outAvail != 0) {
            alog << "Sending commands to driver: " << indent;
            const void* cmds = (const void*)bwr.write_buffer;
            const void* end = ((const uint8_t*)cmds) + bwr.write_size;
            alog << HexDump(cmds, bwr.write_size) << endl;
            while (cmds < end) cmds = printCommand(alog, cmds);
            alog << dedent;
        }
        alog << "Size of receive buffer: " <
            << ", needRead: " << needRead << ", doReceive: " << doReceive << endl;
    }

    // Return immediately if there is nothing to do.
    if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

    bwr.write_consumed = 0;
    bwr.read_consumed = 0;
    status_t err;
    do {
        IF_LOG_COMMANDS() {
            alog << "About to read/write, write size = " <
        }
#if defined(HAVE_ANDROID_OS)
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
            err = NO_ERROR;
        else
            err = -errno;
#else
        err = INVALID_OPERATION;
#endif
        if (mProcess->mDriverFD <= 0) {
            err = -EBADF;
        }
        IF_LOG_COMMANDS() {
            alog << "Finished read/write, write size = " <
        }
    } while (err == -EINTR);

    IF_LOG_COMMANDS() {
        alog << "Our err: " << (void*)(intptr_t)err << ", write consumed: "
            << bwr.write_consumed << " (of " <
            << "), read consumed: " << bwr.read_consumed << endl;
    }

    if (err >= NO_ERROR) {
        if (bwr.write_consumed > 0) {
            if (bwr.write_consumed < mOut.dataSize())
                mOut.remove(0, bwr.write_consumed);
            else
                mOut.setDataSize(0);
        }
        if (bwr.read_consumed > 0) {
            mIn.setDataSize(bwr.read_consumed);
            mIn.setDataPosition(0);
        }
        IF_LOG_COMMANDS() {
            TextOutput::Bundle _b(alog);
            alog << "Remaining data size: " << mOut.dataSize() << endl;
            alog << "Received commands from driver: " <
            const void* cmds = mIn.data();
            const void* end = mIn.data() + mIn.dataSize();
            alog << HexDump(cmds, mIn.dataSize()) << endl;
            while (cmds < end) cmds = printReturnCommand(alog, cmds);
            alog << dedent;
        }
        return NO_ERROR;
    }

    return err;
}
```

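The data of mIn and mOut is first packed into a binder_write_read struct, which is then passed to ioctl(). The key statement is, of course, the ioctl() call itself. The file descriptor it uses is the mDriverFD recorded in ProcessState mentioned earlier, which shows that the command goes to the binder driver. BINDER_WRITE_READ means that we want to write and read some data.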

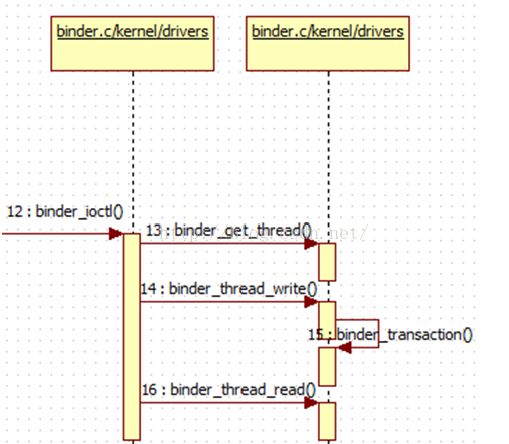

4.2.2 Kernel Space

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    ···
    switch (cmd) {
    case BINDER_WRITE_READ: {
        struct binder_write_read bwr;
        ···
        if (bwr.write_size > 0) {
            ret = binder_thread_write(proc, thread, bwr.write_buffer, bwr.write_size,
                                      &bwr.write_consumed);
            trace_binder_write_done(ret);
            if (ret < 0) {
                bwr.read_consumed = 0;
                if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                    ret = -EFAULT;
                goto err;
            }
        }
        if (bwr.read_size > 0) {
            ret = binder_thread_read(proc, thread, bwr.read_buffer, bwr.read_size,
                                     &bwr.read_consumed, filp->f_flags & O_NONBLOCK);
            trace_binder_read_done(ret);
            if (!list_empty(&proc->todo))
                wake_up_interruptible(&proc->wait);
            if (ret < 0) {
                if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                    ret = -EFAULT;
                goto err;
            }
        }
    ···
    trace_binder_ioctl_done(ret);
    return ret;
}
```

First, binder_thread_write():

```c
int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread,
                        void __user *buffer, int size, signed long *consumed)
{
    ···
    while (ptr < end && thread->return_error == BR_OK)
    {
        ···
        switch (cmd)
        {
        ···
        case BC_TRANSACTION:
        case BC_REPLY: {
            struct binder_transaction_data tr;

            if (copy_from_user(&tr, ptr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            binder_transaction(proc, thread, &tr, cmd == BC_REPLY);
            break;
        }
        ···
        }
        *consumed = ptr - buffer;
    }
    return 0;
}
```

The full function is long but fairly simple: it copies the binder_transaction_data in from user space and hands it to binder_transaction(), which performs the actual transfer.
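The command loop of binder_thread_write() is essentially a cursor moving over a byte buffer. This user-space sketch, with hypothetical command codes and a trimmed TxLike payload, reads each command word, copies the payload that follows, hands it off (here it just appends it to a vector instead of calling binder_transaction()), and keeps *consumed up to date. std::memcpy stands in for get_user() and copy_from_user().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes for the sketch (not the real BC_* values).
constexpr uint32_t kCmdTransaction = 1;
constexpr uint32_t kCmdReply = 2;

// Hypothetical, trimmed stand-in for binder_transaction_data.
struct TxLike { uint32_t handle; uint32_t code; };

// Walks a write buffer: command word, then payload, then dispatch.
// Returns -1 on a truncated or unknown command, otherwise 0.
int threadWriteLike(const uint8_t* buffer, size_t size, size_t* consumed,
                    std::vector<TxLike>* handled) {
    const uint8_t* ptr = buffer;
    const uint8_t* end = buffer + size;
    while (ptr < end) {
        if (static_cast<size_t>(end - ptr) < sizeof(uint32_t)) return -1;
        uint32_t cmd;
        std::memcpy(&cmd, ptr, sizeof(cmd));         // kernel: get_user()
        ptr += sizeof(cmd);
        switch (cmd) {
        case kCmdTransaction:
        case kCmdReply: {
            if (static_cast<size_t>(end - ptr) < sizeof(TxLike)) return -1;
            TxLike tr;
            std::memcpy(&tr, ptr, sizeof(tr));       // kernel: copy_from_user()
            ptr += sizeof(tr);
            handled->push_back(tr);                  // stands in for binder_transaction()
            break;
        }
        default:
            return -1;
        }
        *consumed = static_cast<size_t>(ptr - buffer);
    }
    return 0;
}
```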

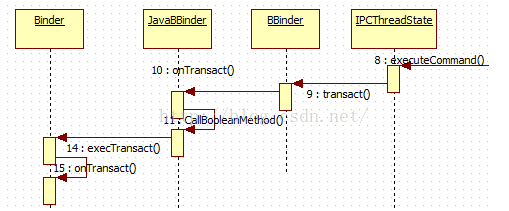

4.3 Server Side

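Here, BBinder is a class in Binder.cpp,

and JavaBBinder is a class in android_util_Binder.cpp.

The key step is the call to onTransact(). Because our binder entities (services) all ultimately inherit from BBinder, and we usually override onTransact(), this call actually invokes the onTransact() member function of the concrete binder entity (service). For a Java service, JavaBBinder::onTransact() calls back into Java through gBinderOffsets.mExecTransact (Binder.execTransact()), which in turn calls the Java onTransact().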
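The dispatch from a generic entity to a concrete service is ordinary virtual-function overriding. In this hypothetical sketch, BBinderLike::transact() forwards to a virtual onTransact(), and EchoService overrides it and switches on the transaction code. The real BBinder::transact() also handles special codes such as PING_TRANSACTION itself and works with Parcel rather than std::string; the status values below are invented.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical status codes for the sketch.
constexpr int kOk = 0;
constexpr int kUnknownTransaction = -1;

// Hypothetical base entity, shaped like BBinder.
class BBinderLike {
public:
    virtual ~BBinderLike() = default;
    // Entry point reached when a transaction is delivered to this entity.
    int transact(uint32_t code, const std::string& data, std::string* reply) {
        return onTransact(code, data, reply);  // virtual: lands in the concrete service
    }
protected:
    virtual int onTransact(uint32_t, const std::string&, std::string*) {
        return kUnknownTransaction;
    }
};

// A concrete service overrides onTransact() and switches on the code.
class EchoService : public BBinderLike {
public:
    static constexpr uint32_t ECHO = 1;  // hypothetical transaction code
protected:
    int onTransact(uint32_t code, const std::string& data, std::string* reply) override {
        switch (code) {
        case ECHO:
            *reply = data;
            return kOk;
        default:
            return BBinderLike::onTransact(code, data, reply);  // unknown code
        }
    }
};
```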

4.4 Obtaining a Service

Obtaining a service is the exact reverse of adding one.

```java
public IBinder getService(String name) throws RemoteException {
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    data.writeInterfaceToken(IServiceManager.descriptor);
    data.writeString(name);
    mRemote.transact(GET_SERVICE_TRANSACTION, data, reply, 0);
    IBinder binder = reply.readStrongBinder();
    reply.recycle();
    data.recycle();
    return binder;
}
```

```cpp
sp
{
    sp
    unflatten_binder(ProcessState::self(), *this, &val);
    return val;
}

status_t unflatten_binder(const sp
    const Parcel& in, sp
{
    const flat_binder_object* flat = in.readObject(false);

    if (flat) {
        switch (flat->type) {
            case BINDER_TYPE_BINDER: // a binder entity
                *out = reinterpret_cast
                return finish_unflatten_binder(NULL, *flat, in);
            case BINDER_TYPE_HANDLE: // a binder proxy (handle)
                *out = proc->getStrongProxyForHandle(flat->handle);
                return finish_unflatten_binder(
                    static_cast
        }
    }
    return BAD_TYPE;
}
```

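As you can see, what comes back in the end is still derived from the driver's red-black tree nodes: either a BBinder pointer (a binder_node's cookie) or a proxy created for a handle (a binder_ref's desc).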
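The reverse step, unflatten_binder(), can be modelled the same way. ReceiverLike and the other names are hypothetical: a BINDER entry is turned back into the original object pointer through its cookie, and a HANDLE entry into a cached proxy. When an entity crosses into another process, the driver rewrites it as a handle, so in practice a BINDER entry only arrives in the process that owns the object.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

// Hypothetical, simplified flattened form.
enum class FlatType : uint32_t { Binder, Handle };
struct FlatLike { FlatType type; uintptr_t cookie; int32_t handle; };

// Hypothetical binder hierarchy: local entities and handle-based proxies.
struct IBinderLike {
    virtual ~IBinderLike() = default;
    virtual bool isLocal() const = 0;
};
struct LocalLike : IBinderLike { bool isLocal() const override { return true; } };
struct ProxyLike : IBinderLike {
    explicit ProxyLike(int32_t h) : handle(h) {}
    bool isLocal() const override { return false; }
    int32_t handle;
};

// Same two cases as unflatten_binder().
class ReceiverLike {
public:
    IBinderLike* unflatten(const FlatLike& flat) {
        switch (flat.type) {
        case FlatType::Binder:   // entity: the cookie is the original object pointer
            return reinterpret_cast<IBinderLike*>(flat.cookie);
        case FlatType::Handle: { // proxy: reuse or create one per handle
            auto& slot = mProxies[flat.handle];
            if (!slot) slot = std::make_unique<ProxyLike>(flat.handle);
            return slot.get();
        }
        }
        return nullptr;
    }
private:
    std::map<int32_t, std::unique_ptr<ProxyLike>> mProxies;
};
```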

5 Summary

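1. Binder's principles and structure are complex and hard to grasp, and many details are not covered here.

2. Structurally it spans the Java layer, the C++ layer and the driver layer, crossing from user space into kernel space; the kernel-space part is not analyzed in detail.

3. Binder passes data between processes through memory mapping, so the data needs to be copied only once to get from one process to another. Each process that opens the driver maps a buffer that is shared between the kernel and that process's user space; the driver copies the sender's data directly into the target process's buffer, and this single copy greatly improves efficiency.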