Saving and loading models in TensorFlow

Sneaking in some study time on the company computer again; while the boss hasn't noticed, let me quickly get a blog post out.

Saving a model

Key code:

saver = tf.train.Saver(max_to_keep=max_to_keep)
saver.save(session, filename)

Example: fit a one-variable linear function and save the model

def line_regression():
    x_data = np.random.rand(100)
    y_data = x_data * 0.1 + 0.2
    # Plot the data
    plt.figure()
    plt.scatter(x_data, y_data, color="red", marker="x", label="actual")
    # Build the linear model and initialise its parameters
    b = tf.Variable(0.0, name="b")
    k = tf.Variable(0.0, name="k")
    y = x_data * k + b
    # Quadratic cost function (mean squared error)
    loss = tf.reduce_mean(tf.square(y - y_data))
    # Gradient-descent optimizer for the loss, learning rate 0.2
    optimizer = tf.train.GradientDescentOptimizer(0.2)
    # Minimise the cost function
    train = optimizer.minimize(loss)
    # Keep only the most recent checkpoint
    saver = tf.train.Saver(max_to_keep=1)
    init = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init)
        for i in range(201):
            sess.run(train)
            if i % 20 == 0:
                k_value, b_value = sess.run([k, b])
                # Print k and b
                print("%d,k=%f,b=%f" % (i, k_value, b_value))
                # global_step (the iteration count) would be appended to the
                # file name; it is not passed here, so the name has no suffix
                saver.save(sess, "models/line_regression")
        # Get the predicted values
        prediction_value = y.eval()
        # Plot the fit for comparison
        plt.plot(x_data, prediction_value, color="blue", label="pred")
        plt.legend()
        plt.show()
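To make explicit what the training loop is doing, here is a minimal sketch of the same fit in plain Python, without TensorFlow. It assumes that GradientDescentOptimizer(0.2).minimize(loss) amounts to repeatedly subtracting 0.2 times the gradient of the mean squared error; the helper name fit_line and the fixed random seed are my own choices for illustration and do not come from the code above.

```python
import random


def fit_line(xs, ys, learning_rate=0.2, steps=201):
    """Plain gradient descent on mean((k*x + b - y)**2), starting from k = b = 0."""
    k, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [k * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to k and b
        grad_k = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        k -= learning_rate * grad_k
        b -= learning_rate * grad_b
    return k, b


random.seed(0)  # fixed seed, only so that runs are repeatable
xs = [random.random() for _ in range(100)]
ys = [0.1 * x + 0.2 for x in xs]
k, b = fit_line(xs, ys)
print("k=%f,b=%f" % (k, b))
```

Both values should end up close to 0.1 and 0.2, the same parameters the TensorFlow version is fitting.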

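- The .data-00000-of-00001 and .index files hold the values of the saved variables (weights, biases and so on); the .index file records where each tensor sits in the .data file.
- The .meta file holds the graph structure.
- The checkpoint file is a plain-text file that records the most recent checkpoint and the list of other checkpoints still kept.
- When creating the saver you can specify which variables to store; if you specify none, all of them are saved.
- When creating the saver you can set how many checkpoints to keep; with max_to_keep=4, only the 4 most recent checkpoints are kept.
- saver.save() accepts a global_step argument that records which step the model was saved at.
- import_meta_graph takes the name of the .meta file. For restore, tf.train.latest_checkpoint reads the checkpoint file, so pass it only the directory that contains checkpoint, not the path of the checkpoint file itself; otherwise it returns None and restore fails with "ValueError: Can't load save_path when it is None."
- It is best to give a tensor a name when you define it, as with name='k' in the code above.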
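The file-naming and max_to_keep rules in the notes above can be mimicked with a small toy model. This is only a sketch of the bookkeeping in plain Python, not TensorFlow's implementation; checkpoint_prefix, checkpoint_files and keep_latest are hypothetical helper names, and a single data shard is assumed.

```python
def checkpoint_prefix(base, global_step=None):
    """Return the checkpoint prefix; the step is appended after a dash when given."""
    return base if global_step is None else "%s-%d" % (base, global_step)


def checkpoint_files(prefix):
    """File names written for one checkpoint prefix (single data shard assumed)."""
    return [prefix + ".meta", prefix + ".index", prefix + ".data-00000-of-00001"]


def keep_latest(prefixes, max_to_keep):
    """Keep only the max_to_keep most recent prefixes, oldest first."""
    return prefixes[-max_to_keep:]


saved = []
for step in range(0, 50, 10):  # pretend to save at steps 0, 10, 20, 30, 40
    saved.append(checkpoint_prefix("models/y=x2", global_step=step))
    saved = keep_latest(saved, max_to_keep=4)

print(saved)
print(checkpoint_files(saved[-1]))
```

After five saves with max_to_keep=4, the checkpoint from step 0 has dropped out of the list, which is the behaviour the notes describe.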

Loading a model

Reading back specific variables

def load_line_regression_model():
    with tf.Session() as sess:
        # Load the graph; import_meta_graph takes the name of the .meta file
        saver = tf.train.import_meta_graph("models/line_regression.meta")
        # latest_checkpoint reads the checkpoint file, so pass only the
        # directory that contains it, not the checkpoint file itself
        saver.restore(sess, tf.train.latest_checkpoint("models"))
        # Values are fetched by tensor name
        k = sess.run("k:0")
        b = sess.run("b:0")
        # k = 0.099989, b = 0.200006
        print("k = %f, b = %f" % (k, b))
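The strings "k:0" and "b:0" are tensor names: the name of the operation that produces the tensor, a colon, and the index of that operation's output. A tiny sketch of that convention in plain Python follows; split_tensor_name is a hypothetical helper for illustration, not a TensorFlow function.

```python
def split_tensor_name(tensor_name):
    """Split a name such as "k:0" into (operation name, output index)."""
    op_name, _, index = tensor_name.rpartition(":")
    if not op_name or not index.isdigit():
        raise ValueError("expected '<op_name>:<output_index>', got %r" % tensor_name)
    return op_name, int(index)


print(split_tensor_name("k:0"))
print(split_tensor_name("predict:0"))
```

This is why the variable created with name="k" is fetched as "k:0" rather than just "k".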

Using the model for estimation and prediction

def non_line_regression():
    """
    desc:
        Non-linear regression: y = x * x
    """
    # Generate the data
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    noise = np.random.normal(0, 0.02, x_data.shape)
    y_data = np.square(x_data) + noise
    # Define two placeholders
    x = tf.placeholder(tf.float32, [None, 1], name="x")
    y = tf.placeholder(tf.float32, [None, 1], name="y")
    # Hidden layer of the network
    weight_L1 = tf.Variable(tf.random_normal([1, 10]))
    bias_L1 = tf.Variable(tf.zeros([1, 10]))
    output_L1 = tf.matmul(x, weight_L1) + bias_L1
    L1 = tf.nn.tanh(output_L1)
    # Output layer of the network
    weight_L2 = tf.Variable(tf.random_normal([10, 1]))
    bias_L2 = tf.Variable(tf.zeros([1, 1]))
    output_L2 = tf.matmul(L1, weight_L2) + bias_L2
    prediction = tf.nn.tanh(output_L2, name="predict")
    loss = tf.reduce_mean(tf.square(y - prediction))
    train = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
    saver = tf.train.Saver(max_to_keep=1)
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for i in range(2000):
            sess.run(train, feed_dict={x: x_data, y: y_data})
            if i % 100 == 0:
                loss_value = sess.run(loss, feed_dict={x: x_data, y: y_data})
                print("step:%d,loss:%f" % (i, loss_value))
                saver.save(sess, "models/non_line_regression")
        prediction_value = sess.run(prediction, feed_dict={x: x_data})
        plt.figure()
        plt.scatter(x_data, y_data, color="blue", marker="o")
        plt.plot(x_data, prediction_value, color="r")
        plt.show()

def load_non_line_regression():
    """
    desc:
        Load the model produced by non_line_regression and predict with it
    """
    # Generate the data
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    y_data = np.square(x_data)
    with tf.Session() as sess:
        saver = tf.train.import_meta_graph("models/non_line_regression.meta")
        saver.restore(sess, tf.train.latest_checkpoint("models"))
        graph = tf.get_default_graph()
        # These are the two placeholders defined at training time
        x = graph.get_tensor_by_name('x:0')
        y = graph.get_tensor_by_name('y:0')
        # This is the final output of the network
        predict = graph.get_tensor_by_name("predict:0")
        # Feeding x is enough to get the predictions
        predict_value = sess.run(predict, feed_dict={x: x_data})
        plt.figure()
        plt.scatter(x_data, y_data, color="blue", marker="o", label="actual")
        plt.scatter(x_data, predict_value, color="r", label="pred")
        plt.legend()
        plt.show()

Full code

# TensorFlow: saving and loading models
# Written against the TensorFlow 1.x API (tf.Session, tf.placeholder, tf.train.Saver)
import os
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data
import numpy as np
import tensorflow as tf
import warnings
import math

warnings.filterwarnings("ignore")
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

def line_regression():
    """
    desc:
        Fit the one-variable linear model y = 0.1x + 0.2 and save it to the models folder
    """
    x_data = np.random.rand(100)
    y_data = x_data * 0.1 + 0.2
    # Plot the data
    plt.figure()
    plt.scatter(x_data, y_data, color="red", marker="x", label="actual")
    # Build the linear model and initialise its parameters
    b = tf.Variable(0.0, name="b")
    k = tf.Variable(0.0, name="k")
    y = x_data * k + b
    # Quadratic cost function (mean squared error)
    loss = tf.reduce_mean(tf.square(y - y_data))
    # Gradient-descent optimizer for the loss, learning rate 0.2
    optimizer = tf.train.GradientDescentOptimizer(0.2)
    # Minimise the cost function
    train = optimizer.minimize(loss)
    # Keep only the most recent checkpoint
    saver = tf.train.Saver(max_to_keep=1)
    init = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init)
        for i in range(201):
            sess.run(train)
            if i % 20 == 0:
                k_value, b_value = sess.run([k, b])
                # Print k and b
                print("%d,k=%f,b=%f" % (i, k_value, b_value))
                # global_step (the iteration count) would be appended to the
                # file name; it is not passed here, so the name has no suffix
                saver.save(sess, "models/line_regression")
        # Get the predicted values
        prediction_value = y.eval()
        # Plot the fit for comparison
        plt.plot(x_data, prediction_value, color="blue", label="pred")
        plt.legend()
        plt.show()

def load_line_regression_model():
    """
    desc:
        Load the model trained by line_regression and read its tensors
    """
    with tf.Session() as sess:
        # Load the graph; import_meta_graph takes the name of the .meta file
        saver = tf.train.import_meta_graph("models/line_regression.meta")
        # latest_checkpoint reads the checkpoint file, so pass only the
        # directory that contains it, not the checkpoint file itself
        saver.restore(sess, tf.train.latest_checkpoint("models"))
        # Fetch the variable values by name
        k = sess.run("k:0")
        b = sess.run("b:0")
        print("k = %f, b = %f" % (k, b))

def non_line_regression():
    """
    desc:
        Non-linear regression: y = x * x
    """
    # Generate the data
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    noise = np.random.normal(0, 0.02, x_data.shape)
    y_data = np.square(x_data) + noise
    # Define two placeholders
    x = tf.placeholder(tf.float32, [None, 1], name="x")
    y = tf.placeholder(tf.float32, [None, 1], name="y")
    # Hidden layer of the network
    weight_L1 = tf.Variable(tf.random_normal([1, 10]))
    bias_L1 = tf.Variable(tf.zeros([1, 10]))
    output_L1 = tf.matmul(x, weight_L1) + bias_L1
    L1 = tf.nn.tanh(output_L1)
    # Output layer of the network
    weight_L2 = tf.Variable(tf.random_normal([10, 1]))
    bias_L2 = tf.Variable(tf.zeros([1, 1]))
    output_L2 = tf.matmul(L1, weight_L2) + bias_L2
    prediction = tf.nn.tanh(output_L2, name="predict")
    loss = tf.reduce_mean(tf.square(y - prediction))
    train = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
    saver = tf.train.Saver(max_to_keep=1)
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for i in range(2000):
            sess.run(train, feed_dict={x: x_data, y: y_data})
            if i % 100 == 0:
                loss_value = sess.run(loss, feed_dict={x: x_data, y: y_data})
                print("step:%d,loss:%f" % (i, loss_value))
                saver.save(sess, "models/non_line_regression")
        prediction_value = sess.run(prediction, feed_dict={x: x_data})
        plt.figure()
        plt.scatter(x_data, y_data, color="blue", marker="o")
        plt.plot(x_data, prediction_value, color="r")
        plt.show()

def load_non_line_regression():
    """
    desc:
        Load the model produced by non_line_regression and predict with it
    """
    # Generate the data
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    y_data = np.square(x_data)
    with tf.Session() as sess:
        saver = tf.train.import_meta_graph("models/non_line_regression.meta")
        saver.restore(sess, tf.train.latest_checkpoint("models"))
        graph = tf.get_default_graph()
        # These are the two placeholders defined at training time
        x = graph.get_tensor_by_name('x:0')
        y = graph.get_tensor_by_name('y:0')
        # This is the final output of the network
        predict = graph.get_tensor_by_name("predict:0")
        predict_value = sess.run(predict, feed_dict={x: x_data})
        plt.figure()
        plt.scatter(x_data, y_data, color="blue", marker="o", label="actual")
        plt.scatter(x_data, predict_value, color="r", label="pred")
        plt.legend()
        plt.show()

def train_model(epochs=5000, learning_rate=0.1):
    """
    desc:
        Regression with a DNN; saves the model
    """
    x_data = np.linspace(-1.0, 1.0, 500)[:, np.newaxis]
    noise = np.random.normal(0, 0.02, x_data.shape)
    # The model is y = x * x
    y_data = np.square(x_data) + noise
    # Define two placeholders
    x = tf.placeholder(tf.float32, [None, 1], name="x")
    y = tf.placeholder(tf.float32, [None, 1], name="y")
    input_num = 1
    hidden1_num = 50
    hidden2_num = 10
    output_num = 1
    weights = {"L1": tf.Variable(tf.random_normal([input_num, hidden1_num])),
               "L2": tf.Variable(tf.random_normal([hidden1_num, hidden2_num])),
               "out": tf.Variable(tf.random_normal([hidden2_num, output_num]))}
    bias = {"L1": tf.Variable(tf.zeros([1, hidden1_num])),
            "L2": tf.Variable(tf.zeros([1, hidden2_num])),
            "out": tf.Variable(tf.zeros([1, output_num]))}
    L1 = tf.nn.tanh(tf.matmul(x, weights["L1"]) + bias["L1"])
    L2 = tf.nn.tanh(tf.matmul(L1, weights["L2"]) + bias["L2"])
    predict = tf.nn.tanh(
        tf.matmul(L2, weights["out"]) + bias["out"], name="predict")
    error = y - predict
    loss = tf.reduce_mean(tf.square(error))
    train = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)
    # max_to_keep=1 keeps only the latest checkpoint; each save replaces the previous one
    saver = tf.train.Saver(max_to_keep=1)
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for epoch in range(epochs):
            sess.run(train, feed_dict={x: x_data, y: y_data})
            if epoch % 10 == 0:
                print("step:%d,loss:%f" % (epoch, sess.run(
                    loss, feed_dict={x: x_data, y: y_data})))
                saver.save(sess, "models/y=x2", global_step=epoch)
        predict_value = sess.run(predict, feed_dict={x: x_data})
        plt.figure()
        plt.scatter(x_data, y_data, marker="x", color="red", label="actual")
        plt.plot(x_data, predict_value, color="blue", label="pred")
        plt.legend()
        plt.show()
        value = sess.run(predict, feed_dict={x: [[0.3]]})
        # value has shape (1, 1), so pull out the scalar before formatting
        print("Prediction for 0.3: %f" % value[0][0])

def load_model():
    """
    desc:
        Restore the files written by train_model and test the model
        .meta file: a protocol buffer that stores the complete TensorFlow graph
            (variables, operations, collections)
        checkpoint file: before version 0.11, a binary file holding the values of
            the weights, biases and other variables.
        From version 0.11 on this changed: the values are stored in the .data and
        .index files, and checkpoint records the most recent checkpoint.
    """
    x_data = np.array([[0.2], [0.3], [-0.3], [0.15], [-0.21], [0.22]])
    y_data = np.square(x_data)
    with tf.Session() as sess:
        saver = tf.train.import_meta_graph("models/y=x2-4990.meta")
        saver.restore(sess, tf.train.latest_checkpoint("models"))
        graph = tf.get_default_graph()
        # Get the two placeholders and the prediction tensor
        x = graph.get_tensor_by_name("x:0")
        y = graph.get_tensor_by_name("y:0")
        predict = graph.get_tensor_by_name("predict:0")
        # Run the prediction
        predict_value = sess.run(predict, feed_dict={x: x_data})
        # See how far off each prediction is
        print(np.abs(predict_value - y_data))
        plt.figure()
        plt.scatter(x_data, y_data, marker="x", color="red", label="actual")
        plt.scatter(x_data, predict_value, color="blue", label="pred")
        plt.legend()
        plt.show()

if __name__ == "__main__":
    # train_model()  # run this once first so there is a model to load
    load_model()

You need to train a model before you can load it (run train_model first, then load_model). The result is shown in the figure.
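tf.train.latest_checkpoint works from the plain-text checkpoint file in the directory it is given. The sketch below reads such a file by hand to show what is in it. It is not TensorFlow's own code: read_latest_checkpoint is a hypothetical helper, and the sample file contents are an assumption about what the directory would hold after train_model finishes. One consequence worth keeping in mind: as far as I can tell, the three regression examples in this post all save into the same models directory, so that file only remembers whichever model was saved last.

```python
import os
import re
import tempfile


def read_latest_checkpoint(checkpoint_dir):
    """Return the newest checkpoint prefix recorded in <dir>/checkpoint, or None."""
    state_file = os.path.join(checkpoint_dir, "checkpoint")
    if not os.path.isfile(state_file):
        return None
    with open(state_file) as f:
        for line in f:
            match = re.match(r'\s*model_checkpoint_path:\s*"(.*)"\s*$', line)
            if match:
                path = match.group(1)
                # Relative entries are resolved against the checkpoint directory
                return path if os.path.isabs(path) else os.path.join(checkpoint_dir, path)
    return None


# Build a throwaway directory with an assumed checkpoint file to try it on
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "checkpoint"), "w") as f:
    f.write('model_checkpoint_path: "y=x2-4990"\n')
    f.write('all_model_checkpoint_paths: "y=x2-4990"\n')

print(read_latest_checkpoint(demo_dir))
print(read_latest_checkpoint(os.path.join(demo_dir, "missing")))
```

The None case corresponds to the "Can't load save_path when it is None" error mentioned earlier: there is nothing for restore to load when no checkpoint file is found in the directory.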

The handwritten-digit (MNIST) example should work the same way; the code is as follows

# Train on MNIST and save the model, then use the model to predict
# A deeper look at the MNIST dataset
import os
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data
import numpy as np
import tensorflow as tf
import warnings
import math

warnings.filterwarnings("ignore")
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

def addConnect(input, input_size, output_size, activate_function=None):
    """
    Args:
        input: the input data
        input_size: dimension of the input, i.e. the number of columns in one row
        output_size: output dimension, the number of columns this layer produces per row
        activate_function: activation function
    desc:
        Build one fully connected layer
    """
    weight = tf.Variable(tf.truncated_normal(
        [input_size, output_size], stddev=0.1))
    bias = tf.Variable(tf.zeros([1, output_size]))
    y = tf.add(tf.matmul(input, weight), bias)
    if activate_function is None:
        return y
    else:
        return activate_function(y)

def drawDigit(position, image, title, isTrue=True):
    """
    desc:
        Wrapper around plt for drawing one image
    args:
        position: which plt.subplot position to draw in
        image: a 1 * 784 ndarray
        title: title of the subplot
        isTrue: whether the prediction was correct
    """
    plt.subplot(*position)
    plt.imshow(image.reshape(-1, 28), cmap="gray_r")
    plt.axis("off")
    if not isTrue:
        plt.title(title, color="red")
    else:
        plt.title(title)

def batchDraw(batch_size):
    """
    desc:
        Show a batch of images on one plt figure
    args:
        batch_size: how many images to show at once; should be a perfect square
    """
    # mnist = input_data.read_data_sets("MNIST_data", one_hot=True)
    # Take batch_size samples from the training set
    images, labels = mnist.train.next_batch(batch_size)
    # Number of images, which is just batch_size
    image_number = images.shape[0]
    # To lay the images out in one big square we need sqrt(batch_size) rows and columns
    row_number = math.ceil(image_number ** 0.5)
    column_number = row_number
    # Set the figure size
    plt.figure(figsize=(row_number, column_number))
    for i in range(row_number):
        for j in range(column_number):
            index = i * column_number + j
            if index < image_number:
                position = (row_number, column_number, index+1)
                image = images[index]
                title = '%d' % (np.argmax(labels[index]))
                drawDigit(position, image, title)

def predictByDNN(epochs=1000, batch_size=100):
    """
    desc:
        Recognise the MNIST digits with a neural network and softmax
    args:
        epochs: number of training steps
        batch_size: how many images to train on per batch
    """
    x = tf.placeholder(tf.float32, [None, 28*28], name="x")
    y = tf.placeholder(tf.float32, [None, 10], name="y")
    connect1 = addConnect(x, 28*28, 300, tf.nn.relu)
    connect2 = addConnect(connect1, 300, 64, tf.nn.relu)
    predict_y = addConnect(connect2, 64, 10)
    predict_y = tf.nn.softmax(predict_y, name="predict")
    loss = tf.reduce_mean(-tf.reduce_sum(y * tf.log(predict_y), 1))
    optimizer = tf.train.GradientDescentOptimizer(0.5)
    train = optimizer.minimize(loss)
    # Build the accuracy ops once, so the loop does not add new nodes to the graph
    correct_prediction = tf.equal(
        tf.argmax(predict_y, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(
        tf.cast(correct_prediction, tf.float32))
    saver = tf.train.Saver(max_to_keep=1)
    with tf.Session() as sess:
        # Initialise the variables
        sess.run(tf.global_variables_initializer())
        for epoch in range(epochs):
            images, labels = mnist.train.next_batch(batch_size)
            sess.run(train, feed_dict={x: images, y: labels})
            if epoch % 50 == 0:
                accuracy_value = sess.run(
                    accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
                print('step:%d accuracy:%.4f' % (epoch, accuracy_value))
                saver.save(sess, "models/mnist/mnist_model")
        # Visualise some predictions on the test set
        images, labels = mnist.test.next_batch(batch_size)
        predict_labels = sess.run(predict_y, feed_dict={x: images, y: labels})
        image_number = images.shape[0]
        row_number = math.ceil(image_number ** 0.5)
        column_number = row_number
        plt.figure(figsize=(row_number + 10, column_number + 10))
        for i in range(row_number):
            for j in range(column_number):
                index = i * column_number + j
                if index < image_number:
                    position = (row_number, column_number, index+1)
                    image = images[index]
                    actual = np.argmax(labels[index])
                    predict = np.argmax(predict_labels[index])
                    isTrue = actual == predict
                    title = 'act:%d--pred:%d' % (actual, predict)
                    drawDigit(position, image, title, isTrue)

def load_mnist_model(batch_size):

images, labels = mnist.test.next_batch(batch_size)

with tf.Session() as sess:

saver = tf.train.import_meta_graph("models/mnist/mnist_model.meta")

saver.restore(sess, tf.train.latest_checkpoint("models/mnist"))

graph = tf.get_default_graph()

# 得到两个placeholder 和 预测值

x = graph.get_tensor_by_name("x:0")

y = graph.get_tensor_by_name("y:0")

predict = graph.get_tensor_by_name("predict:0")

predict_values = sess.run(predict, feed_dict={x: images})

for i in range(batch_size):

predict_value = np.argmax(predict_values[i])

label = np.argmax(labels[i])

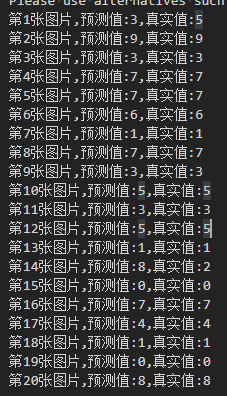

print("第%d张图片,预测值:%d,真实值:%d" % (i+1, predict_value, label))

if __name__ == "__main__":

# predictByDNN()

# plt.show()

load_mnist_model(20)
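For reference, the loss and accuracy that predictByDNN computes can be written out in plain Python. This is a sketch, not the TensorFlow code: cross_entropy and accuracy are hypothetical helpers, the sample numbers are made up, and the eps clipping is my own addition. It is there because log(0) is undefined, so the unclipped tf.log(predict_y) in the script can produce NaN or inf if a predicted probability ever reaches exactly 0.

```python
import math


def cross_entropy(labels, probs, eps=1e-10):
    """Mean over samples of -sum(y * log(p)), with p clipped away from 0."""
    total = 0.0
    for y_row, p_row in zip(labels, probs):
        total += -sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return total / len(labels)


def accuracy(labels, probs):
    """Fraction of samples whose most probable class matches the one-hot label."""
    def argmax(row):
        return max(range(len(row)), key=row.__getitem__)
    hits = sum(argmax(y) == argmax(p) for y, p in zip(labels, probs))
    return hits / len(labels)


# Two made-up samples with three classes each
labels = [[1, 0, 0], [0, 1, 0]]
probs = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1]]
print(cross_entropy(labels, probs))
print(accuracy(labels, probs))
```

The first sample is classified correctly and the second is not, so the accuracy here is 0.5; the same argmax comparison is what the script applies to the test set every 50 steps.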