2. OpenCV study notes: installing and configuring OpenCV + contrib on macOS

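Installing and configuring OpenCV + contrib on a Mac cost me a lot of trial and error. On Windows you can take a library someone else has already compiled and simply configure it in Visual Studio. On a Mac the final setup is done in the terminal, and the build locates the compile paths automatically, so you have to go through every step yourself.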

First, a few problems I ran into:

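I. During configure, some files can fail to download. They come from overseas servers, so the download can be slow enough to fail. The main culprit is the ippicv package. Some tutorials suggest downloading the files manually and putting them in the root directory so that configure passes, but that did not work for me. In the end the automatic download succeeded, just slowly. The download locations for these files: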

Download links for ippicv and the other files

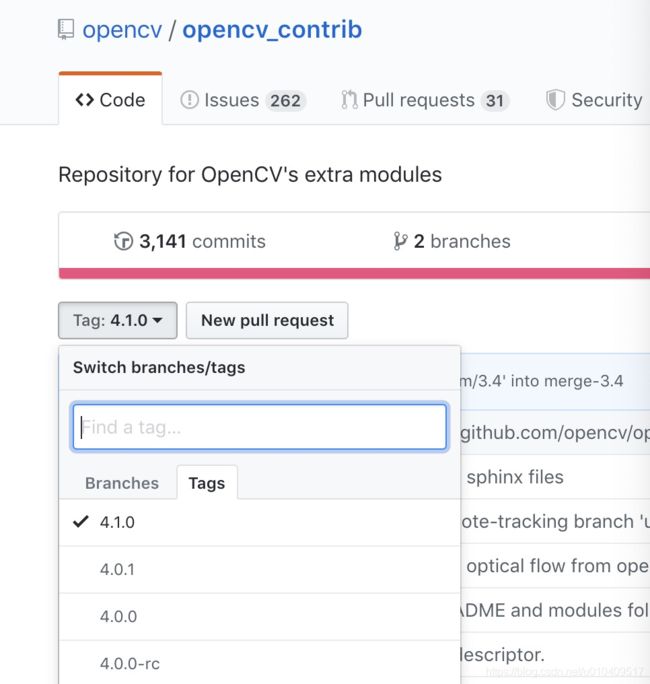

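II. After the first configure pass you need to add the contrib modules. The contrib version must match your OpenCV version; you can pick it from the repository's branches. I used 4.1.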

opencv_contrib library

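III. After the first configure, enable OPENCV_ENABLE_NONFREE and BUILD_opencv_world. The SURF detector used in the test program is a non-free algorithm, and OpenCV 4.x only builds it when OPENCV_ENABLE_NONFREE is on.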


Now for the actual tutorial:

1. Install Homebrew, which is needed later for the terminal setup:

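```shell
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
```

(Homebrew has since replaced the original `ruby -e "$(curl ... /install/master/install)"` installer with this Bash script.)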

2. Download CMake

CMake download link

3. Download OpenCV and the matching opencv_contrib

I used 4.1.0; choose the source package.

OpenCV download

opencv_contrib

4. Configure with CMake

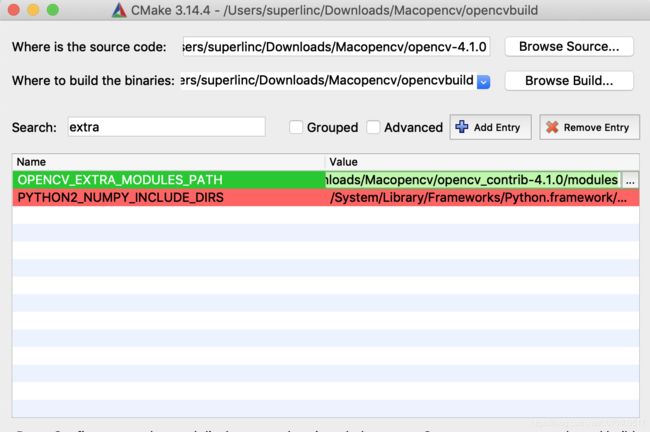

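Set "Where is the source code" to the OpenCV source you just downloaded. Set "Where to build the binaries" to a new directory you create for the build.

Click Configure. Downloading the ippicv package takes a while. Wait for "Configuring done", then check OPENCV_ENABLE_NONFREE and BUILD_opencv_world.

Set the extra modules path (OPENCV_EXTRA_MODULES_PATH) to the modules folder of the downloaded contrib, i.e. opencv_contrib/modules.

Click Configure again. After "Configuring done" the red entries disappear. Then click Generate and wait for "Generating done".

CMake configuration is complete: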

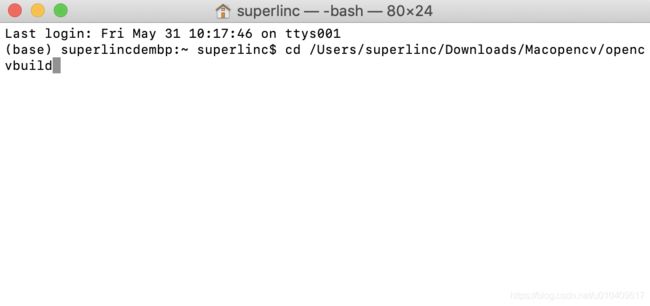

5. Open a terminal and `cd` into the build directory you just generated.

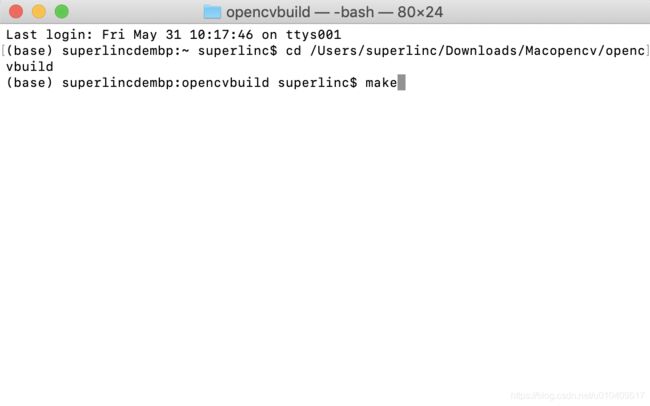

Run `make` to start compiling. This takes a very long time.


Finally run `sudo make install`, enter your password and wait again. OpenCV + contrib is now installed.

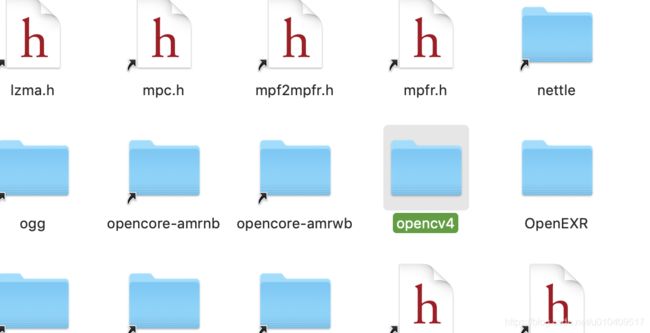

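The libraries are in /usr/local/lib. In Finder, press Command+Shift+G and enter /usr/local/lib to go there.

Because BUILD_opencv_world was enabled, all modules are merged into the single opencv_world library, so later you only need to link that one.

The headers are in /usr/local/include/opencv4.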

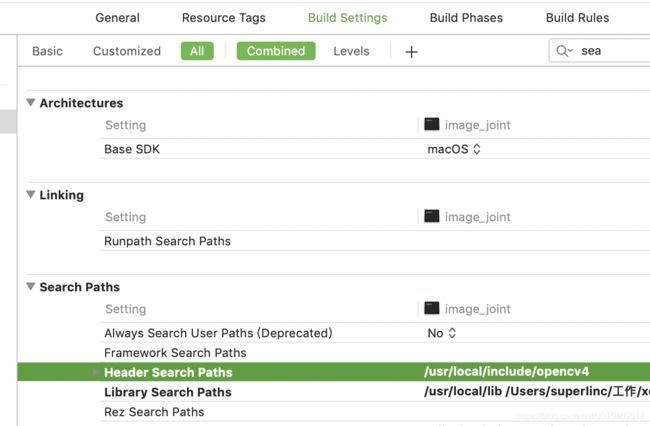

6. Configure OpenCV in Xcode

In Build Settings, type "search" in the filter box. Set Header Search Paths to /usr/local/include/opencv4 and Library Search Paths to /usr/local/lib.

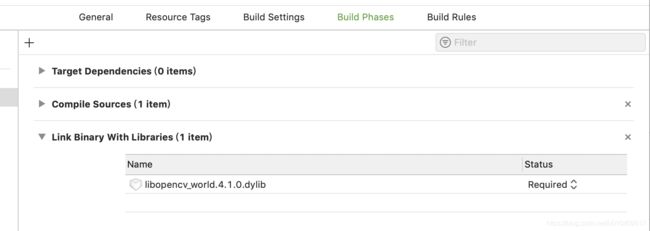

In Build Phases > Link Binary With Libraries, add the opencv_world library file from /usr/local/lib.

7. Test: panorama stitching

Just change the two image paths and run it.

```cpp
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/imgcodecs.hpp"
#include "opencv2/features2d.hpp"
#include "opencv2/calib3d.hpp"     // findHomography
#include "opencv2/xfeatures2d.hpp" // SURF (requires OPENCV_ENABLE_NONFREE)
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;
using namespace cv::xfeatures2d;

void OptimizeSeam(Mat& img1, Mat& trans, Mat& dst);

// The four corners of the right image after it has been warped by the homography
typedef struct
{
    Point2f left_top;
    Point2f left_bottom;
    Point2f right_top;
    Point2f right_bottom;
} four_corners_t;

four_corners_t corners;

// Map (x, y) through H in homogeneous coordinates, then divide by w
static Point2f MapPoint(const Mat& H, double x, double y)
{
    Mat v = (Mat_<double>(3, 1) << x, y, 1.0); // column vector (x, y, 1)
    Mat r = H * v;                               // transformed (x', y', w)
    return Point2f(static_cast<float>(r.at<double>(0) / r.at<double>(2)),
                   static_cast<float>(r.at<double>(1) / r.at<double>(2)));
}

// Compute where the four corners of src land after applying H
void CalcCorners(const Mat& H, const Mat& src)
{
    corners.left_top     = MapPoint(H, 0, 0);               // top-left (0, 0, 1)
    corners.left_bottom  = MapPoint(H, 0, src.rows);        // bottom-left (0, rows, 1)
    corners.right_top    = MapPoint(H, src.cols, 0);        // top-right (cols, 0, 1)
    corners.right_bottom = MapPoint(H, src.cols, src.rows); // bottom-right (cols, rows, 1)
}
```
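`CalcCorners` works in homogeneous coordinates. Each corner (x, y) is lifted to (x, y, 1) and multiplied by H to give (x', y', w). Dividing by w then gives the pixel position (x'/w, y'/w). The same arithmetic can be sketched without OpenCV. `Homography` and `applyHomography` are hypothetical names, and H is assumed to be stored row-major in a plain array rather than a `cv::Mat`:

```cpp
#include <array>
#include <utility>

// Hypothetical type: a row-major 3x3 homography in a plain array (not cv::Mat)
using Homography = std::array<std::array<double, 3>, 3>;

// Hypothetical helper: map (x, y) through H by multiplying (x, y, 1) by H,
// then dividing by the third (w) component to get back to pixel coordinates
std::pair<double, double> applyHomography(const Homography& H, double x, double y)
{
    double xp = H[0][0] * x + H[0][1] * y + H[0][2];
    double yp = H[1][0] * x + H[1][1] * y + H[1][2];
    double w  = H[2][0] * x + H[2][1] * y + H[2][2];
    return {xp / w, yp / w};
}
```

For example, a pure 100-pixel shift `{{1, 0, 100}, {0, 1, 0}, {0, 0, 1}}` maps (0, 0) to (100, 0). The matrix `{{1, 0, 0}, {0, 1, 0}, {0, 0, 2}}` maps (4, 6) to (2, 3), which is why the division by w cannot be skipped for a true perspective matrix.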

```cpp
int main(int argc, char *argv[])
{
    //Mat image01 = imread("input1.JPG", IMREAD_COLOR); // right image
    //Mat image02 = imread("input3.JPG", IMREAD_COLOR); // left image
    // Change these two paths to your own images
    Mat image01 = imread("/Users/superlinc/工作/xcode work/image_joint/2.png", IMREAD_COLOR); // right image
    Mat image02 = imread("/Users/superlinc/工作/xcode work/image_joint/1.png", IMREAD_COLOR); // left image
    if (image01.empty() || image02.empty())
    {
        cerr << "Failed to load the input images" << endl;
        return -1;
    }
    imshow("p1", image01);
    imshow("p2", image02);

    // Convert to grayscale (imread returns BGR, not RGB)
    Mat image1, image2;
    cvtColor(image01, image1, COLOR_BGR2GRAY);
    cvtColor(image02, image2, COLOR_BGR2GRAY);

    // Detect SURF keypoints (Hessian threshold 2000)
    Ptr<SURF> surf = SURF::create(2000);
    //SurfFeatureDetector Detector(2000); // old OpenCV 2.x API
    vector<KeyPoint> keyPoint1, keyPoint2;
    surf->detect(image1, keyPoint1);
    surf->detect(image2, keyPoint2);

    // Compute descriptors for the matching step below
    //SurfDescriptorExtractor Descriptor; // old OpenCV 2.x API
    Mat imageDesc1, imageDesc2;
    surf->compute(image1, keyPoint1, imageDesc1);
    surf->compute(image2, keyPoint2, imageDesc2);

    // Train a FLANN matcher on image 1 and query it with image 2
    FlannBasedMatcher matcher;
    vector<vector<DMatch> > matchePoints;
    vector<DMatch> GoodMatchePoints;
    vector<Mat> train_desc(1, imageDesc1);
    matcher.add(train_desc);
    matcher.train();
    matcher.knnMatch(imageDesc2, matchePoints, 2); // the ratio test only needs the 2 nearest neighbours
    cout << "total match points: " << matchePoints.size() << endl;

    // Lowe's ratio test: keep a match only if it is clearly better than the runner-up
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].size() >= 2 &&
            matchePoints[i][0].distance < 0.4 * matchePoints[i][1].distance)
        {
            GoodMatchePoints.push_back(matchePoints[i][0]);
        }
    }

    Mat first_match;
    drawMatches(image02, keyPoint2, image01, keyPoint1, GoodMatchePoints, first_match);
    imshow("first_match ", first_match);

    // queryIdx indexes image 2 (left), trainIdx indexes image 1 (right)
    vector<Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        imagePoints2.push_back(keyPoint2[GoodMatchePoints[i].queryIdx].pt);
        imagePoints1.push_back(keyPoint1[GoodMatchePoints[i].trainIdx].pt);
    }

    // 3x3 homography mapping image 1 onto image 2, i.e. from the right image to the left one
    Mat homo;
    if (imagePoints1.size() >= 4) // a homography needs at least 4 point pairs
        homo = findHomography(imagePoints1, imagePoints2, RANSAC);
    if (homo.empty())
    {
        cerr << "Not enough good matches to estimate a homography" << endl;
        return -1;
    }
    // getPerspectiveTransform also returns a perspective matrix, but it takes exactly 4 point
    // pairs and does no outlier rejection, so the result is slightly worse:
    //Mat homo = getPerspectiveTransform(imagePoints1, imagePoints2);
    cout << "Homography:\n" << homo << endl << endl;

    // Corner coordinates of the registered (warped) right image
    CalcCorners(homo, image01);
    cout << "left_top:" << corners.left_top << endl;
    cout << "left_bottom:" << corners.left_bottom << endl;
    cout << "right_top:" << corners.right_top << endl;
    cout << "right_bottom:" << corners.right_bottom << endl;

    // Registration: warp the right image into the left image's frame
    Mat imageTransform1, imageTransform2;
    int warp_width = static_cast<int>(MAX(corners.right_top.x, corners.right_bottom.x));
    warpPerspective(image01, imageTransform1, homo, Size(warp_width, image02.rows));
    //warpPerspective(image01, imageTransform2, adjustMat*homo, Size(image02.cols*1.3, image02.rows*1.8));
    imshow("warped by homography", imageTransform1);

    // Create the stitched image; its size must be known in advance.
    // Width = right-most warped corner (and at least the width of the left image)
    int dst_width = MAX(imageTransform1.cols, image02.cols);
    int dst_height = image02.rows;
    Mat dst(dst_height, dst_width, CV_8UC3, Scalar::all(0));
    imageTransform1.copyTo(dst(Rect(0, 0, imageTransform1.cols, imageTransform1.rows)));
    image02.copyTo(dst(Rect(0, 0, image02.cols, image02.rows)));
    imshow("b_dst", dst);

    OptimizeSeam(image02, imageTransform1, dst);
    imshow("dst", dst);
    waitKey();
    return 0;
}
```
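The loop marked "Lowe's ratio test" in `main` keeps a match only when its nearest neighbour is clearly closer than the second nearest. Ambiguous matches, where two candidates are similarly close, are dropped before `findHomography` sees them. The sketch below shows that filter on its own. `Match` and `ratioTest` are hypothetical names, `Match` is a minimal stand-in for `cv::DMatch`, and each inner vector is assumed to be sorted by ascending distance:

```cpp
#include <vector>

// Hypothetical stand-in for cv::DMatch with only the fields used here
struct Match
{
    int queryIdx;
    int trainIdx;
    float distance;
};

// Hypothetical helper: for each query, keep the best candidate only if its distance
// is below ratio * (second-best distance). knn[i] is assumed sorted by ascending distance.
std::vector<Match> ratioTest(const std::vector<std::vector<Match>>& knn, double ratio)
{
    std::vector<Match> good;
    for (const auto& candidates : knn)
    {
        if (candidates.size() < 2)
            continue; // no runner-up to compare against, so skip it
        if (candidates[0].distance < ratio * candidates[1].distance)
            good.push_back(candidates[0]);
    }
    return good;
}
```

With ratio 0.4 (the value used in `main`), the distance pair 0.1 and 0.5 passes because 0.1 < 0.2. The pair 0.3 and 0.4 is rejected. Raising the ratio keeps more matches, but they are less reliable.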

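```cpp
// Blend the overlap of the two images so that the seam looks natural
void OptimizeSeam(Mat& img1, Mat& trans, Mat& dst)
{
    // Left edge of the overlap: the left-most x of the warped right image (clamped to 0)
    int start = MAX(0, static_cast<int>(MIN(corners.left_top.x, corners.left_bottom.x)));
    double processWidth = img1.cols - start; // width of the overlap
    int rows = dst.rows;
    int cols = MIN(img1.cols, trans.cols);   // number of pixel columns (each pixel is 3 bytes)
    double alpha = 1;                        // weight of the img1 pixel

    for (int i = 0; i < rows; i++)
    {
        const uchar* p = img1.ptr<uchar>(i); // start of row i
        const uchar* t = trans.ptr<uchar>(i);
        uchar* d = dst.ptr<uchar>(i);
        for (int j = start; j < cols; j++)
        {
            // Where trans has no pixel (pure black), copy img1 unchanged
            if (t[j * 3] == 0 && t[j * 3 + 1] == 0 && t[j * 3 + 2] == 0)
            {
                alpha = 1;
            }
            else
            {
                // img1's weight falls linearly with the distance from the overlap's
                // left edge; in my experiments this works well
                alpha = (processWidth - (j - start)) / processWidth;
            }
            d[j * 3]     = saturate_cast<uchar>(p[j * 3]     * alpha + t[j * 3]     * (1 - alpha));
            d[j * 3 + 1] = saturate_cast<uchar>(p[j * 3 + 1] * alpha + t[j * 3 + 1] * (1 - alpha));
            d[j * 3 + 2] = saturate_cast<uchar>(p[j * 3 + 2] * alpha + t[j * 3 + 2] * (1 - alpha));
        }
    }
}
```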
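The blending rule in `OptimizeSeam` is a linear ramp, also called feathering, across the overlap. There the left image's weight falls from 1 toward 0 while the warped right image's weight rises by the same amount. Where the warped image is black (no data), the left pixel is kept as-is. A dependency-free sketch of the per-channel arithmetic follows. `leftWeight` and `blendChannel` are hypothetical helper names, and 8-bit channels are assumed:

```cpp
#include <cmath>

// Hypothetical helper: weight of the left image at column j, for an overlap that
// starts at column `start` and is `width` wide (1 at the left edge, falling linearly)
double leftWeight(int j, int start, double width)
{
    return (width - (j - start)) / width;
}

// Hypothetical helper: blend one 8-bit channel. If the right pixel is empty
// (all channels 0 in the warped image), the left value is kept unchanged.
unsigned char blendChannel(unsigned char left, unsigned char right, double alpha, bool rightEmpty)
{
    if (rightEmpty)
        alpha = 1.0;
    double v = left * alpha + right * (1.0 - alpha); // stays in [0, 255] for alpha in [0, 1]
    return static_cast<unsigned char>(std::lround(v)); // round to the nearest integer
}
```

Consider an overlap that starts at column 100 and is 50 pixels wide. The left weight is 1.0 at column 100 and 0.5 at column 125, so a left value of 200 and a right value of 100 blend to 150 there. This sketch rounds to the nearest integer rather than truncating.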

Test result