OpenCV panorama stitching: source code walkthrough and modifications

A good tutorial: http://kushalvyas.github.io/stitching.html

`Stitcher::Status status = stitcher.stitch(imgs, pano);` is the top-level call. It calls the following functions:

```cpp
Status status = estimateTransform(images); // 1. estimate the camera transforms and intrinsics
if (status != OK) return status;
return composePanorama(pano);              // 2. compose the panorama
```

1. `Status status = estimateTransform(images);` calls the following functions:

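```cpp
if ((status = matchImages()) != OK) return status;          // 1.1
if ((status = estimateCameraParams()) != OK) return status; // 1.2
```

1.1 `matchImages()` first computes work_scale. This is the factor by which each image is resized so that its area is at most work_megapix × 10^6 pixels. Images that are already smaller are never enlarged. work_megapix defaults to 0.6; in `Stitcher` the value is stored in `registr_resol_`.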
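Both work_scale and seam_scale use the same formula. Here is a standalone sketch; `computeScale` is a hypothetical helper, not an OpenCV function. It mirrors the `std::min(1.0, std::sqrt(megapix * 1e6 / area))` expression used in the source.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Scale factor that shrinks a width x height image so its area is at most
// megapix * 1e6 pixels. Images that are already small enough are never
// enlarged (the std::min with 1.0).
double computeScale(double megapix, int width, int height) {
    assert(width > 0 && height > 0);
    const double area = static_cast<double>(width) * height;
    return std::min(1.0, std::sqrt(megapix * 1e6 / area));
}
```

Take a 4000×3000 photo with work_megapix = 0.6. Its scale is sqrt(0.05), about 0.224, so registration runs on roughly 894×671 pixels.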

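```cpp
work_scale_ = std::min(1.0, std::sqrt(registr_resol_ * 1e6 / full_img.size().area()));
```

Next, seam_scale is computed the same way. It limits the image area to seam_megapix × 10^6 pixels (seam_megapix defaults to 0.1; here it is `seam_est_resol_`). seam_work_aspect is not used yet at this stage.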

```cpp
seam_scale_ = std::min(1.0, std::sqrt(seam_est_resol_ * 1e6 / full_img.size().area()));
```

Feature points are then computed and stored in the `features_` array:

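```cpp
(*features_finder_)(img, features_[i]);
```

Next the images are matched. A union-find (disjoint-set) structure works out which images are connected by matches. Images that do not belong to the same panorama are discarded.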
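The union-find idea can be sketched as follows. `DisjointSets` and `biggestComponent` are hypothetical stand-ins for `leaveBiggestComponent`. They assume an image pair is linked when its match confidence exceeds conf_thresh. As far as I know, the real function also trims `features_` and `pairwise_matches_` down to the kept images, which is omitted here.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Minimal disjoint-set (union-find) with path halving.
struct DisjointSets {
    std::vector<int> parent;
    explicit DisjointSets(int n) : parent(n) { std::iota(parent.begin(), parent.end(), 0); }
    int find(int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];  // path halving
            x = parent[x];
        }
        return x;
    }
    void unite(int a, int b) { parent[find(a)] = find(b); }
};

// confidence is an n x n row-major matrix (confidence[i*n+j] for pair i,j).
// Pairs above conf_thresh are joined; the indices of the largest component
// are returned in ascending order.
std::vector<int> biggestComponent(const std::vector<double>& confidence, int n, double conf_thresh) {
    assert(static_cast<int>(confidence.size()) == n * n);
    DisjointSets sets(n);
    for (int i = 0; i < n; ++i)
        for (int j = i + 1; j < n; ++j)
            if (confidence[i * n + j] > conf_thresh) sets.unite(i, j);
    std::vector<int> size(n, 0);
    for (int i = 0; i < n; ++i) ++size[sets.find(i)];
    const int root = static_cast<int>(std::max_element(size.begin(), size.end()) - size.begin());
    std::vector<int> indices;
    for (int i = 0; i < n; ++i)
        if (sets.find(i) == root) indices.push_back(i);
    return indices;
}
```

With five images where only the pairs 0-1, 1-2 and 3-4 are confident, images 0, 1 and 2 are kept. Images 3 and 4 form a separate, smaller panorama.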

```cpp
(*features_matcher_)(features_, pairwise_matches_, matching_mask_);
...
indices_ = stitcher::leaveBiggestComponent(features_, pairwise_matches_, (float)conf_thresh_);
```

1.2 Roughly estimate the camera parameters (focal length) from the homographies:

```cpp
if ((status = estimateCameraParams()) != OK) return status;
```

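1.2.1 Focal length estimation. For every pair of images matched above, a focal length f is computed from the pair's homography H whenever the required conditions hold. For example, 4 images give 16 pairwise match entries, from which 8 valid f values were obtained. The mean or median of these f values is then used as the rough focal length estimate for all images.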
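Combining the per-pair estimates can be sketched like this. The sketch assumes each valid pair contributes sqrt(f0 * f1) and that the median is taken. As far as I know, this is what upstream `estimateFocal` does; it also falls back to a size-based guess when too few pairs are valid, which is omitted here. `FocalCandidate` and `medianFocal` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// One focal-length estimate per image pair, as produced by focalsFromHomography.
struct FocalCandidate {
    double f0, f1;
    bool f0_ok, f1_ok;
};

// Keep pairs where both estimates are valid, combine each as sqrt(f0 * f1),
// and return the median. Returns false when no pair is valid.
bool medianFocal(const std::vector<FocalCandidate>& candidates, double& focal) {
    std::vector<double> all;
    for (const FocalCandidate& c : candidates) {
        if (!(c.f0_ok && c.f1_ok)) continue;
        assert(c.f0 > 0 && c.f1 > 0);  // valid focal lengths are positive
        all.push_back(std::sqrt(c.f0 * c.f1));
    }
    if (all.empty()) return false;
    std::sort(all.begin(), all.end());
    const size_t n = all.size();
    focal = (n % 2 == 1) ? all[n / 2] : 0.5 * (all[n / 2 - 1] + all[n / 2]);
    return true;
}
```

The median is a deliberate choice over the mean: one bad homography can produce a wildly wrong f, and the median ignores it.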

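```cpp
stitcher::HomographyBasedEstimator estimator;
if (!estimator(features_, pairwise_matches_, cameras_)) return ERR_HOMOGRAPHY_EST_FAIL;
```

This calls:

```cpp
estimateFocal(features, pairwise_matches, focals);
```

which in turn, for each image pair (i, j), calls:

```cpp
const MatchesInfo &m = pairwise_matches[i*num_images + j]; // each matched pair yields one H; pairwise_matches is an existing input
focalsFromHomography(m.H, f0, f1, f0ok, f1ok); // NOTE: valid only under the assumption that the camera undergoes rotations around its centre only
```

Below is the lowest-level implementation, which computes f from H. I haven't worked out the theory yet and will look at MVG (*Multiple View Geometry*) when I have time.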
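The formulas in `focalsFromHomography` can be derived under these assumptions:

- The principal point is at the origin, so K_i = diag(f_i, f_i, 1). I believe the matcher ensures this by centering the keypoints.
- H maps image 0 to image 1 under a pure rotation, so H ≃ K_1 R K_0^{-1}.

Writing h_0..h_8 for the row-major entries of H:

$$
R \simeq K_1^{-1} H K_0 =
\begin{pmatrix}
h_0 f_0/f_1 & h_1 f_0/f_1 & h_2/f_1\\
h_3 f_0/f_1 & h_4 f_0/f_1 & h_5/f_1\\
h_6 f_0 & h_7 f_0 & h_8
\end{pmatrix}
$$

The first two columns of a rotation are orthogonal and of equal length, which gives two expressions for f_1². The first two rows give two expressions for f_0². For an exact rotation, the two expressions in each pair agree:

$$
f_1^2 = -\frac{h_0h_1 + h_3h_4}{h_6h_7} = \frac{h_0^2 + h_3^2 - h_1^2 - h_4^2}{h_7^2 - h_6^2},
\qquad
f_0^2 = -\frac{h_2h_5}{h_0h_3 + h_1h_4} = \frac{h_5^2 - h_2^2}{h_0^2 + h_1^2 - h_3^2 - h_4^2}
$$

These are v1 and v2 in each half of the function; d1 and d2 are the denominators, and (h7 - h6)(h7 + h6) = h7² - h6². With a noisy H the two values differ, and the code keeps a positive one.

The check below runs on a synthetic pure-rotation homography. `mul3` and `rotationHomography` are hypothetical helpers. `focalsFromH` repeats the arithmetic of the function on a plain array, so no OpenCV is needed.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

// Same arithmetic as focalsFromHomography, on a row-major 3x3 array.
void focalsFromH(const double* h, double& f0, double& f1, bool& f0_ok, bool& f1_ok) {
    double d1, d2, v1, v2;
    f1_ok = true;  // candidates for f1^2 (focal of image 1), from the columns
    d1 = h[6] * h[7];
    d2 = (h[7] - h[6]) * (h[7] + h[6]);
    v1 = -(h[0] * h[1] + h[3] * h[4]) / d1;
    v2 = (h[0] * h[0] + h[3] * h[3] - h[1] * h[1] - h[4] * h[4]) / d2;
    if (v1 < v2) std::swap(v1, v2);
    if (v1 > 0 && v2 > 0) f1 = std::sqrt(std::abs(d1) > std::abs(d2) ? v1 : v2);
    else if (v1 > 0) f1 = std::sqrt(v1);
    else f1_ok = false;

    f0_ok = true;  // candidates for f0^2 (focal of image 0), from the rows
    d1 = h[0] * h[3] + h[1] * h[4];
    d2 = h[0] * h[0] + h[1] * h[1] - h[3] * h[3] - h[4] * h[4];
    v1 = -h[2] * h[5] / d1;
    v2 = (h[5] * h[5] - h[2] * h[2]) / d2;
    if (v1 < v2) std::swap(v1, v2);
    if (v1 > 0 && v2 > 0) f0 = std::sqrt(std::abs(d1) > std::abs(d2) ? v1 : v2);
    else if (v1 > 0) f0 = std::sqrt(v1);
    else f0_ok = false;
}

// out = a * b for row-major 3x3 matrices.
void mul3(const double* a, const double* b, double* out) {
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c) {
            double s = 0;
            for (int k = 0; k < 3; ++k) s += a[r * 3 + k] * b[k * 3 + c];
            out[r * 3 + c] = s;
        }
}

// H = K1 * Ry(yaw) * Rx(pitch) * K0^{-1}, principal point at the origin.
void rotationHomography(double f0, double f1, double yaw, double pitch, double* H) {
    assert(f0 > 0 && f1 > 0);
    const double cy = std::cos(yaw), sy = std::sin(yaw);
    const double cp = std::cos(pitch), sp = std::sin(pitch);
    const double Ry[9] = {cy, 0, sy, 0, 1, 0, -sy, 0, cy};
    const double Rx[9] = {1, 0, 0, 0, cp, -sp, 0, sp, cp};
    const double K1[9] = {f1, 0, 0, 0, f1, 0, 0, 0, 1};
    const double K0inv[9] = {1 / f0, 0, 0, 0, 1 / f0, 0, 0, 0, 1};
    double R[9], tmp[9];
    mul3(Ry, Rx, R);
    mul3(K1, R, tmp);
    mul3(tmp, K0inv, H);
}
```

A rotation about only one axis makes some denominators zero, which is one of the cases where `f*_ok` becomes false. The example therefore combines yaw and pitch.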

```cpp
void focalsFromHomography(const Mat& H, double &f0, double &f1, bool &f0_ok, bool &f1_ok)
{
    CV_Assert(H.type() == CV_64F && H.size() == Size(3, 3));

    const double* h = H.ptr<double>(); // the 9 entries of H, row-major

    double d1, d2; // Denominators
    double v1, v2; // Focal squares value candidates

    // Candidates for f1^2 (focal of image 1)
    f1_ok = true;
    d1 = h[6] * h[7];
    d2 = (h[7] - h[6]) * (h[7] + h[6]);
    v1 = -(h[0] * h[1] + h[3] * h[4]) / d1;
    v2 = (h[0] * h[0] + h[3] * h[3] - h[1] * h[1] - h[4] * h[4]) / d2;
    if (v1 < v2) std::swap(v1, v2);
    if (v1 > 0 && v2 > 0) f1 = std::sqrt(std::abs(d1) > std::abs(d2) ? v1 : v2);
    else if (v1 > 0) f1 = std::sqrt(v1);
    else f1_ok = false;

    // Candidates for f0^2 (focal of image 0)
    f0_ok = true;
    d1 = h[0] * h[3] + h[1] * h[4];
    d2 = h[0] * h[0] + h[1] * h[1] - h[3] * h[3] - h[4] * h[4];
    v1 = -h[2] * h[5] / d1;
    v2 = (h[5] * h[5] - h[2] * h[2]) / d2;
    if (v1 < v2) std::swap(v1, v2);
    if (v1 > 0 && v2 > 0) f0 = std::sqrt(std::abs(d1) > std::abs(d2) ? v1 : v2);
    else if (v1 > 0) f0 = std::sqrt(v1);
    else f0_ok = false;
}
```

1.2.2 Build a maximum spanning tree from the inlier matches. Then walk it breadth-first from the root node, computing each camera's R matrix, K matrix and principal point.
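Here is a self-contained sketch of the two steps. It rests on my understanding of `findMaxSpanningTree`:

- Edges are weighted by the number of inlier matches.
- The root is a tree center, meaning a vertex whose farthest vertex is as close as possible.

`Edge` and `maxSpanningTree` are hypothetical names, and Kruskal's algorithm (heaviest edges first) builds the tree.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <queue>
#include <vector>

struct Edge { int from, to, weight; };  // weight = number of inlier matches

// Returns the tree as an adjacency list; `center` receives the chosen root.
std::vector<std::vector<int>> maxSpanningTree(int n, std::vector<Edge> edges, int& center) {
    assert(n > 0);
    std::sort(edges.begin(), edges.end(),
              [](const Edge& a, const Edge& b) { return a.weight > b.weight; });
    std::vector<int> parent(n);
    std::iota(parent.begin(), parent.end(), 0);
    auto findRoot = [&](int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];
            x = parent[x];
        }
        return x;
    };
    std::vector<std::vector<int>> tree(n);
    for (const Edge& e : edges) {
        const int a = findRoot(e.from), b = findRoot(e.to);
        if (a == b) continue;  // would create a cycle
        parent[a] = b;
        tree[e.from].push_back(e.to);
        tree[e.to].push_back(e.from);
    }
    // BFS from every vertex to get its eccentricity (max hop distance);
    // the first vertex with the smallest eccentricity becomes the center.
    int best = -1;
    center = 0;
    for (int s = 0; s < n; ++s) {
        std::vector<int> dist(n, -1);
        std::queue<int> q;
        dist[s] = 0;
        q.push(s);
        int far = 0;
        while (!q.empty()) {
            const int u = q.front();
            q.pop();
            far = std::max(far, dist[u]);
            for (int v : tree[u])
                if (dist[v] < 0) { dist[v] = dist[u] + 1; q.push(v); }
        }
        if (best < 0 || far < best) { best = far; center = s; }
    }
    return tree;
}
```

Suppose the heaviest edges form the chain 0-1-2-3. Vertices 1 and 2 tie as centers, and this sketch keeps the first, vertex 1. Starting the BFS at the center keeps the chain of rotation compositions as short as possible in every direction.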

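```cpp
findMaxSpanningTree(num_images, pairwise_matches, span_tree, span_tree_centers); // build the maximum spanning tree
span_tree.walkBreadthFirst(span_tree_centers[0], CalcRotation(num_images, pairwise_matches, cameras)); // BFS from the root (first tree center), computing each camera's R
```

K matrix (stored in `CameraParams`):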

```cpp
struct CV_EXPORTS CameraParams
{
    CameraParams();
    CameraParams(const CameraParams& other);
    const CameraParams& operator =(const CameraParams& other);
    Mat K() const;

    double focal;  // Focal length
    double aspect; // Aspect ratio
    double ppx;    // Principal point X
    double ppy;    // Principal point Y
    Mat R;         // Rotation
    Mat t;         // Translation
};
```

R matrix (computed by `CalcRotation`):
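The body of `CalcRotation` is cut off in the listing that follows. I assume it matches upstream OpenCV, which as I recall sets `cameras[edge.to].R = cameras[edge.from].R * K_from.inv() * H.inv() * K_to`. Each BFS edge from -> to then derives the child's rotation from its parent's.

This follows from the warpers' convention that a pixel x corresponds to the ray R K^{-1} x (the `r_kinv` in `mapForward` later on), with H the homography from image "from" to image "to". All relations hold up to scale:

$$
\mathbf{x}_{\text{to}} \simeq K_{\text{to}} R_{\text{to}}^{-1} R_{\text{from}} K_{\text{from}}^{-1} \mathbf{x}_{\text{from}}
\;\Rightarrow\;
H \simeq K_{\text{to}} R_{\text{to}}^{-1} R_{\text{from}} K_{\text{from}}^{-1}
\;\Rightarrow\;
R_{\text{to}} \simeq R_{\text{from}} K_{\text{from}}^{-1} H^{-1} K_{\text{to}}
$$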

```cpp
struct CalcRotation
{
    CalcRotation(int _num_images, const std::vector
```

Principal point values (offset by half the image size, i.e. placed at the image center):

```cpp
for (int i = 0; i < num_images; ++i)
{
    cameras[i].ppx += 0.5 * features[i].img_size.width;
    cameras[i].ppy += 0.5 * features[i].img_size.height;
}
```

1.3 Once the camera parameters have been estimated, bundle adjustment is used to refine the camera parameters of all images.

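Bundle adjustment solves for all camera parameters jointly. It is a necessary step in panorama stitching. Chaining many pairwise homographies into a panorama ignores global constraints, so errors accumulate. Bundle adjustment is therefore applied to all images, starting from consistent initial rotations and focal lengths; in the paper, each newly added image is initialized with the same rotation and focal length as the image it best matches.

The objective function is a robust sum of squared projection errors: every feature point is projected into the other images, and the camera parameters that minimize the sum of squared errors are sought. For the full derivation, see Chapter 5 of *Automatic Panoramic Image Stitching using Invariant Features* (PDF).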
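In the cited paper, the "robust" part of the objective is a Huber function applied to each residual. It is quadratic for small errors and linear for large ones, so outliers pull less. This sketch illustrates the paper's formulation, not necessarily what OpenCV's `calcError` computes. `huber`, `robustCost` and the sigma value in the example are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Huber robust error on a residual magnitude x: quadratic below sigma,
// linear above it (the two pieces meet at |x| == sigma).
double huber(double x, double sigma) {
    assert(sigma > 0);
    const double a = std::abs(x);
    return a < sigma ? a * a : 2.0 * sigma * a - sigma * sigma;
}

// Total robust cost over 2-D reprojection residuals (dx, dy), one per
// feature correspondence: sum of huber(|r|).
double robustCost(const std::vector<double>& dx, const std::vector<double>& dy, double sigma) {
    assert(dx.size() == dy.size());
    double e = 0.0;
    for (size_t k = 0; k < dx.size(); ++k)
        e += huber(std::hypot(dx[k], dy[k]), sigma);
    return e;
}
```

With sigma = 2, a 5-pixel residual costs 16 instead of the 25 it would cost under plain squared error. A single bad match therefore has less influence on the solution.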

```cpp
bundle_adjuster_->setConfThresh(conf_thresh_);
if (!(*bundle_adjuster_)(features_, pairwise_matches_, cameras_)) return ERR_CAMERA_PARAMS_ADJUST_FAIL;
```

1.3.1 First, the initial camera parameters are set up:

```cpp
setUpInitialCameraParams(cameras);
```

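```cpp
void BundleAdjusterRay::setUpInitialCameraParams(const std::vector
cam_params_[i*4+0] = cameras[i].focal;
```

Each camera has four parameters, and the first, `cam_params_[i*4+0]`, is the focal length. The remaining three come from `cameras[i].R`:

1. R is decomposed by SVD.
2. The Rodrigues transform is applied to U·Vt.
3. The three values of the resulting rvec are assigned to `cam_params_`.

Replacing R by U·Vt snaps it to the nearest orthogonal matrix, so Rodrigues receives a valid rotation.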

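Definition of the singular value decomposition:

For the singular value decomposition of a matrix M, M = UΣV*:

· The columns of U form a set of orthonormal "output" basis vectors for M. These are the eigenvectors of MM*.

· The columns of V form a set of orthonormal "input" or "analysis" basis vectors for M. These are the eigenvectors of M*M.

· The diagonal entries of Σ are the singular values. They can be viewed as scalar "gain controls" between input and output. They are the square roots of the eigenvalues of M*M (equivalently of MM*) and correspond to the columns of U and V.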
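Returning to the rotation parameters, the matrix-to-vector direction of the Rodrigues formula can be sketched without OpenCV. `rotationToVector` is a hypothetical helper. It assumes R is already orthonormal with determinant +1, which is what the U·Vt step is for. It does not handle rotation angles near π; a full implementation such as `cv::Rodrigues` has to treat that case separately.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<double, 9>;  // row-major 3x3
using Vec3 = std::array<double, 3>;

// Rotation matrix -> rotation vector (unit axis scaled by the angle).
Vec3 rotationToVector(const Mat3& R) {
    const double c = (R[0] + R[4] + R[8] - 1.0) / 2.0;  // cos(theta) from the trace
    const double theta = std::acos(std::fmax(-1.0, std::fmin(1.0, c)));
    if (theta < 1e-12) return {0.0, 0.0, 0.0};  // (near) identity: no rotation
    const double s = 2.0 * std::sin(theta);
    assert(s > 1e-9 && "angle too close to pi for this sketch");
    return {theta * (R[7] - R[5]) / s,   // R[2][1] - R[1][2]
            theta * (R[2] - R[6]) / s,   // R[0][2] - R[2][0]
            theta * (R[3] - R[1]) / s};  // R[1][0] - R[0][1]
}
```

A rotation of 0.5 rad about the z axis maps to the vector (0, 0, 0.5). Three numbers per camera is why `cam_params_` needs only four slots per image (focal + rvec).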

1.3.2 Keep only the matched pairs whose confidence exceeds the threshold

```cpp
for (int i = 0; i < num_images_ - 1; ++i) {
    for (int j = i + 1; j < num_images_; ++j) {
        const MatchesInfo& matches_info = pairwise_matches_[i * num_images_ + j];
        if (matches_info.confidence > conf_thresh_)
            edges_.push_back(std::make_pair(i, j));
    }
}
```

1.3.3 Compute the camera parameters with the Levenberg-Marquardt (LM) algorithm.
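In the code below, `CvLevMarq::update()` drives the iteration and asks the caller for the Jacobian (`calcJacobian`) and the error vector (`calcError`) when it needs them. The damping logic inside LM can be sketched on a toy one-parameter problem. `fitExpRate` and the exponential model are purely illustrative, not part of OpenCV.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Toy Levenberg-Marquardt loop for one parameter k in the model y = exp(k * x).
// Each step solves (J^T J + lambda * diag(J^T J)) * delta = -J^T r, which for a
// single parameter is delta = -J^T r / (J^T J * (1 + lambda)). lambda shrinks
// after an accepted step (toward Gauss-Newton) and grows after a rejected one
// (toward small gradient-descent steps).
double fitExpRate(const std::vector<double>& xs, const std::vector<double>& ys, double k, int max_iter = 100) {
    assert(xs.size() == ys.size() && !xs.empty());
    auto cost = [&](double kk) {
        double e = 0.0;
        for (size_t i = 0; i < xs.size(); ++i) {
            const double r = std::exp(kk * xs[i]) - ys[i];
            e += r * r;
        }
        return e;
    };
    double lambda = 1e-3;
    double e = cost(k);
    for (int it = 0; it < max_iter; ++it) {
        double jtj = 0.0, jtr = 0.0;
        for (size_t i = 0; i < xs.size(); ++i) {
            const double f = std::exp(k * xs[i]);
            const double jac = xs[i] * f;  // d f / d k
            jtj += jac * jac;
            jtr += jac * (f - ys[i]);
        }
        if (jtj == 0.0) break;
        const double delta = -jtr / (jtj * (1.0 + lambda));
        const double e_new = cost(k + delta);
        if (e_new < e) {  // accept the step
            k += delta;
            e = e_new;
            lambda *= 0.1;
            if (std::abs(delta) < 1e-12) break;
        } else {          // reject the step and damp harder
            lambda *= 10.0;
        }
    }
    return k;
}
```

Starting from k = 0, the first undamped steps overshoot and are rejected. lambda then grows until a step reduces the cost, and convergence becomes fast. The same accept/reject logic is what lets bundle adjustment start from rough homography-based estimates.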

```cpp
CvLevMarq solver(num_images_ * num_params_per_cam_, total_num_matches_ * num_errs_per_measurement_, term_criteria_);

Mat err, jac;
CvMat matParams = cam_params_;
cvCopy(&matParams, solver.param);

int iter = 0;
for (;;) {
    const CvMat* _param = 0;
    CvMat* _jac = 0;
    CvMat* _err = 0;

    bool proceed = solver.update(_param, _jac, _err);
    cvCopy(_param, &matParams);
    if (!proceed || !_err) break;

    if (_jac) {  // the solver requests the Jacobian
        calcJacobian(jac);
        CvMat tmp = jac;
        cvCopy(&tmp, _jac);
    }
    if (_err) {  // the solver requests the error vector
        calcError(err);
        // LOG_CHAT(".");
        iter++;
        CvMat tmp = err;
        cvCopy(&tmp, _err);
    }
}

// Check if all camera parameters are valid
bool ok = true;
for (int i = 0; i < cam_params_.rows; ++i) {
    if (cvIsNaN(cam_params_.at<double>(i, 0))) {
        ok = false;
        break;
    }
}
if (!ok) return false;

obtainRefinedCameraParams(cameras);

// Normalize motion to center image
Graph span_tree;
std::vector<int> span_tree_centers;
findMaxSpanningTree(num_images_, pairwise_matches, span_tree, span_tree_centers);
Mat R_inv = cameras[span_tree_centers[0]].R.inv();
for (int i = 0; i < num_images_; ++i) cameras[i].R = R_inv * cameras[i].R;

// LOGLN_CHAT("Bundle adjustment, time: " << ((getTickCount() - t) / getTickFrequency()) << " sec");
return true;
}
```

1.3.4 Find the median focal length and use it as the final image scale

```cpp
std::vector<double> focals;
for (size_t i = 0; i < cameras_.size(); ++i)
{
    //LOGLN("Camera #" << indices_[i] + 1 << ":\n" << cameras_[i].K());
    focals.push_back(cameras_[i].focal);
}

std::sort(focals.begin(), focals.end());
if (focals.size() % 2 == 1)
    warped_image_scale_ = static_cast<float>(focals[focals.size() / 2]);
else
    warped_image_scale_ = static_cast<float>(focals[focals.size() / 2 - 1] + focals[focals.size() / 2]) * 0.5f;
```

1.4 Wave correction

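```cpp
if (do_wave_correct_)
{
    std::vector
```

The previous sections computed the camera rotation matrices, but one more factor must be considered. The photographer does not necessarily hold the camera level, and a slight tilt makes the panorama bend into a wavy curve. We therefore apply wave correction, which mainly consists of finding the "up vector" (up_vector) of each image and correcting it to …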
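Brown and Lowe describe the up vector u as the direction most perpendicular to all camera x-axes X_i. It minimizes the sum of (u · X_i)², which makes it the eigenvector of M = Σ X_i X_iᵀ with the smallest eigenvalue. My understanding is that OpenCV's `waveCorrect` solves this with an eigen decomposition; the sketch below is not its code.

`upVector` is a hypothetical helper. It finds the eigenvector with power iteration on trace(M)·I − M, assuming each X_i is a camera's x-axis in world coordinates.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;

// Smallest-eigenvalue eigenvector of M = sum_i X_i X_i^T. Because M is positive
// semidefinite, its smallest eigenvector is the largest one of
// B = trace(M) * I - M, which power iteration finds. The start vector
// (1, 1, 1) must not be orthogonal to the answer.
Vec3 upVector(const std::vector<Vec3>& xs, int iters = 500) {
    assert(xs.size() >= 2);
    double M[3][3] = {};
    for (const Vec3& x : xs)
        for (int r = 0; r < 3; ++r)
            for (int c = 0; c < 3; ++c) M[r][c] += x[r] * x[c];
    const double tr = M[0][0] + M[1][1] + M[2][2];
    Vec3 u = {1.0, 1.0, 1.0};
    for (int it = 0; it < iters; ++it) {
        Vec3 next{};
        for (int r = 0; r < 3; ++r)
            for (int c = 0; c < 3; ++c) next[r] += ((r == c ? tr : 0.0) - M[r][c]) * u[c];
        const double n = std::sqrt(next[0] * next[0] + next[1] * next[1] + next[2] * next[2]);
        assert(n > 0);
        for (int r = 0; r < 3; ++r) u[r] = next[r] / n;
    }
    return u;
}
```

Consider cameras panned around a vertical axis, so that all their x-axes lie in the horizontal x-z plane. upVector then returns (0, 1, 0). A global rotation that makes u vertical is then applied to every camera.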

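The OpenCV implementation is shown below. In essence it solves an eigenvalue problem to compute the up vector, then applies the resulting correction to the camera rotations (horizontal or vertical correction).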

```cpp
void waveCorrect(std::vector
```

2. The Stitcher::composePanorama function

2.1 Homography transformation

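Image matching, bundle adjustment and wave correction have given us each image's camera parameters and rotation matrix. Next, the images go through the homography transformation, brightness gain compensation, and multi-band blending (image pyramids). We start with the homography transformation: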

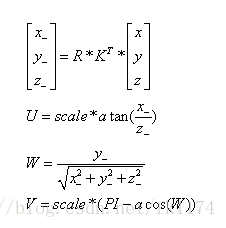

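Let (x, y, z=1) be a point in the source image, R the image's rotation matrix and K its camera intrinsic matrix. After the transformation, the same point has coordinates (x_, y_, z_), which are then mapped to spherical coordinates (u, v, w). The relationship between them is as follows: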
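The first step is the same as in `mapForward` below: (x_, y_, z_)ᵀ = R K⁻¹ (x, y, 1)ᵀ. For the spherical case, I recall OpenCV's spherical projector using u = s·atan2(x_, z_) and v = s·(π − acos(y_ / ‖(x_, y_, z_)‖)). I have not checked that against this version, so treat it as an assumption. `sphericalMapForward` is a hypothetical sketch of that mapping.

```cpp
#include <cassert>
#include <cmath>

// Pixel (x, y) -> ray (x_, y_, z_) = R * K^{-1} * (x, y, 1) -> spherical (u, v).
// r_kinv is R * K^{-1} in row-major order; scale is typically the focal length.
void sphericalMapForward(const double r_kinv[9], double scale, double x, double y, double& u, double& v) {
    const double kPi = std::acos(-1.0);
    const double x_ = r_kinv[0] * x + r_kinv[1] * y + r_kinv[2];
    const double y_ = r_kinv[3] * x + r_kinv[4] * y + r_kinv[5];
    const double z_ = r_kinv[6] * x + r_kinv[7] * y + r_kinv[8];
    const double len = std::sqrt(x_ * x_ + y_ * y_ + z_ * z_);
    assert(len > 0);
    u = scale * std::atan2(x_, z_);  // horizontal angle around the y axis
    const double w = y_ / len;       // cosine of the angle between the ray and +y
    v = scale * (kPi - std::acos(w));
}
```

With R = I, the principal point maps to u = 0, v = s·π/2. A pixel one focal length to its right maps to u = s·π/4, because that ray makes a 45° angle with the optical axis.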

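Implementation (the listing is the planar projector, `PlaneProjector`; it shares the first step with the spherical one):

```cpp
void PlaneProjector::mapForward(float x, float y, float &u, float &v)
{
    // (x_, y_, z_) = R * K^{-1} * (x, y, 1)
    float x_ = r_kinv[0] * x + r_kinv[1] * y + r_kinv[2];
    float y_ = r_kinv[3] * x + r_kinv[4] * y + r_kinv[5];
    float z_ = r_kinv[6] * x + r_kinv[7] * y + r_kinv[8];

    // project onto the plane
    x_ = t[0] + x_ / z_ * (1 - t[2]);
    y_ = t[1] + y_ / z_ * (1 - t[2]);

    u = scale * x_;
    v = scale * y_;
}
```

This function is not called directly in the newer version. What is called instead is:

```cpp
corners[i] = w->warp(seam_est_imgs_[i], K, cameras_[i].R, INTER_LINEAR, BORDER_CONSTANT, images_warped[i]); // Projects the image, and returns top-left corner of projected image
```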

Here w is:

```cpp
Ptr<stitcher::RotationWarper> w = warper_->create(float(warped_image_scale_ * seam_work_aspect_));
```

However, the source of RotationWarper::warp is not included here; it appears to be called from the library.
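As far as I know, upstream OpenCV implements `warp` in the header-only template `RotationWarperBase` (warpers_inl.hpp). It builds backward maps with the projector's `mapBackward` and then calls `remap`.

The idea can be sketched without OpenCV. `Gray` and `warpBackward` are hypothetical names. Nearest-neighbour sampling stands in for the `INTER_LINEAR` interpolation used in the call above.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

// Grayscale image stored row-major.
struct Gray {
    int width, height;
    std::vector<float> px;
    float at(int x, int y) const { return px[y * width + x]; }
};

// Backward-mapping warp: for each destination pixel (u, v), compute where it
// comes from in the source and sample there (nearest neighbour; out-of-range
// samples stay 0, like a constant border).
Gray warpBackward(const Gray& src, int out_w, int out_h,
                  const std::function<void(double, double, double&, double&)>& mapBackward) {
    assert(static_cast<int>(src.px.size()) == src.width * src.height);
    Gray dst{out_w, out_h, std::vector<float>(static_cast<size_t>(out_w) * out_h, 0.0f)};
    for (int v = 0; v < out_h; ++v)
        for (int u = 0; u < out_w; ++u) {
            double x, y;
            mapBackward(u, v, x, y);
            const int xi = static_cast<int>(std::lround(x));
            const int yi = static_cast<int>(std::lround(y));
            if (xi >= 0 && xi < src.width && yi >= 0 && yi < src.height)
                dst.px[v * out_w + u] = src.at(xi, yi);
        }
    return dst;
}
```

Mapping backward rather than forward guarantees every destination pixel gets exactly one value, with no holes. That is why warpers compute maps from the panorama back to the source image.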

2.2 Exposure compensation

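In image stitching, differences in aperture or lighting between shots can cause brightness differences in the overlapping regions of adjacent images, so the images need brightness compensation during stitching. OpenCV bases its compensation only on the overlap regions. As a result, the lighting changes gradually across the blended seams, but the overall brightness of the panorama does not transition smoothly.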
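The gain-compensation idea from the paper cited in section 1.3 can be sketched as follows. Here N_ij is the overlap size and Ī_ij the mean intensity of image i inside its overlap with image j. The paper minimizes the following, with σ_N = 10 and σ_g = 0.1 for intensities in 0..255 (the paper's values, as I recall them):

e = ½ Σ_ij N_ij [ (g_i Ī_ij − g_j Ī_ji)² / σ_N² + (1 − g_i)² / σ_g² ]

Setting the derivative with respect to each gain to zero gives a linear system. That system is my own derivation, and I believe OpenCV's `GainCompensator` solves one of this form. `gainCompensation` is a hypothetical helper.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// N[i][j]: overlap pixel count of images i and j (symmetric; diagonal ignored).
// I[i][j]: mean intensity of image i inside its overlap with image j.
// Returns one gain per image. Assumes every image overlaps at least one other,
// so A is symmetric positive definite and elimination needs no pivoting.
std::vector<double> gainCompensation(const std::vector<std::vector<double>>& N,
                                     const std::vector<std::vector<double>>& I,
                                     double sigmaN = 10.0, double sigmaG = 0.1) {
    const size_t n = N.size();
    assert(I.size() == n);
    const double alpha = 1.0 / (sigmaN * sigmaN), beta = 1.0 / (sigmaG * sigmaG);
    std::vector<std::vector<double>> A(n, std::vector<double>(n, 0.0));
    std::vector<double> b(n, 0.0);
    for (size_t i = 0; i < n; ++i)
        for (size_t j = 0; j < n; ++j) {
            if (i == j) continue;
            A[i][i] += N[i][j] * (2.0 * alpha * I[i][j] * I[i][j] + beta);
            A[i][j] -= 2.0 * alpha * N[i][j] * I[i][j] * I[j][i];
            b[i] += beta * N[i][j];
        }
    for (size_t k = 0; k < n; ++k)  // forward elimination
        for (size_t r = k + 1; r < n; ++r) {
            const double m = A[r][k] / A[k][k];
            for (size_t c = k; c < n; ++c) A[r][c] -= m * A[k][c];
            b[r] -= m * b[k];
        }
    std::vector<double> g(n, 0.0);
    for (size_t k = n; k-- > 0;) {  // back substitution
        double s = b[k];
        for (size_t c = k + 1; c < n; ++c) s -= A[k][c] * g[c];
        g[k] = s / A[k][k];
    }
    return g;
}
```

Consider two images whose overlap means are 100 and 120. The gains come out as 157/147 ≈ 1.068 and 45/49 ≈ 0.918: the darker image is brightened and the brighter one dimmed. The (1 − g)² prior stops both gains from collapsing toward zero. Each gain scales a whole image, which is why the overall brightness can still drift across a long panorama.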

```cpp
exposure_comp_->feed(corners, images_warped, masks_warped); // exposure compensation
```

Again, the source code for this is not available here.

2.3 Finding seams

```cpp
seam_finder_->find(images_warped_f, corners, masks_warped); // Find seams
```
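Upstream OpenCV's `cv::detail` namespace offers several seam finders, for example `VoronoiSeamFinder`, `DpSeamFinder` and `GraphCutSeamFinder`; which one `seam_finder_` points to depends on configuration.

To show the idea, here is a simple dynamic-programming seam through an overlap cost map. `dpSeam` is a hypothetical helper. OpenCV's `DpSeamFinder` works on the overlap masks differently, so this is only an illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Minimal-cost vertical seam by dynamic programming. cost[r][c] could be
// |I1 - I2| at that overlap pixel (all rows must have the same length); the
// seam moves at most one column per row. Returns the seam's column per row.
std::vector<int> dpSeam(const std::vector<std::vector<double>>& cost) {
    const size_t rows = cost.size();
    assert(rows > 0 && !cost[0].empty());
    const int cols = static_cast<int>(cost[0].size());
    std::vector<std::vector<double>> acc = cost;  // accumulated cost
    for (size_t r = 1; r < rows; ++r)
        for (int c = 0; c < cols; ++c) {
            double best = acc[r - 1][c];
            if (c > 0) best = std::min(best, acc[r - 1][c - 1]);
            if (c + 1 < cols) best = std::min(best, acc[r - 1][c + 1]);
            acc[r][c] += best;
        }
    std::vector<int> seam(rows);
    seam[rows - 1] = static_cast<int>(
        std::min_element(acc[rows - 1].begin(), acc[rows - 1].end()) - acc[rows - 1].begin());
    for (size_t r = rows - 1; r-- > 0;) {  // backtrack upward
        const int prev = seam[r + 1];
        int pick = prev;
        for (int c = std::max(0, prev - 1); c <= std::min(cols - 1, prev + 1); ++c)
            if (acc[r][c] < acc[r][pick]) pick = c;
        seam[r] = pick;
    }
    return seam;
}
```

On a cost map whose cheap pixels run diagonally from the top-left to the bottom-right corner, the seam follows that diagonal. Cutting where the two images agree best hides the transition.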