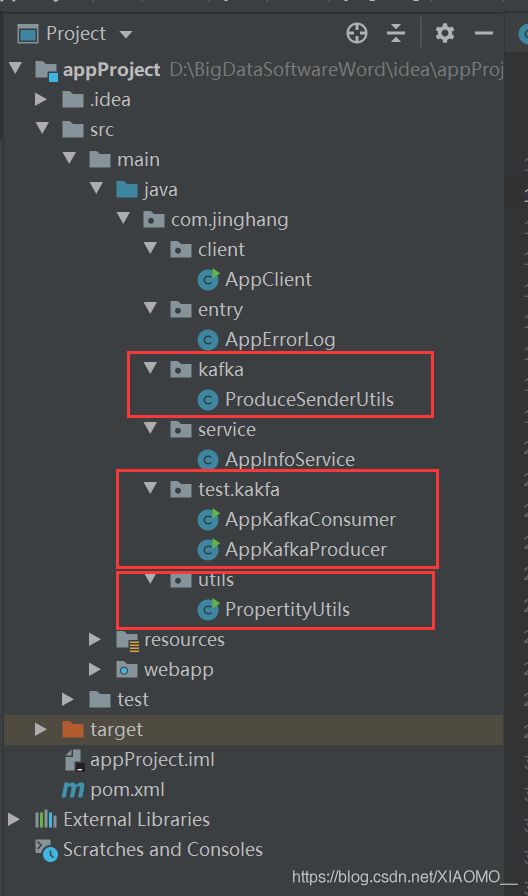

APP Project (Part 2)

Contents: starting to write the Kafka code

com.jinghang.kafka

com.jinghang.test.kakfa

com.jinghang.utils

com.jinghang.kafka.ProduceSenderUtils

package com.jinghang.kafka;

import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.apache.kafka.common.serialization.StringSerializer;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import org.springframework.beans.factory.DisposableBean;

import com.jinghang.utils.PropertityUtils;

public class ProduceSenderUtils implements DisposableBean{

private Logger logger = LogManager.getLogger(ProduceSenderUtils.class);

private KafkaProducer<String, String> producer = null;

// Constructor: builds the producer from the broker list in the configuration

public ProduceSenderUtils() {

Properties props = new Properties();

props.setProperty(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, PropertityUtils.getValue("brokerList"));

props.setProperty(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

props.setProperty(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

// props.put("bootstrap.servers", "hadoop02:9092");

// props.put("acks", "all");

// props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

producer = new KafkaProducer<>(props);

}

public void sendMessage(String topic,String message){

producer.send(new ProducerRecord<String, String>(topic, message), new Callback() {

@Override

public void onCompletion(RecordMetadata metadata, Exception exception) {

if (exception != null) {

// Log the exception as well so the cause of the failure is visible

logger.error("Failed to send message", exception);

} else {

logger.info("Message sent: offset=" + metadata.offset() + ", partition=" + metadata.partition() + ", topic=" + metadata.topic());

}

}

});

}

@Override

public void destroy() throws Exception {

if (producer != null ) {

producer.close();

}

}

}
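The constructor above uses the ProducerConfig constants, and its commented-out lines show the equivalent plain string keys. As a minimal sketch that needs only java.util.Properties, the same settings could be assembled as below. The class name ProducerProps and the method build are hypothetical and not part of the original project; acks=all is taken from the commented-out line.

```java
import java.util.Properties;

// Hypothetical helper (not part of the original project): assembles the same
// producer settings as the ProduceSenderUtils constructor, using plain string keys.
final class ProducerProps {

    private static final String STRING_SERIALIZER =
            "org.apache.kafka.common.serialization.StringSerializer";

    private ProducerProps() {
    }

    // brokerList is a comma-separated host:port list, e.g. "node7-2:9092"
    static Properties build(String brokerList) {
        if (brokerList == null || brokerList.trim().isEmpty()) {
            throw new IllegalArgumentException("brokerList must not be empty");
        }
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", brokerList.trim());
        props.setProperty("acks", "all"); // from the commented-out line in the constructor
        props.setProperty("key.serializer", STRING_SERIALIZER);
        props.setProperty("value.serializer", STRING_SERIALIZER);
        return props;
    }
}
```

The returned Properties object can be passed to the KafkaProducer constructor in the same way as props in the listing above.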

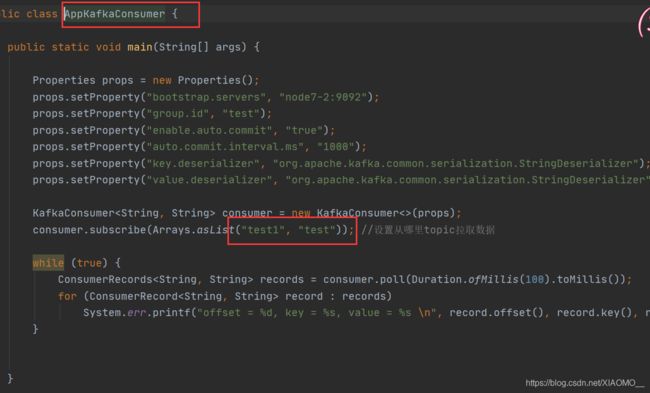

com.jinghang.test.kakfa.AppKafkaConsumer

package com.jinghang.test.kakfa;

import java.time.Duration;

import java.util.Arrays;

import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

public class AppKafkaConsumer {

public static void main(String[] args) {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "node7-2:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "true");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);

consumer.subscribe(Arrays.asList("test1", "test")); // topics to pull data from

while (true) {

// poll(long) is deprecated since kafka-clients 2.0; on those versions prefer consumer.poll(Duration.ofMillis(100))

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100).toMillis());

for (ConsumerRecord<String, String> record : records)

System.err.printf("offset = %d, key = %s, value = %s \n", record.offset(), record.key(), record.value());

}

}

}

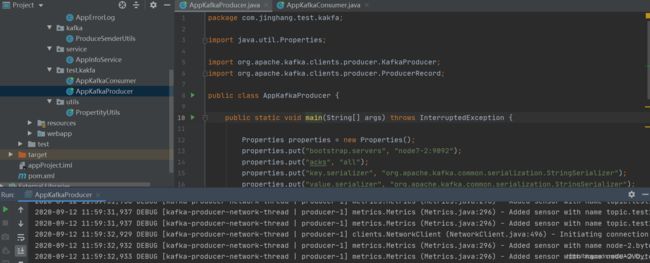

com.jinghang.test.kakfa.AppKafkaProducer

package com.jinghang.test.kakfa;

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

public class AppKafkaProducer {

public static void main(String[] args) throws InterruptedException {

Properties properties = new Properties();

properties.put("bootstrap.servers", "node7-2:9092");

properties.put("acks", "all");

properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

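KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);

// Producer: send one message per second to topic test1

for (int i = 0; i < 10000; i++) {

kafkaProducer.send(new ProducerRecord<String, String>("test1", String.valueOf(i)));

Thread.sleep(1000);

}

// Flush any pending records and release resources

kafkaProducer.close();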

}

}

com.jinghang.utils.PropertityUtils

package com.jinghang.utils;

import com.typesafe.config.Config;

import com.typesafe.config.ConfigFactory;

public class PropertityUtils {

public final static Config config = ConfigFactory.load();

// Look up the value for the given key in the configuration

public static String getValue(String key){

return config.getString(key).trim();

}

public static void main(String[] args) {

System.out.println(PropertityUtils.getValue("filepath"));

}

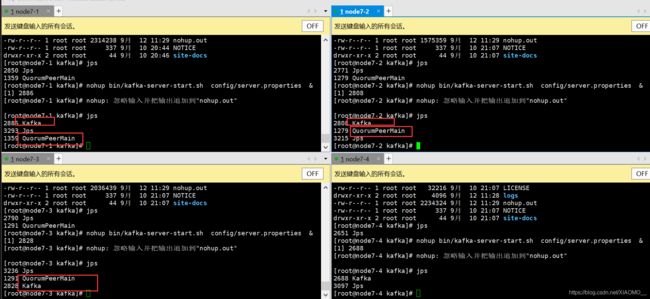

}运行前准备:

Start the virtual machines.

Start ZooKeeper: bin/zkServer.sh restart

Start Kafka:

Start all 4 nodes at the same time: nohup bin/kafka-server-start.sh config/server.properties &

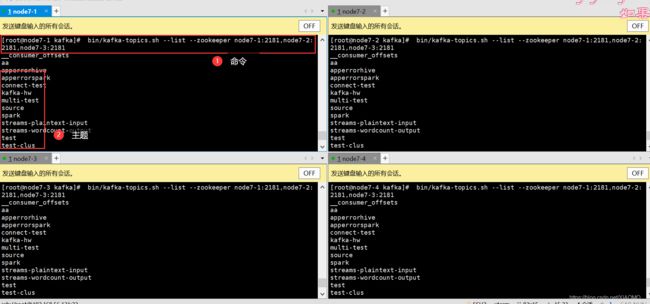

List topics: bin/kafka-topics.sh --list --zookeeper node7-1:2181,node7-2:2181,node7-3:2181

Run the AppKafkaConsumer code:

Send messages with the console producer:

bin/kafka-console-producer.sh --broker-list node7-1:9092,node7-2:9092,node7-3:9092,node7-4:9092 --topic test

Run the AppKafkaProducer code:

Consume with the console consumer: bin/kafka-console-consumer.sh --bootstrap-server node7-1:9092,node7-2:9092,node7-3:9092,node7-4:9092 --topic test --from-beginning
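The console commands above list all four brokers, while the Java test classes hard-code only node7-2:9092. A minimal sketch of assembling the full list for bootstrap.servers follows. The helper name BrokerList is hypothetical, and it assumes every broker listens on the same port.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of the original project): joins host names
// into the comma-separated host:port list expected by bootstrap.servers.
final class BrokerList {

    private BrokerList() {
    }

    // Assumes all brokers share the same port.
    static String of(int port, String... hosts) {
        if (hosts.length == 0) {
            throw new IllegalArgumentException("at least one host is required");
        }
        List<String> entries = new ArrayList<>();
        for (String host : hosts) {
            entries.add(host.trim() + ":" + port);
        }
        return String.join(",", entries);
    }
}
```

For example, BrokerList.of(9092, "node7-1", "node7-2", "node7-3", "node7-4") yields the same string that is passed to --bootstrap-server above.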