Flink Study Notes (5): Integration Examples with Kafka and HBase

Contents

- Case 1: Reading from Kafka and writing back to Kafka

- Case 2: Reading from Kafka and writing to HBase

- Case 3: Reading from Kafka and writing to MySQL

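Case 1: Reading from Kafka and writing back to Kafka

Environment:

- Flink version: 1.7.2

- Kafka version: 2.1.1

Flink can interact with Kafka in several ways. This case uses addSource and addSink on a DataStream. Flink supports Kafka as both source and sink out of the box, so add Flink's own Kafka connector and the Kafka dependency: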

<!-- Note the versions: 0.11 is the Kafka client version, 2.11 is the Scala version, and 1.7.2 is the Flink version. -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.11_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<!-- The Scala suffix must match the connector's (2.11). -->

<artifactId>kafka_2.11</artifactId>

<version>1.0.0</version>

</dependency>

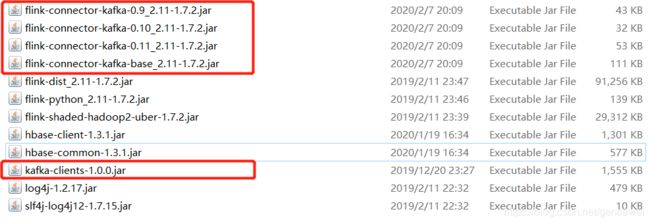

如果不是maven项目,可自行下载以下的jar包,加入到外部lib中:
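For an sbt project, a roughly equivalent build definition might look like the sketch below. The connector coordinates mirror the Maven snippet above; the `scalaVersion` value and the extra `flink-streaming-scala` module are my assumptions, not something the original setup states.

```scala
// build.sbt sketch (assumed layout; versions taken from this article)
scalaVersion := "2.11.12" // assumption: any 2.11.x release matching the _2.11 artifacts

libraryDependencies ++= Seq(
  // %% appends the Scala binary version (_2.11) to the artifact name
  "org.apache.flink" %% "flink-streaming-scala" % "1.7.2", // assumption: needed for the Scala DataStream API
  "org.apache.flink" %% "flink-connector-kafka-0.11" % "1.7.2"
)
```

Using `%%` keeps the Scala suffix of every Flink artifact consistent with the compiler version, which avoids mixing 2.11 and 2.12 jars on the classpath.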

The code is as follows:

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.api.java.tuple.Tuple

import org.apache.flink.streaming.api.CheckpointingMode

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer011, FlinkKafkaProducer011}

import scala.util.Random

/**

* Flink job that uses Kafka as both source and sink.

*/

object FlinkFromKafka {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

/**

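* When Flink checkpointing is enabled,

* the Flink Kafka consumer reads messages from the given topic

* and periodically checkpoints the Kafka offsets together with the other operator state.

* If the job fails, Flink restores from the latest checkpoint

* and resumes reading from Kafka at the offsets stored in that checkpoint.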

*/

env.enableCheckpointing(2000, CheckpointingMode.EXACTLY_ONCE) // checkpoint every 2000 ms

// Consumer configuration

val properties = new Properties

properties.put("bootstrap.servers", "hadoop02:9092,hadoop03:9092,hadoop04:9092")

properties.put("group.id", "g3")

// properties.put("auto.offset.reset", "earliest")

// properties.put("auto.offset.reset","latest");

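// Flink's Kafka consumer always uses its own byte-array deserializers and decodes records

// with the schema passed to the constructor, so the next two settings are overridden.

properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")

properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")

// Create the consumer. Arguments: (topic, deserialization schema, consumer configuration)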

val consumer = new FlinkKafkaConsumer011[String]("topic1", new SimpleStringSchema(), properties)

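consumer.setStartFromEarliest() // start from the earliest offset

/**

* Question: with this setting, why does every restart of the job consume all the old messages again?

* setStartFromEarliest() ignores the offsets committed for the consumer group, so a job that is started fresh (not restored from a checkpoint or savepoint) rereads the whole topic.

* In real deployments this is usually unwanted; use setStartFromGroupOffsets() (the default) or restart from a savepoint instead.

*/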

// Create a stream with the Kafka consumer as the source

val stream: DataStream[String] = env.addSource(consumer).setParallelism(1) // source parallelism set to 1

// val kStream: KeyedStream[(String, Int), Tuple] = stream.map((_, 1)).keyBy(0)

// val data = kStream.timeWindow(Time.seconds(5)).reduce((x, y) => (x._1, x._2 + y._2))

// data.print()

// Processing logic: keep only the messages equal to "a", append a random number, then write them to Kafka

val filterStream = stream.filter("a".equals(_)).map(_ + "_" + Random.nextLong())

// Producer configuration

val props = new Properties

props.put("bootstrap.servers", "hadoop02:9092,hadoop03:9092,hadoop04:9092")

// Create the producer. Arguments: (topic, serialization schema, producer configuration)

val producer = new FlinkKafkaProducer011[String]("flinkFilter", new SimpleStringSchema(), props)

filterStream.print()

// Use the Kafka producer as the sink

filterStream.addSink(producer)

env.execute("flink_kafka")

}

}
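On the restart question raised in the comments above: the start-position setters only apply when a job starts without restored state. The block below is a simplified pure-Scala model of that rule as I understand it from the Flink Kafka connector documentation, not Flink's actual code. `StartupMode`, `resolveStart` and the sample offsets are hypothetical names and values chosen for illustration.

```scala
// Simplified model of how a Flink Kafka consumer picks its first offset
// for one partition. The names here are illustrative, not Flink classes.
object StartOffsetModel {
  sealed trait StartupMode
  case object Earliest extends StartupMode     // models setStartFromEarliest()
  case object Latest extends StartupMode       // models setStartFromLatest()
  case object GroupOffsets extends StartupMode // models setStartFromGroupOffsets(), the default

  def resolveStart(
      mode: StartupMode,
      restoredFromState: Option[Long], // offset found in a checkpoint/savepoint, if the job was restored
      committedInKafka: Option[Long],  // offset committed for group.id, if any
      earliest: Long,
      latest: Long,
      resetToEarliest: Boolean         // stands in for auto.offset.reset
  ): Long =
    // Restored state always wins; the startup mode only matters on a fresh start.
    restoredFromState.getOrElse {
      mode match {
        case Earliest     => earliest
        case Latest       => latest
        case GroupOffsets => committedInKafka.getOrElse(if (resetToEarliest) earliest else latest)
      }
    }

  def main(args: Array[String]): Unit = {
    // Fresh start with Earliest: the committed offset 42 is ignored.
    println(resolveStart(Earliest, None, Some(42L), 0L, 100L, resetToEarliest = false)) // 0
    // Fresh start with GroupOffsets: resumes at the committed offset.
    println(resolveStart(GroupOffsets, None, Some(42L), 0L, 100L, resetToEarliest = false)) // 42
    // Restored from a savepoint: the stored offset is used regardless of the mode.
    println(resolveStart(Earliest, Some(57L), Some(42L), 0L, 100L, resetToEarliest = false)) // 57
  }
}
```

Under this model, stopping the job and starting it again from scratch with the earliest mode always rereads the topic, which matches the behaviour described in the comment.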

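Case 2: Reading from Kafka and writing to HBase

Flink does not support HBase as a sink by default, so a custom sink is needed (this project has not been run and still needs debugging). First add the Kafka connector and HBase dependencies: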

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.11_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<!-- The Scala suffix must match the connector's (2.11). -->

<artifactId>kafka_2.11</artifactId>

<version>1.0.0</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.3.1</version>

</dependency>

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.CheckpointingMode

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011

/**

* Flink job that uses Kafka as the source and HBase as the sink.

*/

object FlinkKafkaHbase {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.enableCheckpointing(2000, CheckpointingMode.EXACTLY_ONCE)

// Consumer configuration

val properties = new Properties

properties.put("bootstrap.servers", "hadoop02:9092,hadoop03:9092,hadoop04:9092")

properties.put("group.id", "g3")

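// Overridden by Flink's Kafka consumer, which always uses its own byte-array deserializers

properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")

properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")

// Create the consumer. Arguments: (topic, deserialization schema, consumer configuration)

val consumer = new FlinkKafkaConsumer011[String]("topic1", new SimpleStringSchema(), properties)

consumer.setStartFromEarliest() // start from the earliest offset

/**

* Question: with this setting, why does every restart of the job consume all the old messages again?

* setStartFromEarliest() ignores the committed group offsets, so a fresh start (without a checkpoint or savepoint) rereads the whole topic; in real deployments, prefer setStartFromGroupOffsets() or a savepoint.

*/

// Create a stream with the Kafka consumer as the source

val stream: DataStream[String] = env.addSource(consumer).setParallelism(1) // source parallelism set to 1

// Processing logic: keep only the messages that contain "student"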

val filterStream = stream.filter(_.contains("student"))

val hbaseSink = new HbaseSink("student","info")

filterStream.addSink(hbaseSink)

env.execute("flink_kafka_hbase")

}

}

HbaseSink code:

import org.apache.flink.configuration.Configuration

import org.apache.flink.streaming.api.functions.sink.{RichSinkFunction, SinkFunction}

import org.apache.hadoop.hbase.{HBaseConfiguration, HColumnDescriptor, HTableDescriptor, TableName}

import org.apache.hadoop.hbase.client._

import org.apache.hadoop.hbase.io.compress.Compression

import org.apache.hadoop.hbase.util.Bytes

/**

* Custom sink that writes records to HBase.

*/

class HbaseSink(tableName: String, family: String) extends RichSinkFunction[String]{

var hConnection:Connection = _

var hTable:HTable = _

// open() is called once before any invoke() call; use it for initialization

override def open(parameters:Configuration): Unit = {

super.open(parameters)

val conf = HBaseConfiguration.create()

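// ZooKeeper connection settings

// NOTE: the middle entry "162" is not a valid host; replace the list with the real quorum hosts

conf.set("hbase.zookeeper.quorum", "162.168.2.9,162,162.168.2.8")

conf.set("hbase.zookeeper.property.clientPort", "24002")

// Clusters that require Kerberos authentication also need this line

//conf.set("hbase.security.authentication","kerberos")

// Create the connection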

hConnection = ConnectionFactory.createConnection(conf)

// Create the table if it does not exist yet

val admin: Admin = hConnection.getAdmin

val table: TableName = TableName.valueOf(tableName)

if (admin.tableExists(table)) {

println("----table " + tableName + " already exists-----")

// To drop the table:

//admin.disableTable(table);

//admin.deleteTable(table);

} else {

val descriptor: HTableDescriptor = new HTableDescriptor(table)

val columnDescriptor: HColumnDescriptor = new HColumnDescriptor(family)

columnDescriptor.setCompactionCompressionType(Compression.Algorithm.SNAPPY)

descriptor.addFamily(columnDescriptor)

println("----creating table " + tableName + "-----")

admin.createTable(descriptor)

println("----table " + tableName + " created-----")

}

// The Admin handle is only needed during setup

admin.close()

// Get the table instance

hTable = hConnection.getTable(TableName.valueOf(tableName)).asInstanceOf[HTable]

// A 5 MB write buffer with auto-flush disabled makes the client batch writes to HBase (about 5 MB per flush)

hTable.setWriteBufferSize(5 * 1024 * 1024)

hTable.setAutoFlush(false, false)

}

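// The actual sink logic: called once per record

override def invoke(value: String, context: SinkFunction.Context[_]): Unit = {

// Parse the record; the expected format is rowKey:name:age

val arr = value.split(":")

val rowKey = arr(0)

val name = arr(1)

val age = arr(2)

val put: Put = new Put(Bytes.toBytes(rowKey))

// SKIP_WAL speeds up writes, but unflushed data is lost if a region server crashes

put.setDurability(Durability.SKIP_WAL)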

put.addColumn(Bytes.toBytes(family), Bytes.toBytes("name"), Bytes.toBytes(name))

put.addColumn(Bytes.toBytes(family), Bytes.toBytes("age"), Bytes.toBytes(age))

hTable.put(put)

}

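// close() is for cleanup, such as flushing buffers and closing connections

override def close(): Unit = {

if (hTable != null) hTable.close() // also flushes any buffered puts

if (hConnection != null) hConnection.close()

super.close()

}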

}
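The invoke method above indexes into the split array directly, so a message with fewer than three fields would throw an ArrayIndexOutOfBoundsException and fail the job. A minimal sketch of a more defensive parser in plain Scala follows. `Student` and `parseStudent` are hypothetical helpers, and the three-field `rowKey:name:age` format is inferred from the sink code rather than documented anywhere.

```scala
// Defensive parsing of "rowKey:name:age" records (format inferred from the sink code).
object StudentRecord {
  final case class Student(rowKey: String, name: String, age: String)

  // Returns None for malformed input instead of throwing.
  def parseStudent(line: String): Option[Student] =
    line.split(":", -1) match { // limit -1 keeps trailing empty fields
      case Array(rowKey, name, age) if rowKey.nonEmpty => Some(Student(rowKey, name, age))
      case _                                           => None
    }

  def main(args: Array[String]): Unit = {
    println(parseStudent("student001:Tom:18")) // Some(Student(student001,Tom,18))
    println(parseStudent("student002:Tom"))    // None
  }
}
```

Inside the sink, a `None` result could be logged and skipped, so that one bad message does not restart the whole job.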

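Case 3: Reading from Kafka and writing to MySQL

This case uses Flink SQL to interact with Kafka and MySQL, registering the source as a table. This project has not been run either. Note that the code relies on EnvironmentSettings and the Blink planner, which require Flink 1.9 or later rather than the 1.7.2 used in case 1.

The main difficulties are:

- Defining the rowtime attribute is cumbersome: does the raw field have to be a timestamp already, or can it be converted?

- How are Flink types converted?

- How are nested types handled?

- How is windowing expressed in SQL?

- Flink's time is UTC and cannot be changed, so it is 8 hours behind China time. How can this be worked around?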
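Two of these questions concern time handling. As far as I know from the Table API documentation, ExistingField accepts a LONG field holding epoch milliseconds (as well as SQL_TIMESTAMP and timestamp-formatted STRING fields), and the resulting rowtime is rendered in UTC. The sketch below uses only `java.time` to show why the same instant appears 8 hours apart; the sample timestamp and the `toLocal` helper are made up for illustration.

```scala
import java.time.{Instant, LocalDateTime, ZoneId, ZoneOffset}

object UtcShiftDemo {
  // Interpret epoch milliseconds as a wall-clock time in the given zone.
  def toLocal(epochMillis: Long, zone: ZoneId): LocalDateTime =
    LocalDateTime.ofInstant(Instant.ofEpochMilli(epochMillis), zone)

  def main(args: Array[String]): Unit = {
    val ts = 1572220800000L // hypothetical event time: 2019-10-28 00:00:00 UTC
    println(toLocal(ts, ZoneOffset.UTC))             // wall clock as Flink SQL would show it
    println(toLocal(ts, ZoneId.of("Asia/Shanghai"))) // the same instant, 8 hours later on the clock
  }
}
```

The instant itself is identical in both cases; only the rendering differs, which is why a fixed offset of 8*60*60 seconds is enough for a display-only workaround.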

To use the Flink Table API and Flink SQL, first add the table dependencies.

Project code:

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.table.api.scala.StreamTableEnvironment

import org.apache.flink.table.api.EnvironmentSettings

import org.apache.flink.table.descriptors.{Json, Kafka, Rowtime, Schema}

import org.apache.flink.api.common.typeinfo.Types

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.table.sources.tsextractors.ExistingField

object readkafka {

def main(args: Array[String]):Unit ={

// **********************

// BLINK STREAMING QUERY

// **********************

// get streaming runtime

val bsEnv = StreamExecutionEnvironment.getExecutionEnvironment

// set event time as stream time

bsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

// get blink streaming settings

val bsSettings = EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build()

// get streaming table runtime

val bsTableEnv = StreamTableEnvironment.create(bsEnv, bsSettings)

bsTableEnv

.connect(new Kafka()

.version("0.10")

.topic("student")

.property("bootstrap.servers","192.168.1.1:9092")

.property("group.id","test_20191025")

.property("zookeeper.connect","192.168.1.1:2222")

.startFromEarliest())

.withFormat(new Json()

.failOnMissingField(false)

.schema(Types.ROW_NAMED(

Array("stu_id", "name", "time", "register_time", "detail", "mf"),

Types.STRING,

Types.STRING,

Types.LONG,

Types.LONG,

// nested row; the field types must match the "detail" field declared in withSchema

Types.ROW_NAMED(

Array("age", "grade"),

Types.INT,

Types.INT),

Types.STRING)))

.withSchema(new Schema()

.field("stu_id", Types.STRING)

.field("name", Types.STRING)

.field("rowtime", Types.SQL_TIMESTAMP)

.rowtime(new Rowtime()

.timestampsFromExtractor(new ExistingField("time"))

.watermarksPeriodicBounded(5000))

.field("register_time", Types.LONG)

.field("detail", Types.ROW_NAMED(Array("age","grade"), Types.INT, Types.INT))

.field("gender",Types.STRING).from("mf")

)

.inAppendMode()

.registerTableSource("testKafkaSource")

bsTableEnv.sqlUpdate("CREATE TABLE testMysqlSink(" +

"stu_num bigint, avg_age double, tumble_start TIMESTAMP(3), tumble_end TIMESTAMP(3)" +

") WITH (" +

"'connector.type' = 'jdbc'," +

"'connector.url' = 'jdbc:mysql://192.168.1.1:3306/test?autoReconnect=true'," +

"'connector.table' = 'stu_20191023'," +

"'connector.driver' = 'com.mysql.jdbc.Driver'," +

"'connector.username' = '111111', " +

"'connector.password' = '111111'," +

"'connector.write.flush.max-rows' = '1'" +

")")

bsTableEnv.sqlUpdate("INSERT INTO testMysqlSink " +

"select " +

"count(distinct stu_id) as stu_num" +

",avg(cast(detail.age as double)) as avg_age" +

",cast(from_unixtime(unix_timestamp(cast(TUMBLE_START(rowtime, INTERVAL '1' MINUTE) as varchar)) + 8*60*60,'yyyy-MM-dd HH:mm:ss') as timestamp) as tumble_start" +

",cast(from_unixtime(unix_timestamp(cast(TUMBLE_END(rowtime, INTERVAL '1' MINUTE) as varchar)) + 8*60*60,'yyyy-MM-dd HH:mm:ss') as timestamp) as tumble_end " +

"from testKafkaSource " +

"group by TUMBLE(rowtime, INTERVAL '1' MINUTE)")

// set flink job name

bsTableEnv.execute("test_1028_1")

}

}
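On the windowing question: GROUP BY TUMBLE(rowtime, INTERVAL '1' MINUTE) places each row into one fixed, non-overlapping one-minute window. A rough plain-Scala model of that assignment is sketched below, assuming windows aligned to the epoch with no offset. `windowStart` is an illustrative helper and not a Flink API, and the event timestamps are made up.

```scala
// Rough model of tumbling-window assignment for non-negative epoch-millisecond timestamps.
object TumbleModel {
  val OneMinuteMs: Long = 60 * 1000L

  // Start of the tumbling window [start, start + sizeMs) that contains ts.
  def windowStart(ts: Long, sizeMs: Long): Long = ts - (ts % sizeMs)

  def main(args: Array[String]): Unit = {
    val events = Seq(1572220805000L, 1572220859999L, 1572220860000L)
    // Group events the way a one-minute TUMBLE would, then print the count per window.
    events.groupBy(windowStart(_, OneMinuteMs)).toSeq.sortBy(_._1).foreach {
      case (start, es) => println(s"[$start, ${start + OneMinuteMs}) -> ${es.size} event(s)")
    }
  }
}
```

The first two events share a window and the third opens the next one, because the window end is exclusive. TUMBLE_START and TUMBLE_END in the query correspond to the two bounds printed here.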