[pyspark] A Cool Hive-to-HBase Data Push Program

- Preface

- Result

- pyspark script

- Submit script

- Afterword

Preface

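First, a note: my Hive and HBase live in two physically isolated clusters. If both were in the same cluster environment, the job should in theory run faster.

The Spark program is written in Shell + Python. In client mode the output is highlighted so that the run is easier to follow (in cluster mode the output handling needs a small adjustment, otherwise you will hit class-not-found errors, which are caused by the lib jars).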
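The highlighting mentioned above is nothing more than ANSI escape sequences (SGR codes) written to the terminal. As a minimal sketch, assuming a terminal that understands them, the helper below wraps a string in the same kind of sequence the script's logger emits: `1` for bold, 30-37 for foreground colors, and the foreground code plus 10 for the matching background. The name `colorize` and its parameters are hypothetical and not part of the script.

```python
# Hypothetical helper illustrating the ANSI SGR codes used for highlighting.
RESET = "\033[0m"


def colorize(text, fg, bold=True, as_background=False):
    """Wrap text in an ANSI SGR sequence.

    fg is a foreground color code (30-37, e.g. 32 = green, 33 = yellow).
    With as_background=True the text is drawn in white (37) on that color,
    using the matching background code fg + 10 (e.g. 42 = green background).
    """
    codes = ["1"] if bold else []
    if as_background:
        codes += ["37", str(fg + 10)]
    else:
        codes.append(str(fg))
    return "\033[" + ";".join(codes) + "m" + text + RESET


if __name__ == "__main__":
    print(colorize("[INFO]", 32))                              # bold green text
    print(colorize(" job started ", 32, as_background=True))   # bold white on green
```

Keeping the escape codes in one helper like this makes it easy to switch them off when the output is redirected to a log file rather than a terminal.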

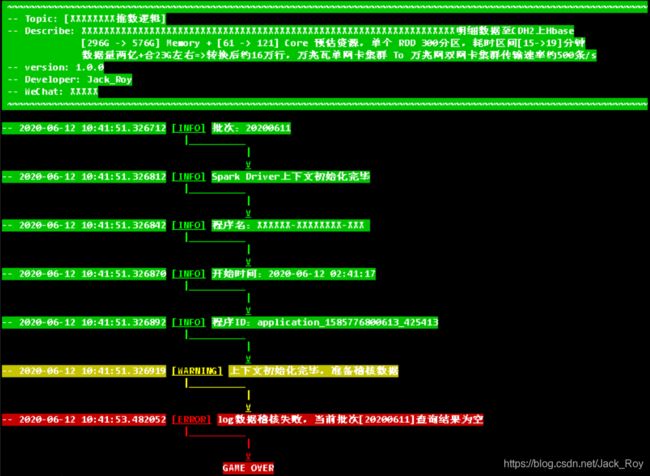

效果

pyspark script

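Let's go straight to the script. You need to change the zk address, your SQL logic, and your RDD transformation logic. Finally, note that pushing to HBase through this API requires a specific record format: [('row_key', ['row_key', 'column family', 'field1', 'field1 value ([dict])'])]: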

# coding=utf-8
import os
import sys
import json
import datetime

# Only SparkSession is actually used by this script
from pyspark.sql import SparkSession

# Tables to read (my logic here merges the data of these two tables)
TABLE1 = "table1"
TABLE2 = "table2"
# Timestamp format used when printing the start time
TEMP = "%Y-%m-%d %H:%M:%S"
# Your job name (anything you like)
APP_NAME = "Jack_Roy"
# Replace with your zk addresses
ZK_HOSTS = "172.0.0.10,172.0.0.11,172.0.0.12"
HBASE_TABLE_NAME = "hbase_table"
KEY_CONV = "org.apache.spark.examples.pythonconverters.StringToImmutableBytesWritableConverter"
VALUE_CONV = "org.apache.spark.examples.pythonconverters.StringListToPutConverter"
CONF = {
    "hbase.zookeeper.quorum": ZK_HOSTS,
    "hbase.mapred.outputtable": HBASE_TABLE_NAME,
    "mapreduce.outputformat.class": "org.apache.hadoop.hbase.mapreduce.TableOutputFormat",
    "mapreduce.job.output.key.class": "org.apache.hadoop.hbase.io.ImmutableBytesWritable",
    "mapreduce.job.output.value.class": "org.apache.hadoop.io.Writable",
}

def title():
    # Print the start-up banner
    rule = " ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~"
    local_log("START", 32, rule)
    local_log("START", 32, " -- Topic: [Test]")
    local_log("START", 32, " -- Describe: Push data to HBase")
    local_log("START", 32, " -- version: 1.0.0")
    local_log("START", 32, " -- Developer: Jack_Roy")
    local_log("START", 32, " -- WeChat: xxxxxxx")
    local_log("START", 32, rule)

def close_spark():
    # `spark` is the global session created in the main block
    spark.stop()


def get_dataframe(table_name):
    local_log("WARNING", 33, "Lazily loading dataframe: " + table_name)
    # Put your SQL query logic here
    df = spark.sql("SELECT * FROM " + table_name)
    return df

def next_punctuation(color):
    # Draw a small arrow pointing at the next log line
    print(" \033[1;" + str(color) + "m|__________\033[0m")
    print(" \033[1;" + str(color) + "m|\033[0m")
    print(" \033[1;4;" + str(color) + "mV\033[0m")


def local_log(msg, color, strs):
    # color is an ANSI foreground code (32 = green, 33 = yellow);
    # color + 10 is the matching background code
    bg = str(color + 10)
    if msg == "START":
        print("\033[1;37;" + bg + "m" + strs + "\033[0m")
    else:
        print("\033[1;37;" + bg + "m-- " + str(datetime.datetime.now()) + "\033[0m"
              + " \033[1;4;" + str(color) + "m[" + msg + "]\033[0m"
              + " \033[1;37;" + bg + "m" + strs + "\033[0m")
        next_punctuation(color)
        if msg == "ERROR":
            print(" \033[1;5;37;" + bg + "mGAME OVER\033[0m")
        elif msg == "SUCCESS":
            print(" \033[1;5;37;" + bg + "mGAME CLEARANCE\033[0m")

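def do_shell(shell):
    os.system(shell)


def df_to_rdd(df, code):
    # Put your own RDD operations here
    # Note: the final records must look like
    # [('row_key', ['row_key', 'column family', 'field1', 'field1 value ([dict])'])]
    # Both lambdas are placeholders (Python 3 syntax): replace each `...` with
    # your own mapping and merge logic before running
    rdd = df.rdd.map(lambda x: ...).reduceByKey(lambda x, y: ...)
    return rdd


def set_spark(sc, spark):
    sc.setLogLevel('ERROR')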

if __name__ == '__main__':
    # Batch date, passed as the first command-line argument
    VERSION_DATE = sys.argv[1]
    spark = SparkSession.builder.appName(APP_NAME).config("spark.sql.broadcastTimeout", "3600").enableHiveSupport().getOrCreate()
    sc = spark.sparkContext
    set_spark(sc, spark)
    APPLICATION_ID = sc.applicationId
    # sc.startTime is in milliseconds since the epoch
    START_TIME = datetime.datetime.utcfromtimestamp(sc.startTime / 1000).strftime(TEMP)
    do_shell("clear")
    title()
    do_shell("echo ''")
    local_log("INFO", 32, "Batch: " + str(VERSION_DATE))
    local_log("INFO", 32, "Spark driver context initialized")
    local_log("INFO", 32, "App name: " + str(APP_NAME))
    local_log("INFO", 32, "Start time: " + str(START_TIME))
    local_log("INFO", 32, "Application ID: " + str(APPLICATION_ID))
    # Get the dataframes
    df_1 = get_dataframe(TABLE1)
    df_2 = get_dataframe(TABLE2)
    local_log("INFO", 32, "Dataframes loaded, converting to rdd")
    local_log("WARNING", 33, "Triggering the action operator")
    rdd = df_to_rdd(df_1, "t2").union(df_to_rdd(df_2, "t2"))
    local_log("WARNING", 33, "rdd conversion finished, about to write to hbase")
    rdd.saveAsNewAPIHadoopDataset(conf=CONF, keyConverter=KEY_CONV, valueConverter=VALUE_CONV)
    local_log("SUCCESS", 32, "hbase write finished, job succeeded, stopping spark")
    close_spark()
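The record format required for the push is easiest to see on plain Python data before it goes anywhere near an RDD. The sketch below is an illustration only: `row_to_hbase_records` is a hypothetical helper, and the column family `cf` and the field names are made up. It assumes each record describes a single cell as `(row_key, [row_key, column_family, qualifier, value])` with every element a string, so a row with several fields becomes several records. Serializing dict values with `json.dumps` is one possible choice, not necessarily the one the original job used.

```python
import json


def row_to_hbase_records(row_key, column_family, fields):
    """Turn one logical row into a list of (key, [row, family, qualifier, value]) records."""
    records = []
    # Sort the qualifiers so the output order is deterministic
    for qualifier, value in sorted(fields.items()):
        if isinstance(value, (dict, list)):
            # Nested values are stored as a JSON string
            value = json.dumps(value, ensure_ascii=False, sort_keys=True)
        records.append(
            (str(row_key), [str(row_key), column_family, qualifier, str(value)])
        )
    return records


if __name__ == "__main__":
    for rec in row_to_hbase_records("user_001", "cf", {"name": "Jack", "tags": {"vip": True}}):
        print(rec)
```

Inside a Spark job a helper like this would typically be applied with `flatMap`, for example `df.rdd.flatMap(lambda r: row_to_hbase_records(r["id"], "cf", r.asDict()))`, where the `id` column is again an assumption.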

Submit script

There is not much to describe here. It is written in shell (you do need to track down the required jars!):

#!/bin/bash
export HADOOP_USER_NAME=hdfs
date
# Start timestamp
start_time=$(date +%Y-%m-%d-%H:%M:%S)
# Batch date (yesterday), passed to the pyspark script as its first argument
accdate=$(date -d '-1 day' +%Y%m%d)
lib_path=/opt/hbase_jars
# Find these jars in the spark and hbase lib directories and put them in /opt/hbase_jars or on hdfs
# --jars takes one comma-separated string, so there must be no spaces after the commas
lib_jars=\
$lib_path/spark-examples-******.jar,\
$lib_path/hbase-common-******.jar,\
$lib_path/hbase-client-******.jar,\
$lib_path/hbase-hadoop2-******.jar,\
$lib_path/hbase-annotations-******.jar,\
$lib_path/hbase-it-1.2.0-******.jar,\
$lib_path/hbase-prefix-tree-******.jar,\
$lib_path/hbase-procedure-******.jar,\
$lib_path/hbase-protocol-******.jar,\
$lib_path/hbase-resource-******.jar,\
$lib_path/hbase-rest-******.jar,\
$lib_path/hbase-server-******.jar,\
$lib_path/hbase-shell-******.jar,\
$lib_path/hbase-spark-******.jar,\
$lib_path/hbase-thrift-******.jar,\
$lib_path/htrace-core-******.jar
spark2-submit \
    --driver-memory 16G \
    --executor-memory 14G \
    --driver-cores 1 \
    --executor-cores 3 \
    --num-executors 20 \
    --conf spark.sql.shuffle.partitions=300 \
    --conf spark.shuffle.consolidateFiles=true \
    --conf spark.dynamicAllocation.maxExecutors=40 \
    --master yarn \
    --deploy-mode cluster \
    --name Jack_Roy \
    --jars "$lib_jars" \
    ./hive_to_hbase.py "$accdate"
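`--jars` takes a single comma-separated list with no spaces, which is easy to get wrong when the list is maintained by hand. As a rough alternative sketch (not what the submit script does), the list could be generated from the contents of the jar directory instead. `build_jars_arg` is a hypothetical helper, and it assumes every jar in the directory should be shipped with the job.

```python
import glob
import os


def build_jars_arg(lib_dir, pattern="*.jar"):
    """Return every jar under lib_dir joined by commas, sorted for stable output."""
    jars = sorted(glob.glob(os.path.join(lib_dir, pattern)))
    # No spaces around the commas: the result must be a single shell word
    return ",".join(jars)


if __name__ == "__main__":
    # /opt/hbase_jars is the directory used by the submit script
    print(build_jars_arg("/opt/hbase_jars"))
```

If this were saved as, say, `build_jars.py` (a made-up file name), its output could be captured in the shell with `lib_jars=$(python build_jars.py)` before calling spark2-submit.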

Afterword

After switching the submit mode to cluster, you may run into errors. My fix was to store the jars on hdfs so that they can be loaded dynamically in cluster mode.

If you have any questions, feel free to leave a comment below.