Kafka Java API Programming

1) Create the Kafka topic (fyy_topic)

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --create --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --replication-factor 3 --partitions 3 --topic fyy_topic

2) View the topic's information

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --describe --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --topic fyy_topic

3) Kafka producer and consumer

(1) Create a class for common Kafka configuration (KafkaProperties.java)

package com.fyy.spark.kafka;

/**

* @author fanyanyan

* @Title: KafkaProperties

* @ProjectName SparkStreamingProject

*/

/**

* Common Kafka configuration

*/

public class KafkaProperties {

public static final String ZK = "01.server.bd:2181,02.server.bd:2181,03.server.bd:2181";

public static final String TOPIC = "fyy_topic";

public static final String BROKER_LIST = "01.server.bd:9092,02.server.bd:9092,03.server.bd:9092";

public static final String GROUP_ID = "fyy_group1";

}

(2) Create the Kafka producer (KafkaProducer.java)

package com.fyy.spark.kafka;

/**

* @author fanyanyan

* @Title: KafkaProducer

* @ProjectName SparkStreamingProject

*/

import kafka.javaapi.producer.Producer;

import kafka.producer.KeyedMessage;

import kafka.producer.ProducerConfig;

import java.util.Properties;

/**

* Kafka producer

*/

public class KafkaProducer extends Thread{

private String topic;

private Producer<Integer, String> producer;

public KafkaProducer(String topic) {

this.topic = topic;

Properties properties = new Properties();

// Brokers the producer contacts to fetch cluster metadata

properties.put("metadata.broker.list",KafkaProperties.BROKER_LIST);

properties.put("serializer.class","kafka.serializer.StringEncoder");

// 1 = the partition leader acknowledges each write

properties.put("request.required.acks","1");

producer = new Producer<Integer, String>(new ProducerConfig(properties));

}

@Override

public void run() {

int messageNo = 1;

// Generate a stream of messages in a loop

while(true) {

String message = "fyy_message_" + messageNo;

producer.send(new KeyedMessage<Integer, String>(topic, message));

System.out.println("Sent: " + message);

messageNo ++ ;

try{

Thread.sleep(1000);

} catch (Exception e){

e.printStackTrace();

}

}

}

}

(3) Create the consumer (KafkaConsumer.java)

package com.fyy.spark.kafka;

/**

* @author fanyanyan

* @Title: KafkaConsumer

* @ProjectName SparkStreamingProject

*/

import kafka.consumer.Consumer;

import kafka.consumer.ConsumerConfig;

import kafka.consumer.ConsumerIterator;

import kafka.javaapi.consumer.ConsumerConnector;

import kafka.consumer.KafkaStream;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

/**

* Kafka consumer

*/

public class KafkaConsumer extends Thread{

private String topic;

public KafkaConsumer(String topic){

this.topic = topic;

}

private ConsumerConnector createConnector(){

Properties properties = new Properties();

properties.put("zookeeper.connect", KafkaProperties.ZK);

properties.put("group.id",KafkaProperties.GROUP_ID);

return Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));

}

@Override

public void run() {

ConsumerConnector consumer = createConnector();

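// Request one stream (one consuming thread) for the topic

Map<String, Integer> topicCountMap = new HashMap<String, Integer>();

topicCountMap.put(topic, 1);

Map<String, List<KafkaStream<byte[], byte[]>>> messageStream = consumer.createMessageStreams(topicCountMap);

KafkaStream<byte[], byte[]> stream = messageStream.get(topic).get(0);

// Read each message as it arrives

ConsumerIterator<byte[], byte[]> iterator = stream.iterator();

while (iterator.hasNext()){

String message = new String(iterator.next().message());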

System.out.println("rec: " + message);

}

}

}
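The producer and consumer above use the older Scala clients (kafka.javaapi.producer.Producer and the ZooKeeper-based ConsumerConnector), which were removed in later Kafka releases. As a rough sketch of how the same settings might be expressed for the Java clients in org.apache.kafka.clients, the class below builds the two Properties objects using only the JDK. The class name NewClientConfigSketch and its method names are hypothetical, and the choice of string (de)serializers is an assumption that mirrors the StringEncoder used above.

```java
import java.util.Properties;

public class NewClientConfigSketch {

    // Same values as KafkaProperties.BROKER_LIST and KafkaProperties.GROUP_ID above.
    static final String BROKER_LIST = "01.server.bd:9092,02.server.bd:9092,03.server.bd:9092";
    static final String GROUP_ID = "fyy_group1";

    /** Settings intended for org.apache.kafka.clients.producer.KafkaProducer. */
    public static Properties producerProps() {
        Properties p = new Properties();
        // Takes the place of metadata.broker.list
        p.put("bootstrap.servers", BROKER_LIST);
        // Take the place of serializer.class (assumption: keys and values are strings)
        p.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Takes the place of request.required.acks
        p.put("acks", "1");
        return p;
    }

    /** Settings intended for org.apache.kafka.clients.consumer.KafkaConsumer. */
    public static Properties consumerProps() {
        Properties p = new Properties();
        // The Java consumer connects to the brokers rather than to ZooKeeper
        p.put("bootstrap.servers", BROKER_LIST);
        p.put("group.id", GROUP_ID);
        p.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        p.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return p;
    }

    public static void main(String[] args) {
        System.out.println("producer acks = " + producerProps().getProperty("acks"));
        System.out.println("consumer group = " + consumerProps().getProperty("group.id"));
    }
}
```

These Properties objects would be passed to the constructors of the Java client classes; doing so requires the kafka-clients dependency, which this sketch deliberately leaves out.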

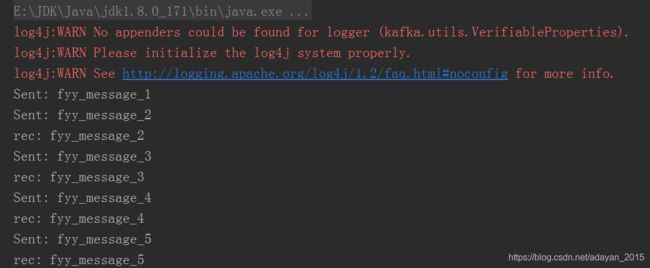
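One detail of the producer thread above: its run() loop is while(true), and the catch block swallows the InterruptedException thrown by Thread.sleep, so the thread cannot be stopped short of ending the JVM. The following is a minimal sketch of an interruptible version of the same loop. It uses only the JDK, and the sender callback is a hypothetical stand-in for producer.send(...); the class name StoppableLoopSketch and the helper runBriefly are likewise made up for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

public class StoppableLoopSketch extends Thread {

    // Hypothetical stand-in for producer.send(new KeyedMessage<...>(topic, message))
    private final Consumer<String> sender;
    private final long pauseMillis;

    public StoppableLoopSketch(Consumer<String> sender, long pauseMillis) {
        this.sender = sender;
        this.pauseMillis = pauseMillis;
    }

    @Override
    public void run() {
        int messageNo = 1;
        // Loop until another thread calls interrupt() on this one
        while (!Thread.currentThread().isInterrupted()) {
            sender.accept("fyy_message_" + messageNo++);
            try {
                Thread.sleep(pauseMillis);
            } catch (InterruptedException e) {
                // sleep() clears the interrupt flag when it throws;
                // restore it so the loop condition ends the loop
                Thread.currentThread().interrupt();
            }
        }
    }

    /** Runs the loop for roughly the given time, stops it, and returns what was "sent". */
    public static List<String> runBriefly(long millis) {
        List<String> sent = Collections.synchronizedList(new ArrayList<String>());
        StoppableLoopSketch worker = new StoppableLoopSketch(sent::add, 10);
        worker.start();
        try {
            Thread.sleep(millis);
            worker.interrupt();
            worker.join();
        } catch (InterruptedException e) {
            worker.interrupt();
            Thread.currentThread().interrupt();
        }
        return sent;
    }

    public static void main(String[] args) {
        List<String> sent = runBriefly(100);
        System.out.println("first message: " + sent.get(0));
        System.out.println("messages sent before stop: " + sent.size());
    }
}
```

In the real producer, the same pattern would let the entry program call interrupt() on the thread and then close the producer, instead of relying on the process being killed.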

(4) Entry-point program (KafkaTestApp.java)

package com.fyy.spark.kafka;

import com.fyy.spark.kafka.KafkaConsumer;

/**

* @author fanyanyan

* @Title: KafkaTestApp

* @ProjectName SparkStreamingProject

*/

/**

* Kafka Java API test

*/

public class KafkaTestApp {

public static void main(String[] args) {

// Producer

new KafkaProducer(KafkaProperties.TOPIC).start();

// Consumer

new KafkaConsumer(KafkaProperties.TOPIC).start();

}

}

4) Some commonly used Kafka command-line operations

# List topics

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --list --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181

# Create a topic

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --create --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --replication-factor 3 --partitions 3 --topic fyy_topic

# Delete a topic (requires delete.topic.enable=true in server.properties)

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --delete --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --topic fyy_topic

# Write data to a Kafka topic (console producer)

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-console-producer.sh --broker-list 01.server.bd:9092,02.server.bd:9092,03.server.bd:9092 --topic fyy_topic

# Start a console consumer

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-console-consumer.sh --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --topic fyy_topic --from-beginning

# Describe the topic

/home/opt/kafka_2.11-0.10.2.2/bin/kafka-topics.sh --describe --zookeeper 01.server.bd:2181,02.server.bd:2181,03.server.bd:2181 --topic fyy_topic