Adding and Removing HDFS Nodes

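Preparation:

- Create a brand-new virtual machine

- Disable the firewall

- Set the hostname

- Map IP addresses to hostnames (aliases)

- Disable SELinux

- Set up passwordless SSH login

- Copy the following from the master node to this VM: the JDK, the Hadoop installation, and the JDK and Hadoop configuration files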
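The "map IP addresses to hostnames" step has two directions. Every existing machine must be able to resolve node04, and node04 must be able to resolve the others. Below is a minimal sketch that assumes names are resolved through /etc/hosts rather than DNS. add_host_alias is a hypothetical helper, not part of Hadoop. It appends an "IP hostname" line only when the hostname is not mapped yet, so rerunning it is harmless.

```bash
#!/usr/bin/env bash
# add_host_alias IP HOST [FILE]
# Hypothetical helper: append "IP HOST" to a hosts file (default
# /etc/hosts, which needs root) unless HOST is already mapped there.
add_host_alias() {
  local ip=$1 host=$2 file=${3:-/etc/hosts}
  # Skip comment lines; fields 2..NF are names. Exit 0 if HOST is found.
  if awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    return 0  # already mapped, nothing to do
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}
```

For example, run `add_host_alias <IP-of-node04> node04` as root on each existing node, replacing the placeholder with the real address. Add the existing nodes' entries on node04 the same way.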

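Contents

- 1. Adding a node

- 1.1 Create the dfs.hosts file on the master node

- 1.2 Edit hdfs-site.xml on node01 and add the configuration

- 1.3 Refresh the NameNode and the ResourceManager

- 1.4 Add the new node's hostname to the NameNode's slaves file

- 1.5 Start the new node's daemons individually

- 1.6 Check in the browser

- 1.7 Run the balancer so data is spread evenly across all machines

- 2. Removing a node

- 2.1 Create the dfs.hosts.exclude file

- 2.2 Edit hdfs-site.xml on the NameNode machine

- 2.3 Refresh the NameNode and the ResourceManager

- 2.4 Once decommissioning completes, stop the node's daemons

- 2.5 Remove the decommissioned node from the include file

- 2.6 Remove the decommissioned node from the NameNode's slaves file

- 2.7 If data is unevenly distributed, run the balancer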

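1. Adding a node

1.1 Create the dfs.hosts file on the master node

On node01 (the machine running the NameNode), create a dfs.hosts file in the /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop directory. Add the following hostnames, including the newly commissioned node:

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim dfs.hosts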

node01

node02

node03

node04
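Editing dfs.hosts by hand works. When nodes are commissioned over time, though, an idempotent edit avoids duplicate entries. Below is a minimal sketch that assumes one hostname per line; ensure_host_listed is a hypothetical helper name. It appends the host only if an identical line is not already present. The same helper could also be used for the slaves file in step 1.4.

```bash
#!/usr/bin/env bash
# ensure_host_listed FILE HOST
# Hypothetical helper: append HOST to a one-hostname-per-line file
# (dfs.hosts, slaves, ...) unless a line with exactly that text exists.
# Creates FILE if needed and adds a missing final newline before appending.
ensure_host_listed() {
  local file=$1 host=$2
  touch -- "$file" || return 1
  # -q quiet, -x whole-line match, -F literal string (dots are not regex)
  grep -qxF -- "$host" "$file" && return 0
  # $(tail -c 1) is empty when the file is empty or ends in a newline
  [ -n "$(tail -c 1 -- "$file")" ] && printf '\n' >> "$file"
  printf '%s\n' "$host" >> "$file"
}
```

For example, running `ensure_host_listed dfs.hosts node04` in the etc/hadoop directory twice still leaves a single node04 line.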

1.2 Edit hdfs-site.xml on node01 and add the configuration

On node01, run:

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim hdfs-site.xml

Add the following property inside the `<configuration>` element:

<property>
    <name>dfs.hosts</name>
    <value>/export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/dfs.hosts</value>
</property>
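A typo in the property name or path fails silently. It can help to read the value back from the file. Below is a minimal sketch; conf_value is a hypothetical helper, and it does a plain text scan that assumes each `<name>` and `<value>` tag sits on one line. On a machine with the Hadoop client, `hdfs getconf -includeFile` (and `-excludeFile` for step 2.2) should print what Hadoop itself resolves, which is the more authoritative check.

```bash
#!/usr/bin/env bash
# conf_value FILE KEY
# Hypothetical helper: print the <value> of the <property> whose <name>
# is KEY in a Hadoop *-site.xml file; fail if KEY is not found. This is
# a text scan, not an XML parser: it assumes each <name>/<value> tag is
# on a single line and does not understand XML comments or XInclude.
conf_value() {
  awk -v key="$2" '
    /<name>/ {
      name = $0
      sub(/.*<name>[ \t]*/, "", name)
      sub(/[ \t]*<\/name>.*/, "", name)
    }
    /<value>/ && name == key {
      value = $0
      sub(/.*<value>[ \t]*/, "", value)
      sub(/[ \t]*<\/value>.*/, "", value)
      print value
      found = 1
      exit
    }
    /<\/property>/ { name = "" }
    END { exit !found }
  ' "$1"
}
```

For example, run `conf_value hdfs-site.xml dfs.hosts` in the etc/hadoop directory.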

1.3 Refresh the NameNode and the ResourceManager

On node01, run the following to refresh:

[root@node01 hadoop]# hdfs dfsadmin -refreshNodes

Refresh nodes successful

[root@node01 hadoop]# yarn rmadmin -refreshNodes

19/03/16 11:19:47 INFO client.RMProxy: Connecting to ResourceManager at node01/192.168.52.100:8033
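The two refresh commands belong together, and the second is only useful if the first succeeded. Below is a minimal sketch; refresh_nodes is a hypothetical wrapper name. Note that `yarn rmadmin -refreshNodes` re-reads the files named by yarn.resourcemanager.nodes.include-path and yarn.resourcemanager.nodes.exclude-path in yarn-site.xml. The dfs.hosts files configured above only govern HDFS.

```bash
#!/usr/bin/env bash
# refresh_nodes
# Hypothetical wrapper: make the NameNode, then the ResourceManager,
# re-read their include/exclude host lists. Stops at the first failure.
# Run on node01 (NameNode and ResourceManager host in this article).
refresh_nodes() {
  hdfs dfsadmin -refreshNodes || { echo "HDFS refresh failed" >&2; return 1; }
  yarn rmadmin -refreshNodes  || { echo "YARN refresh failed" >&2; return 1; }
}
```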

1.4 Add the new node's hostname to the NameNode's slaves file

On node01, run the following to edit the slaves file:

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim slaves

node01

node02

node03

node04
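Hadoop does not require slaves and dfs.hosts to match. The slaves file is only read by start/stop scripts such as start-dfs.sh, while dfs.hosts controls which DataNodes the NameNode accepts. In this setup, however, both files list the same machines, so a quick consistency check can catch a forgotten edit. Below is a minimal sketch; same_hosts is a hypothetical helper. It compares the two files as sets, ignoring order, blank lines, "#" comments and surrounding spaces.

```bash
#!/usr/bin/env bash
# same_hosts FILE_A FILE_B
# Hypothetical helper: succeed if both host-list files name the same set
# of hosts; otherwise print the names found in only one file and fail.
same_hosts() {
  awk '
    { sub(/#.*/, ""); gsub(/^[ \t]+|[ \t]+$/, "") }
    $0 == "" { next }
    FILENAME == ARGV[1] { a[$0] = 1; next }
    { b[$0] = 1 }
    END {
      for (h in a) if (!(h in b)) { print "only in first:  " h; diff = 1 }
      for (h in b) if (!(h in a)) { print "only in second: " h; diff = 1 }
      exit diff
    }
  ' "$1" "$2"
}
```

For example, run `same_hosts dfs.hosts slaves` in the etc/hadoop directory.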

1.5 Start the new node's daemons individually

On node04, run the following to start the DataNode and the NodeManager:

[root@node04 hadoop-2.6.0-cdh5.14.0]# cd /export/servers/hadoop-2.6.0-cdh5.14.0

[root@node04 hadoop-2.6.0-cdh5.14.0]# sbin/hadoop-daemon.sh start datanode

starting datanode, logging to /export/servers/hadoop-2.6.0-cdh5.14.0/logs/hadoop-root-datanode-node04.out

[root@node04 hadoop-2.6.0-cdh5.14.0]# sbin/yarn-daemon.sh start nodemanager

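nodemanager running as process 2097. Stop it first.

This message means a NodeManager was already running on node04 (PID 2097), so the script did not start a second one.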

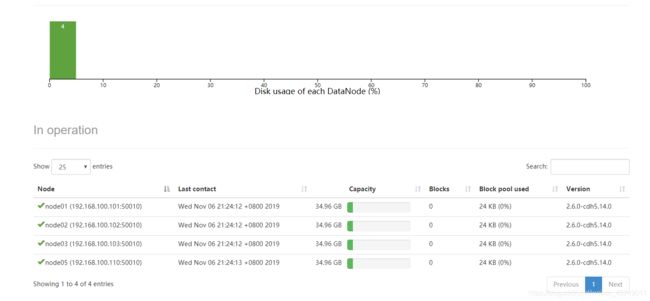

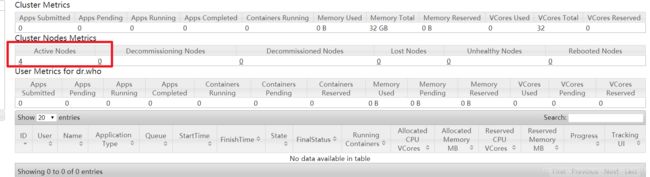
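To confirm from the shell that both daemons are up on node04, you can use jps (shipped with the JDK). It lists running JVMs as "PID MainClass". Below is a minimal sketch; daemon_pid is a hypothetical helper that looks up a daemon by its main class name.

```bash
#!/usr/bin/env bash
# daemon_pid NAME
# Hypothetical helper: print the PID of the running JVM whose main class
# is NAME (e.g. DataNode, NodeManager) according to jps; fail if absent.
daemon_pid() {
  jps | awk -v n="$1" '$2 == n { print $1; found = 1 } END { exit !found }'
}
```

For example, on node04, `daemon_pid DataNode && daemon_pid NodeManager` succeeds only when both daemons are running.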

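1.6 Check in the browser

HDFS NameNode web UI (node04 should appear among the live DataNodes): http://node01:50070/dfshealth.html#tab-overview

YARN ResourceManager web UI (node04 should appear among the NodeManagers): http://node01:8088/cluster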
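The same check can be done without a browser. `hdfs dfsadmin -report` lists DataNodes and `yarn node -list` lists NodeManagers. Below is a minimal sketch; live_hosts is a hypothetical helper. It assumes the Hadoop 2.x report layout, where the live section starts with a line such as "Live datanodes (4):" and each node has a "Hostname:" line. Check this against your version's actual output.

```bash
#!/usr/bin/env bash
# live_hosts
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print the hostname of every DataNode in the "Live datanodes" section.
# The section markers and "Hostname:" lines are assumed (Hadoop 2.x style).
live_hosts() {
  awk '
    /^Live datanodes/ { live = 1; next }
    /^Dead datanodes/ { live = 0 }
    live && /^Hostname:/ { print $2 }
  '
}
```

For example, on node01, `hdfs dfsadmin -report | live_hosts` should include node04 after step 1.5.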

1.7 Run the balancer so data is spread evenly across all machines

On node01, run:

[root@node01 sbin]# cd /export/servers/hadoop-2.6.0-cdh5.14.0

[root@node01 hadoop-2.6.0-cdh5.14.0]# sbin/start-balancer.sh

starting balancer, logging to /export/servers/hadoop-2.6.0-cdh5.14.0/logs/hadoop-root-balancer-node01.out

Time Stamp Iteration# Bytes Already Moved Bytes Left To Move Bytes Being Moved
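start-balancer.sh passes its arguments on to the HDFS balancer. The balancer's -threshold option (a percentage, 10 by default) decides when the cluster counts as balanced. Each DataNode's utilization (used / capacity) must lie within that many percentage points of the whole cluster's utilization, so `sbin/start-balancer.sh -threshold 5` balances more tightly. The sketch below only illustrates that rule on made-up numbers and does not talk to HDFS. The function name unbalanced_nodes and its "host used capacity" input format are assumptions of this example, and the real balancer's bookkeeping is more detailed.

```bash
#!/usr/bin/env bash
# unbalanced_nodes THRESHOLD
# Illustration only (hypothetical helper): read "host used capacity"
# lines (same unit) on stdin and print each host whose utilization
# differs from the cluster-wide utilization (total used / total
# capacity) by more than THRESHOLD percentage points.
unbalanced_nodes() {
  awk -v t="$1" '
    { host[NR] = $1; used[NR] = $2; cap[NR] = $3; U += $2; C += $3 }
    END {
      if (C == 0) exit 1
      avg = 100 * U / C
      for (i = 1; i <= NR; i++) {
        if (cap[i] == 0) continue
        util = 100 * used[i] / cap[i]
        d = util - avg
        if (d < 0) d = -d
        if (d > t + 0) printf "%s %.1f%% (cluster %.1f%%)\n", host[i], util, avg
      }
    }
  '
}
```

Consider three nodes at 50/100 and a freshly added, empty node04 at 0/100. Cluster utilization is 37.5%. A threshold of 15 flags only node04. The default threshold of 10 also flags the three full nodes, because 50% is 12.5 points above the cluster figure.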

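2. Removing a node

2.1 Create the dfs.hosts.exclude file

On the NameNode machine, create a dfs.hosts.exclude file in the /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop directory. List the hostnames of the nodes to decommission in it.

On node01, run: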

[root@node01 sbin]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim dfs.hosts.exclude

node04
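The note in step 2.4 says decommissioning stalls if too few DataNodes remain to hold every replica. That can be checked before the exclude file takes effect. Below is a minimal sketch; can_decommission is a hypothetical helper. It assumes dfs.hosts lists every DataNode, one hostname per line without comments. It also assumes no file uses a higher replication factor than the one passed in.

```bash
#!/usr/bin/env bash
# can_decommission INCLUDE EXCLUDE REPLICATION
# Hypothetical helper: count hosts that stay in service (in INCLUDE but
# not in EXCLUDE) and succeed only if at least REPLICATION remain, since
# HDFS cannot keep REPLICATION copies of a block on fewer DataNodes.
can_decommission() {
  local include=$1 exclude=$2 repl=$3 remaining
  # -v invert, -x whole line, -F literal, -f patterns from EXCLUDE;
  # the second grep counts the non-blank lines that are left
  remaining=$(grep -vxF -f "$exclude" "$include" | grep -c -v '^[[:space:]]*$')
  echo "in service after decommission: $remaining (replication $repl)"
  [ "$remaining" -ge "$repl" ]
}
```

For example, run `can_decommission dfs.hosts dfs.hosts.exclude 3` in the etc/hadoop directory. With node01 to node04 included and node04 excluded, 3 nodes remain, so it succeeds.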

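2.2 Edit hdfs-site.xml on the NameNode machine

Edit the hdfs-site.xml file on the machine running the NameNode, and add the following property inside the `<configuration>` element.

On node01, run: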

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim hdfs-site.xml

<property>
    <name>dfs.hosts.exclude</name>
    <value>/export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/dfs.hosts.exclude</value>
</property>

2.3 Refresh the NameNode and the ResourceManager

On the NameNode machine, run the following to refresh the NameNode and the ResourceManager:

[root@node01 hadoop]# hdfs dfsadmin -refreshNodes

Refresh nodes successful

[root@node01 hadoop]# yarn rmadmin -refreshNodes

19/11/08 00:10:30 INFO client.RMProxy: Connecting to ResourceManager at node01/192.168.100.101:8033

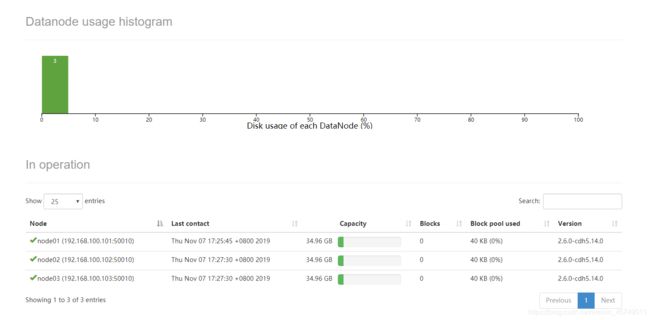

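2.4 Once decommissioning completes, stop the node's daemons

Wait until the node being retired shows the status Decommissioned, which means all of its blocks have been copied to other DataNodes. Then stop its DataNode and NodeManager. Note: with a replication factor of 3, decommissioning cannot succeed when 3 or fewer DataNodes are in service. The remaining nodes could not hold 3 replicas of every block, so lower the replication factor first.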

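On node04 (the node being decommissioned), run the following to stop its daemons. hadoop-daemon.sh and yarn-daemon.sh only act on the local machine.

[root@node04 hadoop-2.6.0-cdh5.14.0]# cd /export/servers/hadoop-2.6.0-cdh5.14.0

[root@node04 hadoop-2.6.0-cdh5.14.0]# sbin/hadoop-daemon.sh stop datanode

stopping datanode

[root@node04 hadoop-2.6.0-cdh5.14.0]# sbin/yarn-daemon.sh stop nodemanager

stopping nodemanager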
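Waiting for the Decommissioned status can be scripted instead of refreshing the web UI. Below is a minimal sketch; decom_status and wait_decommissioned are hypothetical helpers. It assumes the Hadoop 2.x `hdfs dfsadmin -report` layout, where each node has a "Hostname:" line followed later by "Decommission Status : ..." (values such as Normal, Decommission in progress, Decommissioned). Verify this against your version's output.

```bash
#!/usr/bin/env bash
# decom_status HOST
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print the "Decommission Status" of the DataNode whose Hostname is HOST.
decom_status() {
  awk -v h="$1" '
    /^Hostname:/ { cur = $2 }
    /^Decommission Status *:/ && cur == h {
      sub(/^Decommission Status *: */, "")
      print
      found = 1
      exit
    }
    END { exit !found }
  '
}

# wait_decommissioned HOST [INTERVAL_SECONDS]
# Poll the NameNode until HOST reports "Decommissioned". This loops
# forever if the status never changes (see the replication note above),
# so interrupt it with Ctrl-C in that case.
wait_decommissioned() {
  local host=$1 interval=${2:-30} status
  while :; do
    status=$(hdfs dfsadmin -report | decom_status "$host")
    echo "$(date '+%F %T') $host: ${status:-not found}"
    [ "$status" = "Decommissioned" ] && return 0
    sleep "$interval"
  done
}
```

For example, run `wait_decommissioned node04 60` on node01, and stop the daemons on node04 only after it returns.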

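2.5 Remove the decommissioned node from the include file

On node01 (the NameNode machine), run the following to remove the decommissioned node from dfs.hosts: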

[root@node01 hadoop-2.6.0-cdh5.14.0]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim dfs.hosts

node01

node02

node03

Then, still on node01, refresh the NameNode and the ResourceManager:

[root@node01 hadoop]# hdfs dfsadmin -refreshNodes

Refresh nodes successful

[root@node01 hadoop]# yarn rmadmin -refreshNodes

19/11/08 00:20:23 INFO client.RMProxy: Connecting to ResourceManager at node01/192.168.100.101:8033
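Removing a host by hand in vim is error-prone when the lists are long. The same edit can be scripted for dfs.hosts here and for the slaves file in step 2.6. Below is a minimal sketch; remove_host is a hypothetical helper. It deletes only lines that exactly match the hostname and keeps a .bak copy of the previous version.

```bash
#!/usr/bin/env bash
# remove_host FILE HOST
# Hypothetical helper: delete every line that is exactly HOST from a
# one-hostname-per-line file (dfs.hosts, slaves, ...), saving the old
# version as FILE.bak. The result is written back with cat so FILE
# keeps its owner and permissions.
remove_host() {
  local file=$1 host=$2 tmp
  tmp=$(mktemp) || return 1
  cp -p -- "$file" "$file.bak" || { rm -f -- "$tmp"; return 1; }
  # grep exits 1 when no lines are left over; only >1 is a real error
  grep -vxF -- "$host" "$file" > "$tmp" || [ $? -eq 1 ] || { rm -f -- "$tmp"; return 1; }
  cat -- "$tmp" > "$file"
  rm -f -- "$tmp"
}
```

For example, run `remove_host dfs.hosts node04 && remove_host slaves node04` in the etc/hadoop directory, then refresh the NameNode and the ResourceManager as above.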

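2.6 Remove the decommissioned node from the NameNode's slaves file

On node01 (the NameNode machine), run the following to remove the decommissioned node from the slaves file: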

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop

[root@node01 hadoop]# vim slaves

node01

node02

node03

2.7 If data is unevenly distributed, run the balancer

On node01, run the following to rebalance the data:

[root@node01 hadoop]# cd /export/servers/hadoop-2.6.0-cdh5.14.0/

[root@node01 hadoop-2.6.0-cdh5.14.0]# sbin/start-balancer.sh

starting balancer, logging to /export/servers/hadoop-2.6.0-cdh5.14.0/logs/hadoop-root-balancer-node01.out

Time Stamp Iteration# Bytes Already Moved Bytes Left To Move Bytes Being Moved