Fixing IntelliJ IDEA when it cannot create a Scala Class

- The problem

- 1: pom.xml

- 2: Installing Scala on Windows

- 3: Global Libraries

The problem

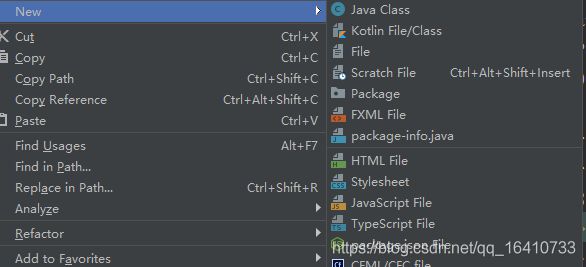

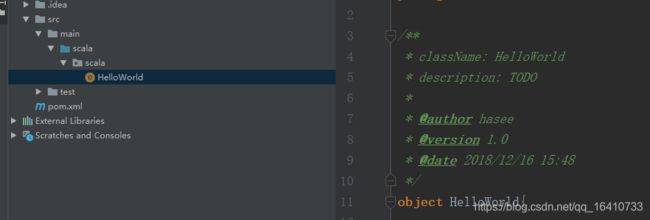

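While learning Scala I was following the steps of a course, but when I right-clicked and chose New, there was no Scala Class entry in the menu. Below are the steps I went through to solve the problem.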

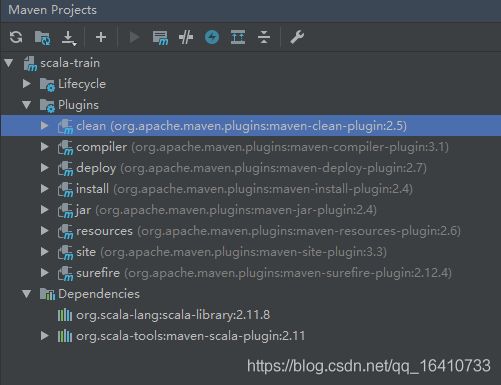

1: pom.xml

Because the project uses Maven, my first thought was that I had imported the wrong dependencies, so I checked the Maven Projects panel:

That told me nothing useful, so I simply copied a full pom.xml from a project on GitHub:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>scala</groupId>

<artifactId>scala-train</artifactId>

<version>1.0-SNAPSHOT</version>

<inceptionYear>2008</inceptionYear>

<properties>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<encoding>UTF-8</encoding>

<spark.version>2.1.1</spark.version>

<scala.version>2.11.8</scala.version>

<scala.compat.version>2.11</scala.compat.version>

</properties>

<repositories>

<repository>

<id>scala-tools.org</id>

<name>Scala-Tools Maven2 Repository</name>

<url>http://scala-tools.org/repo-releases</url>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>scala-tools.org</id>

<name>Scala-Tools Maven2 Repository</name>

<url>http://scala-tools.org/repo-releases</url>

</pluginRepository>

</pluginRepositories>

<dependencies>

<!-- Scala lang, spark core and spark sql are all

scoped as provided as spark-submit will provide these -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_${scala.compat.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_${scala.compat.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.5</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.clapper</groupId>

<artifactId>grizzled-slf4j_${scala.compat.version}</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>com.typesafe</groupId>

<artifactId>config</artifactId>

<version>1.3.2</version>

</dependency>

<!-- Test Scopes -->

<dependency>

<groupId>org.scalactic</groupId>

<artifactId>scalactic_${scala.compat.version}</artifactId>

<version>3.0.5</version>

</dependency>

<dependency>

<groupId>org.scalatest</groupId>

<artifactId>scalatest_${scala.compat.version}</artifactId>

<version>3.0.5</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<sourceDirectory>src/main/scala</sourceDirectory>

<testSourceDirectory>src/test/scala</testSourceDirectory>

<plugins>

<plugin>

<!-- see http://davidb.github.com/scala-maven-plugin -->

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.3.1</version>

<configuration>

<compilerPlugins>

<compilerPlugin>

<groupId>com.artima.supersafe</groupId>

<artifactId>supersafe_${scala.version}</artifactId>

<version>1.1.3</version>

</compilerPlugin>

</compilerPlugins>

</configuration>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

<configuration>

<args>

<arg>-feature</arg>

<arg>-deprecation</arg>

<arg>-dependencyfile</arg>

<arg>${project.build.directory}/.scala_dependencies</arg>

</args>

</configuration>

</execution>

</executions>

</plugin>

<!-- disable surefire -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.18.1</version>

<configuration>

<skipTests>true</skipTests>

<useFile>false</useFile>

<disableXmlReport>true</disableXmlReport>

<includes>

<include>**/*Test.*</include>

<include>**/*Suite.*</include>

</includes>

</configuration>

</plugin>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<version>1.5.0</version>

<executions>

<execution>

<id>run-local</id>

<goals>

<goal>exec</goal>

</goals>

<configuration>

<executable>spark-submit</executable>

<arguments>

<argument>--master</argument>

<argument>local</argument>

<argument>${project.build.directory}/${project.artifactId}-${project.version}.jar

</argument>

</arguments>

</configuration>

</execution>

<execution>

<id>run-yarn</id>

<goals>

<goal>exec</goal>

</goals>

<configuration>

<environmentVariables>

<HADOOP_CONF_DIR>

${basedir}/spark-remote/conf

</HADOOP_CONF_DIR>

</environmentVariables>

<executable>spark-submit</executable>

<arguments>

<argument>--master</argument>

<argument>yarn</argument>

<argument>${project.build.directory}/${project.artifactId}-${project.version}.jar

</argument>

</arguments>

</configuration>

</execution>

</executions>

</plugin>

<!-- Use the shade plugin to remove all the provided artifacts (such as spark itself) from the jar -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.1.1</version>

<configuration>

<!-- Remove signed keys to prevent security exceptions on uber jar -->

<!-- See https://stackoverflow.com/a/6743609/7245239 -->

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers>

<transformer

implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<mainClass>net.martinprobson.spark.SparkTest</mainClass>

</transformer>

</transformers>

<artifactSet>

<excludes>

<exclude>javax.servlet:*</exclude>

<exclude>org.apache.hadoop:*</exclude>

<exclude>org.apache.maven.plugins:*</exclude>

<exclude>org.apache.spark:*</exclude>

<exclude>org.apache.avro:*</exclude>

<exclude>org.apache.parquet:*</exclude>

<exclude>org.scala-lang:*</exclude>

</excludes>

</artifactSet>

</configuration>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

2: Installing Scala on Windows

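I had always run Scala inside a virtual machine and had never installed it on my own machine, so I wondered whether that was the cause. I downloaded the Windows build of scala-2.11.8 from the official site (the installation steps are described at https://www.cnblogs.com/freeweb/p/5623372.html), but the problem was still there.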

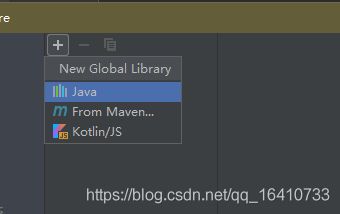

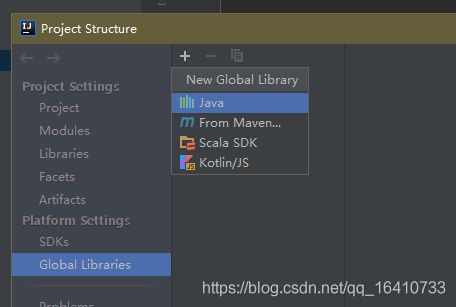
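One way to confirm that the local installation works, and which Scala version is really on the classpath, is to print it from Scala itself. The sketch below is only an illustration and was not part of my original steps; the object name `VersionCheck` is hypothetical, and it relies on `scala.util.Properties` from the standard library.

```scala
import scala.util.Properties

// Hypothetical helper, not from the original post: reports the Scala
// and Java versions visible to the running program.
object VersionCheck {
  // Version of the scala-library on the classpath; with the pom.xml above
  // this should correspond to the <scala.version> property.
  def scalaVersion: String = Properties.versionNumberString

  // Version of the JVM that is running the program.
  def javaVersion: String = Properties.javaVersion

  def main(args: Array[String]): Unit = {
    println(s"Scala version: $scalaVersion")
    println(s"Java version:  $javaVersion")
  }
}
```

If the printed Scala version differs from the one configured in pom.xml, the project and the local installation are probably not using the same Scala release.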

3: Global Libraries

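Out of ideas, I searched online and found that the cause was a missing Scala SDK, as shown in the figure:

However, when I clicked the plus sign, there was no Scala SDK option to choose.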

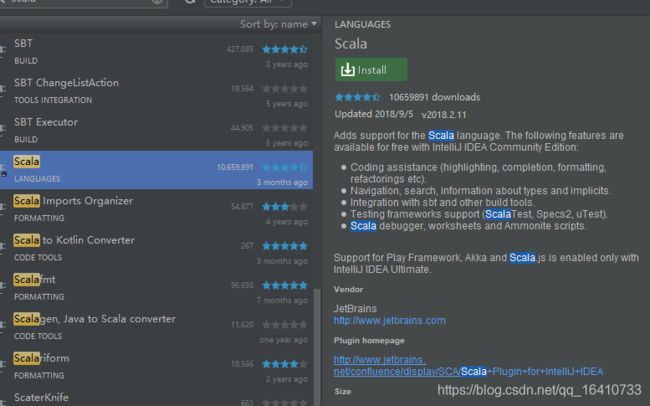

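The fix is to install the Scala plugin: go to Settings -> Plugins -> Install JetBrains plugin, search for Scala, select Scala, and click Install on the right to start the online installation.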

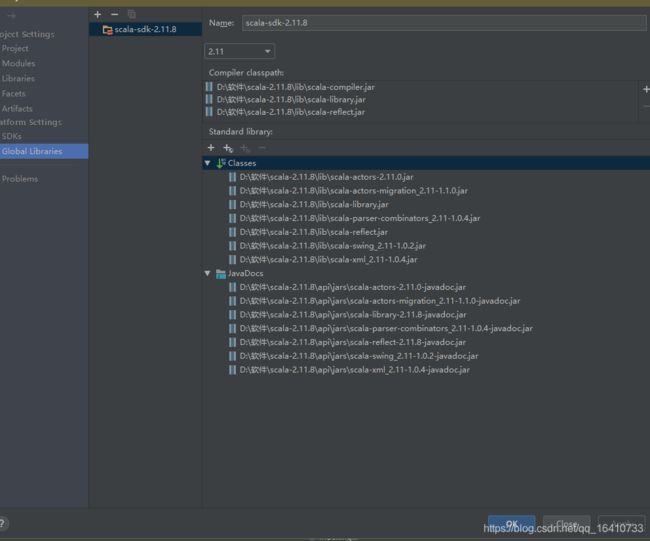

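After restarting IDEA, the Scala Class entry appeared in the New menu and could be used.

To be safe, though, I still added the Scala SDK under Global Libraries.

Click Apply to make the change take effect, and then you can happily start programming in Scala!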
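To check that everything works end to end, a small object created through New -> Scala Class can be compiled and run. This is a minimal sketch rather than code from the course; the object name `HelloScala` and the `greeting` helper are hypothetical, and the file is assumed to live under `src/main/scala`, the source directory configured in the pom.xml above.

```scala
// Hypothetical file: src/main/scala/HelloScala.scala
object HelloScala {
  // Builds the greeting separately so it can be checked without running main.
  def greeting(name: String): String = s"Hello, $name!"

  def main(args: Array[String]): Unit = {
    // Use the first program argument as the name, falling back to "Scala".
    val name = if (args.nonEmpty) args(0) else "Scala"
    println(greeting(name))
  }
}
```

If IDEA offers to run `HelloScala` from the gutter and the greeting is printed, the plugin and the SDK are set up correctly.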

This is my first CSDN blog post, so it may be a little rough; thank you for bearing with me.