Error when loading JSON in Python: json.decoder.JSONDecodeError: Expecting ',' delimiter: line 1 column 1780 (char 17

A Python script that ran fine yesterday suddenly started failing today:

PS C:\Users\18200\Desktop\python-wh> & D:/Python/Python37-32/python.exe c:/Users/18200/Desktop/python-wh/compare/main.py

    Traceback (most recent call last):
      File "c:/Users/18200/Desktop/python-wh/compare/main.py", line 12, in <module>
        crawler.run()
      File "c:\Users\18200\Desktop\python-wh\compare\service\crawler.py", line 36, in run
        self.crawler()
      File "c:\Users\18200\Desktop\python-wh\compare\service\crawler.py", line 60, in crawler
        self.overall_parser(overall_information=overall_information)

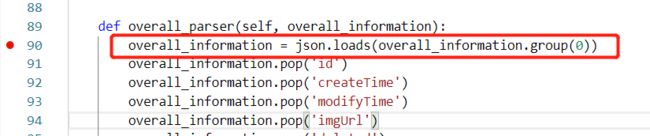

      File "c:\Users\18200\Desktop\python-wh\compare\service\crawler.py", line 89, in overall_parser
        overall_information = json.loads(overall_information.group(0))
      File "D:\Python\Python37-32\lib\json\__init__.py", line 348, in loads
        return _default_decoder.decode(s)
      File "D:\Python\Python37-32\lib\json\decoder.py", line 337, in decode
        obj, end = self.raw_decode(s, idx=_w(s, 0).end())
      File "D:\Python\Python37-32\lib\json\decoder.py", line 353, in raw_decode
        obj, end = self.scan_once(s, idx)
    json.decoder.JSONDecodeError: Expecting ',' delimiter: line 1 column 1780 (char 1779)

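According to an explanation I found:

The JSONDecodeError tells you that a good deal of data was parsed successfully before the error occurred;

the likely cause is that the data at that position is invalid (a malformed or corrupted JSON document) or that the data was truncated.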
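The position reported in the message can be used directly to look at the text around the failure. The following is a minimal sketch, not the code from this project: the helper name `describe_json_error` and the sample string `broken` are made up for illustration, while `msg`, `pos`, `lineno` and `colno` are attributes that `json.JSONDecodeError` provides.

```python
import json


def describe_json_error(text, context=20):
    """Return None if text parses; otherwise describe where parsing stopped."""
    try:
        json.loads(text)
        return None
    except json.JSONDecodeError as err:
        # err.pos is a 0-based character offset; lineno and colno are 1-based.
        start = max(err.pos - context, 0)
        return {
            "msg": err.msg,
            "pos": err.pos,
            "lineno": err.lineno,
            "colno": err.colno,
            # A slice of the input around the failure point.
            "snippet": text[start:err.pos + context],
        }


# Hypothetical truncated document: the list and the outer object are never closed.
broken = '{"id": 1, "items": [{"a": 1}'
info = describe_json_error(broken)
print(info)
```

Printing the snippet for the string that actually reaches `json.loads` shows quickly whether the data was cut short or contains something unexpected at that offset.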

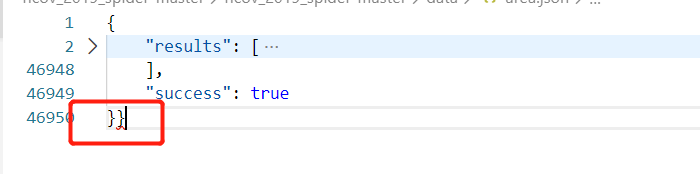

I checked the JSON files that the code reads

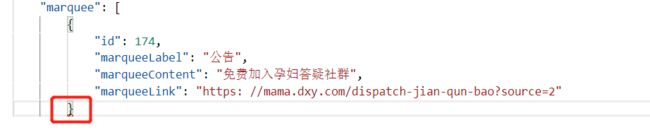

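Nothing stood out at first, so I formatted the file as JSON to make it easier to read

Sure enough, an error was flagged at the end of the document

There was an extra **}**. I deleted it and tested again, and got the same error. That hurt. Back to searching.

Borrowing from https://stackoverflow.com/questions/33984903/json-decoder-jsondecodeerror-expecting-value-line-1-column-1-char-0

I changed my JSON loading code

Originally it was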

    import json

    # Read each file as text, then parse the text with json.loads
    with open('data/overall.json', 'r', encoding='utf-8') as f:
        overall_json = f.read()
        overall_json = json.loads(overall_json)

    with open('data/area.json', 'r', encoding='utf-8') as f:
        area_json = f.read()
        area_json = json.loads(area_json)

Changed to

    import json

    # Parse straight from the file object with json.load instead of reading the text first
    with open('data/overall.json', 'r', encoding='utf-8') as jsonfile:
        # overall_json = f.read()
        overall_json = json.load(jsonfile)

    with open('data/area.json', 'r', encoding='utf-8') as jsonfile:
        # area_json = f.read()
        area_json = json.load(jsonfile)
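For what it is worth, `json.load(fp)` reads the whole file and parses it the same way `json.loads(fp.read())` does, so switching between the two is not expected to change the outcome for the same content. A small sketch to illustrate this, where `bad_text` is a made-up malformed payload and `io.StringIO` stands in for an opened file:

```python
import io
import json

# Hypothetical malformed payload standing in for real file content.
bad_text = '{"id": 1, "name": "x"'


def parse_with_loads(text):
    # Parse from a string.
    return json.loads(text)


def parse_with_load(text):
    # Parse from a file-like object; StringIO plays the role of the open file.
    return json.load(io.StringIO(text))


def error_of(func, text):
    """Return (msg, pos) of the JSONDecodeError raised by func, or None if it parses."""
    try:
        func(text)
    except json.JSONDecodeError as err:
        return (err.msg, err.pos)
    return None


print(error_of(parse_with_loads, bad_text))
print(error_of(parse_with_load, bad_text))
```

Both calls report the same error, which suggests that when the data itself is malformed, the loading style is not the thing to change.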

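Still the same error. Exhausting. Copying someone else's fix was not helping for now, and debugging did not show where the actual problem was, until I saw this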

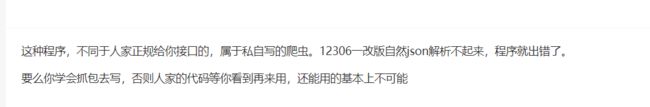

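It looked like I had tripped myself up, so the only option was to go back to debugging. At first, setting a breakpoint directly on the failing statement did not reveal the error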

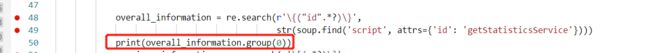

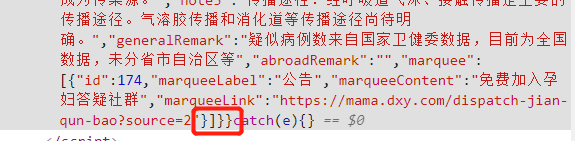

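Going one step up, I looked at the overall_information variable and printed its value

After tidying up the printed value, it was clear that something was wrong

In particular, comparing it with the page source showed that the closing brackets at the end were missing

This matches everything described above: the faulty code produced malformed JSON data at load time. The re.search(r'\{("id".*?)\}' here needs to be changed so that it also matches all of the closing brackets at the end

After changing it to re.search(r'\{("id".*?)\}]\}', it finally worked!!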
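The effect of the two patterns can be reproduced on a small example. This is only a sketch: the `page` string below is invented, since the structure of the real crawled page is not shown here, but it has the same shape (an object whose last field is a list of objects).

```python
import json
import re

# Hypothetical page fragment; the real page content is an assumption.
page = 'var data = {"id": 1, "items": [{"name": "a"}, {"name": "b"}]};'

# Old pattern: the lazy .*? stops at the first "}", which belongs to a nested object.
old_match = re.search(r'\{("id".*?)\}', page)
# New pattern: the match must end with "}]}", so the list and outer object are closed.
new_match = re.search(r'\{("id".*?)\}]\}', page)


def try_parse(match):
    """Parse the matched text, returning the error message instead of raising."""
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError as err:
        return "JSONDecodeError: " + err.msg


print(old_match.group(0))
print(try_parse(old_match))
print(new_match.group(0))
print(try_parse(new_match))
```

The old pattern captures text that is cut off inside the list, which is why the parser gets far into the data before failing. Note that the new pattern still depends on the page ending the object with `}]}`, so it may break again if the markup changes.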

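Presumably it is as described above: the crawled page changed its code, so the earlier regex no longer matched correctly. Exhausting. As expected, writing it yourself is the right answer.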
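One possible way to depend less on the exact closing brackets is to let the JSON decoder find the end of the object itself with `json.JSONDecoder().raw_decode`, which parses one value starting at a given index and ignores whatever follows. This is an alternative sketch, not what the crawler in this post does; the helper name `extract_json_object`, the `marker` default and the sample `page` are assumptions.

```python
import json


def extract_json_object(text, marker='{"id"'):
    """Parse the first JSON object in text that starts with marker.

    Assumes the object begins exactly with marker (no whitespace after "{").
    Returns None if the marker is not found.
    """
    start = text.find(marker)
    if start == -1:
        return None
    # raw_decode returns the parsed value and the index where it ended.
    obj, _end = json.JSONDecoder().raw_decode(text, start)
    return obj


# Hypothetical page fragment with trailing script code after the object.
page = 'var data = {"id": 1, "items": [{"name": "a"}]}; render(data);'
print(extract_json_object(page))
```

With this approach the nesting depth of the data does not matter, although a `JSONDecodeError` is still raised if the embedded object itself is malformed.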