Monitoring Scrapy spiders with SpiderKeeper + Scrapyd

Scrapyd official documentation, scrapyd-client official documentation, SpiderKeeper official documentation

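Scrapyd is a service for running Scrapy spiders. scrapyd-client provides the scrapyd-deploy tool, which makes it easy to deploy Scrapy projects to Scrapyd. SpiderKeeper is a web-based visual management tool for the spiders running on Scrapyd.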

C:\Windows\system32>pip install scrapyd scrapyd-client spiderkeeper
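To confirm that all three packages were installed into the interpreter that will run them, a quick check like the one below can help. This is a minimal sketch using the standard library's `importlib.metadata` (Python 3.8+); the distribution names are taken from the pip command above, and on older Python versions the lookup may be sensitive to capitalization (the project is published as `SpiderKeeper`). `installed_versions` is an illustrative helper name.

```python
from importlib import metadata

# Distribution names as passed to pip in the command above.
# Assumption: the installed metadata matches these names (case may matter
# on older Python versions, e.g. "SpiderKeeper").
PACKAGES = ("scrapyd", "scrapyd-client", "spiderkeeper")


def installed_versions(names=PACKAGES):
    """Return {name: version string, or None if the distribution is missing}."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed for this interpreter
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Run it with the same `python.exe` that pip installed into; a `NOT INSTALLED` line points at a missing package or a different interpreter.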

On Windows, you also need to add a scrapyd-deploy.bat file to the C:\Program Files\Python36\Scripts directory (adjust the path to match your own Python installation):

@echo off

"C:\Program Files\Python36\python.exe" "C:\Program Files\Python36\Scripts\scrapyd-deploy" %*

After this, the scrapyd-deploy command becomes available.
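If Python is not installed under `C:\Program Files\Python36`, both paths in the wrapper must be changed. The sketch below is a hypothetical helper, not part of scrapyd-client: it derives the paths from the running interpreter (`sys.executable`) and its scripts directory (`sysconfig.get_path("scripts")`) and writes the same two-line batch file. The function names are illustrative, and writing into `Program Files` usually requires an administrator prompt.

```python
import ntpath  # Windows path rules, whatever OS runs this sketch
import sys
import sysconfig


def deploy_bat_content(python_exe=None, scripts_dir=None):
    """Build the text of a scrapyd-deploy.bat wrapper like the one above."""
    python_exe = python_exe or sys.executable
    # Directory where pip installs console scripts for this interpreter
    scripts_dir = scripts_dir or sysconfig.get_path("scripts")
    script = ntpath.join(scripts_dir, "scrapyd-deploy")
    # %* forwards every argument given to the .bat file on to the script
    return f'@echo off\r\n"{python_exe}" "{script}" %*\r\n'


def write_deploy_bat(scripts_dir=None):
    """Write scrapyd-deploy.bat next to the scrapyd-deploy script."""
    scripts_dir = scripts_dir or sysconfig.get_path("scripts")
    target = ntpath.join(scripts_dir, "scrapyd-deploy.bat")
    # newline="" keeps the explicit \r\n line endings unchanged
    with open(target, "w", newline="") as f:
        f.write(deploy_bat_content(scripts_dir=scripts_dir))
    return target
```

Calling `write_deploy_bat()` from the target Python on Windows produces a file equivalent to the one shown above, with paths matching that installation.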

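Create a new directory and start the Scrapyd service from inside it (by default Scrapyd keeps its eggs, logs and job databases under the working directory):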

D:\ksy\scrapyd>scrapyd

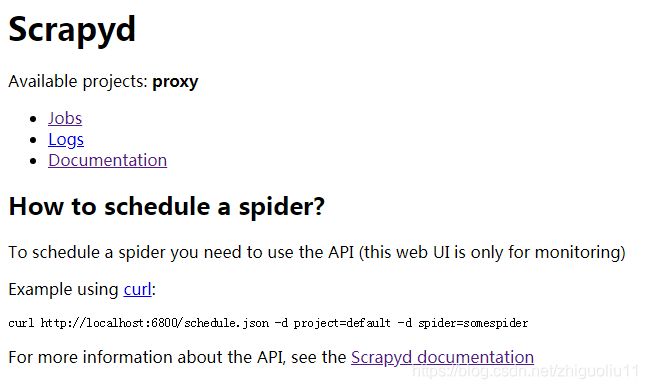

Open http://localhost:6800 in a browser to see the Scrapyd web page.
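Besides the web page, Scrapyd exposes a JSON API on the same port. Assuming a Scrapyd version that provides the `daemonstatus.json` endpoint (very old releases lack it), a minimal health check could look like this. `daemon_status` and `parse_daemon_status` are illustrative names, and the parsing is kept separate so it can be tested without a running server.

```python
import json
from urllib.request import urlopen

SCRAPYD_URL = "http://localhost:6800"  # address used in this article


def parse_daemon_status(payload):
    """Turn a daemonstatus.json response body into a small summary dict."""
    data = json.loads(payload)
    return {
        "ok": data.get("status") == "ok",
        "node": data.get("node_name"),
        # Counts may arrive as numbers or numeric strings; normalize to int
        "running": int(data.get("running", 0)),
        "pending": int(data.get("pending", 0)),
        "finished": int(data.get("finished", 0)),
    }


def daemon_status(base_url=SCRAPYD_URL, timeout=5):
    """Query a running Scrapyd instance and summarize its job counts."""
    with urlopen(f"{base_url}/daemonstatus.json", timeout=timeout) as resp:
        return parse_daemon_status(resp.read().decode("utf-8"))
```

With the service from the previous step running, `daemon_status()` should return a dict with `"ok": True`; if Scrapyd is not reachable, `urlopen` raises `URLError`.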

Start the SpiderKeeper service:

D:\ksy\scrapyd>spiderkeeper

SpiderKeeper startd on 0.0.0.0:5000 username:admin/password:admin with scrapyd servers:http://localhost:6800

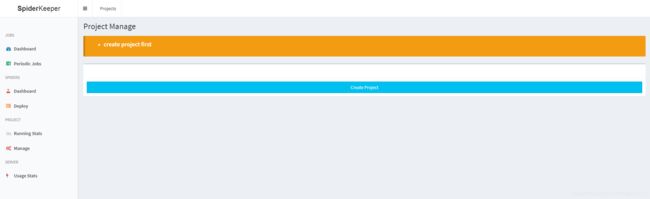

Open http://localhost:5000 and log in; the default username and password are both admin.

- Click the Create Project button to create a project.

- Go to the root directory of the Scrapy project, D:\ksy\proxy, and edit scrapy.cfg:

[deploy]
url = http://localhost:6800/
project = proxy

Then run the command:

D:\ksy\proxy>scrapyd-deploy

Server response (200):

{"node_name": "zhiguo-PC", "status": "ok", "project": "proxy", "version": "1568792829", "spiders": 14}
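The JSON printed after `Server response (200):` can also be checked programmatically, for example in a deployment script. A minimal sketch, assuming the response shape shown above: the `version` value looks like a Unix timestamp, which is what scrapyd-deploy uses by default when no explicit version is given, so the sketch decodes it when it is purely numeric. `parse_deploy_response` is a hypothetical helper name.

```python
import json
from datetime import datetime, timezone


def parse_deploy_response(body):
    """Summarize the JSON that scrapyd-deploy prints after 'Server response'."""
    data = json.loads(body)
    if data.get("status") != "ok":
        # Scrapyd reports failures with status "error" and a "message" field
        raise RuntimeError(data.get("message", "deploy failed"))
    info = {
        "project": data["project"],
        "version": str(data["version"]),
        "spiders": int(data["spiders"]),  # number of spiders found in the egg
        "node": data.get("node_name"),
    }
    # Default scrapyd-deploy versions are Unix timestamps; decode when numeric
    if info["version"].isdigit():
        info["deployed_at"] = datetime.fromtimestamp(int(info["version"]), tz=timezone.utc)
    return info
```

For the response above, this reports project `proxy` with 14 spiders, deployed at 2019-09-18 07:47:09 UTC.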

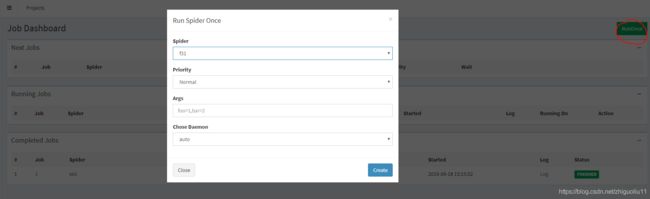
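Once the project is deployed, a spider can also be started without the SpiderKeeper UI by posting to Scrapyd's `schedule.json` endpoint, which takes `project` and `spider` form fields; any extra fields are passed to the spider as arguments. The sketch below assumes the server address used in this article; the function names, the spider name `my_spider` and the `category` argument are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

SCRAPYD_URL = "http://localhost:6800"  # address used in this article


def build_schedule_request(project, spider, base_url=SCRAPYD_URL, **spider_args):
    """Prepare a POST to schedule.json without sending it."""
    form = {"project": project, "spider": spider, **spider_args}
    data = urlencode(form).encode("ascii")  # form-encoded body
    return Request(f"{base_url}/schedule.json", data=data, method="POST")


def schedule(project, spider, base_url=SCRAPYD_URL, timeout=10, **spider_args):
    """Send the request and return Scrapyd's decoded JSON reply."""
    req = build_schedule_request(project, spider, base_url, **spider_args)
    with urlopen(req, timeout=timeout) as resp:
        # On success Scrapyd answers with "status": "ok" and a "jobid"
        return json.loads(resp.read().decode("utf-8"))
```

For example, `schedule("proxy", "my_spider", category="free")` should return a dict containing `status` and `jobid`, and the new job then shows up in Scrapyd's job list.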

Run a Scrapy project: