Elman Neural Network in MATLAB

by: Z.H. Gao

1. Input Samples

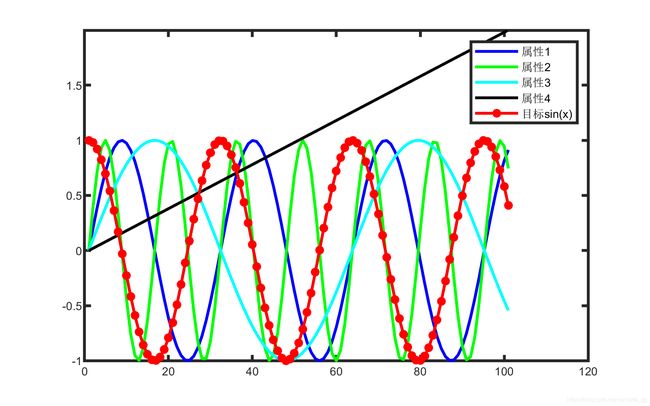

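The task is to predict cos(xt) from sin(xt), sin(2xt), sin(0.5xt), and the time t. Note that the code below reads t from the first column of the data and uses it only as the plotting axis; the network inputs are columns 2 through end-1, and the last column is the target.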

FIG. 1. Raw data
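The data file itself is not included in this post. As a minimal sketch, a compatible `sine.mat` could be generated as follows, assuming the column order [t, sin(xt), sin(2xt), sin(0.5xt), cos(xt)] that the indexing in the code implies. The sample count `N`, the factor `x`, and the time range are assumptions chosen for illustration, not the author's values.

```matlab
% Hypothetical data generator: N, x, and the time range are assumptions.
N = 100;                      % assumed sample count (90 train + 10 test)
x = 1;                        % assumed frequency factor
t = linspace(0, 4*pi, N)';    % assumed time axis, as a column vector

% Column 1 is time, columns 2-4 are the inputs, the last column is the target.
sine = [t, sin(x*t), sin(2*x*t), sin(0.5*x*t), cos(x*t)];

save('sine.mat', 'sine');     % "load sine" then restores the variable sine
```

With this layout, `sine(:,2:end-1)` selects the three sine inputs and `sine(:,end)` selects cos(xt).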

2. MATLAB Implementation
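For orientation, the forward pass and the training loss that the script in this section computes can be written as follows. The symbols are notation introduced here, not the author's: x_i is the i-th input row, W_in corresponds to `inputBW`, W_h to `hiddenW`, W_out to `outputBW`, f is the chosen activation, and N is `datalength`.

```latex
\begin{aligned}
a_i &= [1,\ x_i]\,W_{\mathrm{in}} + h_{i-1}\,W_{h}, \qquad h_0 = 0,\\
h_i &= f(a_i),\\
z_i &= [1,\ h_i]\,W_{\mathrm{out}},\\
\hat{y}_i &= f(z_i),\\
\mathrm{MSE} &= \frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2 .
\end{aligned}
```

In the script, a_i is `tempH(i,:)`, h_i is `stateH(i,:)`, z_i is `tempY(i,1)`, and the output-layer error term is `Dout`, that is (ŷ_i − y_i) f′(z_i).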

clear all;close all;clc

%sine,'tanh',Lrate=0.02,Nohidden=12;

%sine,'sigmoid',Lrate=0.2,Nohidden=12;

load sine

sine=sine';

sine=mapminmax(sine,0,1);%mapminmax scales each row to [0,1], hence the transposes

sine=sine';

t=sine(:,1);

inst=sine(:,2:end-1);

label=sine(:,end);

%%

datalength=90;

trainx=inst(1:datalength,:);

trainy=label(1:datalength,:);

testx=inst(datalength+1:end,:);

testy=label(datalength+1:end,:);

%%

epoch=1000;

Lrate=0.8;

momentum=1;%defined but not used in the code below

backstep=20;

ActivationF='sigmoid';

Nohidden=12;%number of hidden nodes; should not be too small

inputW=2 * rand(size(trainx,2),Nohidden)-1;

inputB=rand(1,Nohidden);

inputBW=[inputB;inputW];

outputW=2 * rand(Nohidden,size(trainy,2))-1;

outputB=rand(1,size(trainy,2));

outputBW=[outputB;outputW];

hiddenW=2 * rand(Nohidden,Nohidden)-1;

stateH=zeros(datalength,Nohidden);

%%

for v=1:1:epoch

%%

%forward pass: samples are fed in order, from 1 to n

for i=1:1:datalength

x=[1,trainx(i,:)];

if i==1

tempH(i,:)=x * inputBW;

else

tempH(i,:)=x * inputBW+stateH(i-1,:) * hiddenW;

end

H = ActivationFunction(tempH(i,:),ActivationF);

stateH(i,:) = H;

tempY(i,1) = [1,H] * outputBW;

end

trainResult = ActivationFunction(tempY,ActivationF);

Error=trainResult-trainy;

trainMSE(v,1)=sum(sum(Error.^2))/datalength;

%%

%backward pass: the number of backtracked samples (backstep) should not be too small

DinputBW=zeros(size(inputBW));

DhiddenW=zeros(size(hiddenW));

Dout=Error .* GradientValue(tempY,ActivationF);

DoutputBW=[ones(datalength,1),stateH]' * Dout;

DH=Dout * outputBW';

DH=DH(:,2:end);

for i = datalength: -1 :1

DtempH = DH(i,:) .* GradientValue(tempH(i,:),ActivationF);

for bptt_i = i: -1 :max(1,i-backstep)

DinputBW=DinputBW+[1,trainx(bptt_i,:)]' * DtempH;

if bptt_i-1>0

DhiddenW=DhiddenW+stateH(bptt_i-1,:)' * DtempH;

DtempH=DtempH*hiddenW' .* GradientValue(tempH(bptt_i-1,:),ActivationF);

end

end

end

%%

inputBW=inputBW-Lrate * DinputBW;

hiddenW=hiddenW-Lrate * DhiddenW;

outputBW=outputBW-Lrate * DoutputBW;%update the matrix actually used in the forward pass

% Lrate=0.9999 * Lrate;

%%

end

%%

%testing

for i=1:1:size(testx,1)

x=[1,testx(i,:)];

tempH(i+datalength,:)=x * inputBW+stateH(i+datalength-1,:)*hiddenW;

H = ActivationFunction(tempH(i+datalength,:),ActivationF);

stateH(i+datalength,:) = H;

tempResult(i,1) = [1,H]*outputBW;

end

testResult = ActivationFunction(tempResult,ActivationF);

Error=testResult-testy;

testMSE=sum(sum(Error.^2))/size(testx,1)

%%

t1=t(1:datalength,:);t2=t(datalength+1:end,:);

figure(1);plot(trainMSE);

figure(2);plot(t1,trainy,'-*b');hold on;plot(t1,trainResult,'-or');

hold on;plot(t2,testy,'-*k');hold on;plot(t2,testResult,'-og');

Training MSE

Elman network results
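The script above calls two helpers, `ActivationFunction` and `GradientValue`, whose source is not shown in this post. The following is a minimal sketch of what they might look like, assuming that both take the pre-activation values (the script passes `tempH` and `tempY`) and that only the two names mentioned in the comments, 'sigmoid' and 'tanh', need to be supported. It is a reconstruction for illustration, not the author's code.

```matlab
% Quick check of the two helpers. Local functions in a script need
% R2016b or later; otherwise save each function in its own .m file.
z = [-1 0 2];
y = ActivationFunction(z, 'sigmoid');   % activation values
g = GradientValue(z, 'sigmoid');        % derivative at the same points

function y = ActivationFunction(x, type)
% Element-wise activation of the pre-activation values x.
switch type
    case 'sigmoid'
        y = 1 ./ (1 + exp(-x));
    case 'tanh'
        y = tanh(x);
    otherwise
        error('Unknown activation: %s', type);
end
end

function g = GradientValue(x, type)
% Derivative of the activation with respect to the pre-activation x.
switch type
    case 'sigmoid'
        s = 1 ./ (1 + exp(-x));
        g = s .* (1 - s);
    case 'tanh'
        g = 1 - tanh(x).^2;
    otherwise
        error('Unknown activation: %s', type);
end
end
```

Because the sigmoid output lies in (0,1), it matches targets that were scaled to [0,1] with mapminmax, which is consistent with applying the activation to the output layer as the script does.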

3. Elman Network with the MATLAB Toolbox

clear all;close all;clc

%type, open, or edit can be used to view the source code of the toolbox functions

load sine

t=sine(:,1);

inst=sine(:,2:end-1);

label=sine(:,end);

%%

datalength=90;

trainx=inst(1:datalength,:)';

trainy=label(1:datalength,:)';

testx=inst(datalength+1:end,:)';

testy=label(datalength+1:end,:)';

%%

TF1='tansig';TF2='tansig';%'tansig','purelin','logsig'

net=newelm(trainx,trainy,[6,4],{TF1 TF2},'traingda');

net.trainParam.epochs=1000;

net.trainParam.goal=1e-7;

net.trainParam.lr=0.5;

net.trainParam.mc=0.9;%momentum factor; the default is 0.9

net.trainParam.show=25;%number of epochs between progress displays

net.trainFcn='traingda';

net.divideFcn='';

[net,tr]=train(net,trainx,trainy);

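[trainoutput,trainPerf]=sim(net,trainx,[],[],trainy);%sim(network, inputs, initial input delays, initial layer delays, outputs, initial output delays, final layer delays); note that the second output of sim is the final input delay state, not a performance value

[testoutput,testPerf]=sim(net,testx,[],[],testy);%outputs of the trained network on the test data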

%%

MSE=mse(testoutput-testy)

figure(1)

t1=t(1:datalength,:);t2=t(datalength+1:end,:);

plot(t1,trainy,'-k*');hold on;plot(t1,trainoutput,'-g*');

plot(t2,testy,'-b*');hold on;plot(t2,testoutput,'-r*');
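In more recent toolbox releases, `newelm` is, as far as I know, marked obsolete in favor of `elmannet`. The following is a minimal sketch of the same experiment with `elmannet`, assuming the Deep Learning Toolbox is installed. The synthetic data, the hidden size of 12, and the choice of 'traingdx' are assumptions for illustration and do not come from the original code.

```matlab
% Synthetic stand-in for the article's data (assumption): 3 inputs, 1 target.
t = linspace(0, 4*pi, 100);
inputs  = [sin(t); sin(2*t); sin(0.5*t)];   % 3 x 100, one column per time step
targets = cos(t);                            % 1 x 100

% elmannet works on sequences stored as cell arrays, one cell per time step.
X = con2seq(inputs(:, 1:90));
T = con2seq(targets(:, 1:90));

net = elmannet(1, 12, 'traingdx');   % layer delay 1, 12 hidden neurons (assumed)
net.divideFcn = '';                  % train on all samples, as in the article
net.trainParam.epochs = 1000;
net.trainParam.showWindow = false;   % suppress the training GUI

% Prepare inputs, targets, and initial delay states for the network.
[Xs, Xi, Ai, Ts] = preparets(net, X, T);
net = train(net, Xs, Ts, Xi, Ai);

Y = net(Xs, Xi, Ai);                 % network output as a cell array
trainMSE = perform(net, Ts, Y)       % performance (MSE by default) on the training part
```

`cell2mat(Y)` converts the output back to a numeric row vector for plotting against `t`.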

References

[1] https://zhuanlan.zhihu.com/p/26891871

[2] https://zhuanlan.zhihu.com/p/26892413

[3] https://zybuluo.com/hanbingtao/note/541458