26. Case Study: Finding the Bloggers That Different CSDN Fans Follow in Common

Follow the column "Breaking Out of the Cocoon: Big Data" (破茧成蝶: 大数据篇) to read the other articles in this series.

1. Requirements Analysis

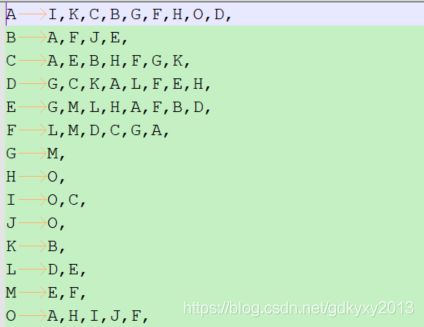

现有CSDN好友列表数据如下:

其中,|前面的代表某个CSDN的用户,|后面的为某用户关注的博主名称。要求:求出哪些人两两之间关注了共同的博主,以及他们共同关注了哪些博主。

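Implementation approach: this requirement can be met with two MapReduce jobs. 1. First, invert the data to find the fans of each blogger. 2. Then, for each blogger, enumerate every pair of its fans; each pair follows that blogger in common, so grouping by pair yields all the bloggers the two users share.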
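The two steps above can be sketched in plain Java, without Hadoop, to make the data flow easier to follow. This is only an in-memory illustration: the class name `CommonFollowSketch`, its method names, and the sample lines are assumptions made for this example, and it uses `:` as the user separator to match the Mapper code in this article.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class CommonFollowSketch {

    // Step 1: invert "user:blogger1,blogger2" lines into blogger -> list of fans.
    static Map<String, List<String>> fansByBlogger(List<String> lines) {
        Map<String, List<String>> result = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split(":");
            String user = fields[0];
            for (String blogger : fields[1].split(",")) {
                result.computeIfAbsent(blogger, b -> new ArrayList<>()).add(user);
            }
        }
        return result;
    }

    // Step 2: every sorted pair of fans of one blogger follows that blogger in common.
    static Map<String, List<String>> commonByPair(Map<String, List<String>> index) {
        Map<String, List<String>> result = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : index.entrySet()) {
            String[] fans = e.getValue().toArray(new String[0]);
            Arrays.sort(fans);
            for (int i = 0; i < fans.length - 1; i++) {
                for (int j = i + 1; j < fans.length; j++) {
                    String pair = fans[i] + "-" + fans[j];
                    result.computeIfAbsent(pair, k -> new ArrayList<>()).add(e.getKey());
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical sample data, not the data set used in this article.
        List<String> lines = Arrays.asList("A:X,Y", "B:X,Y,Z", "C:Y");
        System.out.println(commonByPair(fansByBlogger(lines)));
        // prints {A-B=[X, Y], A-C=[Y], B-C=[Y]}
    }
}
```

In the MapReduce version, the shuffle phase plays the role of the two `TreeMap` groupings: job one groups by blogger, job two groups by fan pair.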

2. Code Implementation

2.1 Step 1: the Mapper

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:22
     * @desc: Step 1 mapper: emits (followed blogger, fan) for every blogger a user follows.
     */
    public class OneFansMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each line holds one user and the comma-separated bloggers that user follows.
            // This code splits on ":" while the requirement text mentions "|";
            // use whichever separator your data file actually contains.
            String[] fields = value.toString().split(":");
            String user = fields[0];
            String[] bloggers = fields[1].split(",");

            for (String blogger : bloggers) { // emit (followed blogger, fan)
                context.write(new Text(blogger), new Text(user));
            }
        }
    }
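One detail worth checking before running the Mapper: the requirement text describes `|` as the separator between a user and the followed bloggers, whereas the Mapper splits on `:`. If your file really does use `|`, note that `String.split` takes a regular expression, and a bare `"|"` is an empty alternation rather than a literal pipe. The sketch below (the class and method names are hypothetical) shows one way to split on a literal `|`.

```java
import java.util.regex.Pattern;

public class DelimiterSketch {

    // Splits "user|blogger1,blogger2" into {user, followed list}, treating '|' literally.
    static String[] splitRecord(String line) {
        return line.split(Pattern.quote("|"), 2);
    }

    public static void main(String[] args) {
        String line = "A|X,Y"; // hypothetical sample record
        // Unquoted "|" matches the empty string, so the line is cut between characters.
        System.out.println(line.split("|").length != 2); // prints true
        String[] fields = splitRecord(line);
        System.out.println(fields[0]); // prints A
        System.out.println(fields[1]); // prints X,Y
    }
}
```

Writing the pattern as `"\\|"` has the same effect as `Pattern.quote("|")`.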

2.2 Step 1: the Reducer

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:27
     * @desc: Step 1 reducer: concatenates all fans of one blogger into a comma-separated list.
     */
    public class OneFansReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            // key = blogger, values = every user who follows that blogger
            StringBuilder sb = new StringBuilder();
            for (Text value : values) {
                sb.append(value).append(","); // leaves a trailing comma after the last fan
            }
            context.write(key, new Text(sb.toString()));
        }
    }
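The Reducer above appends a comma after every fan, so each output line ends with a trailing comma. That is harmless for the second job, because Java's `String.split(",")` discards trailing empty strings. The small sketch below (hypothetical class and method names) shows this behavior next to `String.join`, which is one way to avoid the trailing separator if a cleaner output is preferred.

```java
import java.util.Arrays;
import java.util.List;

public class JoinSketch {

    // Mirrors the reducer loop: every value is followed by a comma, including the last one.
    static String joinWithTrailingComma(List<String> values) {
        StringBuilder sb = new StringBuilder();
        for (String v : values) {
            sb.append(v).append(",");
        }
        return sb.toString();
    }

    // Alternative: String.join places the separator only between elements.
    static String joinClean(List<String> values) {
        return String.join(",", values);
    }

    public static void main(String[] args) {
        List<String> fans = Arrays.asList("A", "B", "C"); // hypothetical fan names
        System.out.println(joinWithTrailingComma(fans));                   // prints A,B,C,
        System.out.println(joinClean(fans));                               // prints A,B,C
        System.out.println(joinWithTrailingComma(fans).split(",").length); // prints 3
    }
}
```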

2.3 Step 1: the Driver

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:36
     * @desc: Driver for step 1: configures and submits the first job.
     */
    public class OneFansDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            // Hard-coded local paths for testing; replace them with your own input and output paths.
            args = new String[]{"C:\\Users\\Machenike\\Desktop\\file\\fans.txt",
                    "C:\\Users\\Machenike\\Desktop\\file\\output3"};

            Job job = Job.getInstance(new Configuration());
            job.setJarByClass(OneFansDriver.class);

            job.setMapperClass(OneFansMapper.class);
            job.setReducerClass(OneFansReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }

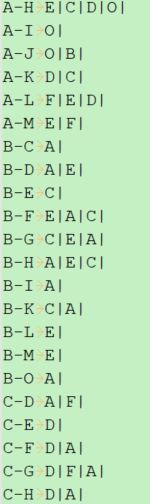

2.4 第一步测试结果

2.5 Step 2: the Mapper

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;
    import java.util.Arrays;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:42
     * @desc: Step 2 mapper: emits (fan pair, blogger) for every pair of fans of one blogger.
     */
    public class TwoFansMapper extends Mapper<LongWritable, Text, Text, Text> {

        private Text k = new Text(); // fan pair, e.g. "A-B"
        private Text v = new Text(); // blogger

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Input is the step 1 output: blogger, a tab, then the comma-separated fans.
            String[] fields = value.toString().split("\t");
            v.set(fields[0]);

            String[] fans = fields[1].split(",");
            // Sort so that the same two fans always produce the same key (A-B, never B-A).
            Arrays.sort(fans);

            for (int i = 0; i < fans.length - 1; i++) {
                for (int j = i + 1; j < fans.length; j++) {
                    k.set(fans[i] + "-" + fans[j]);
                    context.write(k, v);
                }
            }
        }
    }
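The `Arrays.sort(fans)` call in the Mapper above matters more than it may look: the fan lists written by the first job are in no guaranteed order, and without sorting the same two users could be emitted as both `A-B` and `B-A`, which the second Reducer would treat as different keys. A minimal sketch of the key generation on its own (the class name, method name, and sample strings are hypothetical):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class PairKeySketch {

    // Builds every "fanA-fanB" key for one blogger's comma-separated fan list.
    static List<String> pairKeys(String fansField) {
        String[] fans = fansField.split(","); // a trailing comma adds no empty entry
        Arrays.sort(fans);                    // canonical order, so A-B never appears as B-A
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < fans.length - 1; i++) {
            for (int j = i + 1; j < fans.length; j++) {
                keys.add(fans[i] + "-" + fans[j]);
            }
        }
        return keys;
    }

    public static void main(String[] args) {
        System.out.println(pairKeys("C,A,B,")); // prints [A-B, A-C, B-C]
        System.out.println(pairKeys("B,C,A,")); // prints [A-B, A-C, B-C]
    }
}
```

A blogger with n fans produces n(n-1)/2 pairs, so bloggers with very large followings dominate the output size of this step.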

2.6 Step 2: the Reducer

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:50
     * @desc: Step 2 reducer: concatenates all bloggers that one fan pair follows in common.
     */
    public class TwoFansReducer extends Reducer<Text, Text, Text, Text> {

        private Text v = new Text();

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            // key = fan pair, values = every blogger both fans follow
            StringBuilder sb = new StringBuilder();
            for (Text value : values) {
                sb.append(value).append("|"); // leaves a trailing "|" after the last blogger
            }
            v.set(sb.toString());
            context.write(key, v);
        }
    }

2.7 Step 2: the Driver

    package com.xzw.hadoop.mapreduce.csdn_fans;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /**
     * @author: xzw
     * @create_date: 2020/12/7 13:53
     * @desc: Driver for step 2: reads the step 1 output directory and submits the second job.
     */
    public class TwoFansDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            // Hard-coded local paths for testing; the input is the output directory of step 1.
            args = new String[]{"C:\\Users\\Machenike\\Desktop\\file\\output3",
                    "C:\\Users\\Machenike\\Desktop\\file\\output4"};

            Job job = Job.getInstance(new Configuration());
            job.setJarByClass(TwoFansDriver.class);

            job.setMapperClass(TwoFansMapper.class);
            job.setReducerClass(TwoFansReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }

2.8 Step 2 test result