Tag-Based Recommendation in Python: Implementing SimpleTagBased, TagBased-TFIDF and Related Algorithms

- 1. Overview
- 2. Basic Concepts
- 2.1 User Profiles
- 2.1.1 Definition
- 2.1.2 Steps
- 2.1.3 Tag Sources
- 2.1.4 Tag-Related Data Structures
- 2.1.5 How to Make Tag-Based Recommendations
- 2.2 Simple Tag-based
- 2.2.1 Formula
- 2.3 Norm Tag-based
- 2.3.1 Formula
- 2.4 Tag-based TFIDF
- 2.4.1 Formula
- 3. Code Implementation
- 3.1 Data Description
- 3.2 Tag Recommendation with pandas DataFrame
- 3.2.1 Python Code

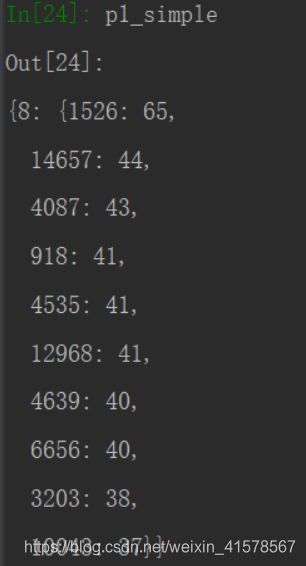

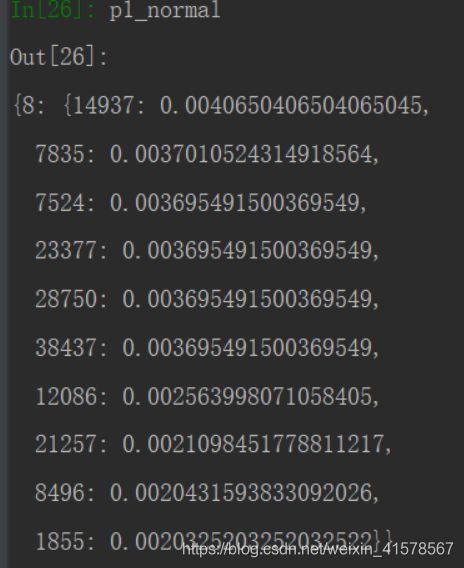

- 3.2.2 Results

1. Overview

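This post covers one family of recommendation algorithms: tag-based recommendation. It introduces three scoring methods with simple underlying principles (Simple Tag-based, Norm Tag-based and Tag-based TFIDF), followed by a Python implementation built on pandas DataFrames.

Note that the DataFrame implementation is much slower than one built on dictionaries. The main reason is that the algorithm performs a large number of individual lookups, and a dict lookup takes O(1) time on average, which is far cheaper than a `.loc` lookup on a DataFrame or Series.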
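To make the lookup-cost point concrete, here is a small sketch that builds a `user_tag` count Series the same way as the `groupby(...).count()` calls in the code of section 3, converts it to a plain dict with `Series.to_dict()`, and times both kinds of lookup with `timeit`. The records and the repetition count are made up for illustration; actual timings depend on the machine and pandas version, so no numbers are quoted here.

```python
import timeit

import pandas as pd

# Made-up tagging records in the post's userID / bookmarkID / tagID layout.
table = pd.DataFrame({
    "userID":     [8, 8, 8, 9, 9],
    "bookmarkID": [1, 2, 2, 1, 3],
    "tagID":      [10, 10, 11, 10, 12],
})

# MultiIndex Series of counts: user_tags[u, t].
user_tag = table.groupby(["userID", "tagID"])["bookmarkID"].count()

# The same counts as a plain dict keyed by (userID, tagID) tuples.
user_tag_dict = user_tag.to_dict()

# Both lookups return the same count; only their cost differs.
loc_seconds = timeit.timeit(lambda: user_tag.loc[(8, 10)], number=1_000)
dict_seconds = timeit.timeit(lambda: user_tag_dict[(8, 10)], number=1_000)
print(f".loc lookup: {loc_seconds:.4f}s, dict lookup: {dict_seconds:.4f}s")
```

Converting once with `to_dict()` and then looking up many times is the usual way to get dict speed while still using pandas for the grouping.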

2. Basic Concepts

2.1 User Profiles

2.1.1 Definition

A user profile summarizes and describes a user's behavioral characteristics so that the business can serve users better.

2.1.2 Steps

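- Define a globally unique user identifier (e.g. a national ID number, a phone number, or a user ID)

- Tag the users

- Use the tags to generate business value at each stage. Across the three stages of the user lifecycle (acquisition, engagement and retention), tag and profile users based on all available user data so as to retain as many users as possible.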

2.1.3 Tag Sources

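- PGC (professionally generated content): tags assigned by staff (high quality, consistent format).

Items can also be tagged algorithmically, by using clustering to reduce them into a set of distinct tags. Tagging an item is itself a form of dimensionality reduction, so clustering and dimensionality-reduction methods can both be used to generate item tags. Common choices include the clustering methods K-means, EM, DBSCAN and hierarchical clustering, as well as the dimensionality-reduction method PCA.

- UGC (user-generated content): tags assigned by users (lower quality, inconsistent format).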
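As a rough illustration of clustering-based item tagging, the sketch below runs a bare-bones k-means (Lloyd's algorithm) in NumPy over hypothetical item feature vectors and turns each cluster ID into a tag. The feature matrix, the first-k-rows initialization, the function name `kmeans_labels` and the `cluster_<id>` tag naming are all assumptions for illustration; in practice a library implementation such as scikit-learn's `KMeans`, with proper initialization, would be the usual choice.

```python
import numpy as np


def kmeans_labels(features, k, n_iter=20):
    """Assign each row of `features` to one of k clusters (plain Lloyd's k-means)."""
    # Deterministic init for reproducibility: the first k rows become the centroids.
    centroids = features[:k].astype(float)
    labels = np.zeros(len(features), dtype=int)
    for _ in range(n_iter):
        # Euclidean distance from every item to every centroid: shape (n_items, k).
        dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members):  # keep the previous centroid if a cluster becomes empty
                centroids[j] = members.mean(axis=0)
    return labels


# Hypothetical 2-D item features (e.g. reduced embeddings) for four items.
item_ids = [101, 102, 103, 104]
features = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])

labels = kmeans_labels(features, k=2)
# Map each item to a tag derived from its cluster ID.
item_tags = {item: f"cluster_{label}" for item, label in zip(item_ids, labels)}
print(item_tags)
```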

2.1.4 Tag-Related Data Structures

- Tagging records: [user_id, item_id, tag]

- Tags each user has used: [user_id, tag]

- Items each user has tagged: [user_id, item]

- Items that carry a given tag: [tag, item]

- Users who have used a given tag: [tag, user_id]
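All of these structures can be derived from the raw [user_id, item_id, tag] records. A minimal sketch with `collections.Counter`, assuming the records fit in memory as a list of tuples (the sample records are made up):

```python
from collections import Counter, defaultdict

# Tagging records [user_id, item_id, tag] (made-up sample data).
records = [
    ("u1", "i1", "python"),
    ("u1", "i2", "python"),
    ("u1", "i2", "pandas"),
    ("u2", "i1", "python"),
    ("u2", "i3", "ml"),
]

user_tags = defaultdict(Counter)   # [user_id, tag]  -> times the user used the tag
user_items = defaultdict(Counter)  # [user_id, item] -> tags the user applied to the item
tag_items = defaultdict(Counter)   # [tag, item]     -> times the item received the tag
tag_users = defaultdict(Counter)   # [tag, user_id]  -> times the user applied the tag

for user, item, tag in records:
    user_tags[user][tag] += 1
    user_items[user][item] += 1
    tag_items[tag][item] += 1
    tag_users[tag][user] += 1

print(dict(user_tags["u1"]))      # {'python': 2, 'pandas': 1}
print(dict(tag_items["python"]))  # {'i1': 2, 'i2': 1}
```

Keeping counts rather than plain sets is what the scoring formulas below need, since they weight each tag by how often it was used.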

2.1.5 How to Make Tag-Based Recommendations

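Based on users' tagging behavior, and using methods such as SimpleTagBased, NormTagBased and TagBased-TFIDF, the following four kinds of recommendation can be made when user A tags an item with tag T:

- Recommend to user A items that carry the system's popular tags.

- If the item carries a popular tag T-hot, recommend to user A other items tagged with T-hot.

- If user A often applies a tag such as T1 to different items, recommend other items that also carry T1.

- Combine the approaches above.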
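The first strategy, recommending items that carry the system's most popular tags, can be sketched with `collections.Counter`. The records, the function name `recommend_hot_tag_items` and its `top_tags` cutoff are illustrative assumptions; skipping items the user has already tagged mirrors what the code in section 3 does.

```python
from collections import Counter

# Made-up tagging records: (user_id, item_id, tag).
records = [
    ("u1", "i1", "python"),
    ("u2", "i2", "python"),
    ("u3", "i3", "python"),
    ("u2", "i4", "ml"),
    ("u3", "i4", "ml"),
    ("u1", "i5", "sql"),
]


def recommend_hot_tag_items(user, records, top_tags=1, n=10):
    """Recommend items carrying the most-used tags, skipping items the user already tagged."""
    tag_counts = Counter(tag for _, _, tag in records)
    hot_tags = {tag for tag, _ in tag_counts.most_common(top_tags)}
    seen = {item for u, item, _ in records if u == user}
    # Rank candidate items by how often they received one of the hot tags.
    item_counts = Counter(
        item for _, item, tag in records if tag in hot_tags and item not in seen
    )
    return [item for item, _ in item_counts.most_common(n)]


print(recommend_hot_tag_items("u1", records))
```

The other strategies follow the same pattern with a different choice of tags: the hot tags on the item just tagged, or the tags user A uses most often.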

2.2 Simple Tag-based

2.2.1 Formula

$$score(u,i)=\sum_t user\_tags[u,t] \cdot tag\_items[t,i]$$

Here user_tags[u, t] is the number of times user u has used tag t, and tag_items[t, i] is the number of times item i has been tagged with t.
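A minimal sketch of this formula in plain Python, assuming `user_tags` and `tag_items` are nested dicts of counts. The toy counts and the function name `simple_tag_based` are illustrative, not taken from the original code. Unlike the implementation in section 3, it does not skip items the user has already tagged.

```python
from collections import defaultdict

# Toy counts: user_tags[u][t] and tag_items[t][i] (made-up numbers).
user_tags = {"u1": {"python": 2, "pandas": 1}}
tag_items = {
    "python": {"i1": 3, "i2": 1},
    "pandas": {"i2": 4, "i3": 2},
}


def simple_tag_based(u, user_tags, tag_items):
    """score(u, i) = sum_t user_tags[u, t] * tag_items[t, i]."""
    scores = defaultdict(int)
    for t, ut in user_tags[u].items():
        for i, ti in tag_items.get(t, {}).items():
            scores[i] += ut * ti
    return dict(scores)


scores = simple_tag_based("u1", user_tags, tag_items)
print(scores)  # {'i1': 6, 'i2': 6, 'i3': 2}
```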

2.3 Norm Tag-based

2.3.1 Formula

$$score(u,i)=\sum_t \frac{user\_tags[u,t]}{user\_tags[u]} \cdot \frac{tag\_items[t,i]}{tag\_items[t]}$$

Here user_tags[u] is the total number of times user u has applied any tag, and tag_items[t] is the total number of times tag t has been used.
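A minimal sketch of the normalized score on the same kind of toy data, assuming nested dicts of counts. The numbers and the function name `norm_tag_based` are illustrative. Both ratios fall in [0, 1], so heavy taggers and very common tags no longer dominate the sum the way raw counts do.

```python
# Toy counts: user_tags[u][t] and tag_items[t][i] (made-up numbers).
user_tags = {"u1": {"python": 2, "pandas": 1}}
tag_items = {
    "python": {"i1": 3, "i2": 1},
    "pandas": {"i2": 4, "i3": 2},
}


def norm_tag_based(u, user_tags, tag_items):
    """score(u, i) = sum_t (user_tags[u, t] / user_tags[u]) * (tag_items[t, i] / tag_items[t])."""
    user_total = sum(user_tags[u].values())  # user_tags[u]
    scores = {}
    for t, ut in user_tags[u].items():
        items = tag_items.get(t, {})
        tag_total = sum(items.values())      # tag_items[t]
        for i, ti in items.items():
            scores[i] = scores.get(i, 0.0) + (ut / user_total) * (ti / tag_total)
    return scores


scores = norm_tag_based("u1", user_tags, tag_items)
print({i: round(s, 4) for i, s in scores.items()})  # {'i1': 0.5, 'i2': 0.3889, 'i3': 0.1111}
```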

2.4 Tag-based TFIDF

2.4.1 Formula

$$score(u,i)=\sum_t \frac{user\_tags[u,t]}{\log(1+tag\_users[t])} \cdot tag\_items[t,i]$$

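Here tag_users[t] is the total number of times tag t has been used by users. Dividing by log(1 + tag_users[t]) down-weights very popular tags, in the spirit of inverse document frequency; the code below uses the natural logarithm (`np.log`).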
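A minimal sketch of the TFIDF variant, assuming nested dicts of counts plus a `tag_users` dict that follows this post's definition (total uses of each tag). The toy numbers and the function name `tfidf_tag_based` are illustrative.

```python
import math

# Toy counts: user_tags[u][t] and tag_items[t][i] (made-up numbers).
user_tags = {"u1": {"python": 2, "pandas": 1}}
tag_items = {
    "python": {"i1": 3, "i2": 1},
    "pandas": {"i2": 4, "i3": 2},
}
# tag_users[t]: total times tag t was used by users (made-up, consistent with tag_items).
tag_users = {"python": 4, "pandas": 6}


def tfidf_tag_based(u, user_tags, tag_items, tag_users):
    """score(u, i) = sum_t user_tags[u, t] / log(1 + tag_users[t]) * tag_items[t, i]."""
    scores = {}
    for t, ut in user_tags[u].items():
        weight = ut / math.log(1 + tag_users[t])  # natural log, like np.log in the code
        for i, ti in tag_items.get(t, {}).items():
            scores[i] = scores.get(i, 0.0) + weight * ti
    return scores


scores = tfidf_tag_based("u1", user_tags, tag_items, tag_users)
print({i: round(s, 4) for i, s in scores.items()})
```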

3. Code Implementation

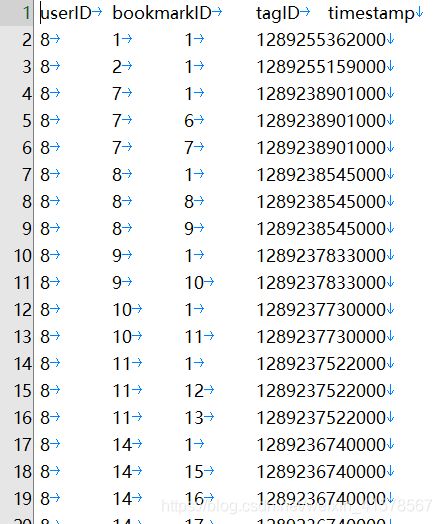

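3.1 Data Description

Standard row-per-record data of the form userID -> bookmarkID -> tagID: each row records that a user applied a tag to a bookmark. The code below reads the file with `pd.read_table` as tab-separated data with a header row containing these column names.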
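For a quick check of the expected layout, here is a minimal sketch that parses an in-memory, tab-separated sample with pandas. The rows are made up; the real file name appears in the `__main__` block of the code below.

```python
import io

import pandas as pd

# Made-up sample in the same tab-separated layout, with a header row.
sample = "userID\tbookmarkID\ttagID\n8\t1\t10\n8\t2\t10\n8\t2\t11\n9\t1\t10\n"

table = pd.read_csv(io.StringIO(sample), sep="\t")
# One row per (user, bookmark, tag) tagging event.
print(table.shape)
print(table.groupby("userID").size().to_dict())
```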

3.2 Tag Recommendation with pandas DataFrame

3.2.1 Python Code

# -*- coding: utf-8 -*-
"""
Created on 2020/11/18 13:54

@author: Irvinfaith
"""
import random
import time

import numpy as np
import pandas as pd

class TagBased(object):

    def __init__(self, data_path, sep='\t'):
        self.data_path = data_path
        self.sep = sep
        # Filled in by fit() with the grouped count Series
        self.calc_result = {}
        self.table = self.__load_data(data_path, sep)

    def __load_data(self, data_path, sep):
        # Expects a header row with userID, bookmarkID and tagID columns
        table = pd.read_table(data_path, sep=sep)
        return table

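    def __calc_frequency(self, table):
        # Each groupby(...).count() returns a Series with a two-level MultiIndex
        # user -> item: number of tags the user applied to the item
        user_item = table.groupby(by=['userID', 'bookmarkID'])['tagID'].count()
        # user -> tag: user_tags[u, t], times user u used tag t
        user_tag = table.groupby(by=['userID', 'tagID'])['bookmarkID'].count()
        # tag -> item: tag_items[t, i], times item i was tagged with t
        tag_item = table.groupby(by=['tagID', 'bookmarkID'])['userID'].count()
        # tag -> user: times tag t was used by user u
        tag_user = table.groupby(by=['tagID', 'userID'])['bookmarkID'].count()
        return {"user_item": user_item, "user_tag": user_tag,
                "tag_item": tag_item, "tag_user": tag_user}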

    def train_test_split(self, ratio, seed):
        return self.__train_test_split(self.table, ratio, seed)

    def __train_test_split(self, table, ratio, seed):
        # Stratified split: for each user, hold out int(record_count * ratio) records as test data
        random.seed(seed)
        t1 = time.time()
        stratify_count = table.groupby(by='userID').size()
        stratify_df = pd.DataFrame({"count": stratify_count})
        stratify_df['test_num'] = (stratify_df['count'] * ratio).apply(int)
        test_id = []
        train_id = []
        # ==========================================
        # Method 1: iterate over rows with DataFrame.iterrows
        # Average over 10 runs: about 2.3 s
        # ==========================================
        # for index, row in stratify_df.iterrows():
        #     tmp_ids = table[table['userID'] == index].index.tolist()
        #     tmp_test_id = random.sample(tmp_ids, row['test_num'])
        #     test_id.extend(tmp_test_id)
        #     train_id.extend(list(set(tmp_ids) - set(tmp_test_id)))
        # ==========================================
        # Method 2: Series.map + DataFrame.apply
        # Average over 10 runs: about 2.2 s
        # ==========================================
        # In principle apply + map should be much faster than iterrows. This version
        # probably stores more intermediate lists than necessary (kept so the split
        # is easy to inspect), which costs extra time when traversing the data.
        # Although apply + map is only about 0.1 s faster than iterrows here,
        # I still prefer it stylistically.
        stratify_df['ids'] = stratify_df.index.map(lambda x: table[table['userID'] == x].index.tolist())
        stratify_df['test_index'] = stratify_df.apply(lambda x: random.sample(x['ids'], x['test_num']), axis=1)
        stratify_df['train_index'] = stratify_df.apply(lambda x: list(set(x['ids']) - set(x['test_index'])), axis=1)
        for ids in stratify_df['test_index']:
            test_id.extend(ids)
        for ids in stratify_df['train_index']:
            train_id.extend(ids)
        # The collected ids are index labels, so select them with .loc rather than .iloc
        train_data = table.loc[train_id].reset_index(drop=True)
        test_data = table.loc[test_id].reset_index(drop=True)
        print("Split train test dataset by stratification, time took: %.4f" % (time.time() - t1))
        return {"train_data": train_data, "test_data": test_data}

    def __calc_item_recommendation(self, user_id, user_item, user_tag, tag_item, n, method):
        if method not in ('norm', 'simple', 'tfidf'):
            raise ValueError("Invalid method `{}`, `method` only supports `norm`, `simple` and `tfidf`".format(method))
        # Items the user has already tagged are excluded from the recommendations
        marked_item = user_item.loc[user_id].index
        recommend = {}
        # user_id -> tag -> item -> count
        marked_tag = user_tag.loc[user_id]        # user_tags[u, t] for each tag t of user u
        marked_tag_sum = marked_tag.values.sum()  # user_tags[u]
        # Series.iteritems() was removed in pandas 2.0; items() works in all versions
        for tag_index, tag_count in marked_tag.items():
            selected_item = tag_item.loc[tag_index]          # tag_items[t, i] for each item i
            selected_item_sum = selected_item.values.sum()   # tag_items[t]
            # tag_users[t]: total number of times tag t was used by users
            tag_selected_users_sum = self.calc_result['tag_user'].loc[tag_index].values.sum()
            for item_index, tag_item_count in selected_item.items():
                if item_index in marked_item:
                    continue
                if method == 'norm':
                    score = (tag_count / marked_tag_sum) * (tag_item_count / selected_item_sum)
                elif method == 'simple':
                    score = tag_count * tag_item_count
                else:  # 'tfidf'
                    score = tag_count / np.log(1 + tag_selected_users_sum) * tag_item_count
                # Accumulate the contribution of each tag (the sum over t in the formulas)
                recommend[item_index] = recommend.get(item_index, 0) + score
        sorted_recommend = sorted(recommend.items(), key=lambda x: x[1], reverse=True)[:n]
        return {user_id: dict(sorted_recommend)}

    def __eval(self, train_recommend, test_data):
        # Hits: recommended items that appear among the user's held-out test items
        user_id = next(iter(train_recommend))
        test_data_item = test_data['bookmarkID'].unique()
        tp = len(set(test_data_item) & set(train_recommend[user_id].keys()))
        return tp

    def fit(self, train_data):
        self.calc_result = self.__calc_frequency(train_data)

    def predict(self, user_id, n, method='simple'):
        return self.__calc_item_recommendation(user_id,
                                               self.calc_result['user_item'],
                                               self.calc_result['user_tag'],
                                               self.calc_result['tag_item'],
                                               n,
                                               method)

    def eval(self, n, test_data, method='simple'):
        t1 = time.time()
        test_data_user_id = test_data['userID'].unique()
        total_tp = 0
        tpfp = 0  # recommended slots: n per user
        tpfn = 0  # distinct held-out items per user
        check = []
        for user_id in test_data_user_id:
            train_recommend = self.predict(user_id, n, method=method)
            user_test_data = test_data[test_data['userID'] == user_id]
            total_tp += self.__eval(train_recommend, user_test_data)
            tpfn += len(user_test_data['bookmarkID'].unique())
            tpfp += n
            check.append((user_id, total_tp, tpfn, tpfp))
        recall = total_tp / tpfn
        precision = total_tp / tpfp
        print("Recall: %10.4f%%" % (recall * 100))
        print("Precision: %10.4f%%" % (precision * 100))
        print("Evaluation time took: %.4f" % (time.time() - t1))
        return recall, precision, check

if __name__ == '__main__':
    file_path = "user_taggedbookmarks-timestamps.dat"
    tb = TagBased(file_path, '\t')
    train_test_data = tb.train_test_split(0.2, 88)
    tb.fit(train_test_data['train_data'])
    calc_result = tb.calc_result
    # Use the 3 methods to predict the top-10 items for user ID 8
    p1_simple = tb.predict(8, 10)
    p1_tf = tb.predict(8, 10, method='tfidf')
    p1_normal = tb.predict(8, 10, method='norm')
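Both split strategies in `__train_test_split` loop over users. One alternative that may be worth benchmarking is a vectorized sketch that keeps the same per-user test size, `int(count * ratio)`: shuffle once, number each user's rows with `groupby().cumcount()`, and send the first `int(count * ratio)` rows of every user to the test set. The function name `stratified_split` and the toy data are hypothetical. The randomness comes from pandas' `random_state` rather than the `random` module, so for the same seed it selects different rows than the original code.

```python
import pandas as pd


def stratified_split(table, ratio, seed):
    """Hold out int(count * ratio) randomly chosen rows per user as test data."""
    # Shuffle all rows once; the seed makes the split reproducible.
    shuffled = table.sample(frac=1, random_state=seed)
    # Position of each row within its user's shuffled rows: 0, 1, 2, ...
    rank = shuffled.groupby("userID").cumcount()
    # Number of rows each user has, mapped back onto every row.
    count = shuffled["userID"].map(shuffled["userID"].value_counts())
    test_mask = rank < (count * ratio).astype(int)
    train = shuffled[~test_mask].sort_index().reset_index(drop=True)
    test = shuffled[test_mask].sort_index().reset_index(drop=True)
    return {"train_data": train, "test_data": test}


# Toy data: user 1 has 10 records, user 2 has 4 (made-up IDs).
toy = pd.DataFrame({
    "userID": [1] * 10 + [2] * 4,
    "bookmarkID": list(range(14)),
    "tagID": [7] * 14,
})
split = stratified_split(toy, ratio=0.2, seed=88)
print(split["test_data"]["userID"].value_counts().to_dict())
```

As in the original, a user with fewer than `1 / ratio` records (user 2 here) contributes no test rows, so every user in the test set also appears in the training data.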