Class Notes, 2020.9.29 (Introduction to Sqoop and Data Migration)

I. Sqoop Overview

Sqoop is a tool for transferring data between Hadoop and relational databases (see the official website).

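- Import data from an RDBMS into HDFS, Hive, or HBase
- Export data from HDFS to an RDBMS
- Use MapReduce to import and export data, which provides parallel operation and fault tolerance

Target users
- System administrators and database administrators
- Big-data analysts, big-data development engineers, and similar roles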

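Sqoop Environment Setup Guide

1. Sqoop Installation

Installing Sqoop requires an existing Java, Hadoop, ZooKeeper, and MySQL environment. If you want to import data into Hive or HBase, the corresponding Hive and HBase environments must also be in place.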

1.1 Download and extract

1. Upload the installation package sqoop-1.4.6-cdh5.14.2.tar.gz to the virtual machine.

2. Extract the Sqoop package into the target directory:

[hadoop@hadoop102 software]$ tar -zxf sqoop-1.4.6-cdh5.14.2.tar.gz -C /opt/install/

3. Create a symbolic link:

[hadoop@hadoop102 ~]$ ln -s /opt/install/sqoop-1.4.6-cdh5.14.2/ /opt/install/sqoop

4. Configure the environment variables:

[hadoop@hadoop102 ~]$ vi /etc/profile

Add the following lines:

export SQOOP_HOME=/opt/install/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

Reload the profile so that the changes take effect:

[hadoop@hadoop102 ~]$ source /etc/profile
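As a quick sanity check after reloading the profile, the following sketch tests whether a directory looks like a usable Sqoop home. The function name `check_sqoop_home` is hypothetical and not part of Sqoop; it only checks that `bin/sqoop` exists and is executable.

```shell
# Hypothetical helper (not part of Sqoop): check that a directory looks like
# a Sqoop installation, i.e. it contains an executable bin/sqoop launcher.
check_sqoop_home() {
  if [ -z "$1" ]; then
    echo "SQOOP_HOME is not set"
    return 1
  fi
  if [ ! -x "$1/bin/sqoop" ]; then
    echo "no executable launcher at $1/bin/sqoop"
    return 1
  fi
  echo "ok: $1"
}

# Check the value exported in /etc/profile; '|| true' keeps the shell going
# if the check fails.
check_sqoop_home "${SQOOP_HOME:-}" || true
```

If the check reports a missing launcher, the symbolic link or the `SQOOP_HOME` value is the first thing to look at.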

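1.2 Edit the configuration files

Like most big-data frameworks, Sqoop keeps its configuration files in the conf directory under the Sqoop root directory.

1. Rename the template configuration file: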

[hadoop@hadoop102 conf]$ mv sqoop-env-template.sh sqoop-env.sh

2. Edit the configuration file:

sqoop-env.sh

export HADOOP_COMMON_HOME=/opt/install/hadoop

export HADOOP_MAPRED_HOME=/opt/install/hadoop

export HIVE_HOME=/opt/install/hive

export ZOOKEEPER_HOME=/opt/install/zookeeper

export ZOOCFGDIR=/opt/install/zookeeper

export HBASE_HOME=/opt/install/hbase

1.3 Copy the JDBC driver

Copy the JDBC driver into Sqoop's lib directory. The driver JAR is provided with the other course materials.

[hadoop@hadoop102 software]$ cp mysql-connector-java-5.1.27-bin.jar /opt/install/sqoop/lib/

1.4 Verify Sqoop

Running a simple command is enough to check that Sqoop is configured correctly:

sqoop help

Some warnings appear (omitted here), followed by the help output:

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

1.5 Test whether Sqoop can connect to the database

[hadoop@hadoop102 conf]$ sqoop list-databases --connect jdbc:mysql://hadoop101:3306/ --username root --password 123456

Replace the connection details with those of your own MySQL database.

The output looks like this:

information_schema

hive

mysql

performance_schema
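Passing `--password` on the command line leaves the password in the shell history and the process list. Sqoop also accepts `-P` (prompt on the console) and `--password-file`. The sketch below prepares such a file; the helper name `write_password_file` and the path in the usage comment are assumptions for illustration.

```shell
# Hypothetical helper: store a password in a file readable only by its owner.
# printf '%s' writes no trailing newline, which would otherwise become part
# of the password that Sqoop reads from the file.
write_password_file() {
  # $1 = file to create, $2 = password
  ( umask 077 && printf '%s' "$2" > "$1" ) || return 1
  chmod 400 "$1"
}

# Usage (assumed path; run against your own MySQL host):
#   write_password_file "$HOME/.mysql.pw" '123456'
#   sqoop list-databases --connect jdbc:mysql://hadoop101:3306/ \
#     --username root --password-file "file://$HOME/.mysql.pw"
```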

II. Sqoop Operations (see the related documentation)

1. Import data from an RDB into HDFS

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--driver com.mysql.jdbc.Driver \

--table customers \

--username root \

--password ok \

--target-dir /data/retail_db/customers \

--m 3

sqoop-import is an alias for sqoop import.

- Filter the imported rows with a WHERE clause

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--driver com.mysql.jdbc.Driver \

--table orders --where "order_id < 500" \

--username root \

--password ok \

--delete-target-dir \

--target-dir /data/retail_db/orders \

--m 3

- Filter the imported columns with --columns

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--driver com.mysql.jdbc.Driver \

--table customers \

--columns "customer_id,customer_fname,customer_lname" \

--username root \

--password ok \

--delete-target-dir \

--target-dir /data/retail_db/customers \

--m 3

- Import data with a free-form query (--query)

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--driver com.mysql.jdbc.Driver \

--query "select * from orders where order_status!='CLOSED' and \$CONDITIONS" \

--username root \

--password ok \

--split-by order_id \

--delete-target-dir \

--target-dir /data/retail_db/orders \

-m 3
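With `--query`, Sqoop substitutes `$CONDITIONS` in each map task with a range predicate on the `--split-by` column, so every mapper reads a different slice of the result. The sketch below only illustrates that idea with integer arithmetic; `print_splits` is a hypothetical helper, and the boundaries Sqoop actually computes may differ.

```shell
# Illustration only (not Sqoop's own code): divide the range [min, max] of a
# split column into at most n contiguous predicates, one per mapper.
print_splits() {
  # $1 = split column, $2 = min value, $3 = max value, $4 = number of mappers
  col=$1; min=$2; max=$3; n=$4
  size=$(( (max - min + n) / n ))     # ceil((max - min + 1) / n)
  lo=$min
  while [ "$lo" -le "$max" ]; do
    hi=$(( lo + size ))
    if [ "$hi" -gt "$max" ]; then
      # Last slice: include the maximum value itself.
      echo "$col >= $lo AND $col <= $max"
    else
      echo "$col >= $lo AND $col < $hi"
    fi
    lo=$hi
  done
}

print_splits order_id 1 9 3
```

For the sample call the three predicates cover order_id 1 to 3, 4 to 6, and 7 to 9, which is the kind of condition that replaces `$CONDITIONS` in each mapper's copy of the query.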

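- Import data incrementally with Sqoop
  - --incremental specifies the incremental import mode:
    - 1) append: appends newly added records
    - 2) lastmodified: appends new records and also picks up rows that have been updated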

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--table orders \

--username root \

--password ok \

--incremental append \

--check-column order_date \

--last-value '2013-07-24 00:00:00' \

--target-dir /data/retail_db/orders \

--m 3
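In append mode, Sqoop imports only the rows whose `--check-column` value is greater than `--last-value`. The following sketch mimics that filter on local CSV text so the selection rule is easy to see; the function `rows_after` and the sample rows are made up for illustration and do not touch Sqoop or MySQL.

```shell
# Illustration only: keep the rows whose second field is greater than the
# given last value, the same rule append mode applies to the check column.
rows_after() {
  # $1 = last value; stdin = lines of "order_id,order_date"
  # Appending "" forces string comparison; timestamps written as
  # 'YYYY-MM-DD HH:MM:SS' sort chronologically when compared as strings.
  awk -F',' -v last="$1" '($2 "") > (last "")'
}

printf '%s\n' \
  '1,2013-07-23 10:00:00' \
  '2,2013-07-24 00:00:00' \
  '3,2013-07-25 08:30:00' | rows_after '2013-07-24 00:00:00'
```

Only the row with order_id 3 passes the filter: a row whose value equals the last value is not imported again.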

2. Import data into Hive

| Argument | Description |
|---|---|
| --hive-home | Override $HIVE_HOME |
| --hive-import | Import tables into Hive (uses Hive’s default delimiters if none are set) |
| --hive-overwrite | Overwrite existing data in the Hive table |
| --create-hive-table | If set, the job fails if the target Hive table exists. By default this property is false. |
| --hive-table | Sets the table name to use when importing to Hive |
| --hive-drop-import-delims | Drops \n, \r, and \01 from string fields when importing to Hive |
| --hive-delims-replacement | Replaces \n, \r, and \01 in string fields with a user-defined string when importing to Hive |
| --hive-partition-key | Name of the Hive field on which partitions are sharded |
| --hive-partition-value | String value that serves as the partition key for the data imported into Hive in this job |
| --map-column-hive | Override default mapping from SQL type to Hive type for configured columns |

- 1. Copy the JAR files

# Copy the Hive JARs into Sqoop's lib directory

cp /opt/install/hive/lib/hive-common-1.1.0-cdh5.14.2.jar /opt/install/sqoop/lib/

cp /opt/install/hive/lib/hive-shims* /opt/install/sqoop/lib/

- 2. Run the import

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--table orders \

--username root \

--password ok \

--hive-import \

--create-hive-table \

--hive-database retail_db \

--hive-table orders \

--m 3

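- 3. Import into a partitioned Hive table. Note that the partition column cannot also be imported as an ordinary column of the table.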

sqoop import \

--connect jdbc:mysql://localhost:3306/retail_db \

--driver com.mysql.jdbc.Driver \

--query "select order_id,order_status from orders where order_date>='2013-11-03' and order_date<'2013-11-04' and \$CONDITIONS" \

--username root \

--password ok \

--delete-target-dir \

--target-dir /data/retail_db/orders \

--split-by order_status \

--hive-import \

--create-hive-table \

--hive-database retail_db \

--hive-table orders \

--hive-partition-key "order_date" \

--hive-partition-value "2013-11-03" \

--m 3

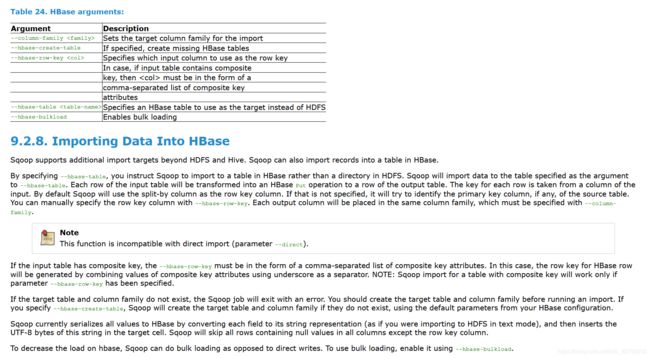

3. Import data into HBase

| Argument | Description |
|---|---|
| --column-family | Sets the target column family for the import |
| --hbase-create-table | If specified, create missing HBase tables |
| --hbase-row-key | Specifies which input column to use as the row key. In case the input table contains a composite key, then |
| --hbase-table | Specifies an HBase table to use as the target instead of HDFS |
| --hbase-bulkload | Enables bulk loading |

# Create the table in the HBase shell

create 'emp_hbase_import','details'

# Import into HBase

sqoop import \

--connect jdbc:mysql://localhost:3306/sqoop \

--username root \

--password ok \

--table emp \

--columns "EMPNO,ENAME,JOB,SAL,COMM" \

--hbase-table emp_hbase_import \

--column-family details \

--hbase-row-key "EMPNO" \

--m 1

4. Export from HDFS to MySQL

# First create an empty table in MySQL

create table customers_demo as select * from customers where 1=2;

# Create the HDFS directory and upload the data

hdfs dfs -mkdir /customerinput

hdfs dfs -put customers.csv /customerinput/

# Export to MySQL

sqoop export \

--connect jdbc:mysql://localhost:3306/retail_db \

--username root \

--password ok \

--table customers_demo \

--export-dir /customerinput/ \

--fields-terminated-by ',' \

--m 1
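An export fails when a row in the input file cannot be parsed into the target table's columns, so it can help to check the field count before running `sqoop export`. A minimal sketch, assuming plain comma-separated rows with no quoted commas; `check_field_count` is a hypothetical helper.

```shell
# Hypothetical pre-flight check: report every line whose number of
# comma-separated fields differs from the expected count.
# Returns non-zero if at least one bad line was found.
check_field_count() {
  # $1 = CSV file, $2 = expected number of fields per line
  awk -F',' -v n="$2" '
    NF != n { bad++; printf "line %d has %d fields, expected %d\n", NR, NF, n }
    END { exit (bad > 0) }
  ' "$1"
}

# Usage (the column count 9 is an assumption about the customers table):
#   check_field_count customers.csv 9 && hdfs dfs -put customers.csv /customerinput/
```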

5. Sqoop script (options file)

# Sqoop script

# 1. Write the script with the following content

#############################

import

--connect

jdbc:mysql://hadoop01:3306/retail_db

--driver

com.mysql.jdbc.Driver

--table

customers

--username

root

--password

root

--target-dir

/data/retail_db/customers

--delete-target-dir

-m

3

##############################

# 2. Run the script

sqoop --options-file job_RDBMS2HDFS.opt
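In an options file, each option and each value sits on its own line. The sketch below turns an ordinary argument list into that layout; `write_options_file` and the file name `demo.opt` are hypothetical, and the credentials are left out on purpose.

```shell
# Hypothetical helper: write every argument on its own line, which is the
# layout an options file uses.
write_options_file() {
  # $1 = output file; remaining arguments = tool name, options and values
  out=$1
  shift
  printf '%s\n' "$@" > "$out"
}

write_options_file demo.opt \
  import \
  --connect jdbc:mysql://hadoop01:3306/retail_db \
  --table customers \
  --target-dir /data/retail_db/customers \
  --delete-target-dir \
  -m 3

cat demo.opt
```

Options that are not stored in the file can still be passed on the command line after `--options-file demo.opt`.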

6. Sqoop jobs

# Create the job. Note: there must be a space between -- and import

sqoop job \

--create mysqlToHdfs \

-- import \

--connect jdbc:mysql://localhost:3306/retail_db \

--table orders \

--username root \

--password ok \

--incremental append \

--check-column order_date \

--last-value '0' \

--target-dir /data/retail_db/orders \

--m 3

# List the saved jobs

sqoop job --list

# Run the job. Scheduling it with crontab is a common setup.

sqoop job --exec mysqlToHdfs
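For scheduled runs, a small wrapper that logs each execution makes failures easier to trace. This is a sketch under assumptions: `run_sqoop_job`, the `SQOOP_BIN` variable, the log directory, and the script path in the crontab comment are all invented here. Note also that a saved job asks for the database password when it runs unless password storage is enabled (the `sqoop.metastore.client.record.password` property) or a password file is used, and a cron run cannot answer that prompt.

```shell
# Hypothetical wrapper: run a saved Sqoop job and append its output and
# exit status to a per-job log file.
run_sqoop_job() {
  # $1 = saved job name, $2 = directory for log files
  job=$1
  log_dir=$2
  mkdir -p "$log_dir" || return 1
  log="$log_dir/$job.log"
  echo "=== $(date '+%Y-%m-%d %H:%M:%S') start $job" >> "$log"
  # SQOOP_BIN is an assumed override; it defaults to the sqoop found on PATH.
  if "${SQOOP_BIN:-sqoop}" job --exec "$job" >> "$log" 2>&1; then
    status=0
  else
    status=$?
  fi
  echo "=== exit status $status" >> "$log"
  return "$status"
}

# Example crontab entry (assumed script path), running every day at 02:00:
#   0 2 * * * /home/hadoop/bin/run_mysqlToHdfs.sh
```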