Pseudo-distributed deployment of hadoop-3.2.1 on CentOS 8

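Background

Pseudo-distributed mode: the Hadoop daemons all run on the local machine, simulating a small cluster.

Compared with standalone mode, this mode adds debugging capability: you can inspect memory usage, HDFS input/output, and the interactions between the daemons.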

I. Obtain the software package

See the previous article, on deploying hadoop-3.2.1 on CentOS 8 in standalone (local) mode.

Link: https://blog.csdn.net/dp340823/article/details/112855683

II. Modify the configuration files

1. Configure environment variables

vim /etc/profile

#set java environment

export JAVA_HOME=/usr/java/jdk/

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

#HADOOP_HOME

export HADOOP_HOME=/opt/hadoop-3.2.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root
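Once /etc/profile has been reloaded in the current shell, a quick check can confirm that the variables are actually visible. This is only a minimal sketch; the function name check_hadoop_env is made up for illustration and is not part of Hadoop.

```shell
# Hypothetical helper: list any of the variables exported above that are unset.
check_hadoop_env() {
  local var value missing=0
  for var in JAVA_HOME HADOOP_HOME HDFS_NAMENODE_USER HDFS_DATANODE_USER \
             HDFS_SECONDARYNAMENODE_USER YARN_RESOURCEMANAGER_USER \
             YARN_NODEMANAGER_USER; do
    # Look up the value of the variable whose name is stored in $var.
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "missing: $var"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling check_hadoop_env prints nothing and returns 0 when every variable is set; otherwise it names the missing ones and returns 1.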

环境变量生效

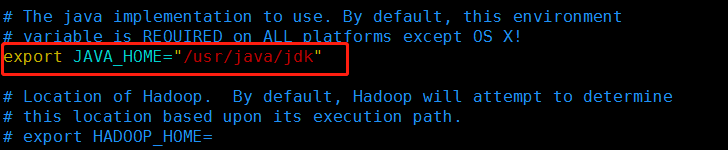

source /etc/profile2.修改配置文件hadoop-env.sh
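The edits made to the env files are shown only in the screenshots, so they are not reproduced here. A common change in hadoop-env.sh is to set JAVA_HOME explicitly, because daemons started over SSH may not read /etc/profile. The sketch below assumes that this is the intended change; the helper name set_java_home is hypothetical, and the JDK path is the one used in /etc/profile above.

```shell
# Hypothetical helper: append an export of JAVA_HOME to an env file
# unless the file already contains an uncommented JAVA_HOME export.
set_java_home() {
  local file="$1" java_home="$2"
  if grep -q '^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=' "$file"; then
    echo "JAVA_HOME already set in $file"
  else
    echo "export JAVA_HOME=$java_home" >> "$file"
  fi
}
```

For example, set_java_home "${HADOOP_HOME}/etc/hadoop/hadoop-env.sh" /usr/java/jdk/ adds the line once and leaves the file alone on later runs. Editing the file by hand in vim, as in the next command, achieves the same thing.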

vim ${HADOOP_HOME}/etc/hadoop/hadoop-env.sh3.修改配置文件mapred-env.sh

vim /opt/hadoop-3.2.1/etc/hadoop/mapred-env.sh4.修改配置文件yarn-env.sh

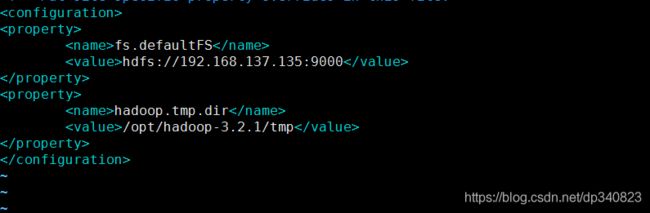

vim /opt/hadoop-3.2.1/etc/hadoop/yarn-env.sh5.修改配置文件core-site.xml

vim /opt/hadoop-3.2.1/etc/hadoop/core-site.xml

Property name: fs.defaultFS

Value: hdfs://192.168.137.135:9000

Property name: hadoop.tmp.dir

Value: /opt/hadoop-3.2.1/tmp
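In a Hadoop site file, each name/value pair like the ones above sits in its own `<property>` element inside the file's `<configuration>` element. The sketch below prints the two core-site.xml settings in that shape so they can be compared with the file being edited. The function names are made up for illustration, and values are not XML-escaped, which is fine for the plain values used here.

```shell
# Print one Hadoop <property> element for the given name and value.
property_xml() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Print a <configuration> element built from name/value argument pairs.
configuration_xml() {
  echo '<configuration>'
  while [ "$#" -ge 2 ]; do
    property_xml "$1" "$2"
    shift 2
  done
  echo '</configuration>'
}

# The two settings from this step.
configuration_xml \
  fs.defaultFS hdfs://192.168.137.135:9000 \
  hadoop.tmp.dir /opt/hadoop-3.2.1/tmp
```

The same helper works for the hdfs-site.xml and mapred-site.xml settings in the later steps by passing their names and values instead.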

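Create this directory before configuring it as the temporary directory, although it also works without creating it in advance.

By default, the HDFS NameNode data is stored under this directory. The *-default.xml default configuration files show many settings that depend on ${hadoop.tmp.dir}.

The default hadoop.tmp.dir is /tmp/hadoop-${user.name}. The problem is that the NameNode then stores the HDFS metadata under /tmp, and if the operating system clears /tmp on reboot, the NameNode metadata is lost. That is a very serious problem, so this path should be changed.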
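The directory step and the /tmp concern can be sketched as two small helpers. Both function names are hypothetical; the default path is the one configured in this article.

```shell
# Create the directory used for hadoop.tmp.dir if it does not exist yet.
ensure_tmp_dir() {
  local dir="${1:-/opt/hadoop-3.2.1/tmp}"
  mkdir -p "$dir" && echo "ready: $dir"
}

# Warn when a path lives under /tmp, where it may not survive a reboot.
warn_if_volatile() {
  case "$1" in
    /tmp|/tmp/*) echo "warning: $1 is under /tmp" ;;
    *)           echo "ok: $1" ;;
  esac
}
```

With Hadoop on the PATH, warn_if_volatile "$(hdfs getconf -confKey hadoop.tmp.dir)" checks the value Hadoop actually resolves from its configuration files.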

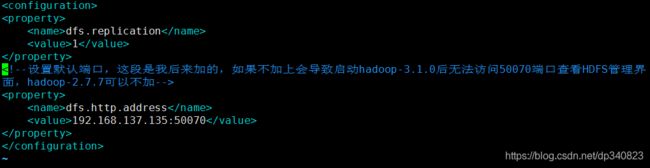

6. Modify the configuration file hdfs-site.xml

vim /opt/hadoop-3.2.1/etc/hadoop/hdfs-site.xml

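Property name: dfs.replication

Value: 1

Property name: dfs.http.address

Value: 192.168.137.135:50070

dfs.replication sets the number of replicas HDFS keeps of each block. Because this pseudo-distributed environment has only one node, it is set to 1.

dfs.http.address is the older, deprecated name of dfs.namenode.http-address. With this setting the NameNode web UI listens on port 50070 instead of the Hadoop 3 default of 9870.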
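To confirm what ended up in a site file after editing, the value of a property can be read back. This is a rough sketch rather than a real XML parser: it assumes each `<name>` and `<value>` sits on its own line, and the function name get_prop is made up for illustration.

```shell
# Print the <value> of a named property from a Hadoop *-site.xml file.
# Assumes <name> and <value> are on separate lines, name first.
get_prop() {
  local file="$1" name="$2"
  awk -v name="$name" '
    index($0, "<name>" name "</name>") { found = 1; next }
    found && /<value>/ {
      gsub(/.*<value>|<\/value>.*/, "")   # strip the tags and any indentation
      print
      exit
    }
  ' "$file"
}
```

For example, get_prop /opt/hadoop-3.2.1/etc/hadoop/hdfs-site.xml dfs.replication reads the file directly, while hdfs getconf -confKey dfs.replication reports the value Hadoop itself resolves, defaults included.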

7. Modify the configuration file mapred-site.xml

vim /opt/hadoop-3.2.1/etc/hadoop/mapred-site.xml

Property name: mapreduce.framework.name

Value: yarn

8. Modify the configuration file yarn-site.xml

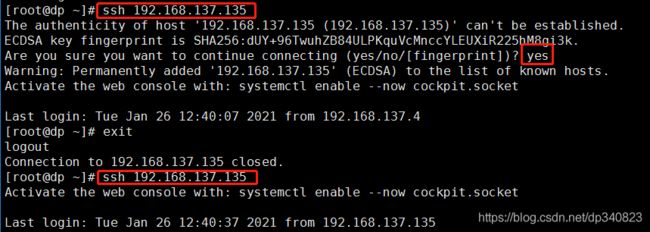

vim /opt/hadoop-3.2.1/etc/hadoop/yarn-site.xml

III. Set up passwordless SSH login

cd ~

ssh-keygen -t rsa

Press Enter at every prompt.

cd .ssh/

cp id_rsa.pub authorized_keys

ssh 192.168.137.135

If this logs in without asking for a password, the setup is done.
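The cp command above replaces any authorized_keys file that already exists. Where other keys need to be kept, an appending variant is one alternative. The helper below is a hypothetical sketch, not something shipped with OpenSSH; it also tightens permissions, since sshd with its default StrictModes setting can reject key files that are too widely writable.

```shell
# Hypothetical helper: add a public key to authorized_keys without
# overwriting keys that are already there, then tighten permissions.
authorize_key() {
  local pubkey="$1" ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  touch "$auth"
  # -F: fixed string, -x: whole-line match, so the key is added only once.
  grep -qxF -e "$(cat "$pubkey")" "$auth" || cat "$pubkey" >> "$auth"
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

After authorize_key ~/.ssh/id_rsa.pub, running ssh -o BatchMode=yes 192.168.137.135 true fails instead of prompting if key-based login is not working, which makes the check scriptable.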

IV. Start Hadoop

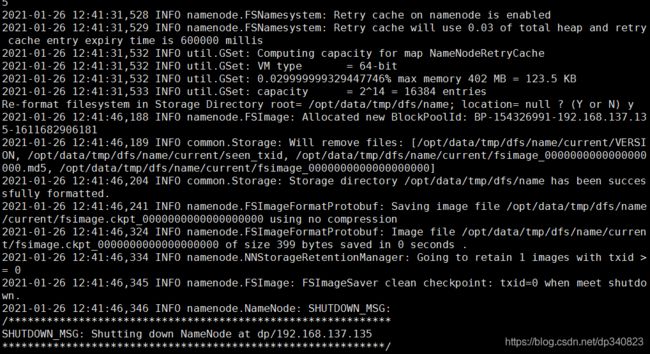

1. Format the NameNode

hdfs namenode -format

Verify:

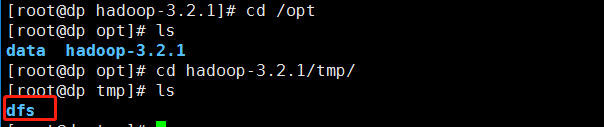
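Formatting is normally a one-time step: running it again on a directory that already holds a namespace replaces the existing metadata and generates a new clusterID. A small guard can avoid doing that by accident. The function name needs_format is made up for illustration, and the default path assumes the hadoop.tmp.dir configured earlier.

```shell
# Hypothetical guard: succeed only when the NameNode directory has not been
# formatted yet, i.e. there is no current/VERSION file in it.
needs_format() {
  local name_dir="${1:-/opt/hadoop-3.2.1/tmp/dfs/name}"
  [ ! -f "$name_dir/current/VERSION" ]
}
```

Used as needs_format && hdfs namenode -format, the format command only runs on a fresh directory.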

2. Inspect the HDFS temporary directory

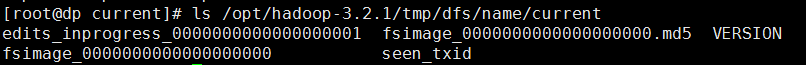

ls /opt/hadoop-3.2.1/tmp/dfs/name/current

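fsimage is the checkpoint file in which the NameNode's in-memory metadata is persisted to disk.

fsimage*.md5 is a checksum file used to verify the integrity of fsimage.

seen_txid holds a transaction ID: the last one recorded at the most recent checkpoint or edit-log roll.

The VERSION file contains:

namespaceID: the unique ID of the NameNode's namespace.

clusterID: the cluster ID. The NameNode and DataNode must have the same clusterID, which marks them as belonging to one cluster.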
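The clusterID rule can be checked by reading both VERSION files, which are plain KEY=value text. The sketch below assumes the DataNode uses its default directory, dfs/data under hadoop.tmp.dir, which only exists after the DataNode has started at least once. Both function names are hypothetical.

```shell
# Print the value of KEY from a Hadoop storage VERSION file (KEY=value lines).
version_field() {
  local file="$1" key="$2"
  sed -n "s/^${key}=//p" "$file"
}

# Compare the clusterID recorded by the NameNode and by the DataNode.
same_cluster() {
  local base="${1:-/opt/hadoop-3.2.1/tmp/dfs}"
  local nn dn
  nn="$(version_field "$base/name/current/VERSION" clusterID)"
  dn="$(version_field "$base/data/current/VERSION" clusterID)"
  echo "NameNode: $nn"
  echo "DataNode: $dn"
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}
```

A mismatch typically shows up after the NameNode has been reformatted while old DataNode data was left in place, in which case the DataNode does not join the cluster.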

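3. Start all processes with start-all

start-all.sh

4. Verify with jps

When jps lists these six processes (NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager, and Jps itself), the environment is configured correctly.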

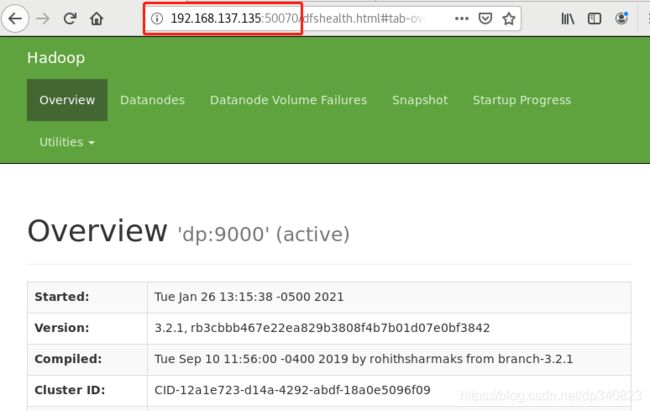
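The jps check can also be scripted instead of read by eye. The sketch below reads jps output on standard input and names any of the five Hadoop daemons that are absent; the function name missing_daemons is made up for illustration.

```shell
# Read `jps` output on stdin and report which expected daemons are missing.
missing_daemons() {
  local out daemon missing=0
  out="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <main class>"; compare the class name as a whole
    # field so that NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$out" | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
      echo "not running: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

Run it as jps | missing_daemons; it prints nothing and returns 0 when all five daemons are up.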

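V. Access the application

1. Turn off the firewall

The firewall blocks requests to services from other machines, so to make the Hadoop services reachable from outside, the firewall must be configured. On a virtual machine it can simply be turned off.

Use systemctl stop firewalld to turn it off temporarily.

Use systemctl disable firewalld to turn it off permanently (it no longer starts at boot).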
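On a machine where the firewall should stay on, opening only the needed ports is an alternative to disabling firewalld. The sketch below uses the standard firewall-cmd options --permanent, --add-port and --reload; the helper names and the DRY_RUN switch are assumptions added for illustration, so the commands can be previewed before they are run as root.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Open the given TCP ports in firewalld and reload the rules.
open_ports() {
  local port
  for port in "$@"; do
    run firewall-cmd --permanent --add-port="${port}/tcp"
  done
  run firewall-cmd --reload
}
```

For the addresses configured in this article, DRY_RUN=1 open_ports 50070 9000 previews the commands, and open_ports 50070 9000 applies them.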

2. Access

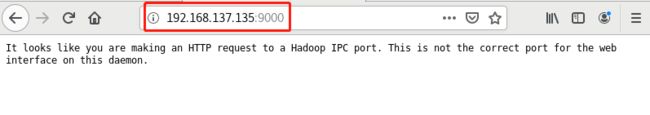

http://192.168.137.135:50070

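http://192.168.137.135:9000

Port 50070 serves the NameNode web UI. Port 9000 is the fs.defaultFS address that HDFS clients connect to over RPC, so a browser will not show a web page there.

Done!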