Spark RDD Programming: A Quick Start

Note: I am using Spark 1.6.3. The plan is to first get a general feel for Spark with 1.x, then work with Spark 2.x in earnest.

For the fundamentals of RDDs, see the separate post on RDD basic principles.

I. A Brief Introduction to RDDs

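- An RDD is a read-only, partitioned collection of records.

- RDD operations fall into two categories: transformations (map/filter/groupBy/join, etc.) and actions (count/take/collect/first/reduce, etc.).

- RDDs produced by transformations are evaluated lazily. A chain of transformations only records how each RDD is derived (its lineage) and performs no actual computation. Computation happens only when an action is called.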
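You need a running SparkContext to watch Spark's laziness directly. Plain Scala collection views behave in an analogous way, so the idea can be tried in any Scala REPL. The sketch below is only an analogy, not Spark code. The `view`/`map`/`filter` chain plays the role of transformations, and `toList` plays the role of an action. The object name `LazyEvalAnalogy` is made up for illustration.

```scala
object LazyEvalAnalogy {
  // Returns (map calls before the "action", map calls after it, final result)
  def run(): (Int, Int, List[Int]) = {
    var mapCalls = 0
    val data = List(1, 2, 3, 4, 5)

    // Like a chain of RDD transformations: on a view, map and filter are lazy,
    // so this line only describes the work and runs none of it.
    val pipeline = data.view.map { x => mapCalls += 1; x * 2 }.filter(_ > 4)
    val callsBefore = mapCalls

    // Like an RDD action: forcing the view finally evaluates the chain.
    val result = pipeline.toList
    (callsBefore, mapCalls, result)
  }

  def main(args: Array[String]): Unit = {
    val (before, after, result) = run()
    println(s"map calls before toList: $before, after: $after, result: $result")
  }
}
```

One similarity is worth keeping in mind. A view, like an uncached RDD, generally recomputes its chain each time it is forced.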

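Common RDD transformations:

- filter(func): selects the elements for which func returns true and returns them as a new dataset

- map(func): passes each element through func and returns the results as a new dataset

- flatMap(func): similar to map(), but each input element can be mapped to zero or more output elements

- groupByKey(): when applied to a dataset of (K, V) pairs, returns a new dataset of (K, Iterable[V]) pairs

- reduceByKey(func): when applied to a dataset of (K, V) pairs, returns a new dataset of (K, V) pairs in which the values of each key are aggregated with func

Actions are where computation is actually triggered. Only when a Spark program reaches an action does it do the real work: it loads the data from files, applies the chain of transformations one after another, and finally executes the action to produce a result.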

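Common actions (Action API):

- count(): returns the number of elements in the dataset

- collect(): returns all elements of the dataset as an array

- first(): returns the first element of the dataset

- take(n): returns the first n elements of the dataset as an array

- reduce(func): aggregates the elements of the dataset with func, which takes two arguments and returns one value

- foreach(func): passes each element of the dataset to func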
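The RDD API closely resembles Scala's collection API, so you can preview the operations listed above on an ordinary `List` before touching a cluster. Plain Scala collections have no `groupByKey` or `reduceByKey`, so in this sketch `groupBy` plus a `map` stands in for them. On a real RDD, each action would also launch a Spark job. The object name `RddOpsOnCollections` is hypothetical.

```scala
object RddOpsOnCollections {
  val lines: List[String] = List("a b", "b c c")

  // Transformations (on an RDD these would only build up the lineage)
  val words: List[String] = lines.flatMap(_.split(" ")) // flatMap: 1 line -> 0..n words
  val pairs: List[(String, Int)] = words.map(w => (w, 1)) // map: 1 element -> 1 element
  val withoutA: List[String] = words.filter(_ != "a")     // filter: keep matching elements

  // groupByKey analogue: key -> all of its values
  val grouped: Map[String, List[Int]] =
    pairs.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }

  // reduceByKey analogue: key -> its values merged pairwise with func (here +)
  val counts: Map[String, Int] =
    pairs.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2).reduce(_ + _) }

  // Actions (on an RDD each of these would launch a job and return a local value)
  val n: Int = words.size                        // count()
  val firstWord: String = words.head             // first()
  val firstTwo: List[String] = words.take(2)     // take(2)
  val total: Int = pairs.map(_._2).reduce(_ + _) // reduce(func)

  def main(args: Array[String]): Unit =
    counts.foreach(println)                      // foreach(func)
}
```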

II. Creating RDDs

Ways to create an RDD:

- a. Load an ordinary local collection as an RDD with parallelize() or makeRDD()

- b. Create an RDD from external storage, e.g. load a data file directly as an RDD with textFile()

- c. Derive a new RDD from an existing RDD

scala> val a = sc.parallelize(List("dog", "cat", "owl", "gnu", "ant"), 2)

scala> a.partitions.size

res24: Int = 2

Here the second argument, 2, sets the number of partitions.
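How are the elements distributed among the partitions? As far as I know, Spark's `ParallelCollectionRDD` slices a local `Seq` by index ranges. With n elements and p partitions, partition i gets the elements from `i * n / p` (inclusive) to `(i + 1) * n / p` (exclusive). The sketch below reproduces that rule in plain Scala. Treat it as an illustration of the assumed rule rather than Spark's exact code; the object name `PartitionSlicing` is hypothetical.

```scala
object PartitionSlicing {
  // Partition i gets elements [i * n / p, (i + 1) * n / p), n = size, p = partitions.
  // Assumed to mirror how Spark slices a local Seq in parallelize().
  def slice[T](seq: Seq[T], numSlices: Int): List[List[T]] = {
    require(numSlices >= 1, "Positive number of partitions required")
    val items = seq.toVector
    val length = items.length.toLong // Long avoids overflow in i * length
    (0 until numSlices).toList.map { i =>
      val start = ((i * length) / numSlices).toInt
      val end = (((i + 1) * length) / numSlices).toInt
      items.slice(start, end).toList
    }
  }

  def main(args: Array[String]): Unit = {
    println(slice(List("dog", "cat", "owl", "gnu", "ant"), 2))
    println(slice(List("dog", "cat", "owl", "gnu", "ant123", "123", "4321"), 8))
  }
}
```

With 5 animals and 2 partitions, this yields `List(dog, cat)` and `List(owl, gnu, ant)`. The a1 example below has 7 strings and 8 default partitions. Under this rule, partition 0 comes out empty, so saving such an RDD can produce an empty part file.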

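In the next example no partition count is specified, so Spark assigns a default. This default is sc.defaultParallelism, which in local mode typically equals the number of available cores. A check shows that 8 partitions were assigned here: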

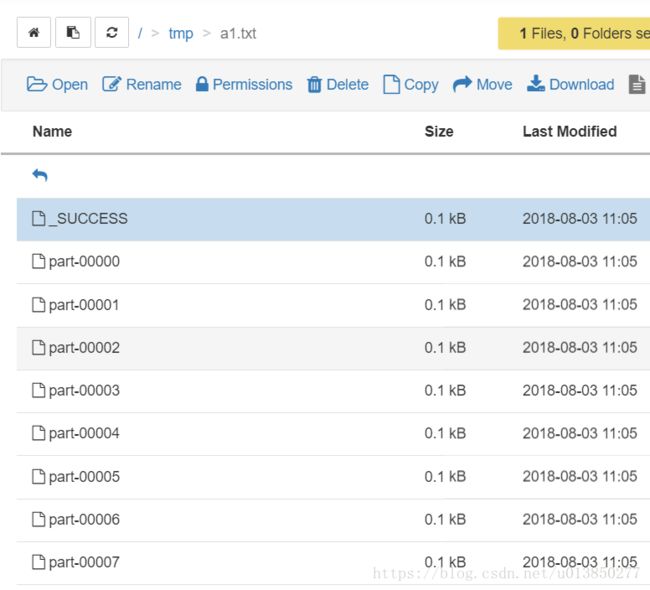

scala> val a1 = sc.parallelize(List("dog", "cat", "owl", "gnu", "ant123","123","4321"))

scala> a1.saveAsTextFile("/tmp/a1.txt")

scala> a1.partitions.size

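res22: Int = 8

saveAsTextFile writes a directory (here /tmp/a1.txt) containing one part-NNNNN file per partition. You can also control how many partitions are written. The following repartitions to a single partition, so the whole result ends up in one file. coalesce(1) would also work and avoids a full shuffle.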

scala> a1.repartition(1).saveAsTextFile("/tmp/a2.txt")

III. Data Preparation

Upload the following files to HDFS (the examples read them from /tmp):

- student.txt

1001 李正明

1002 王一磊

1003 陈志华

1004 张永丽

1005 赵信

1006 古明远

1007 刘浩明

1008 沈彬

1009 李子琪

1010 五嘉栋

1011 柳梦文

1012 钱多多

- result_bigdata.txt

1001 大数据基础 90

1002 大数据基础 94

1003 大数据基础 100

1004 大数据基础 99

1005 大数据基础 90

1006 大数据基础 93

1007 大数据基础 100

1008 大数据基础 93

1009 大数据基础 89

1010 大数据基础 78

1011 大数据基础 91

1012 大数据基础 84

- result_math.txt

1001 应用数学 90

1002 应用数学 94

1003 应用数学 100

1004 应用数学 100

1005 应用数学 94

1006 应用数学 80

1007 应用数学 90

1008 应用数学 94

1009 应用数学 84

1010 应用数学 86

1011 应用数学 79

1012 应用数学 91

IV. Programming Examples

(1) Retrieve the score records of the top 5 students in each course

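- Approach:

First transform the data in the two score RDDs. Split each line into 3 columns (student ID, course, score) on a single space and store it as a triple. Convert the score to Int with toInt.

Sort the triples in descending order of score, the third element of each tuple, with sortBy.

Use take to fetch the first 5 elements of each RDD. These are the 5 top-scoring students.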
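To sanity-check the expected output without a cluster, you can mirror the three steps on local Scala collections. In this sketch the file contents from the data section are pasted in, and `sortBy(_._3)(Ordering[Int].reverse)` plays the role of `sortBy(_._3, false)`. It splits with `trim.split("\\s+")` rather than `split(" ")`, so stray spaces or a trailing carriage return would not break `toInt`. That is a deliberate deviation from the spark-shell code. The object name `TopScores` is hypothetical.

```scala
object TopScores {
  // Contents of result_math.txt and result_bigdata.txt from the data section
  val mathLines: Seq[String] = Seq(
    "1001 应用数学 90", "1002 应用数学 94", "1003 应用数学 100", "1004 应用数学 100",
    "1005 应用数学 94", "1006 应用数学 80", "1007 应用数学 90", "1008 应用数学 94",
    "1009 应用数学 84", "1010 应用数学 86", "1011 应用数学 79", "1012 应用数学 91")
  val bigdataLines: Seq[String] = Seq(
    "1001 大数据基础 90", "1002 大数据基础 94", "1003 大数据基础 100", "1004 大数据基础 99",
    "1005 大数据基础 90", "1006 大数据基础 93", "1007 大数据基础 100", "1008 大数据基础 93",
    "1009 大数据基础 89", "1010 大数据基础 78", "1011 大数据基础 91", "1012 大数据基础 84")

  // One line -> (studentId, course, score); splitting on runs of whitespace
  def parse(line: String): (String, String, Int) = {
    val fields = line.trim.split("\\s+")
    (fields(0), fields(1), fields(2).toInt)
  }

  // Local counterpart of rdd.sortBy(_._3, false).take(n); Scala's sortBy is stable
  def top(lines: Seq[String], n: Int): Seq[(String, String, Int)] =
    lines.map(parse).sortBy(_._3)(Ordering[Int].reverse).take(n)

  def main(args: Array[String]): Unit = {
    println(top(mathLines, 5))
    println(top(bigdataLines, 5))
  }
}
```

In the big data course, 1006 and 1008 are tied at 93 for fifth place. Scala's `sortBy` is stable, so the local version keeps file order and returns 1006. Spark's `sortBy` is not documented as stable, so you should not rely on which of the two appears in `take(5)`.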

Start spark-shell:

./bin/spark-shell

val math = sc.textFile("/tmp/result_math.txt")

val bigdata = sc.textFile("/tmp/result_bigdata.txt")

val m_math = math .map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val m_bigdata = bigdata.map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val mathResult = m_math.sortBy(_._3,false).take(5)

val bigdataResult = m_bigdata.sortBy(_._3,false).take(5)

(2) Find the IDs of students who scored 100 in either course

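- This builds on the previous example and reuses the existing RDDs (m_math and m_bigdata).

Approach: take the students who scored 100 in each course and merge them with union. Then extract the student IDs from the merged result and remove duplicates.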
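Here is the same filter → union → map → distinct pipeline on local collections, with the (ID, score) pairs copied from the data section. `++` stands in for `union`. Like RDD `union`, it keeps duplicates, which is why `distinct` is still needed. The object name `PerfectScores` is hypothetical.

```scala
object PerfectScores {
  // (studentId, score) pairs copied from result_math.txt and result_bigdata.txt
  val math: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 100, "1005" -> 94, "1006" -> 80,
    "1007" -> 90, "1008" -> 94, "1009" -> 84, "1010" -> 86, "1011" -> 79, "1012" -> 91)
  val bigdata: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 99, "1005" -> 90, "1006" -> 93,
    "1007" -> 100, "1008" -> 93, "1009" -> 89, "1010" -> 78, "1011" -> 91, "1012" -> 84)

  // Filter each course, concatenate (like RDD union, keeps duplicates), then dedupe IDs
  def perfectIds(a: Seq[(String, Int)], b: Seq[(String, Int)]): Seq[String] =
    (a.filter(_._2 == 100) ++ b.filter(_._2 == 100)).map(_._1).distinct

  val ids: Seq[String] = perfectIds(math, bigdata)

  def main(args: Array[String]): Unit = println(ids)
}
```

Locally the IDs come out in input order (1003, 1004, 1007). In the transcript, Spark returns `Array(1003, 1007, 1004)`. `distinct` involves a shuffle that redistributes elements by key hash, so the order of the collected result is not something to depend on.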

The code:

scala> val student_100 = m_math.filter(_._3 == 100).union(m_bigdata.filter(_._3 == 100))

scala> val result = student_100.map(_._1).distinct

scala> val resultArray = result.collect

(3) Output each student's total score by adding up the scores with the same student ID from the two score tables
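`reduceByKey` does not simply gather every value of a key in one place. Spark first combines values inside each partition (map-side combine), then merges the partial results after the shuffle. The local sketch below imitates those two phases in plain Scala. For simplicity it treats each score file as one partition, whereas the transcript shows each file actually split into two. The object name `TotalScores` is hypothetical.

```scala
object TotalScores {
  // reduceByKey(f) in two phases: combine inside each partition, then merge partials
  def reduceByKey[K, V](partitions: Seq[Seq[(K, V)]])(f: (V, V) => V): Map[K, V] = {
    val partials: Seq[Map[K, V]] = partitions.map { part =>
      part.foldLeft(Map.empty[K, V]) { case (acc, (k, v)) =>
        acc.updated(k, acc.get(k).map(f(_, v)).getOrElse(v))
      }
    }
    partials.foldLeft(Map.empty[K, V]) { (acc, partial) =>
      partial.foldLeft(acc) { case (merged, (k, v)) =>
        merged.updated(k, merged.get(k).map(f(_, v)).getOrElse(v))
      }
    }
  }

  // (studentId, score) pairs copied from the data section
  val math: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 100, "1005" -> 94, "1006" -> 80,
    "1007" -> 90, "1008" -> 94, "1009" -> 84, "1010" -> 86, "1011" -> 79, "1012" -> 91)
  val bigdata: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 99, "1005" -> 90, "1006" -> 93,
    "1007" -> 100, "1008" -> 93, "1009" -> 89, "1010" -> 78, "1011" -> 91, "1012" -> 84)

  // Hypothetical partitioning: one partition per input file
  val totals: Map[String, Int] = reduceByKey(Seq(math, bigdata))(_ + _)

  def main(args: Array[String]): Unit = totals.toSeq.sorted.foreach(println)
}
```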

scala> :paste

val math = sc.textFile("/tmp/result_math.txt")

val bigdata = sc.textFile("/tmp/result_bigdata.txt")

val m_math = math .map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val m_bigdata = bigdata.map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val all_scores = (m_math union m_bigdata).map(x=>(x._1,x._3)).reduceByKey((x,y) => x+y).collect

(4) Compute each student's average score

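Solution 1: take the totals all_scores from example (3) and divide each by the number of courses (2). This requires all_scores to be an RDD, i.e. defined without the trailing .collect used in example (3), as in example (5). If all_scores is the collected Array, aveScores is also a local Array and aveScores.collect fails.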

scala> val aveScores = all_scores.map(x => (x._1,x._2/2.0))

scala> aveScores.collect

res2: Array[(String, Double)] = Array((1005,92.0), (1012,87.5), (1001,90.0), (1009,86.5), (1002,94.0), (1006,86.5), (1010,82.0), (1003,100.0), (1007,95.0), (1008,93.5), (1011,85.0), (1004,99.5))

Solution 2: combineByKey
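The original does not show code for this solution, so what follows is one possible sketch, not necessarily the author's. `combineByKey` takes three functions: `createCombiner`, `mergeValue` and `mergeCombiners`. For an average, a natural accumulator is a (sum, count) pair. The plain-Scala part simulates how those functions are applied, first within each partition and then across partitions. The commented lines show how the same functions might be passed to `combineByKey` in spark-shell; that part is untested here. The object name and the one-file-per-partition split are hypothetical.

```scala
object AverageScores {
  // The three functions combineByKey expects, with a (sum, count) accumulator
  def createCombiner(v: Int): (Int, Int) = (v, 1)
  def mergeValue(c: (Int, Int), v: Int): (Int, Int) = (c._1 + v, c._2 + 1)
  def mergeCombiners(a: (Int, Int), b: (Int, Int)): (Int, Int) = (a._1 + b._1, a._2 + b._2)

  // Local simulation: createCombiner/mergeValue per partition, then mergeCombiners across them
  def combineByKey(partitions: Seq[Seq[(String, Int)]]): Map[String, (Int, Int)] = {
    val perPartition = partitions.map { part =>
      part.foldLeft(Map.empty[String, (Int, Int)]) { case (acc, (k, v)) =>
        acc.updated(k, acc.get(k).map(mergeValue(_, v)).getOrElse(createCombiner(v)))
      }
    }
    perPartition.foldLeft(Map.empty[String, (Int, Int)]) { (acc, m) =>
      m.foldLeft(acc) { case (merged, (k, c)) =>
        merged.updated(k, merged.get(k).map(mergeCombiners(_, c)).getOrElse(c))
      }
    }
  }

  // (studentId, score) pairs copied from the data section
  val math: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 100, "1005" -> 94, "1006" -> 80,
    "1007" -> 90, "1008" -> 94, "1009" -> 84, "1010" -> 86, "1011" -> 79, "1012" -> 91)
  val bigdata: Seq[(String, Int)] = Seq(
    "1001" -> 90, "1002" -> 94, "1003" -> 100, "1004" -> 99, "1005" -> 90, "1006" -> 93,
    "1007" -> 100, "1008" -> 93, "1009" -> 89, "1010" -> 78, "1011" -> 91, "1012" -> 84)

  val averages: Map[String, Double] =
    combineByKey(Seq(math, bigdata)).map { case (k, (sum, n)) => k -> sum.toDouble / n }

  // Possible spark-shell equivalent (sketch, not run here):
  // (m_math union m_bigdata).map(x => (x._1, x._3))
  //   .combineByKey(
  //     (v: Int) => (v, 1),
  //     (c: (Int, Int), v: Int) => (c._1 + v, c._2 + 1),
  //     (a: (Int, Int), b: (Int, Int)) => (a._1 + b._1, a._2 + b._2))
  //   .mapValues { case (sum, n) => sum.toDouble / n }

  def main(args: Array[String]): Unit = averages.toSeq.sorted.foreach(println)
}
```

Unlike dividing by a hard-coded 2.0, the (sum, count) accumulator still gives a correct average if a student has scores in only one of the files.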

(5) Aggregate the students' scores and store them as text on HDFS, with the fields student ID, name, big data score, math score, total, and average
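Each `join` nests the accumulated value one level deeper. As a result, the code below writes records such as `(1005,((((赵信,90),94),184),92.0))` rather than the flat ID, name, big data, math, total, average layout the task asks for. One way to flatten them is a pattern match on the nested tuple before saving. The sketch below shows the match on a single record. In spark-shell, the same `case` could be used inside `studentInfo.map { ... }` ahead of `saveAsTextFile`. The object name `FlattenRecord` is hypothetical. Note also that `join` is an inner join, so a student missing from any of the joined RDDs would be dropped.

```scala
object FlattenRecord {
  // Shape produced by m_student.join(...).join(...).join(all_scores).join(ave_scores):
  // (id, ((((name, bigdataScore), mathScore), total), average))
  type Nested = (String, ((((String, Int), Int), Int), Double))

  // Destructure the nested pairs into one comma-separated line
  def toCsv(record: Nested): String = record match {
    case (id, ((((name, bigdata), math), total), avg)) =>
      s"$id,$name,$bigdata,$math,$total,$avg"
  }

  def main(args: Array[String]): Unit =
    println(toCsv(("1005", (((("赵信", 90), 94), 184), 92.0))))
}
```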

scala> :paste

val math = sc.textFile("/tmp/result_math.txt")

val bigdata = sc.textFile("/tmp/result_bigdata.txt")

val student = sc.textFile("/tmp/student.txt")

val m_student = student.map{x=> val line=x.split(" ");(line(0),line(1))}

val m_math = math .map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val m_bigdata = bigdata.map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val all_scores = (m_math union m_bigdata).map(x=>(x._1,x._3)).reduceByKey((x,y) => x+y)

val ave_scores = all_scores.map(x => (x._1,x._2/2.0))

val studentInfo = m_student.join(m_bigdata.map(x=>(x._1,x._3))).join(m_math.map(x=>(x._1,x._3))).join(all_scores ).join(ave_scores)

studentInfo.repartition(1).saveAsTextFile("/tmp/student")

The saved result:

[root@dn02 ~]# hdfs dfs -cat /tmp/student/part-00000

(1005,((((赵信,90),94),184),92.0))

(1012,((((钱多多,84),91),175),87.5))

(1001,((((李正明,90),90),180),90.0))

(1009,((((李子琪,89),84),173),86.5))

(1002,((((王一磊,94),94),188),94.0))

(1006,((((古明远,93),80),173),86.5))

(1010,((((五嘉栋,78),86),164),82.0))

(1003,((((陈志华,100),100),200),100.0))

(1007,((((刘浩明,100),90),190),95.0))

(1008,((((沈彬,93),94),187),93.5))

(1011,((((柳梦文,91),79),170),85.0))

(1004,((((张永丽,99),100),199),99.5))

V. Full Execution Transcripts of the Examples

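- Full run of example (1). Note that sortBy already launches a job (Job 0 below) even though it is a transformation. It samples the data to build the range partitioner used for sorting; take then launches Job 1.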

scala> val math = sc.textFile("/tmp/result_math.txt")

18/08/02 10:38:22 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 336.5 KB, free 1066.5 KB)

18/08/02 10:38:22 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 28.5 KB, free 1095.1 KB)

18/08/02 10:38:22 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost:43561 (size: 28.5 KB, free: 511.0 MB)

18/08/02 10:38:22 INFO SparkContext: Created broadcast 2 from textFile at :27

math: org.apache.spark.rdd.RDD[String] = /tmp/result_math.txt MapPartitionsRDD[7] at textFile at :27

scala> val bigdata = sc.textFile("/tmp/result_bigdata.txt")

18/08/02 10:38:36 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 336.5 KB, free 1431.6 KB)

18/08/02 10:38:36 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 28.5 KB, free 1460.1 KB)

18/08/02 10:38:36 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost:43561 (size: 28.5 KB, free: 511.0 MB)

18/08/02 10:38:36 INFO SparkContext: Created broadcast 3 from textFile at :27

bigdata: org.apache.spark.rdd.RDD[String] = /tmp/result_bigdata.txt MapPartitionsRDD[9] at textFile at :27

scala> val m_math = math .map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

m_math: org.apache.spark.rdd.RDD[(String, String, Int)] = MapPartitionsRDD[10] at map at :29

scala> val m_bigdata = bigdata.map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

m_bigdata: org.apache.spark.rdd.RDD[(String, String, Int)] = MapPartitionsRDD[11] at map at :29

scala> val mathResult = m_math.sortBy(_._3,false).take(5)

18/08/02 10:39:07 INFO FileInputFormat: Total input paths to process : 1

18/08/02 10:39:07 INFO SparkContext: Starting job: sortBy at :31

18/08/02 10:39:07 INFO DAGScheduler: Got job 0 (sortBy at :31) with 2 output partitions

18/08/02 10:39:07 INFO DAGScheduler: Final stage: ResultStage 0 (sortBy at :31)

18/08/02 10:39:07 INFO DAGScheduler: Parents of final stage: List()

18/08/02 10:39:07 INFO DAGScheduler: Missing parents: List()

18/08/02 10:39:07 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[14] at sortBy at :31), which has no missing parents

18/08/02 10:39:07 INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 4.1 KB, free 1464.2 KB)

18/08/02 10:39:07 INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 2.2 KB, free 1466.5 KB)

18/08/02 10:39:07 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on localhost:43561 (size: 2.2 KB, free: 511.0 MB)

18/08/02 10:39:07 INFO SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:07 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[14] at sortBy at :31)

18/08/02 10:39:07 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

18/08/02 10:39:07 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, partition 0,ANY, 2152 bytes)

18/08/02 10:39:07 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, partition 1,ANY, 2152 bytes)

18/08/02 10:39:07 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

18/08/02 10:39:07 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

18/08/02 10:39:07 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:133+134

18/08/02 10:39:07 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:0+133

18/08/02 10:39:07 INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

18/08/02 10:39:07 INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

18/08/02 10:39:07 INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

18/08/02 10:39:07 INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

18/08/02 10:39:07 INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

18/08/02 10:39:07 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:39:07 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 2293 bytes result sent to driver

18/08/02 10:39:07 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 2293 bytes result sent to driver

18/08/02 10:39:07 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 152 ms on localhost (1/2)

18/08/02 10:39:07 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 179 ms on localhost (2/2)

18/08/02 10:39:07 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

18/08/02 10:39:07 INFO DAGScheduler: ResultStage 0 (sortBy at :31) finished in 0.199 s

18/08/02 10:39:07 INFO DAGScheduler: Job 0 finished: sortBy at :31, took 0.278488 s

18/08/02 10:39:07 INFO SparkContext: Starting job: take at :31

18/08/02 10:39:07 INFO DAGScheduler: Registering RDD 12 (sortBy at :31)

18/08/02 10:39:07 INFO DAGScheduler: Got job 1 (take at :31) with 1 output partitions

18/08/02 10:39:07 INFO DAGScheduler: Final stage: ResultStage 2 (take at :31)

18/08/02 10:39:07 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 1)

18/08/02 10:39:07 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 1)

18/08/02 10:39:07 INFO DAGScheduler: Submitting ShuffleMapStage 1 (MapPartitionsRDD[12] at sortBy at :31), which has no missing parents

18/08/02 10:39:07 INFO MemoryStore: Block broadcast_5 stored as values in memory (estimated size 4.7 KB, free 1471.2 KB)

18/08/02 10:39:07 INFO MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 2.6 KB, free 1473.8 KB)

18/08/02 10:39:07 INFO BlockManagerInfo: Added broadcast_5_piece0 in memory on localhost:43561 (size: 2.6 KB, free: 511.0 MB)

18/08/02 10:39:07 INFO SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:07 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 1 (MapPartitionsRDD[12] at sortBy at :31)

18/08/02 10:39:07 INFO TaskSchedulerImpl: Adding task set 1.0 with 2 tasks

18/08/02 10:39:07 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, localhost, partition 0,ANY, 2141 bytes)

18/08/02 10:39:07 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 3, localhost, partition 1,ANY, 2141 bytes)

18/08/02 10:39:07 INFO Executor: Running task 0.0 in stage 1.0 (TID 2)

18/08/02 10:39:07 INFO Executor: Running task 1.0 in stage 1.0 (TID 3)

18/08/02 10:39:07 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:133+134

18/08/02 10:39:07 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:0+133

18/08/02 10:39:07 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:39:07 INFO Executor: Finished task 0.0 in stage 1.0 (TID 2). 2309 bytes result sent to driver

18/08/02 10:39:07 INFO Executor: Finished task 1.0 in stage 1.0 (TID 3). 2309 bytes result sent to driver

18/08/02 10:39:08 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 38 ms on localhost (1/2)

18/08/02 10:39:08 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 39 ms on localhost (2/2)

18/08/02 10:39:08 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

18/08/02 10:39:08 INFO DAGScheduler: ShuffleMapStage 1 (sortBy at :31) finished in 0.042 s

18/08/02 10:39:08 INFO DAGScheduler: looking for newly runnable stages

18/08/02 10:39:08 INFO DAGScheduler: running: Set()

18/08/02 10:39:08 INFO DAGScheduler: waiting: Set(ResultStage 2)

18/08/02 10:39:08 INFO DAGScheduler: failed: Set()

18/08/02 10:39:08 INFO DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[16] at sortBy at :31), which has no missing parents

18/08/02 10:39:08 INFO MemoryStore: Block broadcast_6 stored as values in memory (estimated size 3.4 KB, free 1477.2 KB)

18/08/02 10:39:08 INFO MemoryStore: Block broadcast_6_piece0 stored as bytes in memory (estimated size 2021.0 B, free 1479.2 KB)

18/08/02 10:39:08 INFO BlockManagerInfo: Added broadcast_6_piece0 in memory on localhost:43561 (size: 2021.0 B, free: 511.0 MB)

18/08/02 10:39:08 INFO SparkContext: Created broadcast 6 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:08 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 2 (MapPartitionsRDD[16] at sortBy at :31)

18/08/02 10:39:08 INFO TaskSchedulerImpl: Adding task set 2.0 with 1 tasks

18/08/02 10:39:08 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 4, localhost, partition 0,NODE_LOCAL, 1894 bytes)

18/08/02 10:39:08 INFO Executor: Running task 0.0 in stage 2.0 (TID 4)

18/08/02 10:39:08 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks out of 2 blocks

18/08/02 10:39:08 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 4 ms

18/08/02 10:39:08 INFO Executor: Finished task 0.0 in stage 2.0 (TID 4). 1480 bytes result sent to driver

18/08/02 10:39:08 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 4) in 62 ms on localhost (1/1)

18/08/02 10:39:08 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

18/08/02 10:39:08 INFO DAGScheduler: ResultStage 2 (take at :31) finished in 0.063 s

18/08/02 10:39:08 INFO DAGScheduler: Job 1 finished: take at :31, took 0.136507 s

mathResult: Array[(String, String, Int)] = Array((1003,应用数学,100), (1004,应用数学,100), (1002,应用数学,94), (1005,应用数学,94), (1008,应用数学,94))

scala> val bigdataResult = m_bigdata.sortBy(_._3,false).take(5)

18/08/02 10:39:17 INFO FileInputFormat: Total input paths to process : 1

18/08/02 10:39:17 INFO SparkContext: Starting job: sortBy at :31

18/08/02 10:39:17 INFO DAGScheduler: Got job 2 (sortBy at :31) with 2 output partitions

18/08/02 10:39:17 INFO DAGScheduler: Final stage: ResultStage 3 (sortBy at :31)

18/08/02 10:39:17 INFO DAGScheduler: Parents of final stage: List()

18/08/02 10:39:17 INFO DAGScheduler: Missing parents: List()

18/08/02 10:39:17 INFO DAGScheduler: Submitting ResultStage 3 (MapPartitionsRDD[19] at sortBy at :31), which has no missing parents

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_7 stored as values in memory (estimated size 4.2 KB, free 1483.3 KB)

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_7_piece0 stored as bytes in memory (estimated size 2.3 KB, free 1485.6 KB)

18/08/02 10:39:17 INFO BlockManagerInfo: Added broadcast_7_piece0 in memory on localhost:43561 (size: 2.3 KB, free: 511.0 MB)

18/08/02 10:39:17 INFO SparkContext: Created broadcast 7 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:17 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 3 (MapPartitionsRDD[19] at sortBy at :31)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Adding task set 3.0 with 2 tasks

18/08/02 10:39:17 INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID 5, localhost, partition 0,ANY, 2155 bytes)

18/08/02 10:39:17 INFO TaskSetManager: Starting task 1.0 in stage 3.0 (TID 6, localhost, partition 1,ANY, 2155 bytes)

18/08/02 10:39:17 INFO Executor: Running task 0.0 in stage 3.0 (TID 5)

18/08/02 10:39:17 INFO Executor: Running task 1.0 in stage 3.0 (TID 6)

18/08/02 10:39:17 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:0+151

18/08/02 10:39:17 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:151+152

18/08/02 10:39:17 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:39:17 INFO Executor: Finished task 0.0 in stage 3.0 (TID 5). 2348 bytes result sent to driver

18/08/02 10:39:17 INFO Executor: Finished task 1.0 in stage 3.0 (TID 6). 2348 bytes result sent to driver

18/08/02 10:39:17 INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID 5) in 14 ms on localhost (1/2)

18/08/02 10:39:17 INFO TaskSetManager: Finished task 1.0 in stage 3.0 (TID 6) in 15 ms on localhost (2/2)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

18/08/02 10:39:17 INFO DAGScheduler: ResultStage 3 (sortBy at :31) finished in 0.017 s

18/08/02 10:39:17 INFO DAGScheduler: Job 2 finished: sortBy at :31, took 0.024577 s

18/08/02 10:39:17 INFO SparkContext: Starting job: take at :31

18/08/02 10:39:17 INFO DAGScheduler: Registering RDD 17 (sortBy at :31)

18/08/02 10:39:17 INFO DAGScheduler: Got job 3 (take at :31) with 1 output partitions

18/08/02 10:39:17 INFO DAGScheduler: Final stage: ResultStage 5 (take at :31)

18/08/02 10:39:17 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 4)

18/08/02 10:39:17 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 4)

18/08/02 10:39:17 INFO DAGScheduler: Submitting ShuffleMapStage 4 (MapPartitionsRDD[17] at sortBy at :31), which has no missing parents

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_8 stored as values in memory (estimated size 4.7 KB, free 1490.3 KB)

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_8_piece0 stored as bytes in memory (estimated size 2.6 KB, free 1492.9 KB)

18/08/02 10:39:17 INFO BlockManagerInfo: Added broadcast_8_piece0 in memory on localhost:43561 (size: 2.6 KB, free: 511.0 MB)

18/08/02 10:39:17 INFO SparkContext: Created broadcast 8 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:17 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 4 (MapPartitionsRDD[17] at sortBy at :31)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Adding task set 4.0 with 2 tasks

18/08/02 10:39:17 INFO TaskSetManager: Starting task 0.0 in stage 4.0 (TID 7, localhost, partition 0,ANY, 2144 bytes)

18/08/02 10:39:17 INFO TaskSetManager: Starting task 1.0 in stage 4.0 (TID 8, localhost, partition 1,ANY, 2144 bytes)

18/08/02 10:39:17 INFO Executor: Running task 0.0 in stage 4.0 (TID 7)

18/08/02 10:39:17 INFO Executor: Running task 1.0 in stage 4.0 (TID 8)

18/08/02 10:39:17 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:151+152

18/08/02 10:39:17 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:0+151

18/08/02 10:39:17 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:39:17 INFO Executor: Finished task 1.0 in stage 4.0 (TID 8). 2309 bytes result sent to driver

18/08/02 10:39:17 INFO TaskSetManager: Finished task 1.0 in stage 4.0 (TID 8) in 11 ms on localhost (1/2)

18/08/02 10:39:17 INFO Executor: Finished task 0.0 in stage 4.0 (TID 7). 2309 bytes result sent to driver

18/08/02 10:39:17 INFO TaskSetManager: Finished task 0.0 in stage 4.0 (TID 7) in 17 ms on localhost (2/2)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Removed TaskSet 4.0, whose tasks have all completed, from pool

18/08/02 10:39:17 INFO DAGScheduler: ShuffleMapStage 4 (sortBy at :31) finished in 0.017 s

18/08/02 10:39:17 INFO DAGScheduler: looking for newly runnable stages

18/08/02 10:39:17 INFO DAGScheduler: running: Set()

18/08/02 10:39:17 INFO DAGScheduler: waiting: Set(ResultStage 5)

18/08/02 10:39:17 INFO DAGScheduler: failed: Set()

18/08/02 10:39:17 INFO DAGScheduler: Submitting ResultStage 5 (MapPartitionsRDD[21] at sortBy at :31), which has no missing parents

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_9 stored as values in memory (estimated size 3.4 KB, free 1496.3 KB)

18/08/02 10:39:17 INFO MemoryStore: Block broadcast_9_piece0 stored as bytes in memory (estimated size 2021.0 B, free 1498.3 KB)

18/08/02 10:39:17 INFO BlockManagerInfo: Added broadcast_9_piece0 in memory on localhost:43561 (size: 2021.0 B, free: 511.0 MB)

18/08/02 10:39:17 INFO SparkContext: Created broadcast 9 from broadcast at DAGScheduler.scala:1008

18/08/02 10:39:17 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 5 (MapPartitionsRDD[21] at sortBy at :31)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Adding task set 5.0 with 1 tasks

18/08/02 10:39:17 INFO TaskSetManager: Starting task 0.0 in stage 5.0 (TID 9, localhost, partition 0,NODE_LOCAL, 1894 bytes)

18/08/02 10:39:17 INFO Executor: Running task 0.0 in stage 5.0 (TID 9)

18/08/02 10:39:17 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks out of 2 blocks

18/08/02 10:39:17 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms

18/08/02 10:39:17 INFO Executor: Finished task 0.0 in stage 5.0 (TID 9). 1505 bytes result sent to driver

18/08/02 10:39:17 INFO TaskSetManager: Finished task 0.0 in stage 5.0 (TID 9) in 6 ms on localhost (1/1)

18/08/02 10:39:17 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool

18/08/02 10:39:17 INFO DAGScheduler: ResultStage 5 (take at :31) finished in 0.006 s

18/08/02 10:39:17 INFO DAGScheduler: Job 3 finished: take at :31, took 0.036589 s

bigdataResult: Array[(String, String, Int)] = Array((1003,大数据基础,100), (1007,大数据基础,100), (1004,大数据基础,99), (1002,大数据基础,94), (1006,大数据基础,93))

- Full run of example (2):

scala> val student_100 = m_math.filter(_._3 == 100).union(m_bigdata.filter(_._3 == 100))

student_100: org.apache.spark.rdd.RDD[(String, String, Int)] = UnionRDD[36] at union at :35

scala> val result = student_100.map(_._1).distinct

result: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[40] at distinct at :37

scala> val resultArray = result.collect

18/08/02 10:55:13 INFO SparkContext: Starting job: collect at :39

18/08/02 10:55:13 INFO DAGScheduler: Registering RDD 38 (distinct at :37)

18/08/02 10:55:13 INFO DAGScheduler: Got job 10 (collect at :39) with 4 output partitions

18/08/02 10:55:13 INFO DAGScheduler: Final stage: ResultStage 15 (collect at :39)

18/08/02 10:55:13 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 14)

18/08/02 10:55:13 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 14)

18/08/02 10:55:13 INFO DAGScheduler: Submitting ShuffleMapStage 14 (MapPartitionsRDD[38] at distinct at :37), which has no missing parents

18/08/02 10:55:13 INFO MemoryStore: Block broadcast_18 stored as values in memory (estimated size 5.7 KB, free 1508.5 KB)

18/08/02 10:55:13 INFO MemoryStore: Block broadcast_18_piece0 stored as bytes in memory (estimated size 3.1 KB, free 1511.6 KB)

18/08/02 10:55:13 INFO BlockManagerInfo: Added broadcast_18_piece0 in memory on localhost:43561 (size: 3.1 KB, free: 511.0 MB)

18/08/02 10:55:13 INFO SparkContext: Created broadcast 18 from broadcast at DAGScheduler.scala:1008

18/08/02 10:55:13 INFO DAGScheduler: Submitting 4 missing tasks from ShuffleMapStage 14 (MapPartitionsRDD[38] at distinct at :37)

18/08/02 10:55:13 INFO TaskSchedulerImpl: Adding task set 14.0 with 4 tasks

18/08/02 10:55:13 INFO TaskSetManager: Starting task 0.0 in stage 14.0 (TID 38, localhost, partition 0,ANY, 2250 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 1.0 in stage 14.0 (TID 39, localhost, partition 1,ANY, 2250 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 2.0 in stage 14.0 (TID 40, localhost, partition 2,ANY, 2253 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 3.0 in stage 14.0 (TID 41, localhost, partition 3,ANY, 2253 bytes)

18/08/02 10:55:13 INFO Executor: Running task 0.0 in stage 14.0 (TID 38)

18/08/02 10:55:13 INFO Executor: Running task 1.0 in stage 14.0 (TID 39)

18/08/02 10:55:13 INFO Executor: Running task 3.0 in stage 14.0 (TID 41)

18/08/02 10:55:13 INFO Executor: Running task 2.0 in stage 14.0 (TID 40)

18/08/02 10:55:13 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:0+133

18/08/02 10:55:13 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:151+152

18/08/02 10:55:13 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:133+134

18/08/02 10:55:13 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:0+151

18/08/02 10:55:13 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:55:13 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 10:55:13 INFO Executor: Finished task 1.0 in stage 14.0 (TID 39). 2311 bytes result sent to driver

18/08/02 10:55:13 INFO Executor: Finished task 0.0 in stage 14.0 (TID 38). 2311 bytes result sent to driver

18/08/02 10:55:13 INFO Executor: Finished task 2.0 in stage 14.0 (TID 40). 2311 bytes result sent to driver

18/08/02 10:55:13 INFO TaskSetManager: Finished task 0.0 in stage 14.0 (TID 38) in 14 ms on localhost (1/4)

18/08/02 10:55:13 INFO Executor: Finished task 3.0 in stage 14.0 (TID 41). 2311 bytes result sent to driver

18/08/02 10:55:13 INFO TaskSetManager: Finished task 2.0 in stage 14.0 (TID 40) in 14 ms on localhost (2/4)

18/08/02 10:55:13 INFO TaskSetManager: Finished task 1.0 in stage 14.0 (TID 39) in 14 ms on localhost (3/4)

18/08/02 10:55:13 INFO TaskSetManager: Finished task 3.0 in stage 14.0 (TID 41) in 14 ms on localhost (4/4)

18/08/02 10:55:13 INFO TaskSchedulerImpl: Removed TaskSet 14.0, whose tasks have all completed, from pool

18/08/02 10:55:13 INFO DAGScheduler: ShuffleMapStage 14 (distinct at :37) finished in 0.017 s

18/08/02 10:55:13 INFO DAGScheduler: looking for newly runnable stages

18/08/02 10:55:13 INFO DAGScheduler: running: Set()

18/08/02 10:55:13 INFO DAGScheduler: waiting: Set(ResultStage 15)

18/08/02 10:55:13 INFO DAGScheduler: failed: Set()

18/08/02 10:55:13 INFO DAGScheduler: Submitting ResultStage 15 (MapPartitionsRDD[40] at distinct at :37), which has no missing parents

18/08/02 10:55:13 INFO MemoryStore: Block broadcast_19 stored as values in memory (estimated size 3.3 KB, free 1514.9 KB)

18/08/02 10:55:13 INFO MemoryStore: Block broadcast_19_piece0 stored as bytes in memory (estimated size 1952.0 B, free 1516.8 KB)

18/08/02 10:55:13 INFO BlockManagerInfo: Added broadcast_19_piece0 in memory on localhost:43561 (size: 1952.0 B, free: 511.0 MB)

18/08/02 10:55:13 INFO SparkContext: Created broadcast 19 from broadcast at DAGScheduler.scala:1008

18/08/02 10:55:13 INFO DAGScheduler: Submitting 4 missing tasks from ResultStage 15 (MapPartitionsRDD[40] at distinct at :37)

18/08/02 10:55:13 INFO TaskSchedulerImpl: Adding task set 15.0 with 4 tasks

18/08/02 10:55:13 INFO TaskSetManager: Starting task 2.0 in stage 15.0 (TID 42, localhost, partition 2,NODE_LOCAL, 1894 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 3.0 in stage 15.0 (TID 43, localhost, partition 3,NODE_LOCAL, 1894 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 0.0 in stage 15.0 (TID 44, localhost, partition 0,PROCESS_LOCAL, 1894 bytes)

18/08/02 10:55:13 INFO TaskSetManager: Starting task 1.0 in stage 15.0 (TID 45, localhost, partition 1,PROCESS_LOCAL, 1894 bytes)

18/08/02 10:55:13 INFO Executor: Running task 3.0 in stage 15.0 (TID 43)

18/08/02 10:55:13 INFO Executor: Running task 2.0 in stage 15.0 (TID 42)

18/08/02 10:55:13 INFO Executor: Running task 0.0 in stage 15.0 (TID 44)

18/08/02 10:55:13 INFO Executor: Running task 1.0 in stage 15.0 (TID 45)

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 4 blocks

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Getting 3 non-empty blocks out of 4 blocks

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Getting 0 non-empty blocks out of 4 blocks

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Getting 0 non-empty blocks out of 4 blocks

18/08/02 10:55:13 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/02 10:55:13 INFO Executor: Finished task 1.0 in stage 15.0 (TID 45). 1165 bytes result sent to driver

18/08/02 10:55:13 INFO Executor: Finished task 0.0 in stage 15.0 (TID 44). 1165 bytes result sent to driver

18/08/02 10:55:13 INFO Executor: Finished task 3.0 in stage 15.0 (TID 43). 1172 bytes result sent to driver

18/08/02 10:55:13 INFO Executor: Finished task 2.0 in stage 15.0 (TID 42). 1179 bytes result sent to driver

18/08/02 10:55:13 INFO TaskSetManager: Finished task 1.0 in stage 15.0 (TID 45) in 5 ms on localhost (1/4)

18/08/02 10:55:13 INFO TaskSetManager: Finished task 0.0 in stage 15.0 (TID 44) in 5 ms on localhost (2/4)

18/08/02 10:55:13 INFO TaskSetManager: Finished task 3.0 in stage 15.0 (TID 43) in 7 ms on localhost (3/4)

18/08/02 10:55:13 INFO TaskSetManager: Finished task 2.0 in stage 15.0 (TID 42) in 7 ms on localhost (4/4)

18/08/02 10:55:13 INFO TaskSchedulerImpl: Removed TaskSet 15.0, whose tasks have all completed, from pool

18/08/02 10:55:13 INFO DAGScheduler: ResultStage 15 (collect at :39) finished in 0.006 s

18/08/02 10:55:13 INFO DAGScheduler: Job 10 finished: collect at :39, took 0.037964 s

resultArray: Array[String] = Array(1003, 1007, 1004)

- Full run of example (3):

scala> :paste

// Entering paste mode (ctrl-D to finish)

val math = sc.textFile("/tmp/result_math.txt")

val bigdata = sc.textFile("/tmp/result_bigdata.txt")

val m_math = math .map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val m_bigdata = bigdata.map{x=>val line=x.split(" ");(line(0),line(1),line(2).toInt)}

val all_scores = (m_math union m_bigdata).map(x=>(x._1,x._3)).reduceByKey((x,y) => x+y).collect

// Exiting paste mode, now interpreting.

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 336.5 KB, free 336.5 KB)

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 28.5 KB, free 365.0 KB)

18/08/02 16:35:14 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:51245 (size: 28.5 KB, free: 511.1 MB)

18/08/02 16:35:14 INFO SparkContext: Created broadcast 0 from textFile at :27

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 336.5 KB, free 701.5 KB)

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 28.5 KB, free 730.0 KB)

18/08/02 16:35:14 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost:51245 (size: 28.5 KB, free: 511.1 MB)

18/08/02 16:35:14 INFO SparkContext: Created broadcast 1 from textFile at :28

18/08/02 16:35:14 INFO FileInputFormat: Total input paths to process : 1

18/08/02 16:35:14 INFO FileInputFormat: Total input paths to process : 1

18/08/02 16:35:14 INFO SparkContext: Starting job: collect at :32

18/08/02 16:35:14 INFO DAGScheduler: Registering RDD 7 (map at :32)

18/08/02 16:35:14 INFO DAGScheduler: Got job 0 (collect at :32) with 4 output partitions

18/08/02 16:35:14 INFO DAGScheduler: Final stage: ResultStage 1 (collect at :32)

18/08/02 16:35:14 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

18/08/02 16:35:14 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

18/08/02 16:35:14 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[7] at map at :32), which has no missing parents

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 5.2 KB, free 735.2 KB)

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 2.9 KB, free 738.1 KB)

18/08/02 16:35:14 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost:51245 (size: 2.9 KB, free: 511.1 MB)

18/08/02 16:35:14 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1008

18/08/02 16:35:14 INFO DAGScheduler: Submitting 4 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[7] at map at :32)

18/08/02 16:35:14 INFO TaskSchedulerImpl: Adding task set 0.0 with 4 tasks

18/08/02 16:35:14 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, partition 0,ANY, 2250 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, partition 1,ANY, 2250 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, localhost, partition 2,ANY, 2253 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, localhost, partition 3,ANY, 2253 bytes)

18/08/02 16:35:14 INFO Executor: Running task 2.0 in stage 0.0 (TID 2)

18/08/02 16:35:14 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

18/08/02 16:35:14 INFO Executor: Running task 3.0 in stage 0.0 (TID 3)

18/08/02 16:35:14 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

18/08/02 16:35:14 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:0+133

18/08/02 16:35:14 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_math.txt:133+134

18/08/02 16:35:14 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:151+152

18/08/02 16:35:14 INFO HadoopRDD: Input split: hdfs://nn01.bmsoft.com.cn:8020/tmp/result_bigdata.txt:0+151

18/08/02 16:35:14 INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

18/08/02 16:35:14 INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

18/08/02 16:35:14 INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

18/08/02 16:35:14 INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

18/08/02 16:35:14 INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

18/08/02 16:35:14 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 16:35:14 INFO LineRecordReader: Found UTF-8 BOM and skipped it

18/08/02 16:35:14 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 2256 bytes result sent to driver

18/08/02 16:35:14 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 2256 bytes result sent to driver

18/08/02 16:35:14 INFO Executor: Finished task 2.0 in stage 0.0 (TID 2). 2256 bytes result sent to driver

18/08/02 16:35:14 INFO Executor: Finished task 3.0 in stage 0.0 (TID 3). 2256 bytes result sent to driver

18/08/02 16:35:14 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 180 ms on localhost (1/4)

18/08/02 16:35:14 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 183 ms on localhost (2/4)

18/08/02 16:35:14 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 204 ms on localhost (3/4)

18/08/02 16:35:14 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 182 ms on localhost (4/4)

18/08/02 16:35:14 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

18/08/02 16:35:14 INFO DAGScheduler: ShuffleMapStage 0 (map at :32) finished in 0.222 s

18/08/02 16:35:14 INFO DAGScheduler: looking for newly runnable stages

18/08/02 16:35:14 INFO DAGScheduler: running: Set()

18/08/02 16:35:14 INFO DAGScheduler: waiting: Set(ResultStage 1)

18/08/02 16:35:14 INFO DAGScheduler: failed: Set()

18/08/02 16:35:14 INFO DAGScheduler: Submitting ResultStage 1 (ShuffledRDD[8] at reduceByKey at :32), which has no missing parents

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 2.7 KB, free 740.8 KB)

18/08/02 16:35:14 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 1619.0 B, free 742.3 KB)

18/08/02 16:35:14 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost:51245 (size: 1619.0 B, free: 511.1 MB)

18/08/02 16:35:14 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1008

18/08/02 16:35:14 INFO DAGScheduler: Submitting 4 missing tasks from ResultStage 1 (ShuffledRDD[8] at reduceByKey at :32)

18/08/02 16:35:14 INFO TaskSchedulerImpl: Adding task set 1.0 with 4 tasks

18/08/02 16:35:14 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 4, localhost, partition 0,NODE_LOCAL, 1894 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 5, localhost, partition 1,NODE_LOCAL, 1894 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 2.0 in stage 1.0 (TID 6, localhost, partition 2,NODE_LOCAL, 1894 bytes)

18/08/02 16:35:14 INFO TaskSetManager: Starting task 3.0 in stage 1.0 (TID 7, localhost, partition 3,NODE_LOCAL, 1894 bytes)

18/08/02 16:35:14 INFO Executor: Running task 1.0 in stage 1.0 (TID 5)

18/08/02 16:35:14 INFO Executor: Running task 0.0 in stage 1.0 (TID 4)

18/08/02 16:35:14 INFO Executor: Running task 2.0 in stage 1.0 (TID 6)

18/08/02 16:35:14 INFO Executor: Running task 3.0 in stage 1.0 (TID 7)

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 6 ms

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks out of 4 blocks

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 7 ms

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 7 ms

18/08/02 16:35:14 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 6 ms

18/08/02 16:35:14 INFO Executor: Finished task 2.0 in stage 1.0 (TID 6). 1356 bytes result sent to driver

18/08/02 16:35:14 INFO Executor: Finished task 1.0 in stage 1.0 (TID 5). 1333 bytes result sent to driver

18/08/02 16:35:14 INFO TaskSetManager: Finished task 2.0 in stage 1.0 (TID 6) in 42 ms on localhost (1/4)

18/08/02 16:35:14 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 5) in 44 ms on localhost (2/4)

18/08/02 16:35:14 INFO Executor: Finished task 0.0 in stage 1.0 (TID 4). 1382 bytes result sent to driver

18/08/02 16:35:14 INFO Executor: Finished task 3.0 in stage 1.0 (TID 7). 1356 bytes result sent to driver

18/08/02 16:35:14 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 4) in 49 ms on localhost (3/4)

18/08/02 16:35:14 INFO TaskSetManager: Finished task 3.0 in stage 1.0 (TID 7) in 45 ms on localhost (4/4)

18/08/02 16:35:14 INFO DAGScheduler: ResultStage 1 (collect at :32) finished in 0.048 s

18/08/02 16:35:14 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

18/08/02 16:35:14 INFO DAGScheduler: Job 0 finished: collect at :32, took 0.417303 s

math: org.apache.spark.rdd.RDD[String] = /tmp/result_math.txt MapPartitionsRDD[1] at textFile at :27

bigdata: org.apache.spark.rdd.RDD[String] = /tmp/result_bigdata.txt MapPartitionsRDD[3] at textFile at :28

m_math: org.apache.spark.rdd.RDD[(String, String, Int)] = MapPartitionsRDD[4] at map at :29

m_bigdata: org.apache.spark.rdd.RDD[(String, String, Int)] = MapPartitionsRDD[5] at map at :30

all_scores: Array[(String, Int)] = Array((1005,184), (1012,175), (1001,180), (1009,173), (1002,188), (1006,173), (1010,164), (1003,200), (1007,190), (1008,187), (1011,170), (1004,199))
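The entries of all_scores above are not sorted by student ID. The likely reason is that collect concatenates the result partitions in partition order. This assumes reduceByKey used Spark's default HashPartitioner with the 4 partitions the log reports ("with 4 output partitions"), which places each key in partition key.hashCode mod 4, shifted to be non-negative. The plain-Scala sketch below reimplements that rule so it runs without Spark. The helper names nonNegativeMod and partitionOf are hypothetical and are not Spark APIs. The sketch groups the IDs 1001 to 1012 by partition. The resulting grouping matches the output blocks above: 1001, 1005, 1009 and 1012 share partition 0, which is why they come first.

```scala
// Sketch of HashPartitioner-style key placement.
// Assumption: default HashPartitioner with 4 partitions, as in the log above.
def nonNegativeMod(x: Int, mod: Int): Int = {
  val rawMod = x % mod
  rawMod + (if (rawMod < 0) mod else 0) // keep the result in [0, mod)
}

// Hypothetical helper: partition index a key would be shuffled to
def partitionOf(key: String, numPartitions: Int): Int =
  nonNegativeMod(key.hashCode, numPartitions)

// Student IDs as strings, like line(0) in the parsed records
val ids = (1001 to 1012).map(_.toString)

// Group the IDs by their target partition
val byPartition: Map[Int, Seq[String]] =
  ids.groupBy(id => partitionOf(id, 4))

for (p <- 0 until 4)
  println(s"partition $p: ${byPartition.getOrElse(p, Seq.empty).mkString(", ")}")
```

The order of keys within a partition is not modeled here. If a sorted result is needed, sort explicitly rather than relying on this order.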

scala>

- Execution of sample 4:

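Steps identical to sample 3 are omitted. Note that in this session all_scores is defined without the trailing .collect from sample 3, so it is still an RDD[(String, Int)]. Had it been collected, all_scores.map would return a local Array instead of the RDD shown below.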

scala> val aveScores = all_scores.map(x => (x._1,x._2/2.0))

aveScores: org.apache.spark.rdd.RDD[(String, Double)] = MapPartitionsRDD[10] at map at :37

scala> aveScores.collect

18/08/03 10:44:00 INFO SparkContext: Starting job: collect at :40

18/08/03 10:44:00 INFO DAGScheduler: Got job 2 (collect at :40) with 4 output partitions

18/08/03 10:44:00 INFO DAGScheduler: Final stage: ResultStage 5 (collect at :40)

18/08/03 10:44:00 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 4)

18/08/03 10:44:00 INFO DAGScheduler: Missing parents: List()

18/08/03 10:44:00 INFO DAGScheduler: Submitting ResultStage 5 (MapPartitionsRDD[10] at map at :37), which has no missing parents

18/08/03 10:44:00 INFO MemoryStore: Block broadcast_5 stored as values in memory (estimated size 3.1 KB, free 750.3 KB)

18/08/03 10:44:00 INFO MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 1842.0 B, free 752.1 KB)

18/08/03 10:44:00 INFO BlockManagerInfo: Added broadcast_5_piece0 in memory on localhost:59770 (size: 1842.0 B, free: 511.1 MB)

18/08/03 10:44:00 INFO SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1008

18/08/03 10:44:00 INFO DAGScheduler: Submitting 4 missing tasks from ResultStage 5 (MapPartitionsRDD[10] at map at :37)

18/08/03 10:44:00 INFO TaskSchedulerImpl: Adding task set 5.0 with 4 tasks

18/08/03 10:44:00 INFO TaskSetManager: Starting task 0.0 in stage 5.0 (TID 12, localhost, partition 0,NODE_LOCAL, 1894 bytes)

18/08/03 10:44:00 INFO TaskSetManager: Starting task 1.0 in stage 5.0 (TID 13, localhost, partition 1,NODE_LOCAL, 1894 bytes)

18/08/03 10:44:00 INFO TaskSetManager: Starting task 2.0 in stage 5.0 (TID 14, localhost, partition 2,NODE_LOCAL, 1894 bytes)

18/08/03 10:44:00 INFO TaskSetManager: Starting task 3.0 in stage 5.0 (TID 15, localhost, partition 3,NODE_LOCAL, 1894 bytes)

18/08/03 10:44:00 INFO Executor: Running task 0.0 in stage 5.0 (TID 12)

18/08/03 10:44:00 INFO Executor: Running task 1.0 in stage 5.0 (TID 13)

18/08/03 10:44:00 INFO Executor: Running task 3.0 in stage 5.0 (TID 15)

18/08/03 10:44:00 INFO Executor: Running task 2.0 in stage 5.0 (TID 14)

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Getting 4 non-empty blocks out of 4 blocks

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks out of 4 blocks

18/08/03 10:44:00 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 3 ms

18/08/03 10:44:00 INFO Executor: Finished task 3.0 in stage 5.0 (TID 15). 1370 bytes result sent to driver

18/08/03 10:44:00 INFO Executor: Finished task 2.0 in stage 5.0 (TID 14). 1370 bytes result sent to driver

18/08/03 10:44:00 INFO Executor: Finished task 0.0 in stage 5.0 (TID 12). 1397 bytes result sent to driver

18/08/03 10:44:00 INFO Executor: Finished task 1.0 in stage 5.0 (TID 13). 1340 bytes result sent to driver

18/08/03 10:44:00 INFO TaskSetManager: Finished task 3.0 in stage 5.0 (TID 15) in 8 ms on localhost (1/4)

18/08/03 10:44:00 INFO TaskSetManager: Finished task 0.0 in stage 5.0 (TID 12) in 12 ms on localhost (2/4)

18/08/03 10:44:00 INFO TaskSetManager: Finished task 2.0 in stage 5.0 (TID 14) in 10 ms on localhost (3/4)

18/08/03 10:44:00 INFO TaskSetManager: Finished task 1.0 in stage 5.0 (TID 13) in 13 ms on localhost (4/4)

18/08/03 10:44:00 INFO DAGScheduler: ResultStage 5 (collect at :40) finished in 0.013 s

18/08/03 10:44:00 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool

18/08/03 10:44:00 INFO DAGScheduler: Job 2 finished: collect at :40, took 0.020852 s

res2: Array[(String, Double)] = Array((1005,92.0), (1012,87.5), (1001,90.0), (1009,86.5), (1002,94.0), (1006,86.5), (1010,82.0), (1003,100.0), (1007,95.0), (1008,93.5), (1011,85.0), (1004,99.5))
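Dividing by 2.0 gives the correct average here only because every student has exactly one score in each of the two files. If that might not hold, a common alternative is to carry a (sum, count) pair per key. On a pair RDD this is typically written as mapValues(v => (v, 1)) followed by reduceByKey. The sketch below applies the same idea to local Scala collections so it runs without a cluster. The input pairs are hypothetical, and student 1003 is deliberately given only one score.

```scala
// Per-key average via (sum, count), emulated on local collections (no Spark).
// Hypothetical (id, score) pairs, shaped like the output of the map step in sample 3.
val scores = Seq(("1001", 90), ("1002", 94), ("1001", 90), ("1002", 94), ("1003", 100))

// Pair each score with a count of 1, then add pairs per key (the reduceByKey step)
val sumCount: Map[String, (Int, Int)] =
  scores
    .map { case (id, s) => (id, (s, 1)) }
    .groupBy(_._1)
    .map { case (id, vs) =>
      id -> vs.map(_._2).reduce((a, b) => (a._1 + b._1, a._2 + b._2))
    }

// Divide each sum by its own count instead of a fixed 2.0
val averages: Map[String, Double] =
  sumCount.map { case (id, (sum, n)) => id -> sum.toDouble / n }

println(averages.toSeq.sortBy(_._1))
```

With the fixed /2.0, student 1003 in this made-up input would have been reported as 50.0 instead of 100.0.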

Note: spark-shell also supports Tab-key completion.

Reference: the PPT courseware for Spark大数据技术与应用 (Spark Big Data Technology and Applications).