PyTorch for Beginners in Practice (1): CNN-Based Fashion MNIST Image Classification

1. Introduction

1.1. What is PyTorch

PyTorch is an open-source machine learning library for Python.

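1.2. What is a CNN

A convolutional neural network (CNN) is a deep learning model, a multilayer architecture related to the multilayer perceptron of classical artificial neural networks, that is commonly used to analyze visual images.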
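To make the "convolution" part concrete, here is a minimal pure-Python sketch of the sliding-window operation a convolutional layer performs on one channel. The function name `conv2d_valid` and the toy image and kernel are assumptions made for illustration only. Like nn.Conv2d, it does not flip the kernel (strictly speaking this is cross-correlation), and it uses no padding and a stride of 1.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the window whose top-left corner is (r, c)
            acc = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# Toy 4x4 image: dark on the left, bright on the right
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Toy 2x2 kernel that responds to a dark-to-bright vertical edge
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)
```

The feature map is largest in the column where the edge sits. A real convolutional layer learns the kernel values during training instead of having them written by hand.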

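1.3. What is MNIST

MNIST is an entry-level computer vision dataset. It contains images of handwritten digits, each paired with a label that says which digit the image shows.

Here we use Fashion MNIST as available on Kaggle instead. It contains images of clothing items and is harder than the original MNIST.

Dataset description and download: see the Fashion MNIST dataset page on Kaggle.

The data covers 10 categories of clothing: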

- 0 T-shirt/top

- 1 Trouser

- 2 Pullover

- 3 Dress

- 4 Coat

- 5 Sandal

- 6 Shirt

- 7 Sneaker

- 8 Bag

- 9 Ankle boot
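When inspecting predictions it is handy to turn a numeric label back into its category name. A minimal sketch of that lookup follows; the names `CLASS_NAMES` and `label_to_name` are hypothetical helpers introduced here and are not used elsewhere in this article.

```python
# Category names in label order, copied from the list above
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Return the clothing category for an integer label in 0..9."""
    return CLASS_NAMES[label]


print(label_to_name(7))
```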

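2. Analysis

The complete code is given at the end of the article.

2.1. CNN structure

This article uses a convolutional neural network with the following structure: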

| Layer | Input | Kernel | Output |
|---|---|---|---|
| INPUT | 28×28 | / | 28×28 |
| CONV1 | 28×28 | 5×5(padding=2) | 16×28×28 |
| POOL1 | 16×28×28 | 2×2 | 16×14×14 |
| CONV2 | 16×14×14 | 3×3 | 32×12×12 |
| CONV3 | 32×12×12 | 3×3 | 64×10×10 |
| POOL2 | 64×10×10 | 2×2 | 64×5×5 |
| FC | 64×5×5 | / | 10 |

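The layers are analyzed in detail below:

- Input layer INPUT: the raw data in the dataset are 28×28 images, so no extra adjustment is needed.

- Convolutional layer CONV1: uses a 5×5 kernel with 2 pixels of padding on each edge, so the 16 resulting feature maps keep the 28×28 size. Batch normalization follows, so that overly large values entering ReLU do not destabilize the network; ReLU is applied afterwards.

- Pooling layer POOL1: max pooling over a 2×2 window. The output is 16 feature maps of 14×14.

- Convolutional layer CONV2: uses a 3×3 kernel, again followed by batch normalization and ReLU. The output is 32 feature maps of 12×12.

- Convolutional layer CONV3: uses a 3×3 kernel, again followed by batch normalization and ReLU. The output is 64 feature maps of 10×10.

- Pooling layer POOL2: max pooling over a 2×2 window. The output is 64 feature maps of 5×5.

- Output layer FC: the input is 64 feature maps of 5×5. These are first flattened into a vector and then passed through a fully connected layer, giving a 10-dimensional vector.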
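The sizes in the table can be checked with the usual output-size formula, floor((size + 2 × padding − kernel) / stride) + 1. The sketch below applies it layer by layer; the helper names `conv_out` and `pool_out` are assumptions made for this illustration, and the pooling helper assumes the stride equals the window size, which is the default for nn.MaxPool2d.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Spatial size after a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Spatial size after max pooling, assuming stride == kernel."""
    return (size - kernel) // kernel + 1


size = 28                                   # INPUT: 28x28
sizes = []
size = conv_out(size, kernel=5, padding=2)  # CONV1 keeps 28
sizes.append(size)
size = pool_out(size, kernel=2)             # POOL1 halves to 14
sizes.append(size)
size = conv_out(size, kernel=3)             # CONV2 shrinks to 12
sizes.append(size)
size = conv_out(size, kernel=3)             # CONV3 shrinks to 10
sizes.append(size)
size = pool_out(size, kernel=2)             # POOL2 halves to 5
sizes.append(size)

# Number of inputs to the fully connected layer: 64 channels of 5x5
fc_inputs = 64 * size * size
print(sizes, fc_inputs)
```

The final value is the input width of the fully connected layer, which is why the model code uses 5*5*64.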

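2.2. CNN code

import torch.nn as nn

class CNN(nn.Module):
    def __init__(self):
        # A subclass of nn.Module must call the parent constructor in its own constructor
        super(CNN, self).__init__()
        # Convolutional layer conv1: 1 -> 16 channels, 28x28 kept by padding=2
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=5, padding=2),
            nn.BatchNorm2d(16),
            nn.ReLU())
        # Pooling layer pool1: 28x28 -> 14x14
        self.pool1 = nn.MaxPool2d(2)
        # Convolutional layer conv2: 16 -> 32 channels, 14x14 -> 12x12
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=3),
            nn.BatchNorm2d(32),
            nn.ReLU())
        # Convolutional layer conv3: 32 -> 64 channels, 12x12 -> 10x10
        self.conv3 = nn.Sequential(
            nn.Conv2d(32, 64, kernel_size=3),
            nn.BatchNorm2d(64),
            nn.ReLU())
        # Pooling layer pool2: 10x10 -> 5x5
        self.pool2 = nn.MaxPool2d(2)
        # Fully connected layer fc (output layer)
        self.fc = nn.Linear(5*5*64, 10)

    # Forward pass
    def forward(self, x):
        out = self.conv1(x)
        out = self.pool1(out)
        out = self.conv2(out)
        out = self.conv3(out)
        out = self.pool2(out)
        # Flatten into a vector for the fully connected layer
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out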

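2.3. Inspecting the data

Open the downloaded fashion-mnist_train.csv and fashion-mnist_test.csv:

The training set contains 60000 samples and the test set contains 10000 samples. Each sample consists of label, the actual clothing category of the image, and the grayscale values pixel1 to pixel784 of its pixels, i.e. a 28×28 image.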
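To see how the 784 flat columns correspond to a 28×28 image, the sketch below maps a column number to a (row, column) position. It assumes row-major order, which is what a plain reshape of the flat vector produces; the helper name `pixel_position` is hypothetical and only used here.

```python
def pixel_position(k, width=28):
    """Map the 1-based column number k (as in pixel1..pixel784) to (row, col)."""
    index = k - 1  # switch to 0-based indexing
    return index // width, index % width


print(pixel_position(1))    # first pixel of the first row
print(pixel_position(29))   # first pixel of the second row
print(pixel_position(784))  # last pixel of the last row
```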

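2.4. Loading the data

We build a FashionMNISTDataset class so that the data can be loaded with PyTorch's dataloader.

The class needs to define three methods:

- `__init__`: loads the data and sets three attributes, X (features), Y (labels) and len (number of samples). Here the original 784-dimensional vectors are reshaped into 28×28 matrices to serve as the features X.

- `__len__`: returns the number of samples.

- `__getitem__`: returns a (sample, label) tuple, i.e. one image and its category.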

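import numpy as np
import pandas as pd
from torch.utils.data import Dataset

class FashionMNISTDataset(Dataset):
    # transform is accepted in the signature but not used in this article
    def __init__(self, csv_file, transform = None):
        data = pd.read_csv(csv_file)
        # Columns 1..784 are pixels: reshape to (N, channels=1, 28, 28)
        self.X = np.array(data.iloc[:, 1:]).reshape(-1, 1, 28, 28).astype(float)
        # Column 0 is the label
        self.Y = np.array(data.iloc[:, 0])
        self.len = len(self.X)
        del data

    def __len__(self):
        return self.len

    def __getitem__(self, idx):
        item = self.X[idx]
        label = self.Y[idx]
        return (item, label)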

Next, read the data and create the training set train_dataset and the test set test_dataset:

from pathlib import Path

DATA_PATH = Path('./data/')

train_dataset = FashionMNISTDataset(csv_file = DATA_PATH / "fashion-mnist_train.csv")

test_dataset = FashionMNISTDataset(csv_file = DATA_PATH / "fashion-mnist_test.csv")

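Finally, load the data with dataloader.

About the hyperparameter BATCH_SIZE:

- It determines the number of samples used in each training step

- It is usually set to a power of 2 or a multiple of 2

- A larger value improves memory utilization and the parallel efficiency of matrix multiplication, reduces the number of iterations needed for one epoch (one pass over the full dataset), speeds up processing of the same amount of data, and may also reduce oscillation during training

- A larger value also needs more memory, and exceeding what the machine can provide may raise an OOM (out of memory) error

shuffle controls whether the data are shuffled. Usually only the training set needs shuffling; the test set does not.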

from torch.utils.data import DataLoader as dataloader

BATCH_SIZE = 256

train_loader = dataloader(dataset=train_dataset, batch_size=BATCH_SIZE, shuffle=True)

test_loader = dataloader(dataset=test_dataset, batch_size=BATCH_SIZE, shuffle=False)
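As a quick check of what this batch size means for one epoch, the arithmetic can be sketched as follows. It assumes the loader keeps the final, smaller batch, which is the DataLoader default (drop_last=False); the variable names are illustrative only.

```python
import math

num_samples = 60000  # size of the training set
batch_size = 256

# The last batch is smaller when num_samples is not a multiple of batch_size
iterations_per_epoch = math.ceil(num_samples / batch_size)
last_batch_size = num_samples - (iterations_per_epoch - 1) * batch_size

print(iterations_per_epoch, last_batch_size)
```

So one epoch takes 235 iterations, not 60000 // 256 = 234, because the remaining 96 samples form one more batch.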

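2.5. Training

2.5.1. Getting started

First instantiate a CNN object and choose whether to train on the CPU or the GPU:

import torch

cnn = CNN()
# Fall back to the CPU unless a CUDA device is available
DEVICE = torch.device("cpu")
if torch.cuda.is_available():
    DEVICE = torch.device("cuda")
cnn = cnn.to(DEVICE)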

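2.5.2. Loss function

This is a multi-class classification problem, so we use the cross-entropy loss. The network outputs raw scores (logits); nn.CrossEntropyLoss applies softmax internally (in log form) to turn them into a probability distribution before computing the loss, which is why the model has no explicit softmax layer.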

criterion = nn.CrossEntropyLoss().to(DEVICE)
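For a single sample, the quantity this loss computes can be sketched in pure Python as the log of the summed exponentials minus the score of the true class. This is an illustration under stated assumptions, not PyTorch's implementation; the function name `cross_entropy` is hypothetical, and nn.CrossEntropyLoss additionally averages over the batch by default.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of raw scores `logits` against the true class index `target`."""
    # Subtract the maximum before exponentiating for numerical stability
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    # Equivalent to -log(softmax(logits)[target])
    return log_sum_exp - logits[target]


# An untrained network that scores all 10 classes equally has loss log(10)
uniform_loss = cross_entropy([0.0] * 10, target=3)
# A confident, correct prediction has a loss close to 0
confident_loss = cross_entropy([10.0, 0.0, 0.0], target=0)
print(uniform_loss, confident_loss)
```

A first-iteration loss near log(10), about 2.30, is therefore what to expect before the network has learned anything.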

2.5.3. Optimizer

The Adam optimizer gives good results in most cases:

LEARNING_RATE = 0.01

optimizer = torch.optim.Adam(cnn.parameters(), lr=LEARNING_RATE)
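For intuition about what LEARNING_RATE controls, here is a pure-Python sketch of the Adam update rule for a single scalar parameter. It is a simplified illustration, not the torch.optim.Adam implementation; the function name `adam_step` and the toy objective f(x) = x² are assumptions, while the default values of beta1, beta2 and eps match PyTorch's defaults.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero start
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Minimize f(x) = x^2, whose gradient is 2x, starting from x = 1
x, m, v = 1.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2 * x
    x, m, v = adam_step(x, grad, m, v, t)
print(x)
```

On the first step the bias-corrected ratio is almost exactly the sign of the gradient, so the parameter moves by about lr regardless of the gradient's magnitude.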

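2.5.4. Running the training

Note that TOTAL_EPOCHS, the number of training epochs, is set to 5 here to keep the demonstration short; increase it for better results.

TOTAL_EPOCHS = 5
losses = []
for epoch in range(TOTAL_EPOCHS):
    # Within each epoch, iterate over the training set one mini-batch at a time
    for i, (images, labels) in enumerate(train_loader):
        images = images.float().to(DEVICE)
        labels = labels.to(DEVICE)
        # Reset the gradients left over from the previous step
        optimizer.zero_grad()
        outputs = cnn(images)
        # Compute the loss
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        # Record the loss of this mini-batch as a plain Python float
        losses.append(loss.item())
        if (i+1) % 100 == 0:
            # len(train_loader) is the number of mini-batches per epoch
            print('Epoch : %d/%d, Iter : %d/%d, Loss: %.4f' % (epoch+1, TOTAL_EPOCHS, i+1, len(train_loader), loss.item()))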

2.5.5.结果评估

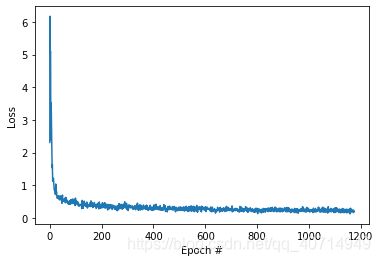

可视化训练结果:

plt.xlabel('Epoch #')

plt.ylabel('Loss')

plt.plot(losses)

plt.show()

torch.save(cnn.state_dict(), "fm-cnn3.pth")

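The final evaluation proceeds as follows:

- Switch the CNN model to eval mode. eval mode is the counterpart of train mode: the former is for model evaluation and the latter for model training. This matters here because the BatchNorm layers use their running statistics in eval mode.

- Feed the images through the network to get the result outputs.

- Take the class with the highest score in outputs as the predicted class of each image, i.e. pick the "most likely" category. Softmax does not change which entry is largest, so it can be skipped here.

- Check whether each prediction is correct and compute the overall accuracy.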

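cnn.eval()
correct = 0
total = 0
# Gradients are not needed during evaluation
with torch.no_grad():
    for images, labels in test_loader:
        images = images.float().to(DEVICE)
        outputs = cnn(images).cpu()
        # Index of the largest score along the class dimension
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
print('Accuracy: %.4f %%' % (100 * correct / total))

On the author's machine the resulting accuracy was 91.63%.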
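The prediction and accuracy steps of the evaluation can also be followed by hand on a tiny example. The pure-Python sketch below uses made-up scores for 4 images and 3 classes; the helper names `argmax` and `accuracy` and all the numbers are illustrative assumptions, not output of the model above.

```python
def argmax(scores):
    """Index of the largest score (the first one in case of ties)."""
    best = 0
    for i, score in enumerate(scores):
        if score > scores[best]:
            best = i
    return best


def accuracy(batch_scores, labels):
    """Percentage of samples whose highest-scoring class equals the label."""
    correct = sum(
        1 for scores, label in zip(batch_scores, labels) if argmax(scores) == label
    )
    return 100.0 * correct / len(labels)


# Made-up scores for 4 images over 3 classes
batch_scores = [
    [2.0, 0.5, -1.0],  # predicted class 0
    [0.1, 0.2, 3.0],   # predicted class 2
    [1.5, 1.0, 0.0],   # predicted class 0
    [0.0, 4.0, 1.0],   # predicted class 1
]
labels = [0, 2, 1, 1]  # the third image is misclassified
result = accuracy(batch_scores, labels)
print(result)
```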

3. Complete code

# -*- coding: utf-8 -*-
from pathlib import Path

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
from torch.utils.data import Dataset
from torch.utils.data import DataLoader as dataloader

class FashionMNISTDataset(Dataset):
    # transform is accepted in the signature but not used in this article
    def __init__(self, csv_file, transform = None):
        data = pd.read_csv(csv_file)
        # Columns 1..784 are pixels: reshape to (N, channels=1, 28, 28)
        self.X = np.array(data.iloc[:, 1:]).reshape(-1, 1, 28, 28).astype(float)
        # Column 0 is the label
        self.Y = np.array(data.iloc[:, 0])
        self.len = len(self.X)
        del data

    def __len__(self):
        return self.len

    def __getitem__(self, idx):
        item = self.X[idx]
        label = self.Y[idx]
        return (item, label)

class CNN(nn.Module):
    def __init__(self):
        # A subclass of nn.Module must call the parent constructor in its own constructor
        super(CNN, self).__init__()
        # Convolutional layer conv1
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=5, padding=2),
            nn.BatchNorm2d(16),
            nn.ReLU())
        # Pooling layer pool1
        self.pool1 = nn.MaxPool2d(2)
        # Convolutional layer conv2
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=3),
            nn.BatchNorm2d(32),
            nn.ReLU())
        # Convolutional layer conv3
        self.conv3 = nn.Sequential(
            nn.Conv2d(32, 64, kernel_size=3),
            nn.BatchNorm2d(64),
            nn.ReLU())
        # Pooling layer pool2
        self.pool2 = nn.MaxPool2d(2)
        # Fully connected layer fc (output layer)
        self.fc = nn.Linear(5*5*64, 10)

    # Forward pass
    def forward(self, x):
        out = self.conv1(x)
        out = self.pool1(out)
        out = self.conv2(out)
        out = self.conv3(out)
        out = self.pool2(out)
        # Flatten into a vector for the fully connected layer
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out

DATA_PATH = Path('./data/')
train_dataset = FashionMNISTDataset(csv_file = DATA_PATH / "fashion-mnist_train.csv")
test_dataset = FashionMNISTDataset(csv_file = DATA_PATH / "fashion-mnist_test.csv")

BATCH_SIZE = 256
train_loader = dataloader(dataset=train_dataset,
                          batch_size=BATCH_SIZE,
                          shuffle=True)
test_loader = dataloader(dataset=test_dataset,
                         batch_size=BATCH_SIZE,
                         shuffle=False)

cnn = CNN()
# Fall back to the CPU unless a CUDA device is available
DEVICE = torch.device("cpu")
if torch.cuda.is_available():
    DEVICE = torch.device("cuda")
cnn = cnn.to(DEVICE)

criterion = nn.CrossEntropyLoss().to(DEVICE)
LEARNING_RATE = 0.01
optimizer = torch.optim.Adam(cnn.parameters(), lr=LEARNING_RATE)

TOTAL_EPOCHS = 5
losses = []
for epoch in range(TOTAL_EPOCHS):
    # Within each epoch, iterate over the training set one mini-batch at a time
    for i, (images, labels) in enumerate(train_loader):
        images = images.float().to(DEVICE)
        labels = labels.to(DEVICE)
        # Reset the gradients left over from the previous step
        optimizer.zero_grad()
        outputs = cnn(images)
        # Compute the loss
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        # Record the loss of this mini-batch as a plain Python float
        losses.append(loss.item())
        if (i+1) % 100 == 0:
            # len(train_loader) is the number of mini-batches per epoch
            print('Epoch : %d/%d, Iter : %d/%d, Loss: %.4f' % (epoch+1, TOTAL_EPOCHS, i+1, len(train_loader), loss.item()))

# losses holds one value per mini-batch, so the x axis counts iterations
plt.xlabel('Iteration #')
plt.ylabel('Loss')
plt.plot(losses)
plt.show()

# Save the trained weights
torch.save(cnn.state_dict(), "fm-cnn3.pth")

cnn.eval()
correct = 0
total = 0
# Gradients are not needed during evaluation
with torch.no_grad():
    for images, labels in test_loader:
        images = images.float().to(DEVICE)
        outputs = cnn(images).cpu()
        # Index of the largest score along the class dimension
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
print('Accuracy: %.4f %%' % (100 * correct / total))