Flink 1.9 Source Code Study 01: Startup Source Analysis

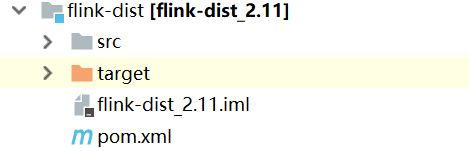

1. First, locate the relevant module, flink-dist:

2. There we find many scripts, all of them startup-related commands:

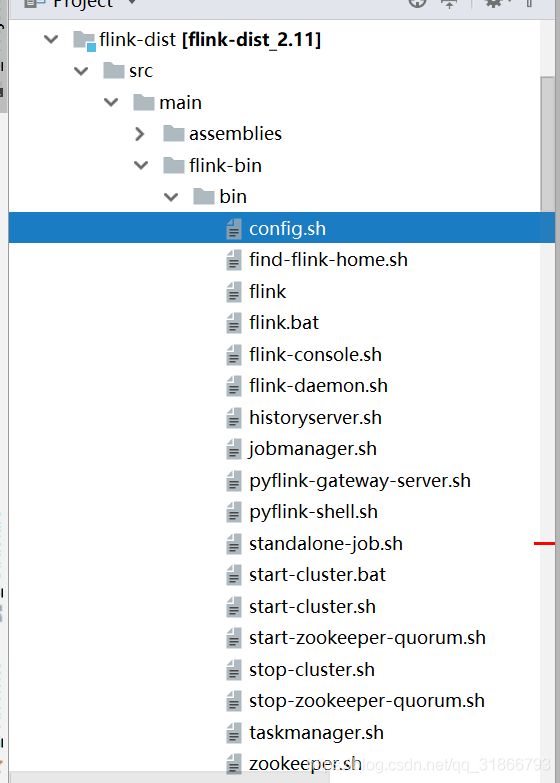

We are looking for the startup command. In standalone mode the cluster is started with the start-cluster.sh script, so let's open it:

#!/usr/bin/env bash

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

################################################################################

bin=`dirname "$0"`

bin=`cd "$bin"; pwd`

. "$bin"/config.sh

# Start the JobManager instance(s)

shopt -s nocasematch

if [[ $HIGH_AVAILABILITY == "zookeeper" ]]; then

# HA Mode

readMasters

echo "Starting HA cluster with ${#MASTERS[@]} masters."

for ((i=0;i<${#MASTERS[@]};++i)); do

master=${MASTERS[i]}

webuiport=${WEBUIPORTS[i]}

if [ ${MASTERS_ALL_LOCALHOST} = true ] ; then

"${FLINK_BIN_DIR}"/jobmanager.sh start "${master}" "${webuiport}"

else

ssh -n $FLINK_SSH_OPTS $master -- "nohup /bin/bash -l \"${FLINK_BIN_DIR}/jobmanager.sh\" start ${master} ${webuiport} &"

fi

done

else

echo "Starting cluster."

# Start single JobManager on this machine

"$FLINK_BIN_DIR"/jobmanager.sh start

fi

shopt -u nocasematch

# Start TaskManager instance(s)

TMSlaves start

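In short, this script does two things: it runs jobmanager.sh (once per master in HA mode, otherwise once on the local machine), and it calls the TMSlaves function defined in config.sh.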
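The branch that start-cluster.sh takes depends on a case-insensitive comparison of `HIGH_AVAILABILITY` against "zookeeper", which is why the script wraps the test in `shopt -s nocasematch` / `shopt -u nocasematch`. A minimal sketch of that check follows; `cluster_mode` is a hypothetical helper written for illustration and is not part of Flink.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Flink): reports which branch
# start-cluster.sh would take for a given high-availability setting.
cluster_mode() {
    local ha="$1"
    local mode="single"
    # nocasematch makes the == test inside [[ ]] case-insensitive, so
    # "zookeeper", "ZooKeeper" and "ZOOKEEPER" all select the HA branch.
    shopt -s nocasematch
    if [[ $ha == "zookeeper" ]]; then
        mode="ha"
    fi
    # Restore the default so later comparisons are case-sensitive again.
    shopt -u nocasematch
    echo "$mode"
}

cluster_mode "ZooKeeper"   # prints: ha
cluster_mode "NONE"        # prints: single
```

Turning the option off again right after the test, as the original script does, keeps the case-insensitive behaviour from leaking into the rest of the script.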

Let's first look at what jobmanager.sh does:

#!/usr/bin/env bash

# Start/stop a Flink JobManager.

USAGE="Usage: jobmanager.sh ((start|start-foreground) [host] [webui-port])|stop|stop-all"

STARTSTOP=$1

HOST=$2 # optional when starting multiple instances

WEBUIPORT=$3 # optional when starting multiple instances

if [[ $STARTSTOP != "start" ]] && [[ $STARTSTOP != "start-foreground" ]] && [[ $STARTSTOP != "stop" ]] && [[ $STARTSTOP != "stop-all" ]]; then

echo $USAGE

exit 1

fi

bin=`dirname "$0"`

bin=`cd "$bin"; pwd`

. "$bin"/config.sh

ENTRYPOINT=standalonesession

if [[ $STARTSTOP == "start" ]] || [[ $STARTSTOP == "start-foreground" ]]; then

if [ ! -z "${FLINK_JM_HEAP_MB}" ] && [ "${FLINK_JM_HEAP}" == 0 ]; then

echo "used deprecated key \`${KEY_JOBM_MEM_MB}\`, please replace with key \`${KEY_JOBM_MEM_SIZE}\`"

else

flink_jm_heap_bytes=$(parseBytes ${FLINK_JM_HEAP})

FLINK_JM_HEAP_MB=$(getMebiBytes ${flink_jm_heap_bytes})

fi

if [[ ! ${FLINK_JM_HEAP_MB} =~ $IS_NUMBER ]] || [[ "${FLINK_JM_HEAP_MB}" -lt "0" ]]; then

echo "[ERROR] Configured JobManager memory size is not a valid value. Please set '${KEY_JOBM_MEM_SIZE}' in ${FLINK_CONF_FILE}."

exit 1

fi

if [ "${FLINK_JM_HEAP_MB}" -gt "0" ]; then

export JVM_ARGS="$JVM_ARGS -Xms"$FLINK_JM_HEAP_MB"m -Xmx"$FLINK_JM_HEAP_MB"m"

fi

# Add JobManager-specific JVM options

export FLINK_ENV_JAVA_OPTS="${FLINK_ENV_JAVA_OPTS} ${FLINK_ENV_JAVA_OPTS_JM}"

# Startup parameters

args=("--configDir" "${FLINK_CONF_DIR}" "--executionMode" "cluster")

if [ ! -z $HOST ]; then

args+=("--host")

args+=("${HOST}")

fi

if [ ! -z $WEBUIPORT ]; then

args+=("--webui-port")

args+=("${WEBUIPORT}")

fi

fi

if [[ $STARTSTOP == "start-foreground" ]]; then

exec "${FLINK_BIN_DIR}"/flink-console.sh $ENTRYPOINT "${args[@]}"

else

"${FLINK_BIN_DIR}"/flink-daemon.sh $STARTSTOP $ENTRYPOINT "${args[@]}"

fi

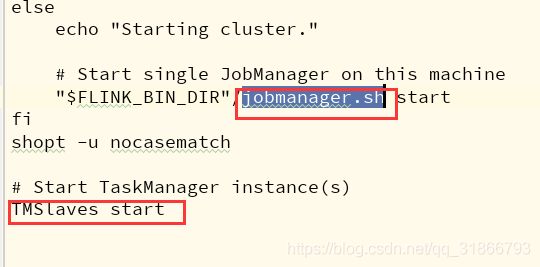
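The argument assembly in jobmanager.sh can be looked at in isolation. The sketch below mirrors how the script builds the `args` array, always passing `--configDir` and `--executionMode` and adding `--host` and `--webui-port` only when they are given. `build_jm_args` and the paths and host names used are hypothetical; in the real script the config directory comes from config.sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring how jobmanager.sh assembles its arguments.
# The config directory is passed in explicitly here instead of being
# provided by config.sh.
build_jm_args() {
    local conf_dir="$1" host="$2" webui_port="$3"
    local args=("--configDir" "$conf_dir" "--executionMode" "cluster")
    # The optional values are appended only when they are non-empty.
    if [ -n "$host" ]; then
        args+=("--host" "$host")
    fi
    if [ -n "$webui_port" ]; then
        args+=("--webui-port" "$webui_port")
    fi
    # Print one argument per line so the array boundaries stay visible.
    printf '%s\n' "${args[@]}"
}

# Single JobManager: only the two fixed options are produced.
build_jm_args /opt/flink/conf
# HA-style call: host and web UI port are appended.
build_jm_args /opt/flink/conf master1 8081
```

Using an array rather than a plain string lets the script expand `"${args[@]}"` later without values containing spaces being split.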

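As we can see, besides loading some configuration parameters, jobmanager.sh ends by running flink-daemon.sh (or flink-console.sh for start-foreground), passing standalonesession as the daemon name. Let's keep following the trail: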

-->>

#!/usr/bin/env bash

# Start/stop a Flink daemon.

USAGE="Usage: flink-daemon.sh (start|stop|stop-all) (taskexecutor|zookeeper|historyserver|standalonesession|standalonejob) [args]"

STARTSTOP=$1

DAEMON=$2

ARGS=("${@:3}") # get remaining arguments as array

bin=`dirname "$0"`

bin=`cd "$bin"; pwd`

. "$bin"/config.sh

case $DAEMON in

(taskexecutor)

CLASS_TO_RUN=org.apache.flink.runtime.taskexecutor.TaskManagerRunner

;;

(zookeeper)

CLASS_TO_RUN=org.apache.flink.runtime.zookeeper.FlinkZooKeeperQuorumPeer

;;

(historyserver)

CLASS_TO_RUN=org.apache.flink.runtime.webmonitor.history.HistoryServer

;;

(standalonesession)

CLASS_TO_RUN=org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint

;;

(standalonejob)

CLASS_TO_RUN=org.apache.flink.container.entrypoint.StandaloneJobClusterEntryPoint

;;

(*)

echo "Unknown daemon '${DAEMON}'. $USAGE."

exit 1

;;

esac

if [ "$FLINK_IDENT_STRING" = "" ]; then

FLINK_IDENT_STRING="$USER"

fi

FLINK_TM_CLASSPATH=`constructFlinkClassPath`

pid=$FLINK_PID_DIR/flink-$FLINK_IDENT_STRING-$DAEMON.pid

mkdir -p "$FLINK_PID_DIR"

# Log files for daemons are indexed from the process ID's position in the PID

# file. The following lock prevents a race condition during daemon startup

# when multiple daemons read, index, and write to the PID file concurrently.

# The lock is created on the PID directory since a lock file cannot be safely

# removed. The daemon is started with the lock closed and the lock remains

# active in this script until the script exits.

command -v flock >/dev/null 2>&1

if [[ $? -eq 0 ]]; then

exec 200<"$FLINK_PID_DIR"

flock 200

fi

# Ascending ID depending on number of lines in pid file.

# This allows us to start multiple daemon of each type.

id=$([ -f "$pid" ] && echo $(wc -l < "$pid") || echo "0")

FLINK_LOG_PREFIX="${FLINK_LOG_DIR}/flink-${FLINK_IDENT_STRING}-${DAEMON}-${id}-${HOSTNAME}"

log="${FLINK_LOG_PREFIX}.log"

out="${FLINK_LOG_PREFIX}.out"

log_setting=("-Dlog.file=${log}" "-Dlog4j.configuration=file:${FLINK_CONF_DIR}/log4j.properties" "-Dlogback.configurationFile=file:${FLINK_CONF_DIR}/logback.xml")

JAVA_VERSION=$(${JAVA_RUN} -version 2>&1 | sed 's/.*version "\(.*\)\.\(.*\)\..*"/\1\2/; 1q')

# Only set JVM 8 arguments if we have correctly extracted the version

if [[ ${JAVA_VERSION} =~ ${IS_NUMBER} ]]; then

if [ "$JAVA_VERSION" -lt 18 ]; then

JVM_ARGS="$JVM_ARGS -XX:MaxPermSize=256m"

fi

fi

case $STARTSTOP in

(start)

# Rotate log files

rotateLogFilesWithPrefix "$FLINK_LOG_DIR" "$FLINK_LOG_PREFIX"

# Print a warning if daemons are already running on host

if [ -f "$pid" ]; then

active=()

while IFS='' read -r p || [[ -n "$p" ]]; do

kill -0 $p >/dev/null 2>&1

if [ $? -eq 0 ]; then

active+=($p)

fi

done < "${pid}"

count="${#active[@]}"

if [ ${count} -gt 0 ]; then

echo "[INFO] $count instance(s) of $DAEMON are already running on $HOSTNAME."

fi

fi

# Evaluate user options for local variable expansion

FLINK_ENV_JAVA_OPTS=$(eval echo ${FLINK_ENV_JAVA_OPTS})

echo "Starting $DAEMON daemon on host $HOSTNAME."

$JAVA_RUN $JVM_ARGS ${FLINK_ENV_JAVA_OPTS} "${log_setting[@]}" -classpath "`manglePathList "$FLINK_TM_CLASSPATH:$INTERNAL_HADOOP_CLASSPATHS"`" ${CLASS_TO_RUN} "${ARGS[@]}" > "$out" 200<&- 2>&1 < /dev/null &

mypid=$!

# Add to pid file if successful start

if [[ ${mypid} =~ ${IS_NUMBER} ]] && kill -0 $mypid > /dev/null 2>&1 ; then

echo $mypid >> "$pid"

else

echo "Error starting $DAEMON daemon."

exit 1

fi

;;

(stop)

if [ -f "$pid" ]; then

# Remove last in pid file

to_stop=$(tail -n 1 "$pid")

if [ -z $to_stop ]; then

rm "$pid" # If all stopped, clean up pid file

echo "No $DAEMON daemon to stop on host $HOSTNAME."

else

sed \$d "$pid" > "$pid.tmp" # all but last line

# If all stopped, clean up pid file

[ $(wc -l < "$pid.tmp") -eq 0 ] && rm "$pid" "$pid.tmp" || mv "$pid.tmp" "$pid"

if kill -0 $to_stop > /dev/null 2>&1; then

echo "Stopping $DAEMON daemon (pid: $to_stop) on host $HOSTNAME."

kill $to_stop

else

echo "No $DAEMON daemon (pid: $to_stop) is running anymore on $HOSTNAME."

fi

fi

else

echo "No $DAEMON daemon to stop on host $HOSTNAME."

fi

;;

(stop-all)

if [ -f "$pid" ]; then

mv "$pid" "${pid}.tmp"

while read to_stop; do

if kill -0 $to_stop > /dev/null 2>&1; then

echo "Stopping $DAEMON daemon (pid: $to_stop) on host $HOSTNAME."

kill $to_stop

else

echo "Skipping $DAEMON daemon (pid: $to_stop), because it is not running anymore on $HOSTNAME."

fi

done < "${pid}.tmp"

rm "${pid}.tmp"

fi

;;

(*)

echo "Unexpected argument '$STARTSTOP'. $USAGE."

exit 1

;;

esac

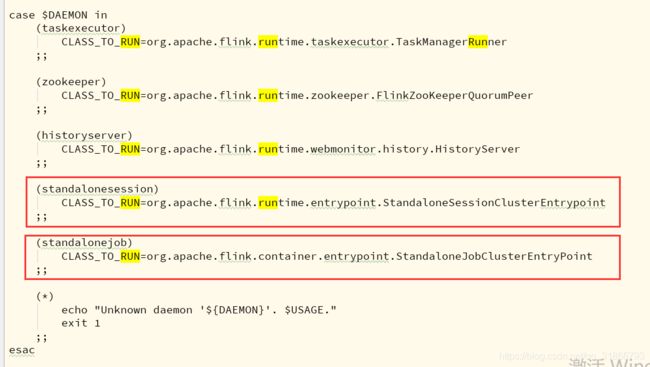

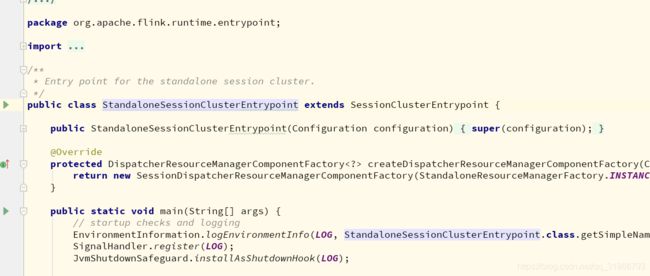

As the screenshots above show, flink-daemon.sh launches the entry class that matches the daemon name. For standalonesession that is the class below; we locate it now and will analyze it in detail later:

org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint

--->>>

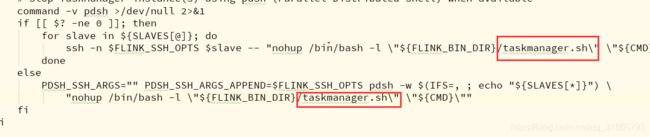
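The core of flink-daemon.sh is the dispatch from a daemon name to a Java main class. The sketch below isolates that `case` statement in a function so it can be tried on its own. The class names are copied from the script shown earlier, while `class_for_daemon` itself is a hypothetical helper and not something Flink provides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns the main class flink-daemon.sh would run
# for a daemon name, using the mapping from the script's case statement.
class_for_daemon() {
    case "$1" in
        taskexecutor)
            echo "org.apache.flink.runtime.taskexecutor.TaskManagerRunner" ;;
        zookeeper)
            echo "org.apache.flink.runtime.zookeeper.FlinkZooKeeperQuorumPeer" ;;
        historyserver)
            echo "org.apache.flink.runtime.webmonitor.history.HistoryServer" ;;
        standalonesession)
            echo "org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint" ;;
        standalonejob)
            echo "org.apache.flink.container.entrypoint.StandaloneJobClusterEntryPoint" ;;
        *)
            # Same outcome as the original script: unknown names are an error.
            echo "Unknown daemon '$1'" >&2
            return 1 ;;
    esac
}

class_for_daemon standalonesession
class_for_daemon taskexecutor
```

Because jobmanager.sh sets `ENTRYPOINT=standalonesession`, the first call corresponds to the JobManager path traced above.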

Next, let's look at how the TaskManagers are started, through the TMSlaves start call defined in config.sh:

# TMSlaves start|stop

TMSlaves() {

CMD=$1

readSlaves

if [ ${SLAVES_ALL_LOCALHOST} = true ] ; then

# all-local setup

for slave in ${SLAVES[@]}; do

"${FLINK_BIN_DIR}"/taskmanager.sh "${CMD}"

done

else

# non-local setup

# Stop TaskManager instance(s) using pdsh (Parallel Distributed Shell) when available

command -v pdsh >/dev/null 2>&1

if [[ $? -ne 0 ]]; then

for slave in ${SLAVES[@]}; do

ssh -n $FLINK_SSH_OPTS $slave -- "nohup /bin/bash -l \"${FLINK_BIN_DIR}/taskmanager.sh\" \"${CMD}\" &"

done

else

PDSH_SSH_ARGS="" PDSH_SSH_ARGS_APPEND=$FLINK_SSH_OPTS pdsh -w $(IFS=, ; echo "${SLAVES[*]}") \

"nohup /bin/bash -l \"${FLINK_BIN_DIR}/taskmanager.sh\" \"${CMD}\""

fi

fi

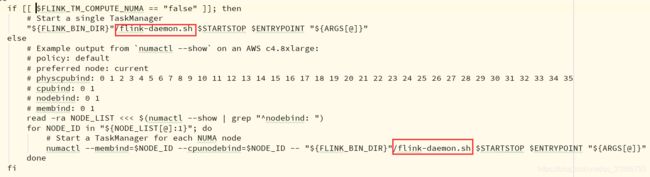

}进入taskManager.sh脚本,最后发现还是调用了flink-daemon.sh脚本

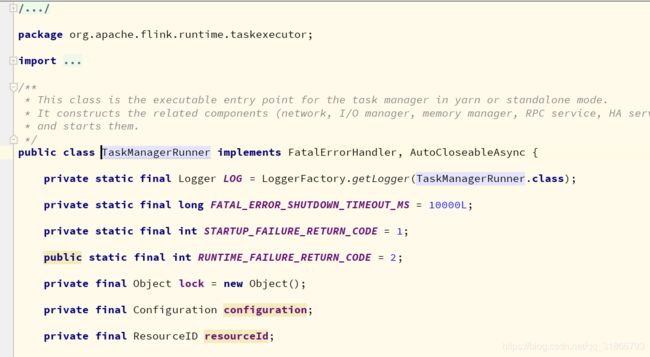
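One detail in TMSlaves worth isolating is how the host list for pdsh is built: `$(IFS=, ; echo "${SLAVES[*]}")` joins the array elements with commas inside a subshell, so the changed `IFS` does not affect the rest of the script. A minimal sketch of the same idiom follows; `join_hosts` and the worker names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: joins host names with commas, the form that
# TMSlaves passes to "pdsh -w".
join_hosts() {
    local hosts=("$@")
    # "${hosts[*]}" joins the elements with the first character of IFS.
    # The parentheses run this in a subshell, so IFS is unchanged outside.
    ( IFS=,; echo "${hosts[*]}" )
}

join_hosts worker1 worker2 worker3   # prints: worker1,worker2,worker3
```

When pdsh is not installed, TMSlaves falls back to a plain loop that runs one ssh command per host, so the joined list is only needed on the pdsh path.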

The entry class that is ultimately invoked:

CLASS_TO_RUN=org.apache.flink.runtime.taskexecutor.TaskManagerRunner

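Summary: starting from the start-cluster.sh script, we found that jobmanager.sh and taskmanager.sh both end up calling flink-daemon.sh, which launches the corresponding entry class and thereby brings up the cluster.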
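The whole chain can be condensed into a small tracer that prints the path for each component. This is only a sketch: `trace_startup` is a hypothetical function, and the `taskexecutor` daemon name for taskmanager.sh is inferred from the flink-daemon.sh mapping, since taskmanager.sh itself is not reproduced in this article.

```shell
#!/usr/bin/env bash
# Hypothetical tracer: prints the script chain for one component.
# Nothing is started; it only documents the call path traced above.
trace_startup() {
    local wrapper daemon class
    case "$1" in
        jobmanager)
            wrapper="jobmanager.sh"
            daemon="standalonesession"
            class="org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint" ;;
        taskmanager)
            wrapper="taskmanager.sh"
            # Assumption: inferred from the taskexecutor entry in flink-daemon.sh.
            daemon="taskexecutor"
            class="org.apache.flink.runtime.taskexecutor.TaskManagerRunner" ;;
        *)
            echo "unknown component '$1'" >&2
            return 1 ;;
    esac
    echo "start-cluster.sh -> $wrapper -> flink-daemon.sh start $daemon -> $class"
}

trace_startup jobmanager
trace_startup taskmanager
```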

Reference article: https://www.cnblogs.com/ljygz/p/11398761.html