Fully Distributed Mode: Starting the Whole Cluster at Once

This part is super, super important ★

1. Configure slaves

First, edit the slaves file on hadoop03:

[root@hadoop03 ~]# vim /opt/module/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves

hadoop03

hadoop04

hadoop05

List one host name per line. The file must not contain any extra spaces or blank lines.
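The no-spaces, no-blank-lines rule is easy to break when editing by hand. Below is a minimal sketch of a checker, assuming a POSIX shell with grep; `check_slaves` is a hypothetical helper name, not part of Hadoop.

```shell
# check_slaves: hypothetical helper (not part of Hadoop) that flags blank
# lines and leading/trailing whitespace in a slaves file.
# Returns 0 if the file looks clean, 1 if problems were found, 2 if unreadable.
check_slaves() {
    slaves_file=$1
    if [ ! -r "$slaves_file" ]; then
        echo "check_slaves: cannot read $slaves_file" >&2
        return 2
    fi
    rc=0
    # Empty or whitespace-only lines (grep -n reports them with line numbers)
    if grep -n '^[[:space:]]*$' "$slaves_file" >&2; then
        echo "check_slaves: blank line(s) listed above" >&2
        rc=1
    fi
    # Leading or trailing whitespace; a CR from Windows line endings counts too
    if grep -nE '^[[:space:]]+[^[:space:]]|[^[:space:]][[:space:]]+$' "$slaves_file" >&2; then
        echo "check_slaves: stray whitespace on the line(s) listed above" >&2
        rc=1
    fi
    return "$rc"
}
```

Run it from the etc/hadoop directory before distributing the file, e.g. `check_slaves slaves`.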

Then, on hadoop03, use xsync to distribute the file to the other nodes:

[root@hadoop03 hadoop]# xsync slaves

fname=slaves

pdir=/opt/module/hadoop-2.5.0-cdh5.3.6/etc/hadoop

------------------- hadoop4 --------------

sending incremental file list

slaves

sent 99 bytes received 37 bytes 90.67 bytes/sec

total size is 27 speedup is 0.20

------------------- hadoop5 --------------

sending incremental file list

slaves

sent 72 bytes received 37 bytes 218.00 bytes/sec

total size is 27 speedup is 0.25
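xsync is a custom distribution script, and its source is not shown here. Judging from its output, it resolves the file's parent directory (pdir) and rsyncs the file to each of the other nodes. Below is a simplified, hypothetical stand-in that only prints the rsync commands it would run (a dry run). It assumes rsync and passwordless ssh on every node. `xsync_plan` is an illustrative name, not the author's script.

```shell
# xsync_plan: hypothetical, simplified stand-in for the custom xsync script.
# It only PRINTS one rsync command per target host (a dry run); pipe the
# output to sh to actually copy. Assumes rsync and passwordless ssh exist.
# Note: paths containing spaces are not quoted in the printed commands.
xsync_plan() {
    if [ "$#" -lt 2 ]; then
        echo "usage: xsync_plan FILE HOST..." >&2
        return 1
    fi
    target=$1
    shift
    fname=$(basename "$target")
    # Absolute, symlink-resolved parent directory, like the pdir= line xsync prints
    pdir=$(cd -P "$(dirname "$target")" && pwd) || return 1
    for host in "$@"; do
        # Same path on the remote host, so the file lands in the same place
        echo "rsync -av $pdir/$fname $host:$pdir"
    done
}
```

For example, `xsync_plan slaves hadoop04 hadoop05` run from etc/hadoop prints two rsync commands, and piping them to `sh` performs the copies.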

The first time I ran xsync, it failed with this error:

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1039) [sender=3.0.6]

Fix: change the permissions of the file being synced (here, slaves):

[root@hadoop03 hadoop]# chmod 777 slaves
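The error above ends with exit code 23, which is rsync's generic "partial transfer due to error" status. The actual cause appears in the error lines printed before it. Below is a small sketch that translates a few common rsync exit codes, using the descriptions from the EXIT VALUES section of the rsync man page, and wraps an rsync call. `rsync_exit_meaning` and `run_rsync` are hypothetical helper names.

```shell
# rsync_exit_meaning: hypothetical helper mapping a few rsync exit codes to
# the descriptions in the EXIT VALUES section of the rsync man page.
rsync_exit_meaning() {
    case $1 in
        0)  echo "Success" ;;
        1)  echo "Syntax or usage error" ;;
        12) echo "Error in rsync protocol data stream" ;;
        23) echo "Partial transfer due to error" ;;
        24) echo "Partial transfer due to vanished source files" ;;
        30) echo "Timeout in data send/receive" ;;
        *)  echo "Other error (see EXIT VALUES in man rsync)" ;;
    esac
}

# run_rsync: hypothetical wrapper that runs rsync with the given arguments
# and, on failure, explains the exit code on stderr.
run_rsync() {
    code=0
    rsync "$@" || code=$?
    if [ "$code" -ne 0 ]; then
        # For code 23, the per-file errors printed above it (for example
        # "Permission denied") show which files failed and why
        echo "rsync exited with $code: $(rsync_exit_meaning "$code")" >&2
    fi
    return "$code"
}
```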

2. Start the cluster

Preparation

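To demonstrate the cluster start scripts, first stop the NameNode and DataNodes that are currently running on hadoop03, hadoop04, and hadoop05.

To stress it again: only hadoop03 runs a NameNode.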

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# sbin/hadoop-daemon.sh stop datanode

stopping datanode

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# sbin/hadoop-daemon.sh stop namenode

stopping namenode

[root@hadoop04 hadoop-2.5.0-cdh5.3.6]# sbin/hadoop-daemon.sh stop datanode

stopping datanode

[root@hadoop05 hadoop-2.5.0-cdh5.3.6]# sbin/hadoop-daemon.sh stop datanode

stopping datanode
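Stopping the daemons this way means logging in to each node in turn. Below is a dry-run sketch that prints the equivalent ssh commands so they can all be issued from hadoop03. It assumes passwordless ssh between nodes and the same Hadoop path on every node. `stop_cmds` is a hypothetical helper, and `HADOOP_HOME` defaults to the install path used in this walkthrough.

```shell
# stop_cmds: hypothetical helper that prints (does not run) the ssh commands
# for stopping HDFS daemons node by node. Each argument is HOST:DAEMON.
# HADOOP_HOME defaults to this walkthrough's install path (an assumption:
# the same path on every node).
stop_cmds() {
    home=${HADOOP_HOME:-/opt/module/hadoop-2.5.0-cdh5.3.6}
    for pair in "$@"; do
        host=${pair%%:*}
        daemon=${pair#*:}
        echo "ssh $host $home/sbin/hadoop-daemon.sh stop $daemon"
    done
}
```

For example, `stop_cmds hadoop03:datanode hadoop03:namenode hadoop04:datanode hadoop05:datanode | sh` would stop the same four daemons. Hadoop 2.x also ships `sbin/stop-dfs.sh`, the counterpart of start-dfs.sh.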

2.1 Start HDFS

start-dfs.sh starts the HDFS daemons: the NameNode, the DataNodes, and the SecondaryNameNode.

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# sbin/start-dfs.sh

Starting namenodes on [hadoop03]

hadoop03: starting namenode, logging to /opt/module/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-namenode-hadoop03.out

hadoop03: starting datanode, logging to /opt/module/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-hadoop03.out

hadoop04: starting datanode, logging to /opt/module/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-hadoop04.out

hadoop05: starting datanode, logging to /opt/module/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-hadoop05.out

Starting secondary namenodes [hadoop05]

hadoop05: starting secondarynamenode, logging to /opt/module/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-secondarynamenode-hadoop05.out
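start-dfs.sh prints one "HOST: starting DAEMON, logging to ..." line for each daemon it launches, so its output shows where each daemon went. Below is a sketch that condenses those lines into "host daemon" pairs. It assumes the output format shown above. `summarize_start` is a hypothetical name.

```shell
# summarize_start: hypothetical filter that reads start-dfs.sh output on stdin
# and prints "host daemon" for each "HOST: starting DAEMON, logging to ..."
# line; all other lines (e.g. "Starting namenodes on [...]") are ignored.
summarize_start() {
    sed -n 's/^\([^ :]*\): starting \([A-Za-z]*\), logging to .*/\1 \2/p'
}
```

Usage: `sbin/start-dfs.sh 2>&1 | summarize_start`. For the run above, this would list pairs such as `hadoop03 namenode` and `hadoop05 secondarynamenode`.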

Now run jps on hadoop03, hadoop04, and hadoop05, and check that the running processes match the deployment plan.

hadoop03

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# jps

6836 DataNode

6741 NameNode

7099 Jps

hadoop04

[root@hadoop04 hadoop-2.5.0-cdh5.3.6]# jps

7318 Jps

7243 DataNode

hadoop05

[root@hadoop05 hadoop-2.5.0-cdh5.3.6]# jps

6786 Jps

6677 SecondaryNameNode

6620 DataNode

2.2 Start YARN

Because the ResourceManager is deployed on hadoop04, start-yarn.sh must be run on hadoop04. Running it from any other node will not work.

[root@hadoop04 hadoop-2.5.0-cdh5.3.6]# sbin/start-yarn.sh
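In Hadoop 2.x, start-yarn.sh launches the ResourceManager on the machine it is run from and the NodeManagers on the hosts in slaves. A small guard can therefore keep it from being run on the wrong node. Below is a sketch that assumes the ResourceManager host is hadoop04, as in this plan. `is_rm_host` is a hypothetical helper.

```shell
# is_rm_host: hypothetical guard. Succeeds only if this machine's short host
# name equals the expected ResourceManager host. The optional second
# argument overrides the detected host name (handy for testing).
is_rm_host() {
    expected=$1
    actual=${2:-$(hostname)}
    # Drop any domain suffix, e.g. hadoop04.example.com -> hadoop04
    [ "${actual%%.*}" = "$expected" ]
}

# Example (hadoop04 is the ResourceManager host in this walkthrough):
# if is_rm_host hadoop04; then sbin/start-yarn.sh; else echo "run on hadoop04" >&2; fi
```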

Once YARN has started successfully, the processes that jps lists on hadoop03, hadoop04, and hadoop05 match the cluster deployment plan.

hadoop04

[root@hadoop04 hadoop-2.5.0-cdh5.3.6]# jps

7760 NodeManager

7386 ResourceManager

7883 Jps

7243 DataNode

hadoop03

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# jps

6836 DataNode

7173 NodeManager

6741 NameNode

7341 Jps

hadoop05

[root@hadoop05 hadoop-2.5.0-cdh5.3.6]# jps

6868 NodeManager

6677 SecondaryNameNode

7035 Jps

6620 DataNode
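Comparing jps output against the plan by eye gets tedious across three nodes. Below is a sketch that encodes the plan implied by the jps listings above and reports any missing daemon. It assumes jps is on the PATH of a non-interactive ssh session. `plan_for` and `check_host` are hypothetical helpers.

```shell
# plan_for: hypothetical encoding of the deployment plan, read off the jps
# listings above. Edit it to match your own cluster.
plan_for() {
    case $1 in
        hadoop03) echo "NameNode DataNode NodeManager" ;;
        hadoop04) echo "ResourceManager NodeManager DataNode" ;;
        hadoop05) echo "SecondaryNameNode DataNode NodeManager" ;;
        *) return 1 ;;
    esac
}

# check_host: reads `jps` output ("PID Name" per line) on stdin and prints
# every planned daemon for HOST that is not running.
# Returns 0 if all are present, 1 if some are missing, 2 if HOST has no plan.
check_host() {
    host=$1
    daemons=$(plan_for "$host") || { echo "no plan for $host" >&2; return 2; }
    running=$(awk '{print $2}')
    rc=0
    for d in $daemons; do
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "$host: missing $d"
            rc=1
        fi
    done
    return "$rc"
}
```

For example: `ssh hadoop04 jps | check_host hadoop04`.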

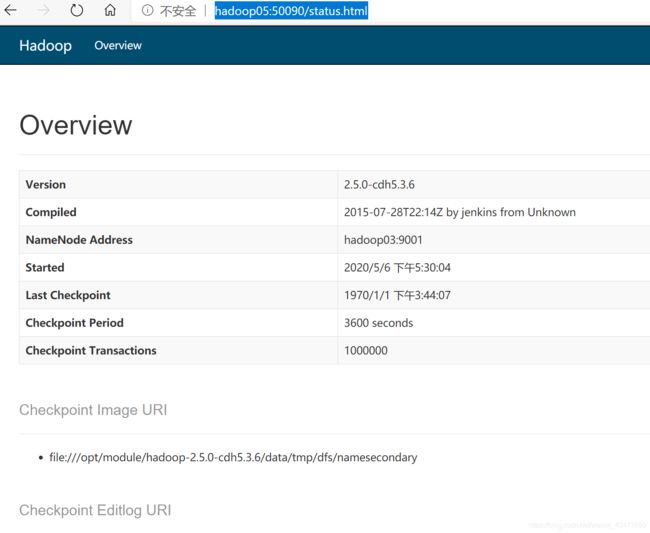

Finally, check the SecondaryNameNode in the web UI.

URL: http://hadoop05:50090

Ta-da, it works!

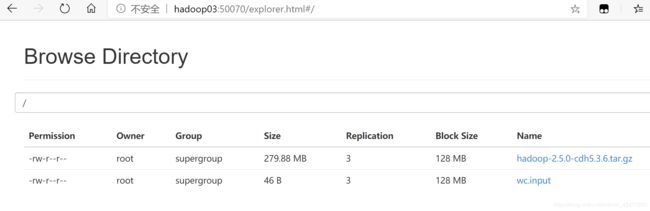

3. Basic cluster test

Upload files to the cluster

3.1 Upload a small file

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -put wcinput/wc.input /

[root@hadoop03 hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -put /opt/software/hadoop-2.5.0-cdh5.3.6.tar.gz /
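Besides the small wc.input, the Hadoop tarball is uploaded too. Presumably this provides a file large enough to span several HDFS blocks, though the text does not say so. Below is a sketch of the block arithmetic. It assumes the Hadoop 2.x default dfs.blocksize of 128 MB (134217728 bytes) unless another size is passed; check hdfs-site.xml for your actual value. `hdfs_block_count` is a hypothetical helper.

```shell
# hdfs_block_count: hypothetical helper returning how many HDFS blocks a file
# of SIZE bytes occupies (ceiling division). BLOCKSIZE defaults to 134217728
# bytes (128 MB), assumed here to be the cluster's dfs.blocksize.
hdfs_block_count() {
    size=$1
    bs=${2:-134217728}
    echo $(( (size + bs - 1) / bs ))
}
```

On Linux, `hdfs_block_count "$(stat -c %s /opt/software/hadoop-2.5.0-cdh5.3.6.tar.gz)"` gives the expected count. You can compare it with the output of `bin/hdfs fsck /hadoop-2.5.0-cdh5.3.6.tar.gz -files -blocks`.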