Pseudo-distributed deployment of Hadoop 3.1.2 on a CentOS 7 server

I needed Hadoop for my graduation project, so I deployed it on a Tencent Cloud server for development.

OS: CentOS Linux release 7.7.1908 (Core)

Hadoop version: hadoop-3.1.2.tar.gz

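1. First, we need a Java environment. I use Java 1.8, because Hadoop 3.1.x officially supports only Java 8 (Java 11 runtime support arrived with Hadoop 3.3). Java also has to be added to the environment variables.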

[root@shengxi ~]# java -version

openjdk version "1.8.0_222"

OpenJDK Runtime Environment (build 1.8.0_222-b10)

OpenJDK 64-Bit Server VM (build 25.222-b10, mixed mode)

[root@shengxi ~]# vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin
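The exact directory name under /usr/lib/jvm depends on the package build. The sketch below assumes that the java on PATH is the OpenJDK 8 you want to use. It resolves the binary's symlinks and strips the trailing bin/java, plus the jre directory that JDK 8 adds, to produce a candidate JAVA_HOME. java_home_from_bin is a hypothetical helper name and is not part of any package.

```shell
# Hypothetical helper: print a JAVA_HOME candidate for a resolved java binary path.
# JDK 8 keeps the launcher in <home>/jre/bin/java, newer JDKs in <home>/bin/java.
java_home_from_bin() {
  home=${1%/bin/java}   # drop the trailing /bin/java
  home=${home%/jre}     # JDK 8 layout: also drop the jre directory
  printf '%s\n' "$home"
}

# Usage on the server (readlink -f follows the /etc/alternatives symlinks):
# java_home_from_bin "$(readlink -f "$(command -v java)")"
```

After editing /etc/profile, run source /etc/profile (or log in again) and check the result with echo $JAVA_HOME.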

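2. Configure a local hostname alias. This is a cloud server, so the Hadoop configuration should not use 127.0.0.1. Use the machine's own IP instead, and note that this means the private (internal) IP, not the public one. Services bound to 127.0.0.1 are reachable only from the server itself. The public IP is usually not assigned to any local interface: in the ifconfig output below, eth0 carries only the private address. Hadoop therefore cannot listen on the public IP.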

// check the IP address

[root@shengxi ~]# ifconfig -a

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.x.x netmask 255.255.240.0 broadcast 172.17.15.255

ether 52:54:00:8a:fa:12 txqueuelen 1000 (Ethernet)

RX packets 1767653 bytes 1932785383 (1.8 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 775608 bytes 93677869 (89.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 2 bytes 276 (276.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2 bytes 276 (276.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The 172.17.x.x address on eth0 is the machine's private IP.

[root@shengxi ~]# vim /etc/hosts

172.17.x.x shengxi

172.17.x.x hadoop

127.0.0.1 localhost.localdomain localhost

127.0.0.1 localhost4.localdomain4 localhost4

::1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

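Each alias is one line of the form "IP hostname", with the IP and the hostname separated by whitespace. Here I added hadoop as the alias used for Hadoop development.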
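Editing /etc/hosts by hand makes it easy to add the same mapping twice. This sketch assumes that the first address printed by hostname -I is the private eth0 address. That holds for the single-NIC server above, but compare it with the ifconfig output. add_host_entry is a hypothetical helper that appends a mapping only when the name is not already listed.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped there.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # awk exits 0 if NAME appears as a hostname field on a non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    echo "$name already present in $hosts_file" >&2
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Usage (as root):
# add_host_entry /etc/hosts "$(hostname -I | awk '{print $1}')" hadoop
# getent hosts hadoop    # should print the private IP
```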

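3. Add a hadoop user. Starting Hadoop as root causes errors. For example, the Hadoop 3 start scripts refuse to start the HDFS daemons as root unless variables such as HDFS_NAMENODE_USER are defined. Paths can also stop matching when you access files. I therefore added a hadoop user and gave it root privileges through sudo. An alternative is to change that user's UID in /etc/passwd to 0 (root), but this effectively turns the account into a second root.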

// add the user

[root@shengxi ~]# adduser hadoop

// set its password

[root@shengxi ~]# passwd hadoop

Changing password for user hadoop.

// enter the new password twice

New password:

Retype new password:

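passwd: all authentication tokens updated successfully.

Next, edit /etc/sudoers and add the line hadoop ALL=(ALL) NOPASSWD:ALL. Using visudo is preferable, because it checks the syntax before saving. With this line, the hadoop user can run commands with root privileges through sudo without entering a password.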

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

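hadoop ALL=(ALL) NOPASSWD:ALL

SSH: a cloud server already comes with SSH, so there is nothing to install. We only need to set up passwordless login, because the Hadoop start scripts connect over SSH to every node, including this one.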
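Passwordless login can be set up with the usual ssh-keygen steps, the same ones the Hadoop single-node guide uses. This sketch is meant for the hadoop user. setup_passwordless_ssh is a hypothetical helper; its optional directory argument only exists so it can be tried somewhere other than ~/.ssh.

```shell
# Hypothetical helper: key-based SSH login to this machine for the current user.
# Optional $1: key directory (defaults to ~/.ssh).
setup_passwordless_ssh() {
  ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  # Generate an RSA key pair with an empty passphrase unless one already exists.
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa"
  fi
  # Authorize the public key, but only once.
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  # sshd (StrictModes) ignores an authorized_keys file that others can write to.
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Run it as the hadoop user. Then log in once with ssh hadoop and once with ssh localhost to accept the host keys. After that, neither command should ask for a password.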

4. Download the tarball and extract it.

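// download the tarball (mirrors usually keep only recent releases; if this link no longer works, old releases are archived under https://archive.apache.org/dist/hadoop/common/)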

[root@shengxi ~]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz

// extract

[root@shengxi ~]# tar -zxvf hadoop-3.1.2.tar.gz
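It is worth verifying the tarball, ideally before extracting it. This sketch assumes that a .sha512 file is published next to the tarball; for old releases it would be under https://archive.apache.org/dist/hadoop/common/hadoop-3.1.2/. Apache checksum files have used several layouts over the years. verify_sha512, a hypothetical helper, therefore normalises the published text and checks that the locally computed digest appears in it.

```shell
# Hypothetical helper: succeed if FILE's SHA-512 digest appears in CHECKSUM_FILE.
# Tolerates GNU ("hash  name"), BSD ("SHA512 (name) = hash") and upper-case,
# space-grouped hex layouts by stripping whitespace and lower-casing the hex.
verify_sha512() {
  file="$1"; checksum_file="$2"
  actual=$(sha512sum "$file" | awk '{print $1}')
  expected=$(tr -d ' \t\r\n' < "$checksum_file" | tr 'A-F' 'a-f')
  case "$expected" in
    *"$actual"*) echo "OK: $file"; return 0 ;;
    *) echo "MISMATCH: $file" >&2; return 1 ;;
  esac
}

# Usage:
# wget https://archive.apache.org/dist/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz.sha512
# verify_sha512 hadoop-3.1.2.tar.gz hadoop-3.1.2.tar.gz.sha512
```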

5. For easier management, move the hadoop directory to a location you prefer, then make the hadoop user its owner.

[root@shengxi ~]# mv hadoop-3.1.2 /usr/local/

[root@shengxi ~]# cd /usr/local/

[root@shengxi local]# ls

bin games include lib64 qcloud share yd.socket.server

etc hadoop-3.1.2 lib libexec sbin src

[root@shengxi local]#

Change the owning user and group of the files

[root@shengxi local]# chown -R hadoop:root hadoop-3.1.2/

[root@shengxi local]# ls -l hadoop-3.1.2/

total 204

drwxrwxrwx 2 hadoop root 4096 Jan 29 2019 bin

drwxrwxrwx 3 hadoop root 4096 Jan 29 2019 etc

drwxrwxrwx 2 hadoop root 4096 Jan 29 2019 include

drwxrwxrwx 3 hadoop root 4096 Jan 29 2019 lib

drwxrwxrwx 4 hadoop root 4096 Jan 29 2019 libexec

-rwxrwxrwx 1 hadoop root 147145 Jan 23 2019 LICENSE.txt

-rwxrwxrwx 1 hadoop root 21867 Jan 23 2019 NOTICE.txt

-rwxrwxrwx 1 hadoop root 1366 Jan 23 2019 README.txt

drwxrwxrwx 3 hadoop root 4096 Jan 29 2019 sbin

drwxrwxrwx 4 hadoop root 4096 Jan 29 2019 share

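Configure the environment variables (/etc/profile belongs to root, so the hadoop user edits it through sudo)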

[hadoop@shengxi ~]$ sudo vim /etc/profile

# Hadoop environment variables

export HADOOP_HOME=/usr/local/hadoop-3.1.2

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

[hadoop@shengxi ~]$ source /etc/profile
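Before running anything, you can confirm that these variables point at real directories. This is a minimal sketch. check_hadoop_env is a hypothetical helper that checks only JAVA_HOME, HADOOP_HOME and HADOOP_CONF_DIR, and whether hadoop is on PATH.

```shell
# Hypothetical helper: report missing or wrong Hadoop-related environment settings.
check_hadoop_env() {
  status=0
  for var in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR; do
    eval "dir=\${$var:-}"          # indirect lookup of the variable named in $var
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$var is unset or not a directory: '$dir'" >&2
      status=1
    fi
  done
  # The hadoop launcher from $HADOOP_HOME/bin should be reachable through PATH.
  if ! command -v hadoop >/dev/null 2>&1; then
    echo "hadoop is not on PATH" >&2
    status=1
  fi
  return $status
}

# Usage after "source /etc/profile":
# check_hadoop_env && echo "environment looks fine"
```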

6. Test whether standalone Hadoop works.

* Test 1: hadoop version

[hadoop@shengxi ~]$ source /etc/profile

[hadoop@shengxi ~]$ hadoop version

Hadoop 3.1.2

Source code repository https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a

Compiled by sunilg on 2019-01-29T01:39Z

Compiled with protoc 2.5.0

From source with checksum 64b8bdd4ca6e77cce75a93eb09ab2a9

This command was run using /usr/local/hadoop-3.1.2/share/hadoop/common/hadoop-common-3.1.2.jar

[hadoop@shengxi ~]$

** Test 2: use MapReduce to count how often each word occurs.

Create an input directory in the user's home directory and put a few files in it. I wrote three .txt files that share some words.

[hadoop@shengxi ~]$ mkdir input

[hadoop@shengxi ~]$ cd input

// create and edit three files

[hadoop@shengxi input]$ vim f1.txt

[hadoop@shengxi input]$ vim f2.txt

[hadoop@shengxi input]$ vim f3.txt

[hadoop@shengxi input]$ ll

total 12

-rw-r--r-- 1 root root 11 Oct 13 13:59 f1.txt

-rw-r--r-- 1 root root 25 Oct 13 14:01 f2.txt

-rw-r--r-- 1 root root 19 Oct 13 14:01 f3.txt

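// Run the example job from the home directory. Note: do not create the output directory yourself. If an output directory already exists the job fails, so either choose a different output path or delete output first.

[hadoop@shengxi ~]$ hadoop jar /usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar wordcount input output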

2019-10-13 14:09:28,762 INFO impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2019-10-13 14:09:28,879 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2019-10-13 14:09:28,879 INFO impl.MetricsSystemImpl: JobTracker metrics system started

2019-10-13 14:09:29,089 INFO input.FileInputFormat: Total input files to process : 3

2019-10-13 14:09:29,116 INFO mapreduce.JobSubmitter: number of splits:3

2019-10-13 14:09:29,345 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local696442689_0001

2019-10-13 14:09:29,346 INFO mapreduce.JobSubmitter: Executing with tokens: []

2019-10-13 14:09:29,569 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

2019-10-13 14:09:29,570 INFO mapreduce.Job: Running job: job_local696442689_0001

2019-10-13 14:09:29,575 INFO mapred.LocalJobRunner: OutputCommitter set in config null

2019-10-13 14:09:29,583 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2019-10-13 14:09:29,583 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-10-13 14:09:29,583 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2019-10-13 14:09:29,650 INFO mapred.LocalJobRunner: Waiting for map tasks

2019-10-13 14:09:29,650 INFO mapred.LocalJobRunner: Starting task: attempt_local696442689_0001_m_000000_0

2019-10-13 14:09:29,672 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2019-10-13 14:09:29,672 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-10-13 14:09:29,691 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2019-10-13 14:09:29,695 INFO mapred.MapTask: Processing split: file:/home/hadoop/input/f2.txt:0+25

2019-10-13 14:09:29,802 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2019-10-13 14:09:29,802 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2019-10-13 14:09:29,802 INFO mapred.MapTask: soft limit at 83886080

2019-10-13 14:09:29,802 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2019-10-13 14:09:29,803 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2019-10-13 14:09:29,810 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2019-10-13 14:09:29,815 INFO mapred.LocalJobRunner:

2019-10-13 14:09:29,815 INFO mapred.MapTask: Starting flush of map output

2019-10-13 14:09:29,815 INFO mapred.MapTask: Spilling map output

2019-10-13 14:09:29,815 INFO mapred.MapTask: bufstart = 0; bufend = 42; bufvoid = 104857600

2019-10-13 14:09:29,816 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214384(104857536); length = 13/6553600

2019-10-13 14:09:29,827 INFO mapred.MapTask: Finished spill 0

2019-10-13 14:09:29,835 INFO mapred.Task: Task:attempt_local696442689_0001_m_000000_0 is done. And is in the process of committing

2019-10-13 14:09:29,846 INFO mapred.LocalJobRunner: map

2019-10-13 14:09:29,846 INFO mapred.Task: Task 'attempt_local696442689_0001_m_000000_0' done.

2019-10-13 14:09:29,853 INFO mapred.Task: Final Counters for attempt_local696442689_0001_m_000000_0: Counters: 18

File System Counters

FILE: Number of bytes read=316771

FILE: Number of bytes written=815692

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=1

Map output records=4

Map output bytes=42

Map output materialized bytes=56

Input split bytes=95

Combine input records=4

Combine output records=4

Spilled Records=4

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=22

Total committed heap usage (bytes)=135335936

File Input Format Counters

Bytes Read=25

2019-10-13 14:09:29,853 INFO mapred.LocalJobRunner: Finishing task: attempt_local696442689_0001_m_000000_0

2019-10-13 14:09:29,853 INFO mapred.LocalJobRunner: Starting task: attempt_local696442689_0001_m_000001_0

2019-10-13 14:09:29,858 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2019-10-13 14:09:29,859 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-10-13 14:09:29,859 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2019-10-13 14:09:29,860 INFO mapred.MapTask: Processing split: file:/home/hadoop/input/f3.txt:0+19

2019-10-13 14:09:29,906 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2019-10-13 14:09:29,906 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2019-10-13 14:09:29,906 INFO mapred.MapTask: soft limit at 83886080

2019-10-13 14:09:29,906 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2019-10-13 14:09:29,906 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2019-10-13 14:09:29,908 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2019-10-13 14:09:29,909 INFO mapred.LocalJobRunner:

2019-10-13 14:09:29,909 INFO mapred.MapTask: Starting flush of map output

2019-10-13 14:09:29,909 INFO mapred.MapTask: Spilling map output

2019-10-13 14:09:29,909 INFO mapred.MapTask: bufstart = 0; bufend = 32; bufvoid = 104857600

2019-10-13 14:09:29,909 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214388(104857552); length = 9/6553600

2019-10-13 14:09:29,910 INFO mapred.MapTask: Finished spill 0

2019-10-13 14:09:29,923 INFO mapred.Task: Task:attempt_local696442689_0001_m_000001_0 is done. And is in the process of committing

2019-10-13 14:09:29,924 INFO mapred.LocalJobRunner: map

2019-10-13 14:09:29,924 INFO mapred.Task: Task 'attempt_local696442689_0001_m_000001_0' done.

2019-10-13 14:09:29,925 INFO mapred.Task: Final Counters for attempt_local696442689_0001_m_000001_0: Counters: 18

File System Counters

FILE: Number of bytes read=317094

FILE: Number of bytes written=815768

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=1

Map output records=3

Map output bytes=32

Map output materialized bytes=44

Input split bytes=95

Combine input records=3

Combine output records=3

Spilled Records=3

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=21

Total committed heap usage (bytes)=182521856

File Input Format Counters

Bytes Read=19

2019-10-13 14:09:29,925 INFO mapred.LocalJobRunner: Finishing task: attempt_local696442689_0001_m_000001_0

2019-10-13 14:09:29,925 INFO mapred.LocalJobRunner: Starting task: attempt_local696442689_0001_m_000002_0

2019-10-13 14:09:29,935 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2019-10-13 14:09:29,935 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-10-13 14:09:29,935 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2019-10-13 14:09:29,936 INFO mapred.MapTask: Processing split: file:/home/hadoop/input/f1.txt:0+11

2019-10-13 14:09:29,979 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2019-10-13 14:09:29,979 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2019-10-13 14:09:29,979 INFO mapred.MapTask: soft limit at 83886080

2019-10-13 14:09:29,979 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2019-10-13 14:09:29,979 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2019-10-13 14:09:29,981 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2019-10-13 14:09:29,982 INFO mapred.LocalJobRunner:

2019-10-13 14:09:29,982 INFO mapred.MapTask: Starting flush of map output

2019-10-13 14:09:29,983 INFO mapred.MapTask: Spilling map output

2019-10-13 14:09:29,983 INFO mapred.MapTask: bufstart = 0; bufend = 20; bufvoid = 104857600

2019-10-13 14:09:29,983 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214392(104857568); length = 5/6553600

2019-10-13 14:09:29,984 INFO mapred.MapTask: Finished spill 0

2019-10-13 14:09:29,996 INFO mapred.Task: Task:attempt_local696442689_0001_m_000002_0 is done. And is in the process of committing

2019-10-13 14:09:30,000 INFO mapred.LocalJobRunner: map

2019-10-13 14:09:30,000 INFO mapred.Task: Task 'attempt_local696442689_0001_m_000002_0' done.

2019-10-13 14:09:30,001 INFO mapred.Task: Final Counters for attempt_local696442689_0001_m_000002_0: Counters: 18

File System Counters

FILE: Number of bytes read=317409

FILE: Number of bytes written=815830

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=1

Map output records=2

Map output bytes=20

Map output materialized bytes=30

Input split bytes=95

Combine input records=2

Combine output records=2

Spilled Records=2

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=25

Total committed heap usage (bytes)=168112128

File Input Format Counters

Bytes Read=11

2019-10-13 14:09:30,001 INFO mapred.LocalJobRunner: Finishing task: attempt_local696442689_0001_m_000002_0

2019-10-13 14:09:30,001 INFO mapred.LocalJobRunner: map task executor complete.

2019-10-13 14:09:30,007 INFO mapred.LocalJobRunner: Waiting for reduce tasks

2019-10-13 14:09:30,007 INFO mapred.LocalJobRunner: Starting task: attempt_local696442689_0001_r_000000_0

2019-10-13 14:09:30,034 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2019-10-13 14:09:30,034 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-10-13 14:09:30,035 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2019-10-13 14:09:30,037 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@17c3dca2

2019-10-13 14:09:30,038 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

2019-10-13 14:09:30,064 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=326402048, maxSingleShuffleLimit=81600512, mergeThreshold=215425360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2019-10-13 14:09:30,078 INFO reduce.EventFetcher: attempt_local696442689_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2019-10-13 14:09:30,103 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local696442689_0001_m_000001_0 decomp: 40 len: 44 to MEMORY

2019-10-13 14:09:30,115 INFO reduce.InMemoryMapOutput: Read 40 bytes from map-output for attempt_local696442689_0001_m_000001_0

2019-10-13 14:09:30,116 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 40, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->40

2019-10-13 14:09:30,118 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local696442689_0001_m_000002_0 decomp: 26 len: 30 to MEMORY

2019-10-13 14:09:30,120 WARN io.ReadaheadPool: Failed readahead on ifile

EBADF: Bad file descriptor

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posix_fadvise(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posixFadviseIfPossible(NativeIO.java:270)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX$CacheManipulator.posixFadviseIfPossible(NativeIO.java:147)

at org.apache.hadoop.io.ReadaheadPool$ReadaheadRequestImpl.run(ReadaheadPool.java:208)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-10-13 14:09:30,122 INFO reduce.InMemoryMapOutput: Read 26 bytes from map-output for attempt_local696442689_0001_m_000002_0

2019-10-13 14:09:30,122 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 26, inMemoryMapOutputs.size() -> 2, commitMemory -> 40, usedMemory ->66

2019-10-13 14:09:30,124 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local696442689_0001_m_000000_0 decomp: 52 len: 56 to MEMORY

2019-10-13 14:09:30,125 WARN io.ReadaheadPool: Failed readahead on ifile

EBADF: Bad file descriptor

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posix_fadvise(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posixFadviseIfPossible(NativeIO.java:270)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX$CacheManipulator.posixFadviseIfPossible(NativeIO.java:147)

at org.apache.hadoop.io.ReadaheadPool$ReadaheadRequestImpl.run(ReadaheadPool.java:208)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-10-13 14:09:30,125 INFO reduce.InMemoryMapOutput: Read 52 bytes from map-output for attempt_local696442689_0001_m_000000_0

2019-10-13 14:09:30,125 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 52, inMemoryMapOutputs.size() -> 3, commitMemory -> 66, usedMemory ->118

2019-10-13 14:09:30,126 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

2019-10-13 14:09:30,127 INFO mapred.LocalJobRunner: 3 / 3 copied.

2019-10-13 14:09:30,127 INFO reduce.MergeManagerImpl: finalMerge called with 3 in-memory map-outputs and 0 on-disk map-outputs

2019-10-13 14:09:30,131 INFO mapred.Merger: Merging 3 sorted segments

2019-10-13 14:09:30,131 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 91 bytes

2019-10-13 14:09:30,132 INFO reduce.MergeManagerImpl: Merged 3 segments, 118 bytes to disk to satisfy reduce memory limit

2019-10-13 14:09:30,132 INFO reduce.MergeManagerImpl: Merging 1 files, 118 bytes from disk

2019-10-13 14:09:30,133 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

2019-10-13 14:09:30,133 INFO mapred.Merger: Merging 1 sorted segments

2019-10-13 14:09:30,137 WARN io.ReadaheadPool: Failed readahead on ifile

EBADF: Bad file descriptor

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posix_fadvise(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posixFadviseIfPossible(NativeIO.java:270)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX$CacheManipulator.posixFadviseIfPossible(NativeIO.java:147)

at org.apache.hadoop.io.ReadaheadPool$ReadaheadRequestImpl.run(ReadaheadPool.java:208)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-10-13 14:09:30,138 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 106 bytes

2019-10-13 14:09:30,138 INFO mapred.LocalJobRunner: 3 / 3 copied.

2019-10-13 14:09:30,140 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2019-10-13 14:09:30,141 INFO mapred.Task: Task:attempt_local696442689_0001_r_000000_0 is done. And is in the process of committing

2019-10-13 14:09:30,142 INFO mapred.LocalJobRunner: 3 / 3 copied.

2019-10-13 14:09:30,142 INFO mapred.Task: Task attempt_local696442689_0001_r_000000_0 is allowed to commit now

2019-10-13 14:09:30,143 INFO output.FileOutputCommitter: Saved output of task 'attempt_local696442689_0001_r_000000_0' to file:/home/hadoop/output

2019-10-13 14:09:30,147 INFO mapred.LocalJobRunner: reduce > reduce

2019-10-13 14:09:30,147 INFO mapred.Task: Task 'attempt_local696442689_0001_r_000000_0' done.

2019-10-13 14:09:30,148 INFO mapred.Task: Final Counters for attempt_local696442689_0001_r_000000_0: Counters: 24

File System Counters

FILE: Number of bytes read=317753

FILE: Number of bytes written=816010

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=6

Reduce shuffle bytes=130

Reduce input records=9

Reduce output records=6

Spilled Records=9

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=0

Total committed heap usage (bytes)=168112128

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=62

2019-10-13 14:09:30,148 INFO mapred.LocalJobRunner: Finishing task: attempt_local696442689_0001_r_000000_0

2019-10-13 14:09:30,151 INFO mapred.LocalJobRunner: reduce task executor complete.

2019-10-13 14:09:30,574 INFO mapreduce.Job: Job job_local696442689_0001 running in uber mode : false

2019-10-13 14:09:30,575 INFO mapreduce.Job: map 100% reduce 100%

2019-10-13 14:09:30,576 INFO mapreduce.Job: Job job_local696442689_0001 completed successfully

2019-10-13 14:09:30,597 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=1269027

FILE: Number of bytes written=3263300

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=3

Map output records=9

Map output bytes=94

Map output materialized bytes=130

Input split bytes=285

Combine input records=9

Combine output records=9

Reduce input groups=6

Reduce shuffle bytes=130

Reduce input records=9

Reduce output records=6

Spilled Records=18

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=68

Total committed heap usage (bytes)=654082048

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=55

File Output Format Counters

Bytes Written=62

[hadoop@shengxi ~]$

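View the result. The job completed successfully; the WARN io.ReadaheadPool "Failed readahead on ifile" (EBADF) messages in the log did not stop it.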

[hadoop@shengxi home]$ cd hadoop/

[hadoop@shengxi ~]$ ll

total 8

drwxrwxrwx 2 root root 4096 Oct 13 14:00 input

drwxr-xr-x 2 hadoop hadoop 4096 Oct 13 14:09 output

[hadoop@shengxi ~]$ cd output/

[hadoop@shengxi output]$ ll

total 4

-rw-r--r-- 1 hadoop hadoop 50 Oct 13 14:09 part-r-00000

-rw-r--r-- 1 hadoop hadoop 0 Oct 13 14:09 _SUCCESS

[hadoop@shengxi output]$ cat part-r-00000

dfads 1

dfjlaskd 1

hello 3

ldlkjfh 2

my 1

world 1

[hadoop@shengxi output]$
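For small inputs like these, the MapReduce result can be cross-checked with ordinary shell tools. This rough sketch assumes whitespace-separated words and ASCII text. The WordCount mapper splits lines with a default StringTokenizer (space, tab, newline, carriage return, form feed), and for ASCII input LC_ALL=C sort produces the same byte order as Hadoop's Text keys. local_wordcount is a hypothetical helper name.

```shell
# Hypothetical helper: word counts in WordCount's "word<TAB>count" output format.
local_wordcount() {
  cat "$@" \
    | tr -s ' \t\r\f' '\n' \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Usage:
# local_wordcount ~/input/*.txt | diff - ~/output/part-r-00000 && echo "results match"
```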

Standalone Hadoop is now installed. Next comes the pseudo-distributed deployment.

7. Pseudo-distributed deployment

(1) The configuration files below all live in /usr/local/hadoop-3.1.2/etc/hadoop, which is $HADOOP_CONF_DIR.

(2) Set JAVA_HOME in hadoop-env.sh, yarn-env.sh and mapred-env.sh. The changed lines of each script are shown below with cat.

[hadoop@shengxi hadoop]$ cat hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

#

[hadoop@shengxi hadoop]$ cat mapred-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

[hadoop@shengxi hadoop]$ cat yarn-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

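(3) Edit core-site.xml. fs.trash.interval is in minutes, so 7200 keeps deleted files in the trash for 5 days.

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop:9000</value>
    </property>
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>hadoop</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/usr/local/hadoop-3.1.2/data/tmp</value>
    </property>
    <property>
        <name>fs.trash.interval</name>
        <value>7200</value>
    </property>
</configuration>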
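Once Hadoop is on PATH, hdfs getconf -confKey fs.defaultFS prints the value that Hadoop actually resolves. To inspect a file before anything runs, the crude sketch below uses standard text tools instead. get_site_property is a hypothetical helper, not a real XML parser. It assumes GNU sed (as on CentOS) and simple <name>/<value> elements.

```shell
# Hypothetical helper: print the <value> of property KEY from a *-site.xml FILE.
get_site_property() {
  file="$1"; key="$2"
  # Flatten the file, put each <property> block on its own line (GNU sed "\n"),
  # keep the block whose <name> matches exactly, then cut out its <value>.
  tr -d '\n\r' < "$file" \
    | sed 's#</property>#</property>\n#g' \
    | grep -F "<name>$key</name>" \
    | sed 's#.*<value>\(.*\)</value>.*#\1#'
}

# Usage:
# get_site_property "$HADOOP_CONF_DIR/core-site.xml" fs.defaultFS
```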

(4) Create the corresponding directories

// create the directories one at a time (the simple way)

[hadoop@shengxi hadoop-3.1.2]$ mkdir data

[hadoop@shengxi hadoop-3.1.2]$ cd data

[hadoop@shengxi data]$ mkdir tmp

[hadoop@shengxi data]$ mkdir namenode

[hadoop@shengxi data]$ mkdir datanode

[hadoop@shengxi data]$ cd ../

[hadoop@shengxi hadoop-3.1.2]$ chmod -R 777 data/

[hadoop@shengxi hadoop-3.1.2]$
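The same directories can be created in one step with mkdir -p. This sketch assumes, as in the commands above, that the data directory lives under HADOOP_HOME (/usr/local/hadoop-3.1.2). The three paths must match hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir. make_hadoop_data_dirs is a hypothetical helper. The hadoop user both creates and uses these directories, so owner permissions should normally be enough and chmod 777 is optional.

```shell
# Hypothetical helper: create data/tmp, data/namenode and data/datanode under a base dir.
# $1 defaults to $HADOOP_HOME.
make_hadoop_data_dirs() {
  base="${1:-$HADOOP_HOME}/data"
  mkdir -p "$base/tmp" "$base/namenode" "$base/datanode"
}

# Usage (as the hadoop user):
# make_hadoop_data_dirs
```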

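(5) Edit hdfs-site.xml. The replication factor is normally 3, but a pseudo-distributed cluster has only one DataNode, so 1 is enough.

<configuration>
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>/usr/local/hadoop-3.1.2/data/namenode</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>/usr/local/hadoop-3.1.2/data/datanode</value>
    </property>
</configuration>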

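(6) Edit mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>yarn.app.mapreduce.am.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.2</value>
    </property>
    <property>
        <name>mapreduce.map.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.2</value>
    </property>
    <property>
        <name>mapreduce.reduce.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.2</value>
    </property>
    <property>
        <name>mapreduce.map.memory.mb</name>
        <value>2048</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop:10020</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop:19888</value>
    </property>
</configuration>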

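(7) Edit yarn-site.xml. yarn.log-aggregation.retain-seconds is in seconds, so 604800 keeps aggregated logs for 7 days.

<configuration>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
    </property>
</configuration>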
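A typo in any of these XML files, such as a missing </property>, stops the daemons from starting. The crude sketch below only counts <property> and </property> tags in every *-site.xml under the configuration directory. check_site_files is a hypothetical helper. If libxml2's xmllint is installed, xmllint --noout FILE performs a proper well-formedness check.

```shell
# Hypothetical helper: flag *-site.xml files whose <property> tags are unbalanced.
# $1 defaults to $HADOOP_CONF_DIR.
check_site_files() {
  conf_dir="${1:-$HADOOP_CONF_DIR}"
  status=0
  for f in "$conf_dir"/*-site.xml; do
    [ -e "$f" ] || continue          # no match: the glob stays literal
    open=$(grep -o '<property>' "$f" | wc -l)
    close=$(grep -o '</property>' "$f" | wc -l)
    if [ "$open" -ne "$close" ]; then
      echo "unbalanced <property> tags in $f ($open open, $close close)" >&2
      status=1
    fi
  done
  return $status
}

# Usage:
# check_site_files && echo "site files look balanced"
```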

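The configuration files are now complete; all that is left is to format the NameNode. The hadoop namenode form is deprecated, as the warning below says, and the current command is hdfs namenode -format. It is also safer to run it as the hadoop user rather than root. The metadata it writes under data/namenode is then owned by the user that will start HDFS.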

[root@shengxi bin]# hadoop namenode -format

WARNING: Use of this script to execute namenode is deprecated.

WARNING: Attempting to execute replacement "hdfs namenode" instead.

2019-10-13 15:21:29,211 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = shengxi/172.17.0.15

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.2

STARTUP_MSG: classpath = /usr/local/hadoop-3.1.2/etc/hadoop:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-server-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-net-3.6.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-beanutils-1.9.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/token-provider-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jersey-json-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/zookeeper-3.4.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-databind-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/httpclient-4.5.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-compress-1.18.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jul-to-slf4j-1.7.25.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/accessors-smart-1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-lang3-3.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jsch-0.1.54.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-security-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-xml-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerby-util-1.0.1.jar:/usr/local/hado
op-3.1.2/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/json-smart-2.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-common-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/snappy-java-1.0.5.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-webapp-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-client-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/avro-1.7.7.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jersey-core-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-core-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-http-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-server-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-annotations-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/netty-3.10.5.Final.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/hadoop-annotations-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/metrics-core-3.2.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-servlet-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-io-2.5.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/woodstox-core-
5.0.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jersey-server-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/curator-client-2.13.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerby-config-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/re2j-1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/asm-5.0.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-configuration2-2.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/curator-framework-2.13.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/commons-codec-1.11.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-util-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/stax2-api-3.1.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jetty-io-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/httpcore-4.4.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/curator-recipes-2.13.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jersey-servlet-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/hadoop-auth-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-util-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/lib/kerb-core-1.0.1.jar:/us
r/local/hadoop-3.1.2/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/hadoop-kms-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/hadoop-common-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/hadoop-common-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/common/hadoop-nfs-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-net-3.6.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-beanutils-1.9.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jersey-json-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/zookeeper-3.4.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-databind-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/httpclient-4.5.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-util-ajax-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-compress-1.18.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/paranamer-2.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/accessors-smart-1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-lang3-3.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jsch-0.1.54.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-security-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-xml-9.3.24.v20180605.jar:/u
sr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jettison-1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/netty-all-4.0.52.Final.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/json-smart-2.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/snappy-java-1.0.5.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-webapp-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/avro-1.7.7.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jersey-core-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/nimbus-jose-jwt-4.41.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-core-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-http-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-server-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-annotations-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/netty-3.10.5.Final.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/okio-1.6.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/ha
doop-annotations-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-servlet-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-io-2.5.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/woodstox-core-5.0.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jersey-server-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/curator-client-2.13.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/re2j-1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/asm-5.0.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-configuration2-2.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/curator-framework-2.13.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/commons-codec-1.11.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-util-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/stax2-api-3.1.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/gson-2.2.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jetty-io-9.3.24.v20180605.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/httpcore-4.4.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/curator-recipes-2.13.0.jar:/usr/local/hadoop-3.1.2/
share/hadoop/hdfs/lib/jersey-servlet-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/hadoop-auth-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-client-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-client-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-nfs-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/hdfs/hadoop-hdfs-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.2-tests.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.1.2.jar:/usr/local/hadoop-3.1.2/share
/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/java-util-1.9.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/snakeyaml-1.16.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/fst-2.50.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/guice-4.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/objenesis-1.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/jersey-guice-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/metrics-core-3.2.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/jersey-client-1.19.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/jackson-jaxrs-base-2.7.8.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/dnsjava-2.1.7.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/json-io-2.5.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-registry-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.1.2.jar:/
usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-tests-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-client-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-services-api-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-common-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-services-core-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-router-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-common-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-api-3.1.2.jar:/usr/local/hadoop-3.1.2/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.1.2.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a; compiled by 'sunilg' on 2019-01-29T01:39Z

STARTUP_MSG: java = 1.8.0_222

************************************************************/

2019-10-13 15:21:29,232 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2019-10-13 15:21:29,421 INFO namenode.NameNode: createNameNode [-format]

2019-10-13 15:21:30,291 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/data/namenode in configuration.

2019-10-13 15:21:30,291 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/data/namenode in configuration.

Formatting using clusterid: CID-d1d9f073-058a-4ff6-9edb-abf48551e43c

2019-10-13 15:21:30,345 INFO namenode.FSEditLog: Edit logging is async:true

2019-10-13 15:21:30,362 INFO namenode.FSNamesystem: KeyProvider: null

2019-10-13 15:21:30,363 INFO namenode.FSNamesystem: fsLock is fair: true

2019-10-13 15:21:30,365 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2019-10-13 15:21:30,373 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2019-10-13 15:21:30,374 INFO namenode.FSNamesystem: supergroup = supergroup

2019-10-13 15:21:30,374 INFO namenode.FSNamesystem: isPermissionEnabled = false

2019-10-13 15:21:30,374 INFO namenode.FSNamesystem: HA Enabled: false

2019-10-13 15:21:30,434 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2019-10-13 15:21:30,448 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2019-10-13 15:21:30,448 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2019-10-13 15:21:30,454 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2019-10-13 15:21:30,454 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Oct 13 15:21:30

2019-10-13 15:21:30,456 INFO util.GSet: Computing capacity for map BlocksMap

2019-10-13 15:21:30,458 INFO util.GSet: VM type = 64-bit

2019-10-13 15:21:30,459 INFO util.GSet: 2.0% max memory 444.7 MB = 8.9 MB

2019-10-13 15:21:30,459 INFO util.GSet: capacity = 2^20 = 1048576 entries

2019-10-13 15:21:30,469 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2019-10-13 15:21:30,483 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManager: defaultReplication = 1

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManager: maxReplication = 512

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManager: minReplication = 1

2019-10-13 15:21:30,483 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2019-10-13 15:21:30,484 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2019-10-13 15:21:30,484 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2019-10-13 15:21:30,484 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2019-10-13 15:21:30,538 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

2019-10-13 15:21:30,552 INFO util.GSet: Computing capacity for map INodeMap

2019-10-13 15:21:30,552 INFO util.GSet: VM type = 64-bit

2019-10-13 15:21:30,552 INFO util.GSet: 1.0% max memory 444.7 MB = 4.4 MB

2019-10-13 15:21:30,552 INFO util.GSet: capacity = 2^19 = 524288 entries

2019-10-13 15:21:30,565 INFO namenode.FSDirectory: ACLs enabled? false

2019-10-13 15:21:30,566 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2019-10-13 15:21:30,566 INFO namenode.FSDirectory: XAttrs enabled? true

2019-10-13 15:21:30,566 INFO namenode.NameNode: Caching file names occurring more than 10 times

2019-10-13 15:21:30,571 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2019-10-13 15:21:30,573 INFO snapshot.SnapshotManager: SkipList is disabled

2019-10-13 15:21:30,580 INFO util.GSet: Computing capacity for map cachedBlocks

2019-10-13 15:21:30,580 INFO util.GSet: VM type = 64-bit

2019-10-13 15:21:30,580 INFO util.GSet: 0.25% max memory 444.7 MB = 1.1 MB

2019-10-13 15:21:30,580 INFO util.GSet: capacity = 2^17 = 131072 entries

2019-10-13 15:21:30,587 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2019-10-13 15:21:30,587 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2019-10-13 15:21:30,587 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2019-10-13 15:21:30,596 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2019-10-13 15:21:30,596 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2019-10-13 15:21:30,598 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2019-10-13 15:21:30,598 INFO util.GSet: VM type = 64-bit

2019-10-13 15:21:30,598 INFO util.GSet: 0.029999999329447746% max memory 444.7 MB = 136.6 KB

2019-10-13 15:21:30,598 INFO util.GSet: capacity = 2^14 = 16384 entries

2019-10-13 15:21:30,640 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1558567234-172.17.0.15-1570951290630

2019-10-13 15:21:30,682 INFO common.Storage: Storage directory /usr/local/hadoop/data/namenode has been successfully formatted.

2019-10-13 15:21:30,690 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/data/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2019-10-13 15:21:30,792 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/data/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2019-10-13 15:21:30,813 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2019-10-13 15:21:30,819 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at shengxi/172.17.0.15

************************************************************/

[root@shengxi bin]#

The key lines are the ones below, which contain "successfully formatted." and "txid >= 0". Some versions also report an exit status of 0.

2019-10-13 15:21:30,682 INFO common.Storage: Storage directory /usr/local/hadoop/data/namenode has been successfully formatted.

2019-10-13 15:21:30,690 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/data/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2019-10-13 15:21:30,792 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/data/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2019-10-13 15:21:30,813 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
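The format output is long, so the two key lines can also be checked automatically. The sketch below assumes the console output of the format command was saved to a file, for example with `hdfs namenode -format 2>&1 | tee format.log`. Hadoop's console logger writes to stderr, hence the `2>&1`. The helper name `check_format_log` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check a saved NameNode format log for the two lines highlighted above.
# check_format_log is a hypothetical helper name, not part of Hadoop.

# Usage: check_format_log <logfile>   (reads stdin when no file is given)
check_format_log() {
  local log
  log=$(cat "${1:-/dev/stdin}")

  # 1) the NameNode storage directory was formatted
  if ! grep -q 'has been successfully formatted' <<<"$log"; then
    echo "FAIL: no 'successfully formatted' line" >&2
    return 1
  fi
  # 2) the initial fsimage was retained (txid >= 0)
  if ! grep -q 'retain [0-9]* images with txid >= 0' <<<"$log"; then
    echo "FAIL: no 'txid >= 0' line" >&2
    return 1
  fi
  echo "OK: NameNode storage formatted"
}
```

Usage: `check_format_log format.log`. It prints "OK: ..." and returns 0 only when both lines are present.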

(8) Start the daemons. Note that the commands differ between 2.x and 3.x.

* In 2.x:

sbin/hadoop-daemon.sh start namenode

sbin/hadoop-daemon.sh start datanode

sbin/yarn-daemon.sh start resourcemanager

sbin/yarn-daemon.sh start nodemanager

sbin/mr-jobhistory-daemon.sh start historyserver

* In 3.x:

hdfs --daemon start namenode

hdfs --daemon start datanode

yarn --daemon start resourcemanager

yarn --daemon start nodemanager

yarn --daemon start timelineserver
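The two lists follow a simple pattern. Each per-daemon script in 2.x becomes `<command> --daemon <action> <daemon>` in 3.x. The sketch below spells out that mapping; the helper name `to_v3_cmd` is hypothetical.

Note that the 3.x equivalent of `mr-jobhistory-daemon.sh start historyserver` is `mapred --daemon start historyserver`. The `timelineserver` in the 3.x list above is the YARN Timeline Server, which is a different service.

```bash
#!/usr/bin/env bash
# Sketch: translate the Hadoop 2.x daemon scripts into the 3.x "--daemon" form.
# to_v3_cmd is a hypothetical helper name, not part of Hadoop.

# Usage: to_v3_cmd "sbin/hadoop-daemon.sh start namenode"
to_v3_cmd() {
  local script action daemon
  read -r script action daemon <<<"$1"
  case "$(basename "$script")" in
    hadoop-daemon.sh)        echo "hdfs --daemon $action $daemon" ;;   # HDFS daemons
    yarn-daemon.sh)          echo "yarn --daemon $action $daemon" ;;   # YARN daemons
    mr-jobhistory-daemon.sh) echo "mapred --daemon $action $daemon" ;; # MR JobHistory
    *) echo "unknown script: $script" >&2; return 1 ;;
  esac
}
```

The same form works for `stop`. For example, `hdfs --daemon stop namenode` stops the NameNode.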

The result:

[root@shengxi sbin]# hdfs --daemon start namenode

[root@shengxi sbin]# hdfs --daemon start datanode

[root@shengxi sbin]# yarn --daemon start resourcemanager

WARNING: YARN_CONF_DIR has been replaced by HADOOP_CONF_DIR. Using value of YARN_CONF_DIR.

[root@shengxi sbin]# yarn --daemon start nodemanager

WARNING: YARN_CONF_DIR has been replaced by HADOOP_CONF_DIR. Using value of YARN_CONF_DIR.

[root@shengxi sbin]# yarn --daemon start timelineserver

WARNING: YARN_CONF_DIR has been replaced by HADOOP_CONF_DIR. Using value of YARN_CONF_DIR.

Verify with jps (the timeline server appears as ApplicationHistoryServer):

[root@shengxi hadoop-3.1.2]# jps

721 DataNode

610 NameNode

1268 ApplicationHistoryServer

1111 NodeManager

844 ResourceManager

1293 Jps

[root@shengxi hadoop-3.1.2]#
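Comparing the jps list by eye is error-prone, so the check can be scripted. The sketch below assumes the five daemons started above are the expected set; the helper name `check_daemons` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compare `jps` output with the daemons started above.
# check_daemons is a hypothetical helper name; pass the jps output on stdin.

# Main class names as printed by jps (the YARN timeline server
# shows up as ApplicationHistoryServer).
EXPECTED_DAEMONS=(NameNode DataNode ResourceManager NodeManager ApplicationHistoryServer)

check_daemons() {
  local running missing=()
  running=$(awk '{print $2}')            # second column = main class name
  for d in "${EXPECTED_DAEMONS[@]}"; do
    grep -qx "$d" <<<"$running" || missing+=("$d")
  done
  if ((${#missing[@]})); then
    echo "missing: ${missing[*]}"
    return 1
  fi
  echo "all daemons running"
}
```

Usage: `jps | check_daemons`. If a daemon is missing, its log under the Hadoop logs directory is the first place to look.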

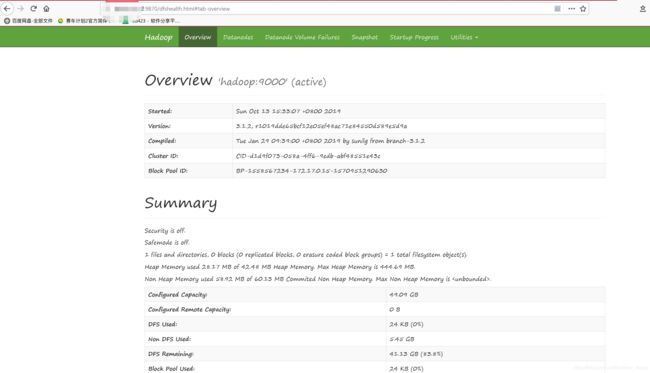

Check the web UIs (note: the corresponding ports must be opened in the cloud console).

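| Service | 2.x port | 3.x port |
| --- | --- | --- |
| NameNode RPC | 8020 | 8020 (9820 only in 3.0.0) |
| NameNode HTTP UI | 50070 | 9870 |
| DataNode data transfer | 50010 | 9866 |
| Secondary NameNode HTTP UI | 50090 | 9868 |
| DataNode IPC | 50020 | 9867 |
| DataNode HTTP UI | 50075 | 9864 |
| ResourceManager HTTP UI | 8088 | 8088 |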
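Before opening a browser, the 3.x UIs can be probed from the server itself. This shows whether a failure is caused by the daemon or by the cloud firewall.

The sketch below makes a few assumptions. It uses the default HTTP ports from the table above (9870 and 9864), plus 8088 for the ResourceManager, the port that appears in the job's tracking URL. It uses the host alias "hadoop" from /etc/hosts, and it requires `curl`. The helper names `ui_urls` and `probe_uis` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the Hadoop 3.x web UI addresses and probe them with curl.
# ui_urls and probe_uis are hypothetical helper names; "hadoop" is the
# host alias configured in /etc/hosts.

# Prints "name url" pairs for the default 3.x HTTP ports.
ui_urls() {
  local host=${1:-hadoop}
  printf '%s http://%s:%s/\n' \
    NameNode "$host" 9870 \
    DataNode "$host" 9864 \
    ResourceManager "$host" 8088
}

# Prints the HTTP status code of each UI (000 = not reachable).
probe_uis() {
  local name url
  ui_urls "$@" | while read -r name url; do
    printf '%s %s\n' "$name" \
      "$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")"
  done
}
```

If `probe_uis` reports 200 on the server but the browser cannot connect from outside, the ports are most likely still closed in the cloud console.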

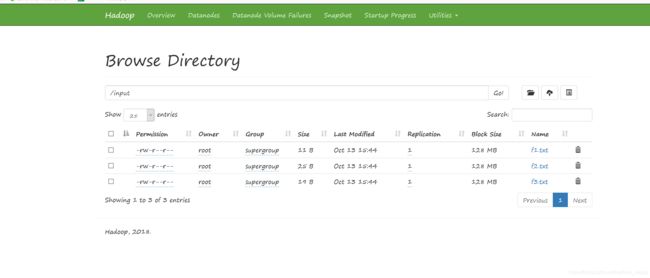

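Copy the input folder from the standalone test into HDFS. Physically, the data ends up in the DataNode directory set in hdfs-site.xml, i.e. /usr/local/hadoop/data/datanode, stored as HDFS block files rather than under the original file names.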

Create a folder named input through the web UI, then upload the files from the input folder in the home directory into it:

[root@shengxi ~]# hdfs dfs -put input/* /input

[root@shengxi ~]#
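The directory can also be created from the command line instead of the web UI. The sketch below uses the standard `hdfs dfs` commands `-mkdir -p`, `-put -f` and `-ls`. The helper name `upload_dir` and the variable `HDFS_CMD`, which allows a dry run with `echo`, are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: create an HDFS directory and upload local files without the web UI.
# upload_dir and HDFS_CMD are hypothetical names; set HDFS_CMD=echo for a dry run.
HDFS_CMD=${HDFS_CMD:-hdfs}

# Usage: upload_dir <local_dir> <hdfs_dir>
upload_dir() {
  local src=$1 dest=$2
  [ -d "$src" ] || { echo "no such local dir: $src" >&2; return 1; }
  "$HDFS_CMD" dfs -mkdir -p "$dest" &&           # no error if it already exists
    "$HDFS_CMD" dfs -put -f "$src"/* "$dest" &&  # -f overwrites existing files
    "$HDFS_CMD" dfs -ls "$dest"                  # show what was uploaded
}
```

Usage: `upload_dir ~/input /input`. Run `HDFS_CMD=echo upload_dir ~/input /input` first to see the commands without executing them.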

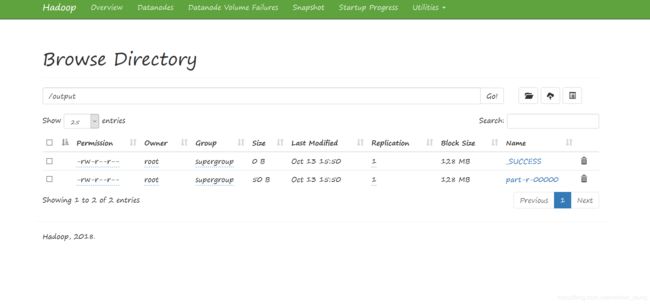

Run the test with:

hadoop jar /usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar wordcount /input /output
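MapReduce refuses to write into an output directory that already exists, so running the command a second time fails. The sketch below removes the output directory first with `hadoop fs -rm -r -f`; the `-f` flag keeps the removal from failing if the directory is absent. Only use it when that output can be thrown away.

The helper name `run_wordcount` and the variables `HADOOP_CMD` and `EXAMPLES_JAR` are hypothetical. The default jar path is the one used above.

```bash
#!/usr/bin/env bash
# Sketch: rerun the wordcount example after clearing its output directory.
# run_wordcount, HADOOP_CMD and EXAMPLES_JAR are hypothetical names;
# set HADOOP_CMD=echo for a dry run.
HADOOP_CMD=${HADOOP_CMD:-hadoop}
EXAMPLES_JAR=${EXAMPLES_JAR:-/usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar}

# Usage: run_wordcount <hdfs_input> <hdfs_output>
run_wordcount() {
  local in=$1 out=$2
  # Delete the old output (-f: no error if it does not exist), then run the job.
  "$HADOOP_CMD" fs -rm -r -f "$out" &&
    "$HADOOP_CMD" jar "$EXAMPLES_JAR" wordcount "$in" "$out"
}
```

Usage: `run_wordcount /input /output`.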

The run looks like this:

[root@shengxi ~]# hadoop jar /usr/local/hadoop-3.1.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar wordcount /input /output

2019-10-13 15:49:46,920 INFO client.RMProxy: Connecting to ResourceManager at hadoop/172.17.0.15:8032

2019-10-13 15:49:47,690 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1570951999899_0002

2019-10-13 15:49:48,063 INFO input.FileInputFormat: Total input files to process : 3

2019-10-13 15:49:48,961 INFO mapreduce.JobSubmitter: number of splits:3

2019-10-13 15:49:49,708 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1570951999899_0002

2019-10-13 15:49:49,710 INFO mapreduce.JobSubmitter: Executing with tokens: []

2019-10-13 15:49:49,967 INFO conf.Configuration: resource-types.xml not found

2019-10-13 15:49:49,968 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2019-10-13 15:49:50,048 INFO impl.YarnClientImpl: Submitted application application_1570951999899_0002

2019-10-13 15:49:50,109 INFO mapreduce.Job: The url to track the job: http://hadoop:8088/proxy/application_1570951999899_0002/

2019-10-13 15:49:50,110 INFO mapreduce.Job: Running job: job_1570951999899_0002

2019-10-13 15:49:58,477 INFO mapreduce.Job: Job job_1570951999899_0002 running in uber mode : false

2019-10-13 15:49:58,479 INFO mapreduce.Job: map 0% reduce 0%

2019-10-13 15:50:13,719 INFO mapreduce.Job: map 100% reduce 0%

2019-10-13 15:50:21,799 INFO mapreduce.Job: map 100% reduce 100%

2019-10-13 15:50:23,828 INFO mapreduce.Job: Job job_1570951999899_0002 completed successfully

2019-10-13 15:50:23,930 INFO mapreduce.Job: Counters: 53

File System Counters

FILE: Number of bytes read=118

FILE: Number of bytes written=864161

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=343

HDFS: Number of bytes written=50

HDFS: Number of read operations=14

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=3

Launched reduce tasks=1

Data-local map tasks=3

Total time spent by all maps in occupied slots (ms)=75502

Total time spent by all reduces in occupied slots (ms)=5829

Total time spent by all map tasks (ms)=37751

Total time spent by all reduce tasks (ms)=5829

Total vcore-milliseconds taken by all map tasks=37751

Total vcore-milliseconds taken by all reduce tasks=5829

Total megabyte-milliseconds taken by all map tasks=77314048

Total megabyte-milliseconds taken by all reduce tasks=5968896

Map-Reduce Framework

Map input records=3

Map output records=9

Map output bytes=94

Map output materialized bytes=130

Input split bytes=288

Combine input records=9

Combine output records=9

Reduce input groups=6

Reduce shuffle bytes=130

Reduce input records=9

Reduce output records=6

Spilled Records=18

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=832

CPU time spent (ms)=1980

Physical memory (bytes) snapshot=716304384

Virtual memory (bytes) snapshot=13735567360

Total committed heap usage (bytes)=436482048

Peak Map Physical memory (bytes)=205828096

Peak Map Virtual memory (bytes)=3649929216

Peak Reduce Physical memory (bytes)=106946560

Peak Reduce Virtual memory (bytes)=2791301120

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=55

File Output Format Counters

Bytes Written=50

[root@shengxi ~]#

Check the result on the command line:

hdfs dfs -cat /output/*

The result:

[root@shengxi ~]# hdfs dfs -cat /output/*

dfads 1

dfjlaskd 1

hello 3

ldlkjfh 2

my 1

world 1

[root@shengxi ~]#
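To confirm the cluster output against the standalone run without eyeballing it, the same counts can be computed locally with standard tools. The helper name `local_wordcount` is hypothetical.

It splits on whitespace, roughly like the StringTokenizer used by the wordcount example. It sorts in byte order (`LC_ALL=C`), which matches how Hadoop orders the word keys, at least for plain ASCII words. It emits "word<TAB>count" lines like the reducer output.

```bash
#!/usr/bin/env bash
# Sketch: compute word counts locally to compare with the HDFS /output.
# local_wordcount is a hypothetical helper name.

# Usage: local_wordcount file...   (reads stdin when no file is given)
local_wordcount() {
  cat "$@" |
    tr -s '[:space:]' '\n' |            # one word per line
    grep -v '^$' |                      # drop empty lines
    LC_ALL=C sort |                     # byte order, like Hadoop's key sort
    uniq -c |
    awk '{printf "%s\t%s\n", $2, $1}'   # "word<TAB>count"
}
```

Usage: `diff <(local_wordcount ~/input/*) <(hdfs dfs -cat /output/*)` prints nothing when the two results match.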

The result is exactly the same as in the standalone run.

The result can also be seen in the web UI, which confirms that the deployment works.