HashMap的底层原理面试必考题。为什么面试官如此青睐这道题?

HashMap里面涉及了很多的知识点,可以比较全面考察面试者的基本功,想要拿到一个好offer,这是一个迈不过的坎,接下来我们用最通俗易懂的语言带着大家揭开HashMap的神秘面纱。

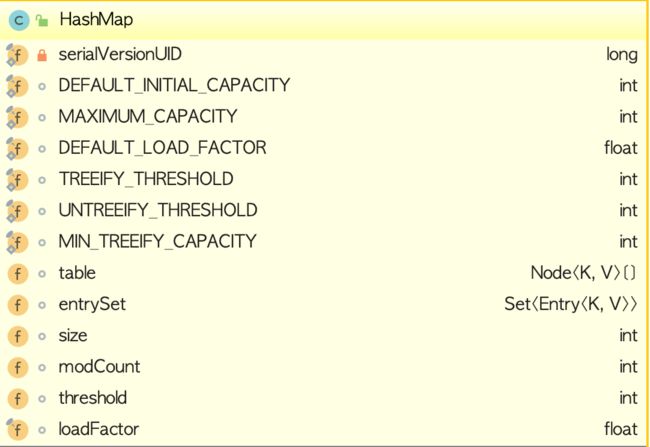

数据结构

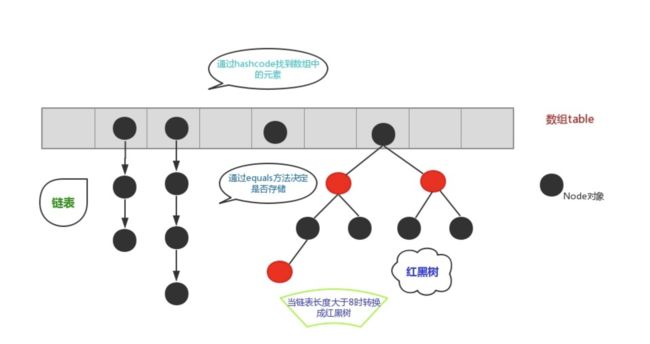

HashMap的数据结构为 : 数组+(链表或红黑树)。

/**

* The table, initialized on first use, and resized as

* necessary. When allocated, length is always a power of two.

* (We also tolerate length zero in some operations to allow

* bootstrapping mechanics that are currently not needed.)

*/

transient Node[] table;

HashMap的数据模型:

public class HashMap extends AbstractMap implements Map, Cloneable, Serializable {

static class Node implements Map.Entry {

final int hash;

final K key;

V value;

Node next;

}

...

/**

* Entry for Tree bins. Extends LinkedHashMap.Entry (which in turn

* extends Node) so can be used as extension of either regular or

* linked node.

*/

static final class TreeNode extends LinkedHashMap.Entry {

TreeNode parent; // red-black tree links

TreeNode left;

TreeNode right;

TreeNode prev; // needed to unlink next upon deletion

boolean red;

...

}

}

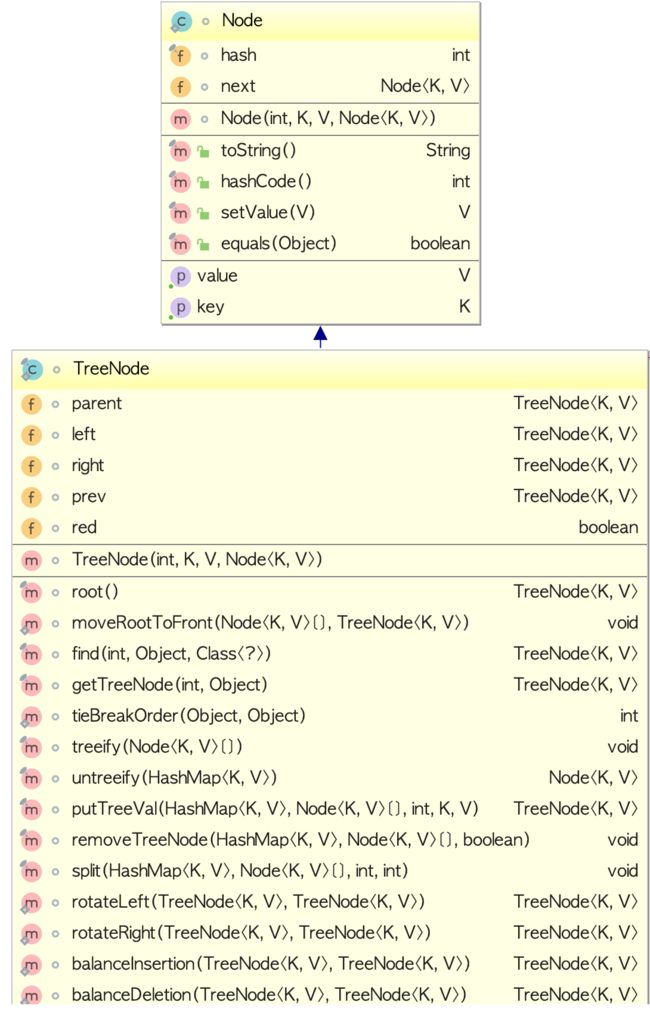

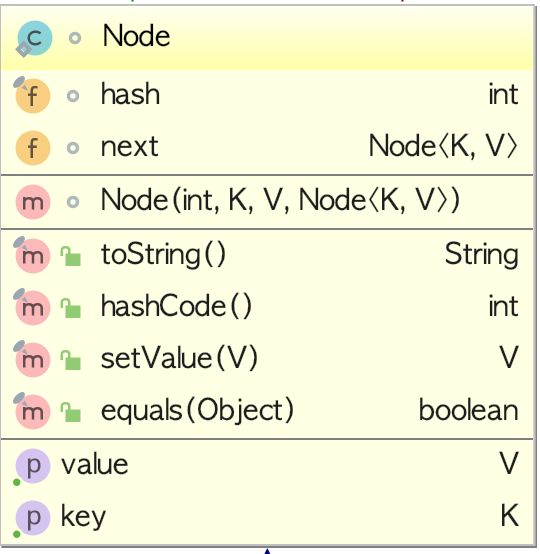

Node的模型:

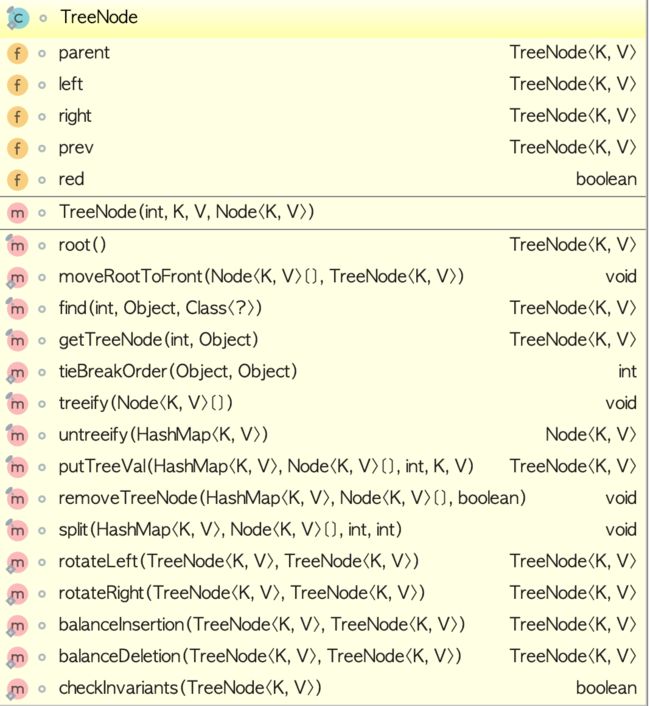

TreeNode的模型:

算法

链表转红黑树

红黑树插入节点、删除节点、查询节点算法

红黑树的五个性质

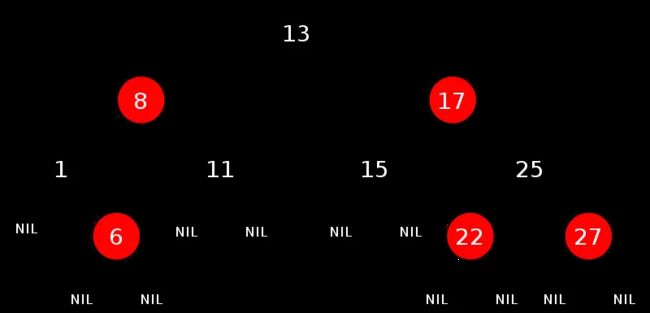

红黑树,一种二叉查找树,但在每个结点上增加一个存储位表示结点的颜色,可以是Red或Black。

通过对任何一条从根到叶子的路径上各个结点着色方式的限制,红黑树确保没有一条路径会比其他路径长出俩倍,因而是接近平衡的。

红黑树,作为一棵二叉查找树,满足二叉查找树的一般性质。下面,来了解下 二叉查找树的一般性质。

二叉查找树,也称有序二叉树(ordered binary tree),或已排序二叉树(sorted binary tree),是指一棵空树或者具有下列性质的二叉树:

- 若任意节点的左子树不空,则左子树上所有结点的值均小于它的根结点的值;

- 若任意节点的右子树不空,则右子树上所有结点的值均大于它的根结点的值;

- 任意节点的左、右子树也分别为二叉查找树。

- 没有键值相等的节点(no duplicate nodes)。

因为一棵由n个结点随机构造的二叉查找树的高度为lgn,所以顺理成章,二叉查找树的一般操作的执行时间为O(lgn)。但二叉查找树若退化成了一棵具有n个结点的线性链后,则这些操作最坏情况运行时间为O(n)。

红黑树虽然本质上是一棵二叉查找树,但它在二叉查找树的基础上增加了着色和相关的性质使得红黑树相对平衡,从而保证了红黑树的查找、插入、删除的时间复杂度最坏为O(log n)。

但它是如何保证一棵n个结点的红黑树的高度始终保持在logn的呢?

这就引出了红黑树的5个性质:

1.每个结点要么是红的要么是黑的。

2.根结点是黑的。

3.每个叶结点(叶结点即指树尾端NIL指针或NULL结点)都是黑的。

4.如果一个结点是红的,那么它的两个儿子都是黑的。

5.对于任意结点而言,其到叶结点树尾端NIL指针的每条路径都包含相同数目的黑结点。

正是红黑树的这5条性质,使一棵n个结点的红黑树始终保持了logn的高度,从而也就解释了上面所说的:

红黑树的查找、插入、删除的时间复杂度最坏为O(log n)

这一结论成立的原因。

这个源码有点复杂,后面再分析。

为什么节点数大于8的时候转红黑树?

/*

* Implementation notes.

*

* This map usually acts as a binned (bucketed) hash table, but

* when bins get too large, they are transformed into bins of

* TreeNodes, each structured similarly to those in

* java.util.TreeMap. Most methods try to use normal bins, but

* relay to TreeNode methods when applicable (simply by checking

* instanceof a node). Bins of TreeNodes may be traversed and

* used like any others, but additionally support faster lookup

* when overpopulated. However, since the vast majority of bins in

* normal use are not overpopulated, checking for existence of

* tree bins may be delayed in the course of table methods.

*

* Tree bins (i.e., bins whose elements are all TreeNodes) are

* ordered primarily by hashCode, but in the case of ties, if two

* elements are of the same "class C implements Comparable",

* type then their compareTo method is used for ordering. (We

* conservatively check generic types via reflection to validate

* this -- see method comparableClassFor). The added complexity

* of tree bins is worthwhile in providing worst-case O(log n)

* operations when keys either have distinct hashes or are

* orderable, Thus, performance degrades gracefully under

* accidental or malicious usages in which hashCode() methods

* return values that are poorly distributed, as well as those in

* which many keys share a hashCode, so long as they are also

* Comparable. (If neither of these apply, we may waste about a

* factor of two in time and space compared to taking no

* precautions. But the only known cases stem from poor user

* programming practices that are already so slow that this makes

* little difference.)

*

* Because TreeNodes are about twice the size of regular nodes, we

* use them only when bins contain enough nodes to warrant use

* (see TREEIFY_THRESHOLD). And when they become too small (due to

* removal or resizing) they are converted back to plain bins. In

* usages with well-distributed user hashCodes, tree bins are

* rarely used. Ideally, under random hashCodes, the frequency of

* nodes in bins follows a Poisson distribution

* (http://en.wikipedia.org/wiki/Poisson_distribution) with a

* parameter of about 0.5 on average for the default resizing

* threshold of 0.75, although with a large variance because of

* resizing granularity. Ignoring variance, the expected

* occurrences of list size k are (exp(-0.5) * pow(0.5, k) /

* factorial(k)). The first values are:

*

* 0: 0.60653066

* 1: 0.30326533

* 2: 0.07581633

* 3: 0.01263606

* 4: 0.00157952

* 5: 0.00015795

* 6: 0.00001316

* 7: 0.00000094

* 8: 0.00000006

* more: less than 1 in ten million

*

* The root of a tree bin is normally its first node. However,

* sometimes (currently only upon Iterator.remove), the root might

* be elsewhere, but can be recovered following parent links

* (method TreeNode.root()).

*

* All applicable internal methods accept a hash code as an

* argument (as normally supplied from a public method), allowing

* them to call each other without recomputing user hashCodes.

* Most internal methods also accept a "tab" argument, that is

* normally the current table, but may be a new or old one when

* resizing or converting.

*

* When bin lists are treeified, split, or untreeified, we keep

* them in the same relative access/traversal order (i.e., field

* Node.next) to better preserve locality, and to slightly

* simplify handling of splits and traversals that invoke

* iterator.remove. When using comparators on insertion, to keep a

* total ordering (or as close as is required here) across

* rebalancings, we compare classes and identityHashCodes as

* tie-breakers.

*

* The use and transitions among plain vs tree modes is

* complicated by the existence of subclass LinkedHashMap. See

* below for hook methods defined to be invoked upon insertion,

* removal and access that allow LinkedHashMap internals to

* otherwise remain independent of these mechanics. (This also

* requires that a map instance be passed to some utility methods

* that may create new nodes.)

*

* The concurrent-programming-like SSA-based coding style helps

* avoid aliasing errors amid all of the twisty pointer operations.

*/

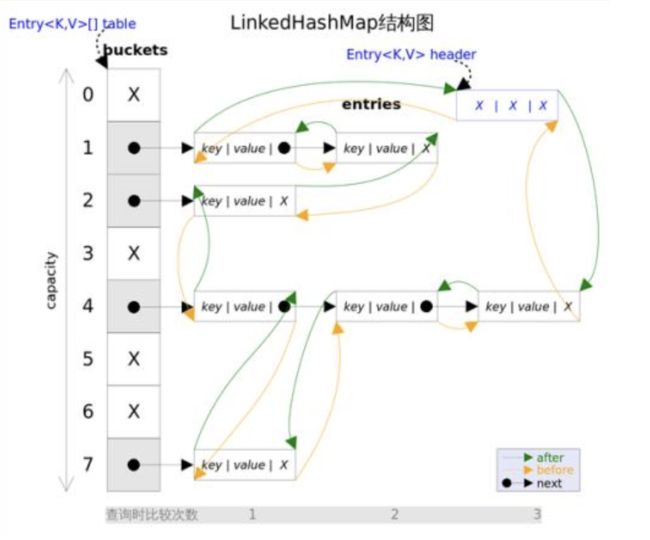

LinkedHashMap

public class LinkedHashMap

extends HashMap

implements Map

Hash table and linked list implementation of the Map interface, with predictable iteration order. This implementation differs from HashMap in that it maintains a doubly-linked list running through all of its entries. This linked list defines the iteration ordering, which is normally the order in which keys were inserted into the map (insertion-order). Note that insertion order is not affected if a key is re-inserted into the map. (A key k is reinserted into a map m if m.put(k, v) is invoked when m.containsKey(k) would return true immediately prior to the invocation.)

This implementation spares its clients from the unspecified, generally chaotic ordering provided by HashMap (and Hashtable), without incurring the increased cost associated with TreeMap. It can be used to produce a copy of a map that has the same order as the original, regardless of the original map's implementation:

void foo(Map m) {

Map copy = new LinkedHashMap(m);

...

}

This technique is particularly useful if a module takes a map on input, copies it, and later returns results whose order is determined by that of the copy. (Clients generally appreciate having things returned in the same order they were presented.)

参考资料

https://www.bilibili.com/read/cv369222?from=category_34

Kotlin开发者社区

专注分享 Java、 Kotlin、Spring/Spring Boot、MySQL、redis、neo4j、NoSQL、Android、JavaScript、React、Node、函数式编程、编程思想、"高可用,高性能,高实时"大型分布式系统架构设计主题。