densepose标注/COCO-DensePose Dataset

densepose的标注问题,一直困扰着我,在这里认真研读论文相关部分,以求得线索。

2. COCO-DensePose Dataset

Gathering rich, high-quality training sets has been a catalyst for progress in the classification [38], detection and segmentation [8, 26] tasks. There currently exists no manually collected ground truth for dense human pose estimation for real images. The works of [22] and [43] can be used as surrogates, but as we show in Sec. 4 provide worse supervision.

收集丰富,高质量的训练集已成为分类[38],检测和分割[8,26]任务取得进展的催化剂。 当前不存在用于实际图像的密集人体姿势估计的手动收集的ground truth。 [22]和[43]的成果可以用作替代品,但正如我们在第四部分展示的,它提供较差的监督。

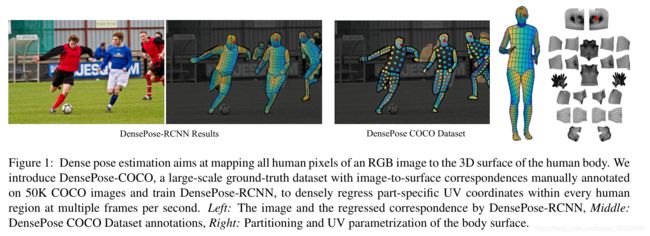

In this Section we introduce our COCO-DensePose dataset, alongside with evaluation measures that allow us to quantify progress in the task in Sec. 4. We have gathered annotations for 50K humans, collecting more than 5 million manually annotated correspondences.

在本节中,我们介绍了COCO-DensePose数据集,以及评估方法,使我们在第四部分能够量化回归。 我们收集了5万个人的标注,收集了超过500万个手动标注的对应关系。

We start with a presentation of our annotation pipeline, since this required several design choices that may be more generally useful for 3D annotation. We then turn to an analysis of the accuracy of the gathered ground-truth, alongside with the resulting performance measures used to assess the different methods.

我们从标注流程的表示开始,因为这需要一些对3D标注更通用的设计选择。 然后,我们转向对收集的ground-trut 的精确性的分析,以及用于评估不同方法所产生的性能指标。

2.1. Annotation System

In this work, we involve human annotators to establish dense correspondences from 2D images to surface-based representations of the human body. If done naively, this would require ‘hunting vertices’ for every 2D image point, by manipulating a surface through rotations - which can be frustratingly inefficient. Instead, we construct an annotation pipeline through which we can efficiently gather anno tations for image-to-surface correspondence.

在这项工作中,我们引入人类标注器,建立从2D图像到基于表面的人体表示的密集对应关系。 如果天真地做,这将需要通过旋转一个表面操作来为每个2D图像点提供“狩猎顶点”,这可能会令人沮丧地效率低下。 取而代之的是,我们构造了一条标注流程,通过该流程我们可以有效地收集图像到表面的对应关系的标注。

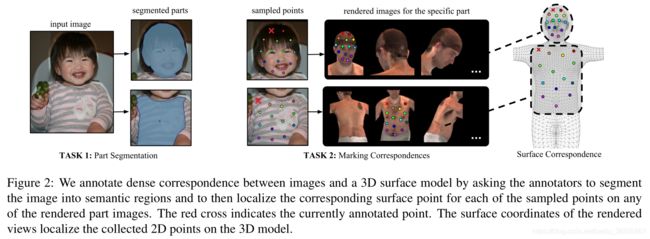

As shown in Fig. 2, in the first stage we ask annotators to delineate regions corresponding to visible, semantically defined body parts. These include Head, Torso, Lower/Upper Arms, Lower/Upper Legs, Hands and Feet. In order to use simplify the UV parametrization we design the parts to be isomorphic to a plane, partitioning the limbs and torso into lower-upper and frontal-back parts.

如图2所示,在第一阶段,我们要求标注器描绘的语义定义身体部位区域是可见的。 这些包括头,躯干,下/上臂,下/大腿,手和脚。 为了简化UV参数化,我们将身体部位设计与一个平面同构,将肢体和躯干分为上下、前后部分。

For head, hands and feet, we use the manually obtained UV fields provided in the SMPL model [27]. For the rest of the parts we obtain the unwrapping via multi dimensional scaling applied to pairwise geodesic distances.The UV fields for the resulting 24 parts are visualized in Fig. 1 (right).

对于头,手和脚,我们手动获得的UV区域,它由SMPL模型[27]提供的。 对于其余的身体部位,我们获得展开,这个展开通过应用于成对测地距离的多维比例缩放获得。图1(右)显示了生成的24个身体部位的UV区域。

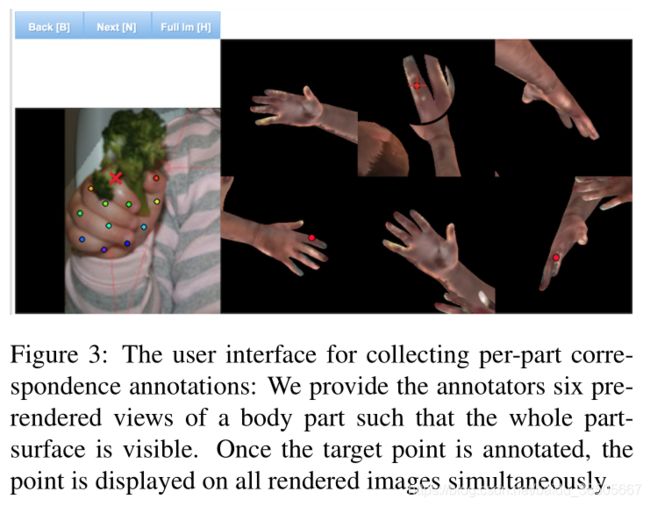

We instruct the annotators to estimate the body part behind the clothes, so that for instance wearing a large skirt would not complicate the subsequent annotation of correspondences. In the second stage we sample every part region with a set of roughly equidistant points obtained via k-means and request the annotators to bring these points in correspondence with the surface. The number of sampled points varies based on the size of the part and the maximum number of sampled points per part is 14. In order to simplify this task we ‘unfold’ the part surface by providing six pre-rendered views of the same body part and allow the user to place landmarks on any of them Fig. 3. This allows the annotator to choose the most convenient point of view by selecting one among six options instead of manually rotating the surface.

我们指示标注器估算衣服后面的身体部位,以便不会使随后的对应标注复杂化,例如穿着大裙子。 在第二阶段中,我们通过k均值获得一组大致等距的点来对每个身体部位进行采样,并要求标注器将这些点与表面相对应。 采样点的数量根据身体部位的大小和采样点最大数量而有所不同,每个部位的采样点数最大为14。为简化任务,我们通过提供六个相同人体部位的预渲染视图来“呈现”身体部位表面,并允许用户在其中任何一个上放置landmarks(图3)。 允许标注器通过从六个视图中选择一个来选择最方便的视角,而不是手动旋转曲面。

As the user indicates a point on any of the rendered part views, its surface coordinates are used to simultaneously show its position on the remaining views – this gives a global overview of the correspondence. The image points are presented to the annotator in a horizontal/vertical succession, which makes it easier to deliver geometrically consistent annotations by avoiding self-crossings of the surface.This two-stage annotation process has allowed us to very efficiently gather highly accurate correspondences. If we quantify the complexity of the annotation task in terms of the time it takes to complete it, we have seen that the part segmentation and correspondence annotation tasks take approximately the same time, which is surprising given the more challenging nature of the latter task. Visualizations of the collected annotations are provided in Fig. 4, where the partitioning of the surface and U, V coordinates are shown in Fig. 1.

当用户在任何渲染的身体部位视图上标记一个点时,其表面坐标同时显示其在其余视图上(这将提供对应关系的全局概览)。 图像点以水平/垂直连续的方式显示给标注器,从而避免了表面的自相交,从而更易于传递几何上一致的标注。此两阶段注释过程使我们能够非常有效地收集高度准确的对应关系。 如果我们根据完成标注任务的时间来量化标注任务的复杂性,就会发现身体部位分割和对应关系标注任务花费的时间大约是相同的,考虑到对应关系标注任务更具挑战性,这是令人惊讶的。 图4提供了所收集标注的可视化,其中图1显示了表面和U,V坐标的划分。

可以发现annotator标注器是个关键工具。