Code from 刘二大人's "PyTorch Deep Learning Practice" (《PyTorch深度学习实践》), Part 1

Contents

- Code from 刘二大人's "PyTorch Deep Learning Practice", Part 1

- Preface

- P2 Linear Model

- P3 Gradient Descent Algorithm

- P4 Backpropagation

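Preface

I have the theoretical background, but my programming is poor, so I went looking for PyTorch videos on Bilibili. The one I happened to click on, the complete "PyTorch Deep Learning Practice" (《PyTorch深度学习实践》) series, is explained very well, so I want to keep a record of the code the instructor uses as examples. (A few of the comments are notes I wrote while watching and are for reference only.)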

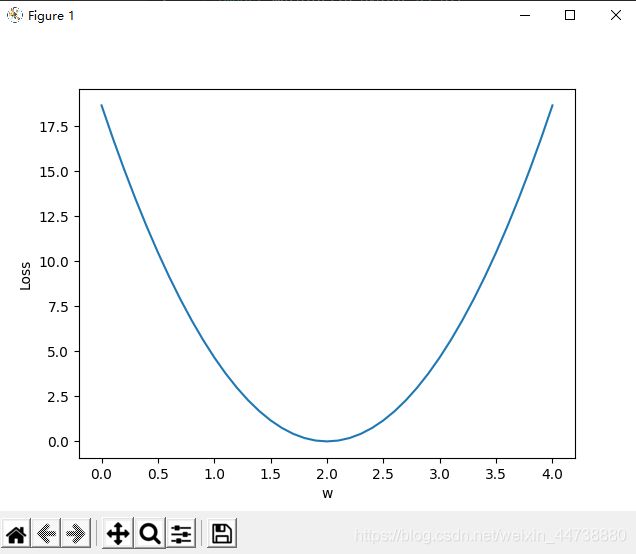

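P2 Linear Model

Two problems I ran into as a complete beginner:

(1) When importing matplotlib, I forgot the trailing `.pyplot`.

(2) I forgot to remove the indentation from the final statement that displays the plot (Python indentation really does need extra care).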
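Problem (2) is easy to reproduce without any plotting library. Below is a minimal sketch, assuming a hypothetical `show` function that stands in for `plt.show()` and simply records each call. The two functions differ only in the indentation of one line.

```python
def show(calls):
    """Hypothetical stand-in for plt.show(): records that it was called."""
    calls.append("show")

def show_inside_loop(n_steps):
    calls = []
    for _ in range(n_steps):
        pass  # per-step work would go here
        show(calls)  # indented: part of the loop body, runs on every step
    return calls

def show_after_loop(n_steps):
    calls = []
    for _ in range(n_steps):
        pass  # per-step work would go here
    show(calls)  # not indented: runs once, after the loop has finished
    return calls

print(len(show_inside_loop(3)), len(show_after_loop(3)))  # 3 1
```

With the real `plt.show()`, the indented version would try to display a plot on every pass through the loop instead of once at the end.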

```python
import numpy as np
import matplotlib.pyplot as plt

# Dataset
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Model: y_pred = x * w (w is the global variable set by the loop below)
def forward(x):
    return x * w

# Loss function: squared error for a single sample
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)

# Lists holding each weight and the MSE it produces
w_list = []
mse_list = []

# Sweep w from 0.0 to 4.0 in steps of 0.1
for w in np.arange(0.0, 4.1, 0.1):
    print("w=", w)
    l_sum = 0
    # zip pairs each x_val with its matching y_val
    for x_val, y_val in zip(x_data, y_data):
        y_pred_val = forward(x_val)
        loss_val = loss(x_val, y_val)
        l_sum += loss_val
        print('\t', x_val, y_val, y_pred_val, loss_val)
    print("MSE=", l_sum / len(x_data))
    w_list.append(w)
    mse_list.append(l_sum / len(x_data))

# Not indented: plot once, after the sweep has finished
plt.plot(w_list, mse_list)
plt.ylabel('Loss')
plt.xlabel('w')
plt.show()
```

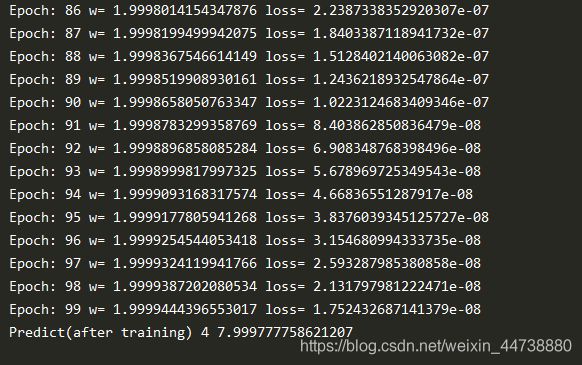

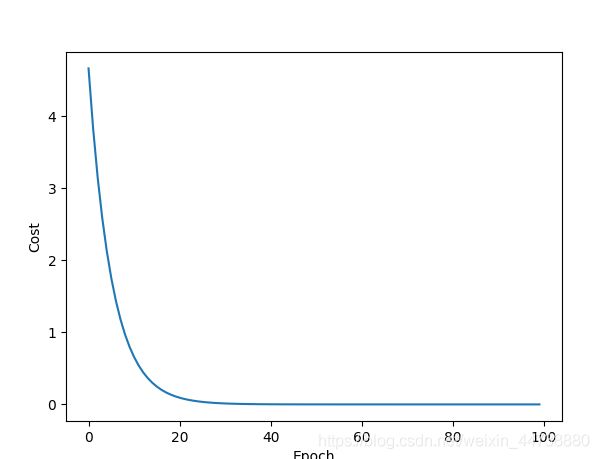
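The sweep above only plots the loss curve. As a small follow-up, here is a sketch of how the best weight could be read off the same grid without a plot. It is my own variation, not code from the video: `mse` and `best_weight` are names I made up, and `w` is passed in as an argument instead of being a global.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def mse(w, xs, ys):
    """Mean squared error of the model y_pred = x * w over the dataset."""
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def best_weight(xs, ys, w_min=0.0, w_max=4.0, step=0.1):
    """Return the (w, mse) pair with the lowest MSE on an evenly spaced grid."""
    n_steps = round((w_max - w_min) / step)
    candidates = [w_min + i * step for i in range(n_steps + 1)]
    return min(((w, mse(w, xs, ys)) for w in candidates), key=lambda pair: pair[1])

w_best, mse_best = best_weight(x_data, y_data)
print("best w =", w_best, "MSE =", mse_best)
```

On this dataset the minimum sits at w close to 2, which matches the bottom of the curve in the plot. Passing `w` explicitly also avoids depending on the loop variable leaking into `forward`.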

P3 Gradient Descent Algorithm

The code is as follows:

```python
import numpy as np
import matplotlib.pyplot as plt

# Dataset
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0  # initial guess for the weight

# Model
def forward(x):
    return x * w

# Mean squared error (MSE) over the whole dataset
def cost(xs, ys):
    total = 0
    for x, y in zip(xs, ys):
        y_pred = forward(x)
        total += (y_pred - y) ** 2
    return total / len(xs)  # divide by the number of samples (mean)

# Gradient of the MSE with respect to w
def gradient(xs, ys):
    grad = 0
    for x, y in zip(xs, ys):
        grad += 2 * x * (x * w - y)
    return grad / len(xs)

print('Predict (before training)', 4, forward(4))

epoch_list = []
cost_val_list = []
for epoch in range(100):
    cost_val = cost(x_data, y_data)
    grad_val = gradient(x_data, y_data)
    w -= 0.01 * grad_val  # update step with learning rate 0.01
    print('Epoch:', epoch, 'w=', w, 'loss=', cost_val)
    epoch_list.append(epoch)
    cost_val_list.append(cost_val)

print('Predict (after training)', 4, forward(4))

plt.plot(epoch_list, cost_val_list)
plt.ylabel('Cost')
plt.xlabel('Epoch')
plt.show()
```

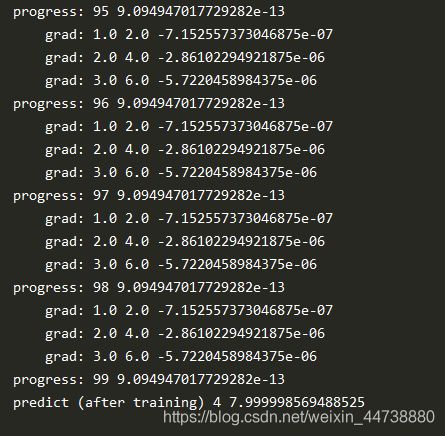
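The `gradient` function uses the derivative of the MSE with respect to `w`, that is, $\frac{\partial\,\text{cost}}{\partial w} = \frac{1}{N}\sum_{n} 2\,x_n\,(x_n w - y_n)$. One way to convince yourself the formula is right is to compare it with a finite-difference estimate. The sketch below is my own check, not part of the video. The helper `numeric_gradient` and the step size `eps` are assumptions chosen for illustration, and `w` is passed as an argument instead of being a global.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def cost(w, xs, ys):
    """MSE of the model y_pred = x * w."""
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w, xs, ys):
    """Analytic gradient of the MSE with respect to w."""
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)

def numeric_gradient(w, xs, ys, eps=1e-6):
    """Central-difference estimate of d(cost)/dw (hypothetical helper)."""
    return (cost(w + eps, xs, ys) - cost(w - eps, xs, ys)) / (2 * eps)

w = 1.0
analytic = gradient(w, x_data, y_data)
numeric = numeric_gradient(w, x_data, y_data)
print("analytic:", analytic, "numeric:", numeric)
```

At `w = 1.0` the analytic value is -28/3 (about -9.33), and the numeric estimate should agree to several decimal places. The negative sign says the cost falls as `w` increases, which is why the update `w -= 0.01 * grad_val` moves `w` upward toward 2.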

P4 Backpropagation

The code is as follows:

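```python
import torch
import matplotlib.pyplot as plt

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.Tensor([1.0])
w.requires_grad = True  # gradients need to be computed for w

def forward(x):
    return x * w  # the result is a tensor that is part of the computational graph

def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2

print("predict (before training)", 4, forward(4).item())

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)  # forward pass: builds the computational graph
        l.backward()    # backward pass: stores dl/dw in w.grad
        print('\tgrad:', x, y, w.grad.item())
        # Update through .data so the step itself is not recorded in the graph
        w.data = w.data - 0.01 * w.grad.data
        # backward() accumulates gradients, so reset them after each update
        w.grad.data.zero_()
    print("progress:", epoch, l.item())

print("predict (after training)", 4, forward(4).item())
```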
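To make it clearer what `l.backward()` computes for this tiny model, here is a hand-written sketch of the same forward and backward pass in plain Python. It only illustrates the chain rule on the graph `x * w -> minus y -> square`. It is not how PyTorch implements autograd, and the function name `forward_backward` is my own.

```python
def forward_backward(x, y, w):
    """Return (loss, dloss_dw) for loss = (x * w - y) ** 2 via the chain rule."""
    # Forward pass: keep the intermediate values, as a computational graph would
    y_pred = x * w
    residual = y_pred - y
    loss = residual ** 2
    # Backward pass: multiply the local derivatives from the loss back to w
    dloss_dresidual = 2 * residual
    dresidual_dypred = 1.0
    dypred_dw = x
    dloss_dw = dloss_dresidual * dresidual_dypred * dypred_dw
    return loss, dloss_dw

loss_val, grad_val = forward_backward(1.0, 2.0, 1.0)
print(loss_val, grad_val)  # 1.0 -2.0

# Same per-sample update loop as the PyTorch version, with the manual gradient
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = 1.0
for epoch in range(100):
    for x, y in zip(x_data, y_data):
        _, grad = forward_backward(x, y, w)
        w = w - 0.01 * grad
print("w after training:", w)
```

The first gradient, -2.0, is the value the PyTorch loop prints for the first sample `(1.0, 2.0)` when `w` starts at 1.0, and the trained `w` ends up very close to 2.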